longhorn: [BUG] Nil pointer dereference error on restoring the system backup

Describe the bug (🐛 if you encounter this issue)

Restoring a system backup that was taken in v1.4.0-rc1 gets stuck in the Pending state with a nil pointer dereference error.

To Reproduce

Steps to reproduce the behavior:

- Install Longhorn v1.4.0-rc1

- Create some volumes.

- Take the volume backup and system backup.

- Create a cluster with Longhorn v1.4.0-rc2.

- Restore the system backup.

- The restore gets stuck in the `Pending` state.
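
To confirm where the restore is stuck, the state can be read from the SystemRestore custom resource. The following is a minimal Python sketch, not an official tool. It assumes `kubectl` is on the PATH, that Longhorn runs in the `longhorn-system` namespace, that the resource is exposed as `systemrestores.longhorn.io`, and that the state is reported under `status.state`. The helper names are made up for illustration.

```python
import json
import subprocess


def restore_states(doc):
    """Map each SystemRestore name to its reported state.

    `doc` is the parsed JSON of a `kubectl get ... -o json` list.
    Reading the state from `status.state` is an assumption about the CRD layout.
    """
    states = {}
    for item in doc.get("items", []):
        name = item.get("metadata", {}).get("name", "<unknown>")
        # A freshly created resource may not have a status yet.
        status = item.get("status") or {}
        states[name] = status.get("state") or "<no state reported>"
    return states


def fetch_restores(namespace="longhorn-system"):
    """Run kubectl and return the parsed list (namespace and resource name are assumptions)."""
    out = subprocess.run(
        ["kubectl", "-n", namespace, "get", "systemrestores.longhorn.io", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


if __name__ == "__main__":
    for name, state in restore_states(fetch_restores()).items():
        print(f"{name}: {state}")
```

Keeping the parsing in `restore_states` separate from the `kubectl` call means the same function can also be pointed at a saved JSON dump, for example one extracted from a support bundle.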

Expected behavior

Restore should complete.

Log or Support bundle

supportbundle_06317708-75d1-48d5-8092-1de9ee200fe7_2022-12-22T20-25-53Z.zip

Environment

- Longhorn version: v1.4.0-rc2

- Installation method (e.g. Rancher Catalog App/Helm/Kubectl): kubectl

Additional context

Creating a system backup in v1.4.0-rc2 and restoring it in the same version works without any problem.

About this issue

- State: closed

- Created 2 years ago

- Reactions: 1

- Comments: 19 (19 by maintainers)

Verified passed on v1.4.x-head (longhorn-manager 9d57b4c). Different kinds of volumes (created from Longhorn UI, dynamic provisioning, RWO, RWX, volume with backing image, encrypted volume) can all be backed up and restored to the new cluster without problems.

This is a good test to cover different types of volume creation. cc @longhorn/qa

Tested on v1.4.x-head (longhorn-manager 9d57b4c). When trying to restore a volume with a backing image, data is missing from the restored volume:

In cluster A:

(1) Create a volume with backing image

(2) Create a pod to use this volume:

(3) Write some data to the volume:

(4) Create system backup

In cluster B:

(5) Manually create backing image

(6) Restore the system backup

(7) The volume with backing image can be restored and is in detached state

(8) Create a pod to use this restored volume like in step (2)

(9) Check the volume data by `kubectl exec -it test-pod-1 -- /bin/sh`, there is no `test-1` file created in step (3)

`test-1` file can be found in the restored volume and the content is intact.

Tested on v1.4.x-head, the following test scenario results in abnormal behavior:

(1) In cluster A, create volume “test-1” and create pv/pvc in the default namespace, all from Longhorn UI

(2) Create a pod using this volume:

(3) Create backup for this volume

(4) Create system backup

(5) In cluster B, restore this system backup => Volume test-1 can be found restored on Longhorn UI, but it’s in faulted state, and the job log keeps printing the following messages forever:

(6) delete the restore job and the faulted volume, and retry the restore process again, somehow the restore can be completed without problems. (7) In cluster B, create a pod like step 2 to use the restored volume

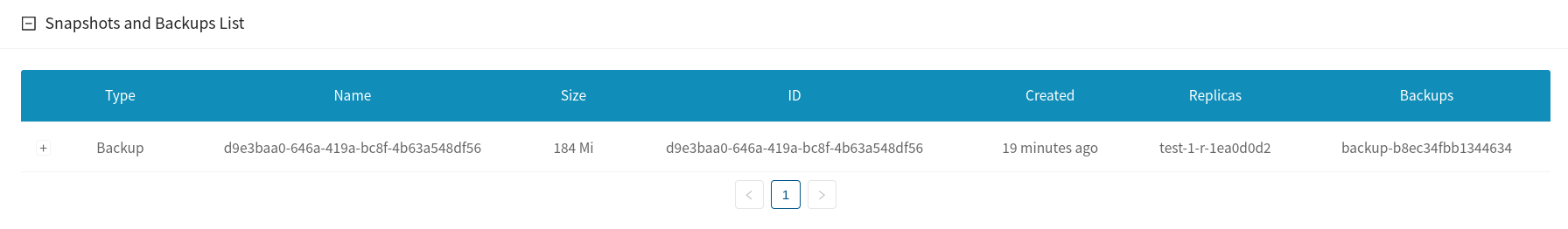

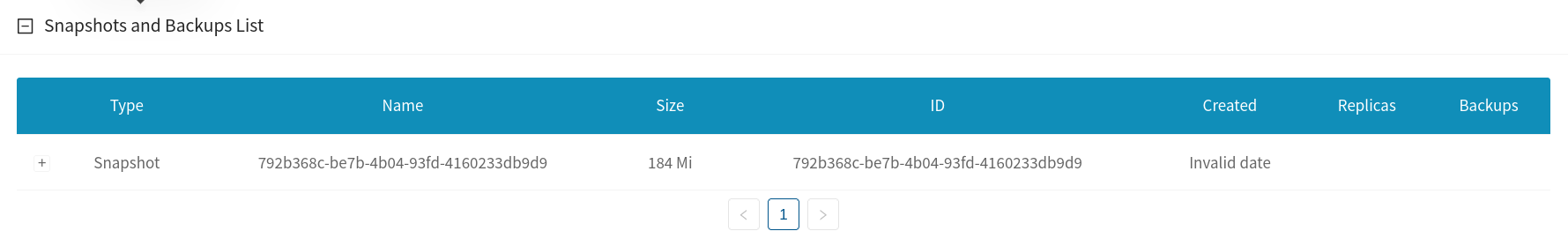

test-1=> After the volume is attached and becomes healthy, there is a abnormal snapshot appearing in the snapshots list:It’s expected because volume backup has to be created to restore the data in another cluster.

From @c3y1huang: If the volume in the system backup doesn’t have a volume backup, the data will not get restored in cluster B. Instead, only the volume itself is restored.

Yes, this also happens in v1.3.x-head.

Yes

The snapshot is without the `creationTime`. This doesn’t look related to the system backup/restore and should be reproducible without the system backup/restore.

cluster-A:

cluster-B:

New issue created: https://github.com/longhorn/longhorn/issues/5153

Logs from cluster B

The replica failed at executing the engine binary before the engine image is fully deployed on all ready nodes.

For the above abnormal behavior, there is no need to create the volume/pv/pvc manually from Longhorn UI; it can be reproduced with dynamic provisioning using the following steps:

In cluster A:

(1) kubectl apply -f https://raw.githubusercontent.com/longhorn/longhorn/master/examples/statefulset.yaml

(2) Create a backup for a volume from Longhorn UI

(3) Create system backup

In cluster B:

(4) Restore this system backup => the volume that has a backup ends up in faulted state

(5) Delete the restored volume and the restore job, then retry the restore => volumes can be restored without error, and the restored volumes remain in detached state

(6) kubectl apply -f https://raw.githubusercontent.com/longhorn/longhorn/master/examples/statefulset.yaml => volume states transition from detached to healthy, and the volume that has a backup gets a snapshot with an invalid date

@c3y1huang Am I missing anything, or are there any other restrictions or prerequisites for restore?

I don’t think it will. Because the system restore will only leverage volume backup restore, the behavior will be the same as restoring volume backup. (the restored volume will have no snapshots)

Yes, see restore path.

The test is good, but it does not seem valid enough because RC1 is still a transient version. Does RC2 work well when restoring from a backup created by RC2? I think yes, because that’s the best criterion.

However, this means we need to pay attention to the backward compatibility of system backup and restore for system backups created by a previous version.

@khushboo-rancher Let’s add this test case to the regression testing if it’s not there yet.
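
One way to enumerate the backward-compatibility cases discussed above is to pair every version that can create a system backup with every same-or-newer version that might restore it. The sketch below is only an illustration in Python, not part of the Longhorn test suite. The function name `restore_matrix` is hypothetical, and the caller is assumed to pass the versions already ordered from oldest to newest.

```python
from itertools import combinations_with_replacement


def restore_matrix(versions):
    """Return (backup_version, restore_version) pairs to test.

    `versions` must be ordered oldest to newest (an assumption of this sketch).
    Each backup version is paired with itself and with every newer version,
    so restoring into an older release is deliberately left out.
    """
    return list(combinations_with_replacement(versions, 2))


if __name__ == "__main__":
    for backup_version, restore_version in restore_matrix(["v1.4.0-rc1", "v1.4.0-rc2"]):
        print(f"backup from {backup_version} -> restore on {restore_version}")
```

For the two versions in this report, the matrix contains the pair that got stuck (backup from v1.4.0-rc1, restore on v1.4.0-rc2) as well as the same-version pair that was reported to work (v1.4.0-rc2 on both sides).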