harvester: [BUG] vm killed by Memory cgroup out of memory OOM

Describe the bug

A VM with heavy memory activity is killed by the OOM killer on the Harvester (SUSE) host.

- VM setup: RAM = 48G.

- 100G of RAM was available before the VM started.

- RAM cost of the program running inside the VM: normal = 8G, peak = 16G. I run 2 of them.

- Suddenly the VM pod is killed by the host-side (SUSE/k8s) OOM killer.

Jun 23 10:10:34 hv kernel: CPU 2/KVM invoked oom-killer: gfp_mask=0xcc0(GFP_KERNEL), order=0, oom_score_adj=872

Jun 23 10:10:34 hv kernel: memory: usage 50595840kB, limit 50595840kB, failcnt 15

Jun 23 10:10:34 hv kernel: memory+swap: usage 0kB, limit 9007199254740988kB, failcnt 0

Jun 23 10:10:34 hv kernel: kmem: usage 377108kB, limit 9007199254740988kB, failcnt 0

Jun 23 10:10:34 hv kernel: Memory cgroup out of memory: Killed process 10381 (qemu-system-x86) total-vm:51318200kB, anon-rss:50107964kB, file-rss:22164kB, shmem-rss:4kB
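The numbers in this excerpt can be cross-checked with a few lines of Python. This is only a sketch: it assumes the VM's "48G" means 48 GiB, and reading the difference between the cgroup limit and the VM RAM as "headroom for everything that is not guest RAM" is an interpretation, not something the log states.

```python
import re

# Two lines copied from the kernel log excerpt above.
LOG = (
    "memory: usage 50595840kB, limit 50595840kB, failcnt 15\n"
    "Memory cgroup out of memory: Killed process 10381 (qemu-system-x86) "
    "total-vm:51318200kB, anon-rss:50107964kB, file-rss:22164kB, shmem-rss:4kB\n"
)

# Collect every "<name> <n>kB" or "<name>:<n>kB" pair into a dict (values in kB).
fields = {k: int(v) for k, v in re.findall(r"([\w-]+)[: ]\s*(\d+)kB", LOG)}

vm_ram_kb = 48 * 1024 * 1024                 # assumption: "48G" is 48 GiB
headroom_kb = fields["limit"] - vm_ram_kb    # cgroup limit beyond the VM RAM size
# Memory charged to the cgroup that is not qemu's anonymous RSS.
non_qemu_anon_kb = fields["usage"] - fields["anon-rss"]

print(f"cgroup limit        : {fields['limit'] / 1024:.1f} MiB")
print(f"limit minus VM RAM  : {headroom_kb / 1024:.1f} MiB")
print(f"usage minus qemu RSS: {non_qemu_anon_kb / 1024:.1f} MiB")
```

Under these assumptions the limit sits only a few hundred MiB above the configured VM RAM, so qemu's own overhead and the other processes in the pod have very little room once the guest has touched most of its memory.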

Before the VM was killed, top inside the VM showed the following (48G is enough for the programs):

top - xxxxxx up 36 min, 1 user, load average: 3.99, 1.89, 0.78

Tasks: 121 total, 1 running, 119 sleeping, 0 stopped, 1 zombie

%Cpu(s): 79.1 us, 6.9 sy, 0.0 ni, 13.5 id, 0.2 wa, 0.0 hi, 0.3 si, 0.2 st

KiB Mem : 49191852 total, 3425924 free, 31568352 used, 14197576 buff/cache

KiB Swap: 0 total, 0 free, 0 used. 17183912 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1510 yqdev 20 0 21.5g 16.3g 2272 S 125.8 36.8 4:51.41 /app1

1601 yqdev 20 0 15.7g 9.8g 2276 S 188.1 20.8 3:19.65 /app2

1373 mysql 20 0 3824944 2.4g 8252 S 31.1 5.2 0:27.45 /usr/sbin/mysqld --daemonize --pid-file=/var/run/m+

876 root 20 0 574280 17420 6132 S 0.0 0.0 0:00.42 /usr/bin/python2 -Es /usr/sbin/tuned -l -P
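One possible reading of the `top` output above, sketched in Python. It rests on an assumption the issue does not confirm: that guest page cache (`buff/cache`) still occupies host memory inside the qemu process, because nothing (such as ballooning) hands those pages back to the host. Under that assumption the host-side footprint follows `total - free`, not the guest's `used` figure.

```python
# Values from the "KiB Mem" line of the guest's top output.
total_kib = 49191852
free_kib = 3425924
used_kib = 31568352
buff_cache_kib = 14197576

KIB_PER_GIB = 1024 * 1024

# Pages the guest has touched at some point: application memory plus page cache.
touched_kib = total_kib - free_kib

print(f"guest 'used'       : {used_kib / KIB_PER_GIB:.1f} GiB")
print(f"guest buff/cache   : {buff_cache_kib / KIB_PER_GIB:.1f} GiB")
print(f"touched (tot-free) : {touched_kib / KIB_PER_GIB:.1f} GiB")
```

If the assumption holds, the guest can look comfortable from the inside while the host-side cgroup is already close to its limit.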

- The program uses jemalloc and allocates and frees huge amounts of memory very quickly.

- The pod cgroup memory limit defaults to the VM's RAM setting. Should it be larger, or editable?

- Neither the VM nor the Harvester UI has any log or event about this crash, which is really horrible.

- Would reducing RAM overcommit from 150% to 100% help?

To Reproduce

Steps to reproduce the behavior:

- Create a VM.

- Enter the VM and start the program.

- The VM is killed by the OOM killer and restarts.

Expected behavior

- The OOM killer inside the guest OS (vm.os.OOM) should trigger before the Harvester/k8s cgroup OOM killer (harvester.k8s.cgroup.OOM).

- Harvester should log OOM/restart events somewhere.
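Until such events exist, the host kernel log is the only place the kill shows up. The sketch below shows one way to pull the relevant fields out of the kernel's `oom-kill:` summary line. The function name `find_memcg_oom_kills` is made up for illustration, the sample line is copied from the log in this report, and the pod UID pattern assumes a cgroupfs-style path of the form `/kubepods/.../pod<uid>` as seen here.

```python
import re

# Matches the kernel's one-line OOM summary ("oom-kill:constraint=...").
OOM_KILL_RE = re.compile(
    r"oom-kill:constraint=(?P<constraint>\w+),"
    r".*?oom_memcg=(?P<memcg>[^,]+),"
    r".*?task=(?P<task>[^,]+),pid=(?P<pid>\d+)"
)
# Pod UID at the end of a path such as /kubepods/burstable/pod<uid>.
POD_UID_RE = re.compile(r"/pod([0-9a-f-]+)$")


def find_memcg_oom_kills(lines):
    """Yield one dict per cgroup-constrained OOM kill found in kernel log lines."""
    for line in lines:
        m = OOM_KILL_RE.search(line)
        if not m or m["constraint"] != "CONSTRAINT_MEMCG":
            continue
        pod = POD_UID_RE.search(m["memcg"])
        yield {
            "task": m["task"],
            "pid": int(m["pid"]),
            "memcg": m["memcg"],
            "pod_uid": pod.group(1) if pod else None,
        }


sample = [
    "Jun 23 10:10:34 hv kernel: oom-kill:constraint=CONSTRAINT_MEMCG,"
    "nodemask=(null),"
    "cpuset=2ef09c9197e7889ad1b9f14c3eb4ab17998af7f288f28991b3a80898b47673f4,"
    "mems_allowed=0-1,"
    "oom_memcg=/kubepods/burstable/pod3ac4f411-6ac5-40ae-a999-9d937dce3b55,"
    "task_memcg=/kubepods/burstable/pod3ac4f411-6ac5-40ae-a999-9d937dce3b55/"
    "2ef09c9197e7889ad1b9f14c3eb4ab17998af7f288f28991b3a80898b47673f4,"
    "task=qemu-system-x86,pid=10381,uid=107"
]

kills = list(find_memcg_oom_kills(sample))
for kill in kills:
    print(kill)
```

The extracted pod UID can then be matched against the virt-launcher pod of the VM to tell which VM was killed.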

Support bundle

- Already sent by email.

Environment

- Harvester ISO version: v1.0.2

- Underlying Infrastructure: 20c / 256G / ssd

Additional context

SUSE system log:

Jun 23 10:10:34 hv kernel: CPU 2/KVM invoked oom-killer: gfp_mask=0xcc0(GFP_KERNEL), order=0, oom_score_adj=872

Jun 23 10:10:34 hv kernel: CPU: 3 PID: 10612 Comm: CPU 2/KVM Tainted: G I X 5.3.18-150300.59.63-default #1 SLE15-SP3

Jun 23 10:10:34 hv kernel: Hardware name: Dell Inc. PowerEdge R440/04JN2K, BIOS 2.13.3 12/17/2021

Jun 23 10:10:34 hv kernel: Call Trace:

Jun 23 10:10:34 hv kernel: dump_stack+0x66/0x8b

Jun 23 10:10:34 hv kernel: dump_header+0x4a/0x200

Jun 23 10:10:34 hv kernel: oom_kill_process+0xe8/0x130

Jun 23 10:10:34 hv kernel: out_of_memory+0x105/0x510

Jun 23 10:10:34 hv kernel: mem_cgroup_out_of_memory+0xb5/0xd0

Jun 23 10:10:34 hv kernel: try_charge+0x7c0/0x800

Jun 23 10:10:34 hv kernel: ? __alloc_pages_nodemask+0x15d/0x320

Jun 23 10:10:34 hv kernel: mem_cgroup_try_charge+0x70/0x190

Jun 23 10:10:34 hv kernel: mem_cgroup_try_charge_delay+0x1c/0x40

Jun 23 10:10:34 hv kernel: __handle_mm_fault+0x969/0x1260

Jun 23 10:10:34 hv kernel: handle_mm_fault+0xc4/0x200

Jun 23 10:10:34 hv kernel: __get_user_pages+0x1f7/0x770

Jun 23 10:10:34 hv kernel: get_user_pages_unlocked+0x146/0x200

Jun 23 10:10:34 hv kernel: __gfn_to_pfn_memslot+0x16c/0x4c0 [kvm]

Jun 23 10:10:34 hv kernel: try_async_pf+0x88/0x230 [kvm]

Jun 23 10:10:34 hv kernel: ? gfn_to_hva_memslot_prot+0x16/0x40 [kvm]

Jun 23 10:10:34 hv kernel: tdp_page_fault+0x143/0x2b0 [kvm]

Jun 23 10:10:34 hv kernel: kvm_mmu_page_fault+0x74/0x600 [kvm]

Jun 23 10:10:34 hv kernel: ? vmx_set_msr+0xed/0xcc0 [kvm_intel]

Jun 23 10:10:34 hv kernel: ? x86_virt_spec_ctrl+0x68/0xe0

Jun 23 10:10:34 hv kernel: vcpu_enter_guest+0x570/0x1560 [kvm]

Jun 23 10:10:34 hv kernel: ? finish_task_switch+0x181/0x2a0

Jun 23 10:10:34 hv kernel: ? finish_task_switch+0x181/0x2a0

Jun 23 10:10:34 hv kernel: ? __apic_accept_irq+0x275/0x320 [kvm]

Jun 23 10:10:34 hv kernel: ? kvm_arch_vcpu_ioctl_run+0x349/0x560 [kvm]

Jun 23 10:10:34 hv kernel: kvm_arch_vcpu_ioctl_run+0x349/0x560 [kvm]

Jun 23 10:10:34 hv kernel: kvm_vcpu_ioctl+0x243/0x5d0 [kvm]

Jun 23 10:10:34 hv kernel: ksys_ioctl+0x92/0xb0

Jun 23 10:10:34 hv kernel: __x64_sys_ioctl+0x16/0x20

Jun 23 10:10:34 hv kernel: do_syscall_64+0x5b/0x1e0

Jun 23 10:10:34 hv kernel: entry_SYSCALL_64_after_hwframe+0x44/0xa9

Jun 23 10:10:34 hv kernel: RIP: 0033:0x7f72285f4c47

Jun 23 10:10:34 hv kernel: Code: 90 90 90 48 8b 05 49 c2 2d 00 64 c7 00 26 00 00 00 48 c7 c0 ff ff ff ff c3 66 2e 0f 1f 84 00 00 00 00 00 b8 10 00 00 00 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d 19 c2 2d 00 f7 d8 64 89 01 48

Jun 23 10:10:34 hv kernel: RSP: 002b:00007f7221a2a7b8 EFLAGS: 00000246 ORIG_RAX: 0000000000000010

Jun 23 10:10:34 hv kernel: RAX: ffffffffffffffda RBX: 000000000000ae80 RCX: 00007f72285f4c47

Jun 23 10:10:34 hv kernel: RDX: 0000000000000000 RSI: 000000000000ae80 RDI: 0000000000000016

Jun 23 10:10:34 hv kernel: RBP: 0000000000000000 R08: 000055b352d1f6e0 R09: 0000000000000000

Jun 23 10:10:34 hv kernel: R10: 0000000000000001 R11: 0000000000000246 R12: 000055b353007df0

Jun 23 10:10:34 hv kernel: R13: 00007f7221228000 R14: 0000000000000000 R15: 000055b353007df0

Jun 23 10:10:34 hv kernel: memory: usage 50595840kB, limit 50595840kB, failcnt 15

Jun 23 10:10:34 hv kernel: memory+swap: usage 0kB, limit 9007199254740988kB, failcnt 0

Jun 23 10:10:34 hv kernel: kmem: usage 377108kB, limit 9007199254740988kB, failcnt 0

Jun 23 10:10:34 hv kernel: Memory cgroup stats for /kubepods/burstable/pod3ac4f411-6ac5-40ae-a999-9d937dce3b55:

Jun 23 10:10:34 hv kernel: anon 51423752192

file 0

kernel_stack 1622016

slab 20717568

sock 0

shmem 0

file_mapped 0

file_dirty 0

file_writeback 135168

anon_thp 51298435072

inactive_anon 0

active_anon 51423870976

inactive_file 0

active_file 0

unevictable 0

slab_reclaimable 4431872

slab_unreclaimable 16285696

pgfault 102036

pgmajfault 0

workingset_refault 0

workingset_activate 0

workingset_nodereclaim 0

pgrefill 0

pgscan 995

pgsteal 359

pgactivate 0

pgdeactivate 0

pglazyfree 0

pglazyfreed 0

thp_fault_alloc 24024

thp_collapse_alloc 0

Jun 23 10:10:34 hv kernel: Tasks state (memory values in pages):

Jun 23 10:10:34 hv kernel: [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name

Jun 23 10:10:34 hv kernel: [ 9539] 0 9539 243 1 28672 0 -998 pause

Jun 23 10:10:34 hv kernel: [ 9593] 0 9593 980484 20142 843776 0 872 virt-launcher

Jun 23 10:10:34 hv kernel: [ 9837] 0 9837 1055057 23493 925696 0 872 virt-launcher

Jun 23 10:10:34 hv kernel: [ 9859] 0 9859 35284 3786 307200 0 872 virtlogd

Jun 23 10:10:34 hv kernel: [ 9860] 0 9860 447268 11448 704512 0 872 libvirtd

Jun 23 10:10:34 hv kernel: [ 10381] 107 10381 12829550 12532533 101314560 0 872 qemu-system-x86

Jun 23 10:10:34 hv kernel: oom-kill:constraint=CONSTRAINT_MEMCG,nodemask=(null),cpuset=2ef09c9197e7889ad1b9f14c3eb4ab17998af7f288f28991b3a80898b47673f4,mems_allowed=0-1,oom_memcg=/kubepods/burstable/pod3ac4f411-6ac5-40ae-a999-9d937dce3b55,task_memcg=/kubepods/burstable/pod3ac4f411-6ac5-40ae-a999-9d937dce3b55/2ef09c9197e7889ad1b9f14c3eb4ab17998af7f288f28991b3a80898b47673f4,task=qemu-system-x86,pid=10381,uid=107

Jun 23 10:10:34 hv kernel: Memory cgroup out of memory: Killed process 10381 (qemu-system-x86) total-vm:51318200kB, anon-rss:50107964kB, file-rss:22164kB, shmem-rss:4kB

Jun 23 10:10:35 hv kernel: oom_reaper: reaped process 10381 (qemu-system-x86), now anon-rss:0kB, file-rss:36kB, shmem-rss:4kB
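The "Tasks state" table reports memory in pages, while the kill message reports kB. A short Python sketch, assuming 4 KiB pages (the usual x86-64 default, not stated in the log), converts the table and checks it against the kill message:

```python
PAGE_KB = 4  # assumption: 4 KiB pages

# (name, rss in pages) copied from the "Tasks state" table above.
tasks = [
    ("pause", 1),
    ("virt-launcher", 20142),
    ("virt-launcher", 23493),
    ("virtlogd", 3786),
    ("libvirtd", 11448),
    ("qemu-system-x86", 12532533),
]

qemu_kb = sum(pages for name, pages in tasks if name == "qemu-system-x86") * PAGE_KB
others_kb = sum(pages for name, pages in tasks if name != "qemu-system-x86") * PAGE_KB

# RSS breakdown from the "Killed process 10381" line (kB).
killed_rss_kb = 50107964 + 22164 + 4  # anon-rss + file-rss + shmem-rss

print(f"qemu rss from table : {qemu_kb} kB")
print(f"qemu rss from kill  : {killed_rss_kb} kB")
print(f"other processes rss : {others_kb} kB ({others_kb / 1024:.0f} MiB)")
```

The two qemu figures agree, which supports the 4 KiB page assumption. The RSS of the other processes includes file-backed pages, so it is an upper bound on what they add to the cgroup charge, not an exact figure.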

About this issue

- State: closed

- Created 2 years ago

- Comments: 16 (6 by maintainers)

The cgroup OOM kill has not happened for 3 weeks.

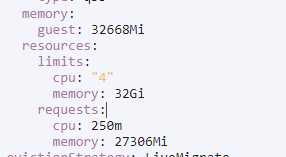

My setup for reserved memory now:

256M is a good number. The memory calculation code is here: https://github.com/harvester/harvester/blob/master/pkg/webhook/resources/virtualmachine/mutator.go#L171-L182

Different reserved-memory settings result in the following VMI configs (overcommit=120%):

- default: 100M

- forced to 0

- set to 256M

After increasing reserved memory from 100M (default) to 256M, the cgroup OOM kill has not been seen for 3 days. In load tests, both guest OS OOM and guest OS swapping were triggered.

But I don't know how much reserved memory is enough.
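To make the trade-off concrete, here is a rough Python sketch of how the three settings could translate into guest-visible memory. It is an assumption about the linked mutator code, which this thread links but does not quote: that the reserved amount is subtracted from the configured VM memory to get guest memory, and that the overcommit percentage only scales the pod memory request. The function names are hypothetical.

```python
def guest_memory_mib(limit_mib: int, reserved_mib: int = 100) -> int:
    """Guest-visible RAM, assuming reserved memory is subtracted from the limit."""
    guest = limit_mib - reserved_mib
    if guest <= 0:
        raise ValueError("reserved memory must be smaller than the VM memory")
    return guest


def pod_memory_request_mib(limit_mib: int, overcommit_pct: int) -> int:
    """Pod memory request, assuming request = limit * 100 / overcommit (truncated)."""
    return limit_mib * 100 // overcommit_pct


limit_mib = 48 * 1024  # the 48G VM from this issue, assumed to be 48 GiB

for label, reserved in [("default", 100), ("forced to 0", 0), ("set to 256M", 256)]:
    print(
        f"{label:12s} reserved={reserved:3d} MiB "
        f"guest={guest_memory_mib(limit_mib, reserved)} MiB "
        f"request={pod_memory_request_mib(limit_mib, 120)} MiB"
    )
```

If this model is right, a larger reserved value shrinks the guest and leaves more of the cgroup limit for qemu and the other processes in the pod, which would be consistent with the guest OOM killer and guest swap triggering first in the load test.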