tensorflow: Memory leak with tf.py_function in eager mode using TF 2.0

System information

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): Yes

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): MacOS 10.14.6

- TensorFlow installed from (source or binary): binary

- TensorFlow version (use command below): 2.0.0

- Python version: 3.6.3 64bit

- Bazel version (if compiling from source): N/A

- GCC/Compiler version (if compiling from source): N/A

- CUDA/cuDNN version: N/A

- GPU model and memory: N/A

Describe the current behavior

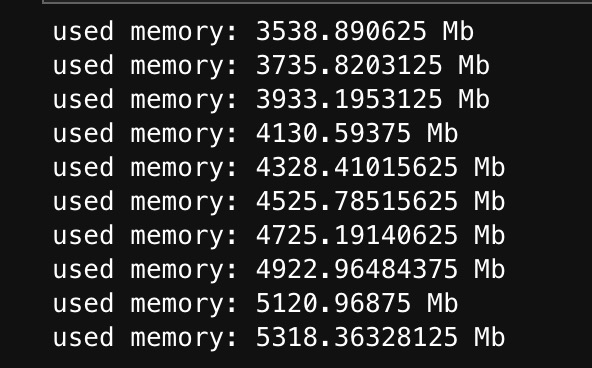

I wrote a custom Keras layer that calls `tf.py_function` in its `call` method. In eager mode, memory usage grows linearly with the number of times the layer is called on inputs.

Describe the expected behavior

Memory usage should remain stable.

Code to reproduce the issue

```python
import psutil

import numpy as np

import tensorflow as tf


class TestLayer(tf.keras.layers.Layer):

    def __init__(self, output_dim, **kwargs):
        super(TestLayer, self).__init__(**kwargs)
        self.output_dim = output_dim

    def build(self, input_shape):
        self.built = True

    def call(self, inputs):
        # Delegate the computation to Python; mock_output receives an EagerTensor.
        batch_embedding = tf.py_function(
            self.mock_output, inp=[inputs], Tout=tf.float64,
        )
        return batch_embedding

    def mock_output(self, inputs):
        # Return random float64 "embeddings" of shape (batch_size, output_dim).
        shape = inputs.shape.as_list()
        batch_size = shape[0]
        return tf.constant(np.random.random((batch_size, self.output_dim)))


test_layer = TestLayer(1000)

for i in range(1000):
    test_layer.call(tf.constant(np.random.randint(0, 100, (256, 10))))
    if i % 100 == 0:
        # Note: virtual_memory().used is system-wide, not specific to this process.
        used_mem = psutil.virtual_memory().used
        print('used memory: {} Mb'.format(used_mem / 1024 / 1024))
```
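The reproduction reads `psutil.virtual_memory().used`, which covers the whole machine and therefore also picks up other processes. A minimal sketch of a per-process alternative, assuming `psutil` is installed; `track_rss` is a hypothetical helper, not part of the original report:

```python
import psutil


def track_rss(step_fn, steps, every=100):
    """Call step_fn `steps` times and sample this process's RSS (in MiB).

    A sample is taken after steps 0, every, 2*every, ... so the list shows
    how resident memory of the current process evolves over the loop.
    """
    proc = psutil.Process()  # no pid argument: the current process
    samples = []
    for i in range(steps):
        step_fn()
        if i % every == 0:
            samples.append(proc.memory_info().rss / 1024 / 1024)
    return samples
```

With `TestLayer` from the reproduction, `track_rss(lambda: test_layer.call(tf.constant(np.random.randint(0, 100, (256, 10)))), steps=1000)` would return ten samples; a steadily rising list points at growth inside this process rather than elsewhere on the machine.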

Other info

We encountered this issue while developing a custom embedding layer in ElasticDL and resolving ElasticDL issue 1567.

About this issue

- State: closed

- Created 5 years ago

- Reactions: 2

- Comments: 15 (5 by maintainers)

Yes, there is only a very small memory leak in graph mode when `call` in the custom layer is decorated with `@tf.function`. However, I cannot convert the inputs to a NumPy array and process them in Python, because in graph mode the inputs are a `Tensor`, not an `EagerTensor`. That is why I hope to use `tf.py_function` with eager execution.

Hi all, I've boiled down the problem and created a simpler gist that demonstrates it in TF 2.4.

It has to do with `use_tape_cache`, at least for the example above. Here we can use `numpy_func` instead; that may work just as well for data preprocessing, since no gradient is needed there.

I'm experiencing a memory leak when training MobileNetV2, ResNet, etc. on ImageNet from scratch, using `tf.py_function` to map my dataset with a custom augmentation. The leak is not huge, but after three epochs of training on ImageNet my memory consumption had grown from 16 GB to 59 GB, and I had to stop the run because it had become too slow.

I'm using TensorFlow 2.3 on a p3.2xlarge instance for benchmarking purposes. I hope someone has a good way to deal with this; thank you all for your comments.
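The `numpy_func` suggestion in this thread presumably refers to `tf.numpy_function`, which, unlike `tf.py_function`, does not record gradients. A minimal sketch of using it for `tf.data` augmentation, assuming a hypothetical `augment` function and random stand-in images; the thread does not establish whether this avoids the memory growth reported above:

```python
import numpy as np
import tensorflow as tf


def augment(image):
    # Hypothetical augmentation; `image` arrives as a NumPy array, not a Tensor.
    # Random horizontal flip plus small Gaussian noise, done purely in NumPy.
    if np.random.rand() < 0.5:
        image = image[:, ::-1, :]
    noise = np.random.normal(0.0, 0.01, size=image.shape)
    return (image + noise).astype(np.float32)


def tf_augment(image):
    out = tf.numpy_function(augment, [image], tf.float32)
    # numpy_function returns a tensor of unknown shape; restore the static shape.
    out.set_shape(image.shape)
    return out


# Stand-in data: 16 random 32x32 RGB images.
images = np.random.random((16, 32, 32, 3)).astype(np.float32)
dataset = (
    tf.data.Dataset.from_tensor_slices(images)
    .map(tf_augment, num_parallel_calls=4)
    .batch(8)
)
for batch in dataset:
    print(batch.shape)  # two batches of shape (8, 32, 32, 3)
```

Because `Dataset.map` traces `tf_augment` once, the `numpy_function` op is built once rather than on every element; the `set_shape` call keeps downstream layers from seeing an unknown shape.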

Found some issues in `_internal_py_func` in `script_ops.py`. Every time `py_function` is called in eager mode, a new `EagerFunc` is created and a new `token` is added to `_py_funcs`. This `func` is also appended to `graph._py_funcs_used_in_graph`. This logic appears to be intended for graph mode and has a side effect in eager mode: `_py_funcs` and `graph._py_funcs_used_in_graph` keep growing.

This only accounts for a very small portion of the memory leak in the sample code above. There must be other causes for the large leak.
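Since `_internal_py_func` runs when the `py_function` op is built, one mitigation to try is decorating `call` with `tf.function`. Then the op is built once per trace instead of once per eager call. This also addresses the graph-mode concern raised earlier: inside a traced `call` the `inputs` are symbolic, but the Python function passed to `tf.py_function` still receives EagerTensors at run time, so `.numpy()` works there. A minimal sketch, assuming input shape and dtype stay fixed so `call` is traced only once; `TracedTestLayer` is a hypothetical name, and this does not address the other, unexplained sources of growth mentioned above:

```python
import numpy as np
import tensorflow as tf


class TracedTestLayer(tf.keras.layers.Layer):
    """Hypothetical variant of TestLayer whose `call` is traced by tf.function."""

    def __init__(self, output_dim, **kwargs):
        super().__init__(**kwargs)
        self.output_dim = output_dim

    @tf.function
    def call(self, inputs):
        # During tracing `inputs` is symbolic, so the py_function op (and its
        # Python-side registration) is created once per trace, not per call.
        return tf.py_function(self.mock_output, inp=[inputs], Tout=tf.float64)

    def mock_output(self, inputs):
        # At run time the py_function body receives an EagerTensor,
        # so converting to NumPy works even though `call` is traced.
        batch_size = inputs.numpy().shape[0]
        return np.random.random((batch_size, self.output_dim))


layer = TracedTestLayer(1000)
for _ in range(100):
    # Same shape and dtype every step, so `call` should not be retraced.
    out = layer(tf.constant(np.random.randint(0, 100, (256, 10))))
print(out.shape)
```

A new input shape or dtype triggers a retrace, which builds another `py_function` op; passing an `input_signature` with a `None` batch dimension to `tf.function` is one way to keep it to a single trace.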