tensorflow: Memory leak when using py_function inside tf.data.Dataset

System information

- OS Platform and Distribution: Linux Ubuntu 16.04

- TensorFlow version: 2.0

- Python version: 3.6.8

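Describe the current behavior

Memory usage (the RSS printed by the script below) keeps growing from step to step, even though each step builds a new tf.data.Dataset and fully consumes it.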

Describe the expected behavior

The tf.data.Dataset instance created in each step should be freed once that step finishes, so memory usage stays roughly flat across steps.

Code to reproduce the issue

```python
import os

import numpy as np
import psutil
import tensorflow as tf


def _generator():
    # Yields the same comma-separated record 100 times.
    for i in range(100):
        yield "1,2,3,4,5,6,7,8"


def _py_parse_data(record):
    # Runs as plain Python inside tf.py_function: add 1 to every field.
    record = record.numpy()
    record = bytes.decode(record)
    rl = record.split(",")
    rl = [str(int(r) + 1) for r in rl]
    return [",".join(rl)]


def parse_data(record, shape=10):
    # Convert the comma-separated string into a 1-D int64 SparseTensor.
    sparse_data = tf.strings.split([record], sep=",")
    sparse_data = tf.strings.to_number(sparse_data[0], tf.int64)
    ids_num = tf.cast(tf.size(sparse_data), tf.int64)
    indices = tf.range(0, ids_num, dtype=tf.int64)
    indices = tf.reshape(indices, shape=(-1, 1))
    sparse_data = tf.sparse.SparseTensor(
        indices, sparse_data, dense_shape=(shape,)
    )
    return sparse_data


process = psutil.Process(os.getpid())
step = 0

while step < 10000:
    # A new dataset (and a new py_function) is built on every step.
    t = tf.data.Dataset.from_generator(_generator, output_types=tf.string)
    t = t.map(lambda record: tf.py_function(_py_parse_data, [record], [tf.string]))
    t = t.map(parse_data)
    for d in t:
        pass  # Iterate only to consume the dataset; elements are unused.
    if step % 10 == 0:
        print("Memory : ", process.memory_info().rss)
    step += 1
```
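The script above reports total RSS, which shows that memory grows but not where. As a complementary check, Python's standard `tracemalloc` module can diff two snapshots and attribute growth to source lines. This is a minimal sketch, not part of the original report: `run_step` is a hypothetical placeholder for one iteration of the `while` loop (here it deliberately retains a buffer on each call), and `tracemalloc` only sees allocations made through Python's own allocators, so most of TensorFlow's native allocations are likely invisible to it.

```python
import tracemalloc

# Hypothetical stand-in for objects that survive from one step to the next.
_retained = []


def run_step(step):
    """Hypothetical placeholder for one loop iteration; leaks ~10 KB per call."""
    _retained.append(bytearray(10_000))


def measure_growth(step_fn, steps=100, top=3):
    """Run step_fn `steps` times and return the source lines that grew most."""
    tracemalloc.start()
    before = tracemalloc.take_snapshot()
    for step in range(steps):
        step_fn(step)
    after = tracemalloc.take_snapshot()
    tracemalloc.stop()
    # StatisticDiff entries, sorted by absolute size change (largest first).
    return after.compare_to(before, "lineno")[:top]


if __name__ == "__main__":
    for stat in measure_growth(run_step):
        print(stat)
```

In the real script, `run_step` would wrap the body of the `while` loop, and the printed lines point at the Python code whose allocations survive between steps.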

About this issue


- State: closed

- Created 5 years ago

- Reactions: 5

- Comments: 15 (9 by maintainers)

Commits related to this issue

- [py_function] Don't attach py_function to the global eager graph. Eager mode can incorrectly have a global graph. Disabling global graph on eager mode breaks too many assumptions so first introduce ... — committed to tensorflow/tensorflow by tensorflower-gardener 4 years ago


- Fix #51839: py_function records data for gradient when tape is not requested. The memory leak test added for #35084 in tf.data still passes. I renamed the confusing argument name eager= to use_eager... — committed to tensorflow/tensorflow by tensorflower-gardener 3 years ago

Closing as stale. Please reopen if you’d like to work on this further.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you.

@QiJune, is this still an issue? When running the code with TF v2.2, I did not observe much difference in memory usage between iterations. Please find the gist of it here. Thanks!

@kkimdev @jsimsa Hello, we are having the same problem with TF 1.14. We use `tf.py_function` to load a wave file, put this into a `tf.data.Dataset`, and get a leak where the amount of memory leaked is the size of the wave file being loaded.

OK, got it. But you don't instantiate the dataset in a loop, right?

@loretoparisi, do you also create the dataset in a for loop, or do you instantiate it only once?

I am asking because I suspect a memory leak as well, but I only create one dataset object and then train on it using `fit`. On my side, I use `tf.py_function` to load HDF5 files because of this error in tfio. I would also be interested in the script you used to get the last lines of your post.
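The script behind the object list further down is not included in the thread. One way to get a similar per-type growth count with only the standard library is to count live, GC-tracked objects by type name before and after a batch of iterations, similar in spirit to `objgraph.show_growth`. In this sketch, `FakeEagerFunc` is a hypothetical placeholder for whatever object leaks once per iteration.

```python
import gc
from collections import Counter


def count_types():
    """Count live, GC-tracked objects by type name."""
    gc.collect()
    return Counter(type(obj).__name__ for obj in gc.get_objects())


def growth(before, after, top=10):
    """Return (type_name, delta) pairs for types whose count increased."""
    # Counter subtraction keeps only positive differences.
    return (after - before).most_common(top)


class FakeEagerFunc:
    """Hypothetical stand-in for an object that leaks once per iteration."""


_registry = []

if __name__ == "__main__":
    before = count_types()
    for _ in range(100):
        _registry.append(FakeEagerFunc())
    after = count_types()
    for name, delta in growth(before, after):
        print(f"{name}: +{delta}")
```

Only objects tracked by the garbage collector are counted, so atomic objects such as ints and strings usually do not show up.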

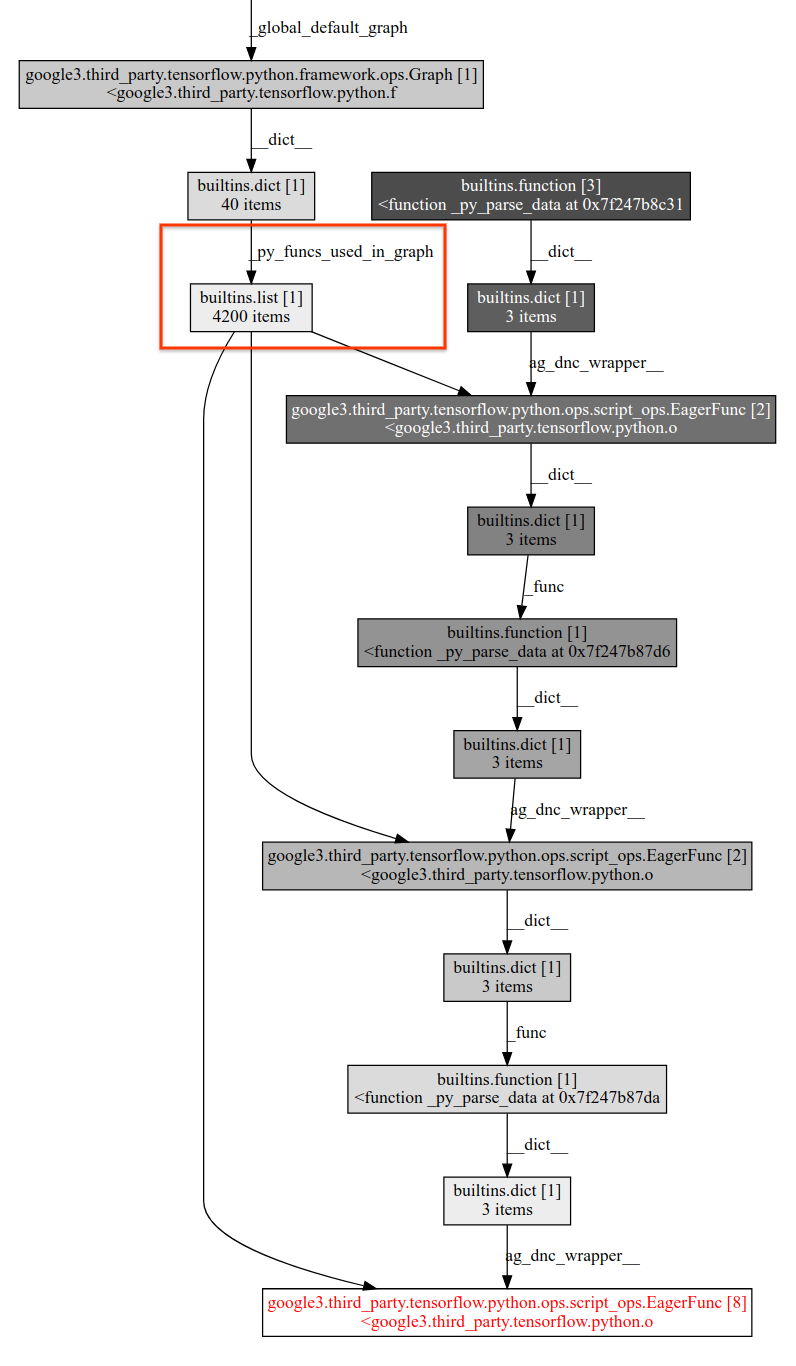

List of leaking objects per 100 iterations:

Leaking `EagerFunc` reference graph:

So it seems the problem is that a new `py_function` is created on every loop iteration, and `_py_funcs_used_in_graph` keeps growing.
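The diagnosis above can be illustrated without TensorFlow. The following is a pure-Python analog, not TensorFlow's actual implementation: `PyFuncRegistry`, `build_pipeline`, and `parse` are hypothetical names, and the sketch assumes, as the comment suggests, that every newly created `py_function` adds a strongly referenced entry to a registry such as `_py_funcs_used_in_graph` that is not cleared between steps.

```python
import itertools


class PyFuncRegistry:
    """Hypothetical analog of a process-wide registry of Python callables.

    Each registration stores a strong reference under a fresh token, so the
    callable stays alive until it is explicitly removed.
    """

    def __init__(self):
        self._funcs = {}
        self._tokens = itertools.count()

    def register(self, func):
        token = f"pyfunc_{next(self._tokens)}"
        self._funcs[token] = func
        return token

    def call(self, token, *args):
        return self._funcs[token](*args)

    def __len__(self):
        return len(self._funcs)


def parse(record):
    """Same per-record logic as _py_parse_data, on a plain str."""
    return ",".join(str(int(r) + 1) for r in record.split(","))


def build_pipeline(registry):
    """Hypothetical builder: wraps `parse` in a new lambda on every call,
    like `t.map(lambda record: tf.py_function(...))` in the loop above."""
    return registry.register(lambda record: parse(record))


# Rebuilding the pipeline on every step registers a new wrapper each time.
per_step = PyFuncRegistry()
for step in range(100):
    build_pipeline(per_step)

# Building it once and reusing the same token keeps a single entry.
once = PyFuncRegistry()
token = build_pipeline(once)
for step in range(100):
    once.call(token, "1,2,3,4,5,6,7,8")

print(len(per_step), len(once))  # 100 1
```

Building the dataset once, outside the loop, corresponds to the `once` case, which is why the thread asks whether the dataset is instantiated inside a loop.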