longhorn: [BUG] NodePublishVolume RWX CSI realpath failed to resolve symbolic links on microk8s

Describe the bug: The volume is created and attached (the Longhorn UI confirms this), but the pod that mounts the volume does not start and reports these lines in its log:

MountVolume.SetUp failed for volume "pvc-bee93258-1cfb-4d8a-a501-d34194e7a3fd" : rpc error: code = Internal desc = NodePublishVolume: failed to prepare mount point for volume pvc-bee93258-1cfb-4d8a-a501-d34194e7a3fd error exit status 1

To Reproduce Steps to reproduce the behavior:

- Install with helm chart

- Create storage class

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: longhorn-histdata
provisioner: driver.longhorn.io
allowVolumeExpansion: true
reclaimPolicy: Delete
volumeBindingMode: Immediate
parameters:
  numberOfReplicas: "2"
  staleReplicaTimeout: "2880"
  fromBackup: ""
  dataLocality: "best-effort" # Data should be where it's needed
  replicaAutoBalance: "least-effort" # Data is not critical and can be redownloaded

- Create PVC

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-hist-data
spec:
  accessModes:
    - ReadWriteMany
  volumeMode: Filesystem
  volumeName: pv-hist-data
  resources:
    requests:
      storage: 20Gi
  storageClassName: longhorn-histdata

- Mount with custom helm template:

volumeMounts:
  - name: data
    mountPath: {{ default "/mnt/data/" .Values.persistence.mountPath | quote }}
volumes:
  - name: data
  {{- if .Values.persistence.enabled }}
    persistentVolumeClaim:
      claimName: {{ .Values.persistence.pvcName }}
  {{- else }}
    emptyDir: {}
  {{- end -}}
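For reference, a values file that would make the template above take the persistentVolumeClaim branch might look like the sketch below. The key names (persistence.enabled, persistence.pvcName, persistence.mountPath) are taken from the template itself; the values are assumptions, with pvcName set to the PVC created in the previous step and mountPath restating the template's default.

```yaml
# Hypothetical values.yaml for the custom chart (values are assumptions)
persistence:
  enabled: true             # selects the persistentVolumeClaim branch instead of emptyDir
  pvcName: pvc-hist-data    # name of the PVC created above
  mountPath: /mnt/data/     # same as the template default, shown only for clarity
```

With persistence.enabled set to false, the same template falls back to an emptyDir volume, which can help confirm whether the pod starts when the Longhorn volume is taken out of the picture.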

Expected behavior: The volume is mounted and the pod starts.

Log

Relevant log entries:

level=debug msg="Running nsenter command: nsenter [--mount=/proc/1/ns/mnt --net=/proc/1/ns/net --uts=/proc/1/ns/uts -- /bin/realpath -e /var/snap/microk8s/common/var/lib/kubelet/pods/13006cc4-eae5-433d-ab9c-dfe31da7f9a8/volumes/kubernetes.io~csi/pvc-bee93258-1cfb-4d8a-a501-d34194e7a3fd/mount]"

level=error msg="failed to resolve symbolic links on /var/snap/microk8s/common/var/lib/kubelet/pods/13006cc4-eae5-433d-ab9c-dfe31da7f9a8/volumes/kubernetes.io~csi/pvc-bee93258-1cfb-4d8a-a501-d34194e7a3fd/mount" error="exit status 1"

level=debug msg="mount point /var/snap/microk8s/common/var/lib/kubelet/pods/13006cc4-eae5-433d-ab9c-dfe31da7f9a8/volumes/kubernetes.io~csi/pvc-bee93258-1cfb-4d8a-a501-d34194e7a3fd/mount try reading dir to make sure it's healthy"

level=error msg="NodePublishVolume: failed to prepare mount point for volume pvc-bee93258-1cfb-4d8a-a501-d34194e7a3fd error exit status 1"

level=error msg="NodePublishVolume: err: rpc error: code = Internal desc = NodePublishVolume: failed to prepare mount point for volume pvc-bee93258-1cfb-4d8a-a501-d34194e7a3fd error exit status 1"

Environment:

- Longhorn version: 1.2.0

- Installation method (e.g. Rancher Catalog App/Helm/Kubectl): Helm Chart using ArgoCD

- Kubernetes distro (e.g. RKE/K3s/EKS/OpenShift) and version: Microk8s 1.21

- Number of management node in the cluster: 3

- Number of worker node in the cluster: 3 (same nodes)

- Node config

- OS type and version: Ubuntu 20.04

- CPU per node: 4

- Memory per node: 16GB

- Disk type(e.g. SSD/NVMe): SSD

- Network bandwidth between the nodes: 500Mbit

- Underlying Infrastructure (e.g. on AWS/GCE, EKS/GKE, VMWare/KVM, Baremetal): Bare metal

- Number of Longhorn volumes in the cluster: 1

About this issue

- State: closed

- Created 3 years ago

- Reactions: 1

- Comments: 21 (11 by maintainers)

I’ve been testing it since the issue was raised (and the solution suggested) and can confirm it’s stable and has not caused any problems since then (k3s on RPI: 14 nodes total, two data nodes with NVMe drives, various RWX volumes each mounted at least three times and operating under load with constant read/write operations).

Thanks @kaxing @lukaszraczylo

@lukaszraczylo, if you want to verify that the change with the nsenter wrapper fixes the issue in your environment, you can try deploying the RC chart on a TEST CLUSTER. We don’t support upgrades or downgrades from RC releases.

REF: https://github.com/longhorn/longhorn/tree/v1.2.3/chart

Hello @GeroL, would you be able to try again with the kubelet --root-dir argument pointing to:

/var/snap/microk8s/common/var/lib/kubelet

I am able to use this helm3 command to install a local chart pulled from longhorn/charts@674a553a9363996e4150e487ad6cfb59479db3a1:

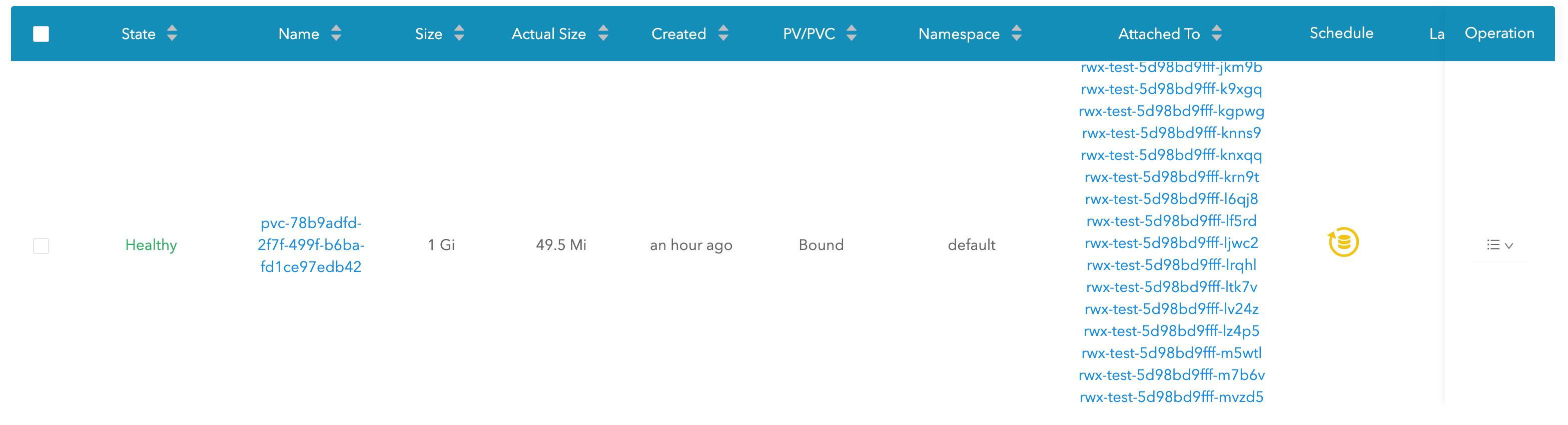
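Since the command itself is only available as a screenshot, here is a minimal sketch of how the kubelet root directory could be passed to a Helm-based install through a values file. It assumes the Longhorn chart exposes the setting as csi.kubeletRootDir, so check the values.yaml of the chart version you deploy before relying on it. The path is the microk8s snap location that appears in the log entries above.

```yaml
# Sketch of a Longhorn chart values override (assumes the chart exposes csi.kubeletRootDir)
csi:
  # Kubelet root directory as used by a snap-installed microk8s
  kubeletRootDir: /var/snap/microk8s/common/var/lib/kubelet
```

For an ArgoCD-managed install like the one described in the report, the same override would go into the Helm values of the Application rather than onto a command line.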

A working RWX volume for reference:

Thanks for the update @lukaszraczylo !

Hey @khushboo-rancher, I am able to launch the rwx-test workload on microk8s version v1.22.4-3+adc4115d99034. With the workload replicas pushed to 128, both the cluster (1+3 nodes with 4 GB RAM each) and Longhorn (master) remain healthy.

As discussed with @PhanLe1010, the core issue seems to be the realpath lookup. @khushboo-rancher, you can try installing microk8s via snap to see if this can be reproduced in that type of environment.