longhorn: [BUG] MountVolume.SetUp failed for volume ~ rpc error: code = Internal desc = exit status 1 due to multipathd on the node

Environment:

- Rancher 2.3.6

- Longhorn 0.8.0

- Flatcar Linux (CoreOS)

- Kubernetes 1.16.8

Hi,

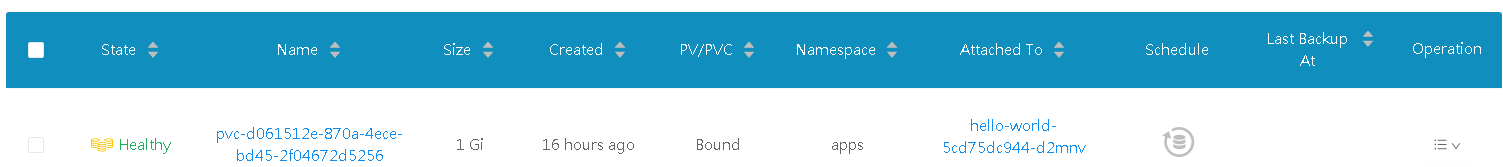

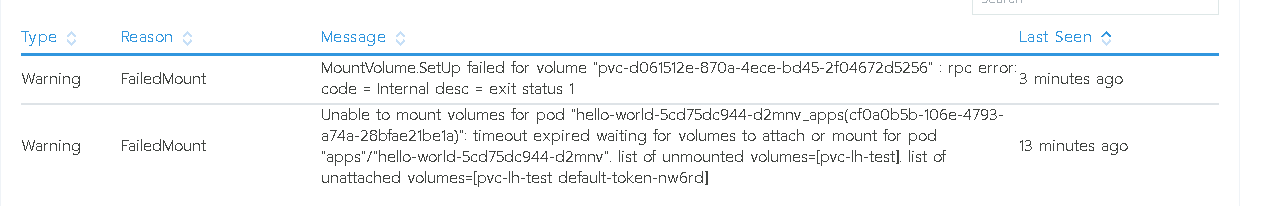

I have been able to get LH running and I see the volume has been successfully created on the underlying storage.

The pod with the volume is not starting.

longhorn-manager

time="2020-04-15T17:12:18Z" level=debug msg="Replica controller us2-k8swkr02 picked up pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-1535f3bf"

time="2020-04-15T17:12:18Z" level=debug msg="Instance handler updated instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-1535f3bf state, old state , new state stopped"

time="2020-04-15T17:12:18Z" level=debug msg="Replica controller us2-k8swkr02 picked up pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-06f4526e"

time="2020-04-15T17:12:18Z" level=debug msg="Instance handler updated instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-06f4526e state, old state , new state stopped"

time="2020-04-15T17:12:19Z" level=debug msg="Replica controller us2-k8swkr02 picked up pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-b923d985"

time="2020-04-15T17:12:25Z" level=debug msg="Volume controller us2-k8swkr02 picked up pvc-d061512e-870a-4ece-bd45-2f04672d5256"

time="2020-04-15T17:12:25Z" level=debug msg="Prepare to create instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-b923d985"

time="2020-04-15T17:12:25Z" level=info msg="Event(v1.ObjectReference{Kind:\"Replica\", Namespace:\"longhorn-system\", Name:\"pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-b923d985\", UID:\"2fcd4749-4cc4-46f6-9964-2e7d9a0ccaf5\", APIVersion:\"longhorn.io/v1beta1\", ResourceVersion:\"2864440\", FieldPath:\"\"}): type: 'Normal' reason: 'Start' Starts pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-b923d985"

time="2020-04-15T17:12:25Z" level=debug msg="Requeue longhorn-system/pvc-d061512e-870a-4ece-bd45-2f04672d5256 due to error <nil> or Operation cannot be fulfilled on replicas.longhorn.io \"pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-06f4526e\": the object has been modified; please apply your changes to the latest version and try again"

time="2020-04-15T17:12:26Z" level=debug msg="Instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-b923d985 starts running, IP 10.42.4.71"

time="2020-04-15T17:12:26Z" level=debug msg="Instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-b923d985 starts running, Port 10000"

time="2020-04-15T17:12:26Z" level=debug msg="Instance handler updated instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-r-b923d985 state, old state stopped, new state running"

time="2020-04-15T17:12:26Z" level=debug msg="Engine controller us2-k8swkr02 picked up pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87"

time="2020-04-15T17:12:26Z" level=debug msg="Instance process pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87 had been created, need to wait for instance manager update"

time="2020-04-15T17:12:26Z" level=debug msg="Instance process pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87 had been created, need to wait for instance manager update"

time="2020-04-15T17:12:27Z" level=debug msg="Instance process pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87 had been created, need to wait for instance manager update"

time="2020-04-15T17:12:27Z" level=debug msg="Instance handler updated instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87 state, old state stopped, new state starting"

time="2020-04-15T17:12:30Z" level=debug msg="Instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87 starts running, IP 10.42.4.70"

time="2020-04-15T17:12:30Z" level=debug msg="Instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87 starts running, Port 10000"

time="2020-04-15T17:12:30Z" level=debug msg="Instance handler updated instance pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87 state, old state starting, new state running"

time="2020-04-15T17:12:30Z" level=debug msg="Start monitoring pvc-d061512e-870a-4ece-bd45-2f04672d5256-e-bf525e87"

time="2020-04-15T17:12:30Z" level=info msg="Event(v1.ObjectReference{Kind:\"Volume\", Namespace:\"longhorn-system\", Name:\"pvc-d061512e-870a-4ece-bd45-2f04672d5256\", UID:\"fecc104d-8ec6-48c4-8af0-0ab25ec1ba90\", APIVersion:\"longhorn.io/v1beta1\", ResourceVersion:\"2864445\", FieldPath:\"\"}): type: 'Normal' reason: 'Attached' volume pvc-d061512e-870a-4ece-bd45-2f04672d5256 has been attached to us2-k8swkr02"

longhorn-driver

time="2020-04-15T13:40:25Z" level=debug msg="Deploying CSI driver"

time="2020-04-15T13:40:25Z" level=debug msg="proc cmdline detection pod discover-proc-kubelet-cmdline in phase: Pending"

time="2020-04-15T13:40:26Z" level=debug msg="proc cmdline detection pod discover-proc-kubelet-cmdline in phase: Pending"

time="2020-04-15T13:40:27Z" level=debug msg="proc cmdline detection pod discover-proc-kubelet-cmdline in phase: Running"

time="2020-04-15T13:40:28Z" level=debug msg="proc cmdline detection pod discover-proc-kubelet-cmdline in phase: Running"

time="2020-04-15T13:40:29Z" level=info msg="Proc found: kubelet"

time="2020-04-15T13:40:29Z" level=info msg="Try to find arg [--root-dir] in cmdline: [kubelet --cluster-dns=10.43.0.10 --hostname-override=us2-k8swkr01 --pod-infra-container-image=rancher/pause:3.1 --make-iptables-util-chains=true --cni-conf-dir=/etc/cni/net.d --authentication-token-webhook=true --resolv-conf=/etc/resolv.conf --cloud-provider= --cgroups-per-qos=True --v=2 --read-only-port=10255 --cni-bin-dir=/opt/cni/bin --root-dir=/var/lib/kubelet --kubeconfig=/etc/kubernetes/ssl/kubecfg-kube-node.yaml --authorization-mode=Webhook --address=0.0.0.0 --volume-plugin-dir=/var/lib/kubelet/volumeplugins --network-plugin=cni --client-ca-file=/etc/kubernetes/ssl/kube-ca.pem --fail-swap-on=false --streaming-connection-idle-timeout=30m --event-qps=0 --tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305 --anonymous-auth=false --cluster-domain=cluster.local --cgroup-driver=cgroupfs --resolv-conf=/run/systemd/resolve/resolv.conf ]"

time="2020-04-15T13:40:29Z" level=info msg="Detected root dir path: /var/lib/kubelet"

time="2020-04-15T13:40:29Z" level=info msg="Upgrading Longhorn related components for CSI v1.1.0"

time="2020-04-15T13:40:29Z" level=debug msg="Creating CSI Driver driver.longhorn.io"

time="2020-04-15T13:40:29Z" level=debug msg="Created CSI Driver driver.longhorn.io"

time="2020-04-15T13:40:29Z" level=debug msg="Creating service csi-attacher"

time="2020-04-15T13:40:29Z" level=debug msg="Created service csi-attacher"

time="2020-04-15T13:40:29Z" level=debug msg="Creating deployment csi-attacher"

time="2020-04-15T13:40:29Z" level=debug msg="Created deployment csi-attacher"

time="2020-04-15T13:40:29Z" level=debug msg="Creating service csi-provisioner"

time="2020-04-15T13:40:29Z" level=debug msg="Created service csi-provisioner"

time="2020-04-15T13:40:29Z" level=debug msg="Creating deployment csi-provisioner"

time="2020-04-15T13:40:29Z" level=debug msg="Created deployment csi-provisioner"

time="2020-04-15T13:40:30Z" level=debug msg="Creating service csi-resizer"

time="2020-04-15T13:40:30Z" level=debug msg="Created service csi-resizer"

time="2020-04-15T13:40:30Z" level=debug msg="Creating deployment csi-resizer"

time="2020-04-15T13:40:30Z" level=debug msg="Created deployment csi-resizer"

time="2020-04-15T13:40:30Z" level=debug msg="Creating daemon set longhorn-csi-plugin"

time="2020-04-15T13:40:30Z" level=debug msg="Created daemon set longhorn-csi-plugin"

time="2020-04-15T13:40:30Z" level=debug msg="Creating service compatible-csi-attacher"

time="2020-04-15T13:40:30Z" level=debug msg="Created service compatible-csi-attacher"

time="2020-04-15T13:40:30Z" level=debug msg="Creating deployment compatible-csi-attacher"

time="2020-04-15T13:40:30Z" level=debug msg="Created deployment compatible-csi-attacher"

time="2020-04-15T13:40:30Z" level=debug msg="CSI deployment done"

conpatibility-node-driver-registrar

I0415 13:40:32.718021 1 main.go:110] Version: v1.2.0-0-g6ef000ae

I0415 13:40:32.718114 1 main.go:120] Attempting to open a gRPC connection with: "/var/lib/kubelet/plugins/io.rancher.longhorn/csi.sock"

I0415 13:40:32.718146 1 connection.go:151] Connecting to unix:///var/lib/kubelet/plugins/io.rancher.longhorn/csi.sock

I0415 13:40:33.720229 1 main.go:127] Calling CSI driver to discover driver name

I0415 13:40:33.720281 1 connection.go:180] GRPC call: /csi.v1.Identity/GetPluginInfo

I0415 13:40:33.720297 1 connection.go:181] GRPC request: {}

I0415 13:40:33.725650 1 connection.go:183] GRPC response: {"name":"io.rancher.longhorn","vendor_version":"v0.8.0"}

I0415 13:40:33.726588 1 connection.go:184] GRPC error: <nil>

I0415 13:40:33.726608 1 main.go:137] CSI driver name: "io.rancher.longhorn"

I0415 13:40:33.726763 1 node_register.go:58] Starting Registration Server at: /registration/io.rancher.longhorn-reg.sock

I0415 13:40:33.727143 1 node_register.go:67] Registration Server started at: /registration/io.rancher.longhorn-reg.sock

I0415 13:41:22.090870 1 main.go:77] Received GetInfo call: &InfoRequest{}

I0415 13:41:22.706991 1 main.go:87] Received NotifyRegistrationStatus call: &RegistrationStatus{PluginRegistered:true,Error:,}

longhorn-csi-plugin

E0416 08:47:25.148934 1 mount_linux.go:487] format of disk "/dev/longhorn/pvc-d061512e-870a-4ece-bd45-2f04672d5256" failed: type:("ext4") target:("/var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount") options:(["defaults"])error:(exit status 1)

time="2020-04-16T08:47:25Z" level=error msg="GRPC error: rpc error: code = Internal desc = exit status 1"

time="2020-04-16T08:49:27Z" level=info msg="GRPC call: /csi.v1.Node/NodeGetCapabilities"

time="2020-04-16T08:49:27Z" level=info msg="GRPC request: {}"

time="2020-04-16T08:49:27Z" level=info msg="GRPC response: {\"capabilities\":[{\"Type\":{\"Rpc\":{\"type\":3}}}]}"

time="2020-04-16T08:49:27Z" level=info msg="GRPC call: /csi.v1.Node/NodeGetCapabilities"

time="2020-04-16T08:49:27Z" level=info msg="GRPC request: {}"

time="2020-04-16T08:49:27Z" level=info msg="GRPC response: {\"capabilities\":[{\"Type\":{\"Rpc\":{\"type\":3}}}]}"

time="2020-04-16T08:49:27Z" level=info msg="GRPC call: /csi.v1.Node/NodePublishVolume"

time="2020-04-16T08:49:27Z" level=info msg="GRPC request: {\"target_path\":\"/var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount\",\"volume_capability\":{\"AccessType\":{\"Mount\":{\"fs_type\":\"ext4\"}},\"access_mode\":{\"mode\":1}},\"volume_context\":{\"baseImage\":\"\",\"fromBackup\":\"\",\"numberOfReplicas\":\"3\",\"staleReplicaTimeout\":\"30\",\"storage.kubernetes.io/csiProvisionerIdentity\":\"1586958032802-8081-driver.longhorn.io\"},\"volume_id\":\"pvc-d061512e-870a-4ece-bd45-2f04672d5256\"}"

time="2020-04-16T08:49:27Z" level=info msg="NodeServer NodePublishVolume req: volume_id:\"pvc-d061512e-870a-4ece-bd45-2f04672d5256\" target_path:\"/var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount\" volume_capability:<mount:<fs_type:\"ext4\" > access_mode:<mode:SINGLE_NODE_WRITER > > volume_context:<key:\"baseImage\" value:\"\" > volume_context:<key:\"fromBackup\" value:\"\" > volume_context:<key:\"numberOfReplicas\" value:\"3\" > volume_context:<key:\"staleReplicaTimeout\" value:\"30\" > volume_context:<key:\"storage.kubernetes.io/csiProvisionerIdentity\" value:\"1586958032802-8081-driver.longhorn.io\" > "

E0416 08:49:27.567704 1 mount_linux.go:143] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t ext4 -o defaults /dev/longhorn/pvc-d061512e-870a-4ece-bd45-2f04672d5256 /var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount

Output: mount: /var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount: /dev/longhorn/pvc-d061512e-870a-4ece-bd45-2f04672d5256 already mounted or mount point busy.

E0416 08:49:27.576477 1 mount_linux.go:487] format of disk "/dev/longhorn/pvc-d061512e-870a-4ece-bd45-2f04672d5256" failed: type:("ext4") target:("/var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount") options:(["defaults"])error:(exit status 1)

time="2020-04-16T08:49:27Z" level=error msg="GRPC error: rpc error: code = Internal desc = exit status 1"

time="2020-04-16T08:51:29Z" level=info msg="GRPC call: /csi.v1.Node/NodeGetCapabilities"

time="2020-04-16T08:51:29Z" level=info msg="GRPC request: {}"

time="2020-04-16T08:51:29Z" level=info msg="GRPC response: {\"capabilities\":[{\"Type\":{\"Rpc\":{\"type\":3}}}]}"

time="2020-04-16T08:51:29Z" level=info msg="GRPC call: /csi.v1.Node/NodeGetCapabilities"

time="2020-04-16T08:51:29Z" level=info msg="GRPC request: {}"

time="2020-04-16T08:51:29Z" level=info msg="GRPC response: {\"capabilities\":[{\"Type\":{\"Rpc\":{\"type\":3}}}]}"

time="2020-04-16T08:51:29Z" level=info msg="GRPC call: /csi.v1.Node/NodePublishVolume"

time="2020-04-16T08:51:29Z" level=info msg="GRPC request: {\"target_path\":\"/var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount\",\"volume_capability\":{\"AccessType\":{\"Mount\":{\"fs_type\":\"ext4\"}},\"access_mode\":{\"mode\":1}},\"volume_context\":{\"baseImage\":\"\",\"fromBackup\":\"\",\"numberOfReplicas\":\"3\",\"staleReplicaTimeout\":\"30\",\"storage.kubernetes.io/csiProvisionerIdentity\":\"1586958032802-8081-driver.longhorn.io\"},\"volume_id\":\"pvc-d061512e-870a-4ece-bd45-2f04672d5256\"}"

time="2020-04-16T08:51:29Z" level=info msg="NodeServer NodePublishVolume req: volume_id:\"pvc-d061512e-870a-4ece-bd45-2f04672d5256\" target_path:\"/var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount\" volume_capability:<mount:<fs_type:\"ext4\" > access_mode:<mode:SINGLE_NODE_WRITER > > volume_context:<key:\"baseImage\" value:\"\" > volume_context:<key:\"fromBackup\" value:\"\" > volume_context:<key:\"numberOfReplicas\" value:\"3\" > volume_context:<key:\"staleReplicaTimeout\" value:\"30\" > volume_context:<key:\"storage.kubernetes.io/csiProvisionerIdentity\" value:\"1586958032802-8081-driver.longhorn.io\" > "

E0416 08:51:30.015597 1 mount_linux.go:143] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t ext4 -o defaults /dev/longhorn/pvc-d061512e-870a-4ece-bd45-2f04672d5256 /var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount

Output: mount: /var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount: /dev/longhorn/pvc-d061512e-870a-4ece-bd45-2f04672d5256 already mounted or mount point busy.

E0416 08:51:30.024131 1 mount_linux.go:487] format of disk "/dev/longhorn/pvc-d061512e-870a-4ece-bd45-2f04672d5256" failed: type:("ext4") target:("/var/lib/kubelet/pods/cf0a0b5b-106e-4793-a74a-28bfae21be1a/volumes/kubernetes.io~csi/pvc-d061512e-870a-4ece-bd45-2f04672d5256/mount") options:(["defaults"])error:(exit status 1)

time="2020-04-16T08:51:30Z" level=error msg="GRPC error: rpc error: code = Internal desc = exit status 1"

Expected behavior: Pod should mount volume and startup.

About this issue

- Original URL

- State: closed

- Created 4 years ago

- Reactions: 2

- Comments: 38 (14 by maintainers)

To prevent multipath daemon from adding additional block devices created by Longhorn

First check devices created by Longhorn using

lsblkNotice that Longhorn device names start with

/dev/sd[x]/etc/multipath.confif not existeddevnode "^sd[a-z0-9]+"Restart multipath service

# systemctl restart multipathd.serviceVerify that configuration is applied

# multipath -tRef: http://manpages.ubuntu.com/manpages/bionic/man5/multipath.conf.5.html#blacklist section

Just posting so that it may help someone who stumbles across this as well.

I also experienced a similar issue with Longhorn and

iscsid. After rebuilding my k8s cluster from scratch and installing Longhorn, I was attempting to restore a PVC from a Minio/S3 backup. My backup would restore, but mounting the PV into the pod resulted in (kubectl describe pod my-pod-12345-123456):So after reading this thread I realized that my LVM was mounted to

/var/lib/longhornusing the/dev/disk/by-uuid/method, like so (/etc/fstab):Since this was an LVM I instead decided to just mount the disk using the logical volume name, converting the previous line in

/etc/fstabto one like this:As far as I can tell, this has resolved my issue of not being able to mount restored PV/PVCs into pods.

Just encountered this issue (after encountering

UnmountVolume.NewUnmounter failed for volume and vol_data.json: no such file or directoryand rebooting the node) with Longhorn 1.5.1. Adding a blacklist section in the multipath config seems to have worked, but what the hell is this and why do I need to do any of that? Any way to protect against that behavior?I have no clue what multipath is, what problem its trying to solve, or why it causes this issue, but @meldafrawi’s solution fixed the problem for me nearly instantly. Thank you!

@ryantiger658 @mogwai There were two reasons we saw for this issue. The first one happens with AWS mapping mechanism and has been fixed in v1.0.0. #1223

The other one is there is a local process pick up the block device created by Longhorn automatically. Normally what we saw is multipath process. To find out the culprit:

ls -l /dev/longhorn/. The major:minor number will be shown as e.g.8, 32before the device name.ls -l /devand find the device for the same major:minor number, e.g./dev/sde.lsofto get the list of file handler in use, then grep for the device name (e.g.sdeor/dev/longhorn/xxx. You should find one there.Then you can figure out what to do next.

For the multipath process, you can use e.g.

multipath -v2 -dto see if the Longhorn device was picked up automatically.If it’s not the multipath process,

lsofshould have the related info.I think Longhorn should alert in the UI if it detects this issue

How do I destroy it 😃?

I tried killing the process but it just restarts.

I also tried removing the multipath for that device using the following:

This worked and the container is now running 💪 but multipath picks it up automatically when a new pvc is created.

@meldafrawi 's suggestion worked: https://github.com/longhorn/longhorn/issues/1210#issuecomment-671689746

@meldafrawi Your solution works, and is there any plan to perform this automatically by longhorn in the future?

Yeah, your multipath process hijacked the device. 😃