istio: pilot does not see all pods in service

Bug description

We have a service with two pods. Sometimes the Istio ingressgateway sees only one of them, even though both pods work fine. The service lists both endpoints:

kubectl describe svc -n pfphome-prod pfphome-pfp-service

Name: pfphome-pfp-service

Namespace: pfphome-prod

Selector: app.kubernetes.io/name=pfphome,app.pfp.dev/envaronment=prod,app.pfp.dev/release=pfphome-master

Type: ClusterIP

IP: 10.103.168.183

Port: http 80/TCP

TargetPort: http/TCP

Endpoints: 10.103.68.4:3000,10.103.72.2:3000

Session Affinity: None

Events: <none>

But the Envoy cluster config on the ingressgateway contains only one of them (10.103.68.4:3000):

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::default_priority::max_connections::4294967295

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::default_priority::max_pending_requests::4294967295

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::default_priority::max_requests::4294967295

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::default_priority::max_retries::3

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::high_priority::max_connections::1024

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::high_priority::max_pending_requests::1024

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::high_priority::max_requests::1024

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::high_priority::max_retries::3

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::added_via_api::true

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::cx_active::1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::cx_connect_fail::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::cx_total::5528

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_active::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_error::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_success::15124

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_timeout::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_total::15137

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::hostname::

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::health_flags::healthy

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::weight::1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::region::europe-north1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::zone::europe-north1-a

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::sub_zone::

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::canary::false

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::priority::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::success_rate::-1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::local_origin_success_rate::-1
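
The mismatch above can be checked mechanically by comparing the Service's Endpoints with the hosts in the Envoy cluster dump. This is a minimal Python sketch, assuming the dump uses the plain-text `cluster::host::stat::value` layout shown above and IPv4 addresses only. The helper names `envoy_hosts` and `missing_endpoints` are hypothetical and are not part of Istio or Envoy.

```python
import re

# Matches per-host stat lines such as
#   outbound|80||svc.ns.svc.cluster.local::10.103.68.4:3000::cx_active::1
# Cluster-level lines (e.g. ...::default_priority::max_retries::3) have no
# ip:port in the second field, so they do not match and are skipped.
HOST_LINE = re.compile(r"^(?P<cluster>.+?)::(?P<host>\d+\.\d+\.\d+\.\d+:\d+)::")


def envoy_hosts(clusters_text, cluster_name):
    """Return the set of ip:port hosts Envoy lists for cluster_name."""
    hosts = set()
    for line in clusters_text.splitlines():
        match = HOST_LINE.match(line.strip())
        if match and match.group("cluster") == cluster_name:
            hosts.add(match.group("host"))
    return hosts


def missing_endpoints(k8s_endpoints, clusters_text, cluster_name):
    """Return endpoints that Kubernetes reports but Envoy does not list.

    k8s_endpoints is the comma-separated "Endpoints:" value printed by
    `kubectl describe svc`, e.g. "10.103.68.4:3000,10.103.72.2:3000".
    """
    wanted = {e.strip() for e in k8s_endpoints.split(",") if e.strip()}
    return sorted(wanted - envoy_hosts(clusters_text, cluster_name))
```

With the values from this report, `missing_endpoints("10.103.68.4:3000,10.103.72.2:3000", dump, "outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local")` would flag `10.103.72.2:3000` as the endpoint the ingressgateway never received.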

If I recreate the Pilot pod:

kubectl delete po -n istio-system istio-pilot-69556cbcd6-vx4sz

pod "istio-pilot-69556cbcd6-vx4sz" deleted

kubectl logs -n istio-system istio-pilot-69556cbcd6-btqmk discovery | grep pfphome-prod

2020-01-10T09:43:58.740662Z info Handling event add for pod pfphome-pfp-app-86c8c87458-wvgm7 in namespace pfphome-prod -> 10.103.68.4

2020-01-10T09:43:58.741579Z info Handling event add for pod pfphome-pfp-app-86c8c87458-m9vtn in namespace pfphome-prod -> 10.103.72.2

2020-01-10T09:43:58.742078Z info Handle EDS endpoint pfphome-pfp-service in namespace pfphome-prod -> [10.103.68.4 10.103.72.2]

2020-01-10T09:43:58.742099Z info ads Full push, new service pfphome-pfp-service.pfphome-prod.svc.cluster.local

2020-01-10T09:43:58.742107Z info ads Endpoint updating service account spiffe://cluster.local/ns/pfphome-prod/sa/default pfphome-pfp-service.pfphome-prod.svc.cluster.local
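
To see which addresses Pilot itself has registered for a service, the `Handle EDS endpoint` lines above can be parsed out of the discovery log. This is a rough Python sketch that assumes the log line layout is exactly the one shown in this report. The function name `eds_endpoints` is a hypothetical helper, not an Istio API.

```python
import re

# Matches lines such as
#   ... info Handle EDS endpoint pfphome-pfp-service in namespace pfphome-prod -> [10.103.68.4 10.103.72.2]
EDS_LINE = re.compile(
    r"Handle EDS endpoint (?P<service>\S+) in namespace (?P<namespace>\S+)"
    r" -> \[(?P<ips>[^\]]*)\]"
)


def eds_endpoints(log_text):
    """Map (namespace, service) to the IP list from the latest EDS log line."""
    result = {}
    for line in log_text.splitlines():
        match = EDS_LINE.search(line)
        if match:
            key = (match.group("namespace"), match.group("service"))
            # Later lines overwrite earlier ones, so the last update wins.
            result[key] = match.group("ips").split()
    return result
```

Feeding it the output of the `kubectl logs ... discovery` command above gives a quick view of whether Pilot knows about both pod IPs before looking at the gateway.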

I get the correct config on the ingressgateway, with both endpoints:

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::default_priority::max_connections::4294967295

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::default_priority::max_pending_requests::4294967295

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::default_priority::max_requests::4294967295

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::default_priority::max_retries::3

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::high_priority::max_connections::1024

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::high_priority::max_pending_requests::1024

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::high_priority::max_requests::1024

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::high_priority::max_retries::3

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::added_via_api::true

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::cx_active::2

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::cx_connect_fail::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::cx_total::5679

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_active::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_error::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_success::16796

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_timeout::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::rq_total::16814

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::hostname::

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::health_flags::healthy

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::weight::1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::region::europe-north1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::zone::europe-north1-a

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::sub_zone::

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::canary::false

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::priority::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::success_rate::-1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.68.4:3000::local_origin_success_rate::-1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::cx_active::2

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::cx_connect_fail::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::cx_total::10

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::rq_active::1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::rq_error::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::rq_success::62

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::rq_timeout::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::rq_total::63

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::hostname::

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::health_flags::healthy

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::weight::1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::region::europe-north1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::zone::europe-north1-a

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::sub_zone::

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::canary::false

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::priority::0

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::success_rate::-1

outbound|80||pfphome-pfp-service.pfphome-prod.svc.cluster.local::10.103.72.2:3000::local_origin_success_rate::-1

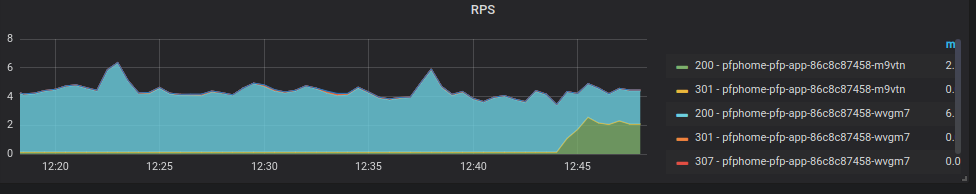

And traffic goes to both pods.

Expected behavior

Steps to reproduce the bug

Version (include the output of istioctl version --remote and kubectl version and helm version if you used Helm)

istioctl version --remote

client version: 1.4.0

control plane version: 1.4.2

data plane version: 1.4.2 (4 proxies)

How was Istio installed?

Environment where bug was observed (cloud vendor, OS, etc)

GKE

About this issue

- State: closed

- Created 4 years ago

- Reactions: 18

- Comments: 36 (19 by maintainers)

Yes! Release 1.4.5 solved it! There have been no “Endpoint without pod” messages in the logs for the last 3 days, and Pilot is using all endpoints in all services. Thanks!
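
A check like the one described in this comment can be scripted. This is a small Python sketch that counts discovery log lines containing the quoted phrase. Only the phrase “Endpoint without pod” comes from this thread. The rest of the log line format is unknown here, so the match is a plain substring test, and `count_endpoint_without_pod` is a hypothetical helper name.

```python
def count_endpoint_without_pod(log_text):
    """Count log lines that mention the "Endpoint without pod" message."""
    # Substring match only: the surrounding log format is an assumption-free
    # unknown, so nothing beyond the quoted phrase is parsed.
    return sum(
        1 for line in log_text.splitlines() if "Endpoint without pod" in line
    )
```

A count of zero over several days of Pilot logs matches the behavior reported after the upgrade.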