transformers: OSError: Can't load config for 'bert-base-uncased'

Environment info

It happens on my local machine, in Colab, and for my colleagues as well.

- transformers version:

- Platform: Windows, Colab

- Python version: 3.7

- PyTorch version (GPU?): 1.8.1 (GPU yes)

- Tensorflow version (GPU?):

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

Who can help

@LysandreJik It is to do with ‘bert-base-uncased’

Information

Hi, I started getting this error suddenly this afternoon. Everything had been working fine for days. It happens on my local machine, in Colab, and for my colleagues too. I can open https://huggingface.co/bert-base-uncased/resolve/main/config.json in a browser with no problem. By the way, I'm in Singapore. Any urgent help would be appreciated, because I'm rushing a project and am stuck on this.

Thanks

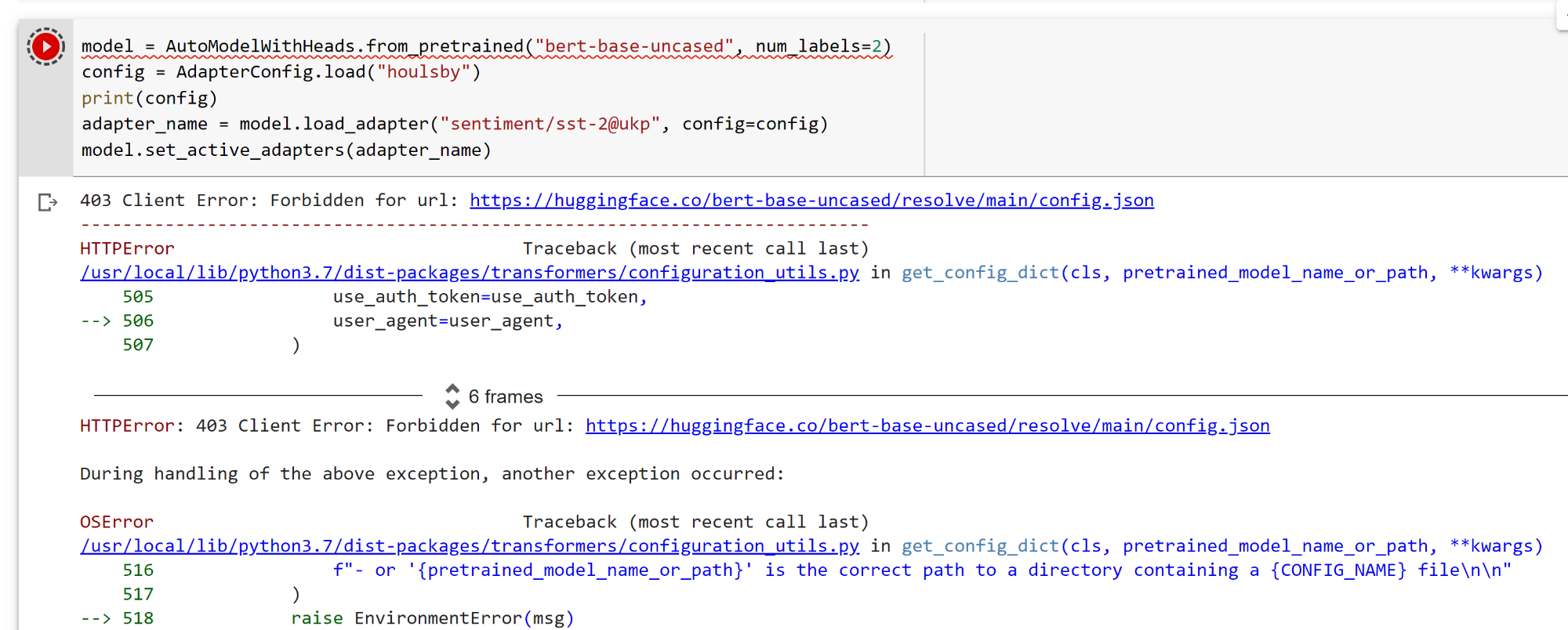

403 Client Error: Forbidden for url: https://huggingface.co/bert-base-uncased/resolve/main/config.json

HTTPError Traceback (most recent call last)
/usr/local/lib/python3.7/dist-packages/transformers/configuration_utils.py in get_config_dict(cls, pretrained_model_name_or_path, **kwargs)
    505 use_auth_token=use_auth_token,
--> 506 user_agent=user_agent,
    507 )

6 frames

HTTPError: 403 Client Error: Forbidden for url: https://huggingface.co/bert-base-uncased/resolve/main/config.json

During handling of the above exception, another exception occurred:

OSError Traceback (most recent call last)
/usr/local/lib/python3.7/dist-packages/transformers/configuration_utils.py in get_config_dict(cls, pretrained_model_name_or_path, **kwargs)
    516 f"- or '{pretrained_model_name_or_path}' is the correct path to a directory containing a {CONFIG_NAME} file\n\n"
    517 )
--> 518 raise EnvironmentError(msg)
    519
    520 except json.JSONDecodeError:

OSError: Can’t load config for ‘bert-base-uncased’. Make sure that:

- ‘bert-base-uncased’ is a correct model identifier listed on ‘https://huggingface.co/models’

- or ‘bert-base-uncased’ is the correct path to a directory containing a config.json file
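The OSError message above hides the real cause: the traceback shows it was raised while handling the HTTPError 403. A minimal sketch, using only standard Python exception chaining, of how to recover the underlying error when the wrapper message is misleading. `root_cause`, `FakeHTTPError` and `load_config_stub` are hypothetical names for illustration and are not part of transformers; the stub only imitates the re-raise pattern visible in the traceback.

```python
class FakeHTTPError(Exception):
    """Stand-in for the HTTP error raised by the download layer (illustrative only)."""


def root_cause(exc: BaseException) -> BaseException:
    """Follow __cause__ / __context__ links back to the first exception raised."""
    seen = set()
    while id(exc) not in seen:
        seen.add(id(exc))
        nxt = exc.__cause__ or exc.__context__
        if nxt is None:
            break
        exc = nxt
    return exc


def load_config_stub():
    # Imitates the library: a low-level error is caught and re-raised as
    # EnvironmentError (an alias of OSError) with a generic message.
    try:
        raise FakeHTTPError("403 Client Error: Forbidden")
    except Exception:
        raise EnvironmentError("Can't load config for 'bert-base-uncased'")


def demo() -> str:
    try:
        load_config_stub()
    except OSError as err:
        cause = root_cause(err)
        return f"{type(cause).__name__}: {cause}"
    return "no error"
```

In a real session the same helper could be applied to the OSError caught around `from_pretrained`, so the 403 is reported instead of the generic "Make sure that" hint.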

About this issue

- State: closed

- Created 3 years ago

- Reactions: 1

- Comments: 21 (5 by maintainers)

Commits related to this issue

- the use_auth_token has not been set up early enough in the model_kwargs. Fixes #12941 — committed to bennimmo/transformers by bennimmo 3 years ago

- the use_auth_token has not been set up early enough in the model_kwargs. Fixes #12941 (#13205) — committed to huggingface/transformers by bennimmo 3 years ago

- Updating transformers Accessing this fix https://github.com/huggingface/transformers/pull/13205 After this error https://github.com/huggingface/transformers/issues/12941 was stopping pretrained path ... — committed to sabinevidal/kindly by sabinevidal 3 years ago

Just ask chatGPT LOL…😂😂

Still not okay online, but I managed to do it locally

git clone https://huggingface.co/bert-base-uncased

# model = AutoModelWithHeads.from_pretrained("bert-base-uncased")
model = AutoModelWithHeads.from_pretrained(BERT_LOCAL_PATH, local_files_only=True)

# tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")
tokenizer = AutoTokenizer.from_pretrained(BERT_LOCAL_PATH, local_files_only=True)

adapter_name = model2.load_adapter(localpath, config=config, model_name=BERT_LOCAL_PATH)
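Before loading from the cloned directory, it can help to confirm that the clone actually contains the config.json that the error message asks for. The following is a rough sketch under that assumption; `has_local_config` and `load_local_config` are hypothetical helper names, and the example uses `AutoConfig` from plain transformers rather than the adapter classes above.

```python
from pathlib import Path


def has_local_config(model_dir: str) -> bool:
    """Return True if model_dir contains a config.json file."""
    return (Path(model_dir) / "config.json").is_file()


def load_local_config(model_dir: str):
    """Load the config from a local clone only, without any network access."""
    if not has_local_config(model_dir):
        raise FileNotFoundError(
            f"No config.json in {model_dir!r}; check that the git clone completed"
        )
    # Imported lazily so the directory check works even without transformers installed.
    from transformers import AutoConfig

    return AutoConfig.from_pretrained(model_dir, local_files_only=True)
```

Failing early with a FileNotFoundError makes an incomplete clone easier to tell apart from the network error discussed in this thread.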

@VRDJ Go to the ChatGPT website and paste your error into the chat box; 99% of the time you will get your solution there.

With additional testing, I’ve found that this issue only occurs with adapter-transformers, the AdapterHub.ml modified version of the transformers module. With the HuggingFace module, we can pull pretrained weights without issue.

Using adapter-transformers this is now working again from Google Colab, but is still failing locally and from servers running in AWS. Interestingly, with adapter-transformers I get a 403 even if I try to load a nonexistent model (e.g. fake-model-that-should-fail). I would expect this to fail with a 401, as there is no corresponding config.json on huggingface.co. The fact that it fails with a 403 seems to indicate that something in front of the web host is rejecting the request before the web host has a chance to respond with a not found error.
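The comparison described above (a real model versus a nonexistent one) can be reproduced outside the library with the standard library alone. This is only a sketch: `probe_status` and `compare` are hypothetical helpers, the URL for the fake model is assumed to follow the same pattern as the real one, and the interpretation strings simply restate the reasoning in the comment above.

```python
import urllib.error
import urllib.request


def probe_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a GET on url without raising on 4xx/5xx."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code


def compare(real_status: int, fake_status: int) -> str:
    """Interpret the status codes for an existing and a nonexistent model."""
    if real_status == 200:
        return "reachable"
    if real_status == fake_status == 403:
        # Same rejection whether or not the model exists: the request is
        # probably refused before the host looks the file up.
        return "blocked before lookup"
    return "inconclusive"


if __name__ == "__main__":
    base = "https://huggingface.co/{}/resolve/main/config.json"
    real = probe_status(base.format("bert-base-uncased"))
    # Assumed URL pattern for a model that does not exist.
    fake = probe_status(base.format("fake-model-that-should-fail"))
    print(real, fake, compare(real, fake))
```

Running it from each affected environment (local machine, AWS, Colab) would show whether the 403 depends on where the request comes from.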

Hello, I had the same problem when using transformers - pipeline in the aws-sagemaker notebook.

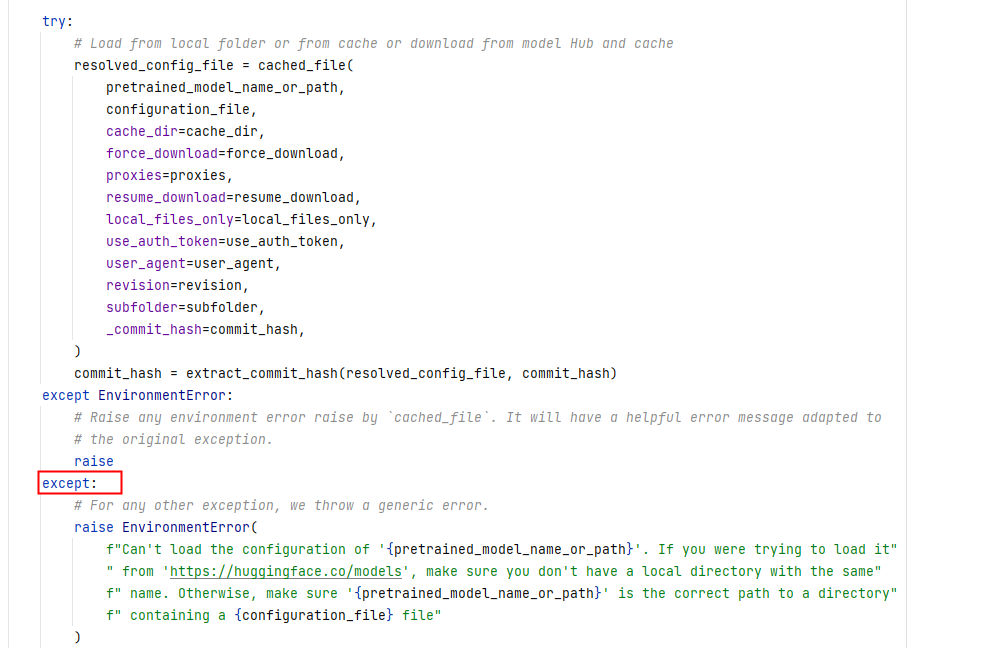

At first I thought it was a version or network problem, but some local tests showed that guess was wrong. So I debugged the source code and found that this code re-raises any error as an EnvironmentError. From experience, I solved it by running these pip commands:

!pip install --upgrade jupyter

!pip install --upgrade ipywidgets

You can try this if you hit the problem in an AWS notebook or Colab!

Your token for use_auth_token is not the same as your API token. The easiest way to get it is to log in with !huggingface-cli login and then just pass use_auth_token=True.

@sgugger Hi, it is still happening now. Not just to me, but to many people I know. I can access the config file from a browser, but not through the code. Thanks