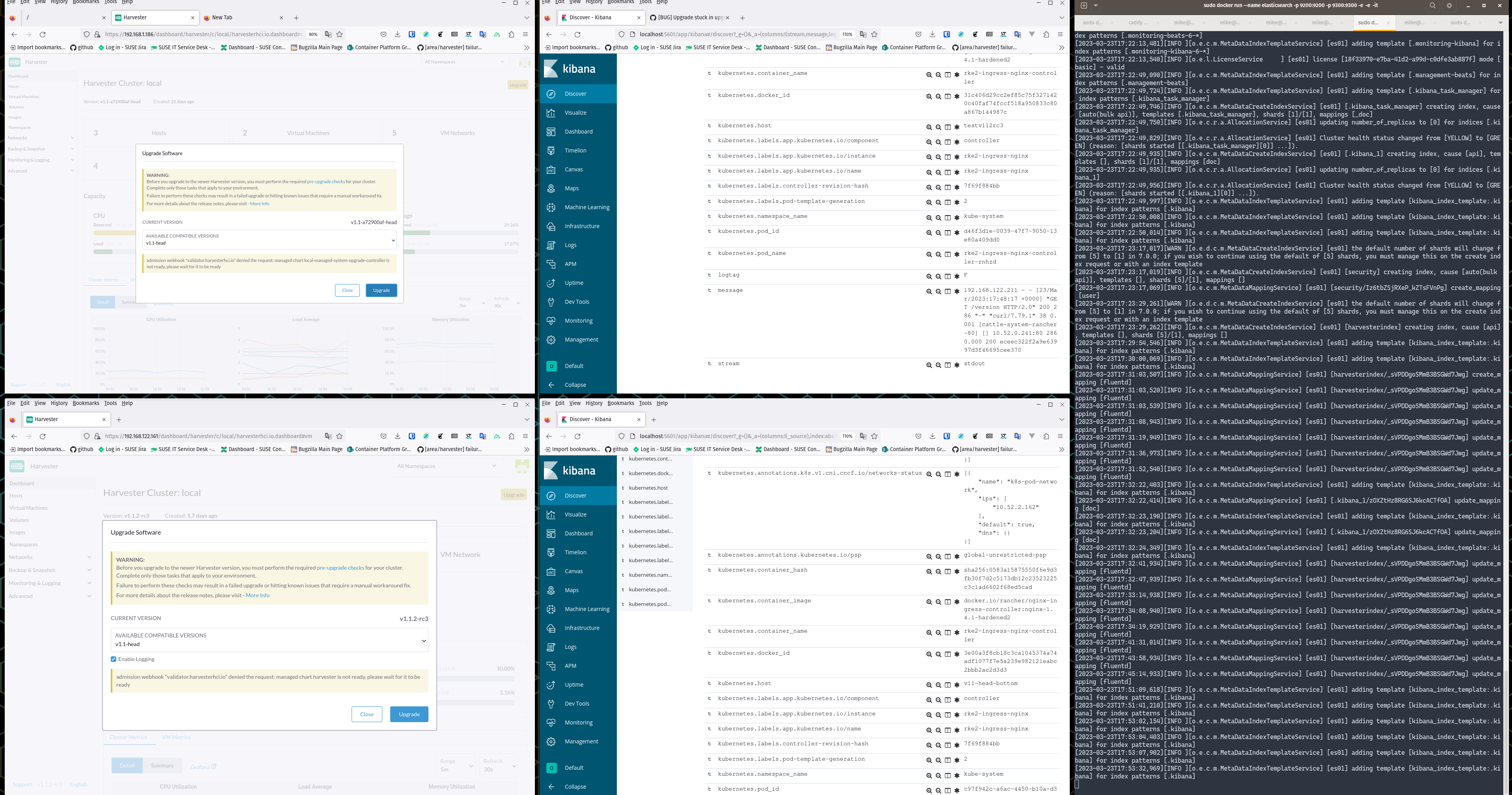

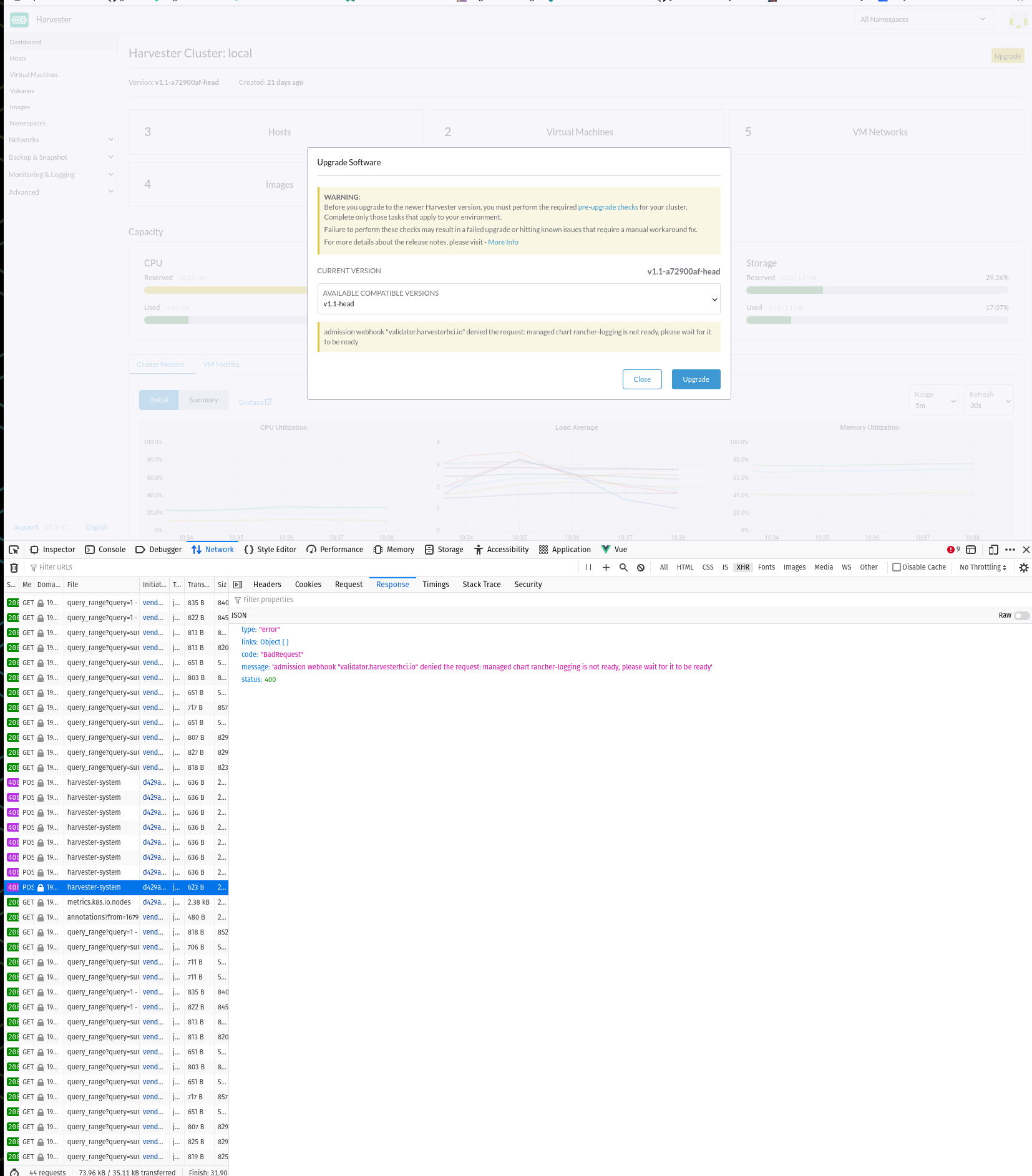

harvester: [BUG] Cannot Upgrade Cluster When ClusterOutput & ClusterFlow Are Configured

Describe the bug

Running into an issue on either of these clusters:

- v1.1.2-rc3 (1-node cluster)

- v1.1-head (v1.1-a72900af-head) (3-node bare-metal cluster)

When creating an upgrade version.yaml like the following (v1.1-head build from 03/23/23):

apiVersion: harvesterhci.io/v1beta1
kind: Version
metadata:
  name: v1.1-head
  namespace: harvester-system
spec:
  isoChecksum: 8167dfc265353bb367a1b73ca57904da9ea3157b2cbf91ebe81c2ffa9591b7d7de5c6f902e427f8e94cf2d0b2fcf61298dc7b3e34498dff10455656d682575d8
  isoURL: http://192.168.1.164:2015/harvester-v1.1-amd64.iso
  releaseDate: '20230322'

(The file server is reachable from both clusters.) The upgrade does not proceed and fails with this error:

admission webhook "validator.harvesterhci.io" denied the request: managed chart harvester is not ready, please wait for it to be ready
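The isoChecksum in the Version manifest above appears to be a SHA-512 digest (it is 128 hex characters) of the ISO served at isoURL. For the file-server step, a minimal POSIX shell sketch that generates such a manifest from a local copy of the ISO might look like this. The function name make_version_yaml is hypothetical, it assumes sha512sum (GNU coreutils) is installed, and it only prints the manifest without contacting the cluster.

```shell
#!/bin/sh
# Sketch only: make_version_yaml is a hypothetical helper, not a Harvester tool.
# Assumes sha512sum (GNU coreutils) is on PATH.

# make_version_yaml NAME ISO_PATH ISO_URL RELEASE_DATE
# Prints a Version manifest whose isoChecksum is the SHA-512 digest of the
# local file ISO_PATH. Returns non-zero if ISO_PATH cannot be read.
make_version_yaml() {
  local name iso url release_date sum_out checksum
  name="$1"
  iso="$2"
  url="$3"
  release_date="$4"
  # Feed the file on stdin so a missing file makes the substitution fail.
  sum_out=$(sha512sum < "$iso") || return 1
  # sha512sum prints "<digest>  -" for stdin; keep only the digest.
  checksum=${sum_out%% *}
  cat <<EOF
apiVersion: harvesterhci.io/v1beta1
kind: Version
metadata:
  name: $name
  namespace: harvester-system
spec:
  isoChecksum: $checksum
  isoURL: $url
  releaseDate: '$release_date'
EOF
}
```

For example, `make_version_yaml v1.1-head ./harvester-v1.1-amd64.iso http://192.168.1.164:2015/harvester-v1.1-amd64.iso 20230322 > version.yaml` would produce a manifest of the same shape as the one in this report, with the checksum computed from the local file rather than pasted by hand.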

To Reproduce

Prerequisites:

- Run an Elasticsearch instance that the cluster can reach:

sudo sysctl -w vm.max_map_count=262144
sudo docker run --name elasticsearch -p 9200:9200 -p 9300:9300 -e xpack.security.enabled=false -e node.name=es01 -it docker.elastic.co/elasticsearch/elasticsearch:6.8.23

- Spin up Kibana for easier viewing:

sudo docker run --name kibana --link elasticsearch:es_alias --env "ELASTICSEARCH_URL=http://es_alias:9200" -p 5601:5601 -it docker.elastic.co/kibana/kibana:6.8.23

Elasticsearch prerequisites:

- Create an Elasticsearch user (replace localhost as needed):

curl --location --request POST 'http://localhost:9200/security/user/harvesteruser' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "password": "harvestertesting",
    "enabled": true,
    "roles": ["superuser", "kibana_admin"],
    "full_name": "Harvesterrr Testingggguser",
    "email": "harvestertesting@harvestertesting.com"
  }'

- Verify the Elasticsearch user (replace localhost as needed):

curl --location --request GET 'localhost:9200/security/user/harvesteruser'

- Create the Elasticsearch index (replace localhost as needed):

curl --location --request PUT 'localhost:9200/harvesterindex?pretty'

Steps to reproduce the behavior:

- Under Advanced -> Secrets, create a Secret in the cattle-logging namespace that holds the password for harvesteruser.

- Create a ClusterOutput that points to the Elasticsearch instance, uses the Secret above for the password, and has the TLS checkbox cleared.

- Create a ClusterFlow, select Logging, and point it to the ClusterOutput.

- Have a file server that hosts the v1.1-head ISO, plus a version.yaml with the matching checksum.

- As a precaution, apply the ‘pre-flight’ adjustment:

$ cat > /tmp/fix.yaml <<EOF
spec:
  values:
    systemUpgradeJobActiveDeadlineSeconds: "3600"
EOF

$ kubectl patch managedcharts.management.cattle.io local-managed-system-upgrade-controller --namespace fleet-local --patch-file=/tmp/fix.yaml --type merge

$ kubectl -n cattle-system rollout restart deploy/system-upgrade-controller

- Then start the upgrade.

- The admission webhook error shown above, which appears to be related to the logging configuration, is returned.
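Since the webhook rejects the upgrade while the harvester managed chart is not ready, it can help to check that condition before firing off the upgrade. The POSIX shell sketch below assumes, without confirmation from this issue, that readiness can be judged by comparing status.summary.desiredReady and status.summary.ready on the ManagedChart named harvester in the fleet-local namespace. The helper names are hypothetical, and only harvester_chart_ready talks to the cluster (through kubectl). It only surfaces the condition; it does not fix whatever keeps the chart from becoming ready.

```shell
#!/bin/sh
# Sketch only: the helper names below are hypothetical. The readiness rule
# (status.summary.desiredReady == status.summary.ready on the "harvester"
# ManagedChart in fleet-local) is an assumption, not confirmed by this issue.

# counts_match DESIRED READY: succeed only if both are non-empty and equal.
counts_match() {
  [ -n "$1" ] && [ "$1" = "$2" ]
}

# wait_until TIMEOUT_SECONDS INTERVAL_SECONDS CMD [ARGS...]
# Re-runs CMD every INTERVAL_SECONDS until it succeeds (status 0), or gives
# up with status 1 once TIMEOUT_SECONDS have elapsed.
wait_until() {
  local timeout_s interval_s deadline
  timeout_s="$1"
  interval_s="$2"
  shift 2
  deadline=$(( $(date +%s) + timeout_s ))
  until "$@"; do
    if [ "$(date +%s)" -ge "$deadline" ]; then
      return 1
    fi
    sleep "$interval_s"
  done
}

# harvester_chart_ready: reads the two counts from the cluster via kubectl.
harvester_chart_ready() {
  local desired ready
  desired=$(kubectl -n fleet-local get managedcharts.management.cattle.io harvester \
    -o jsonpath='{.status.summary.desiredReady}') || return 1
  ready=$(kubectl -n fleet-local get managedcharts.management.cattle.io harvester \
    -o jsonpath='{.status.summary.ready}') || return 1
  counts_match "$desired" "$ready"
}
```

For example, `wait_until 600 10 harvester_chart_ready || echo "harvester ManagedChart still not ready"` would poll for up to ten minutes. If the assumed summary fields are absent, counts_match fails on the empty value, so the wait times out instead of reporting a false ready.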

Expected behavior

The upgrade should be allowed to run.

Support bundle

- v1.1.2-rc3 cluster: supportbundle_fe8a3fc4-3594-434c-a956-d35207f79079_2023-03-23T18-11-56Z.zip

- v1.1-a72900af-head cluster: supportbundle_a8d32ff1-0769-4a05-85be-ebe21ffef525_2023-03-23T18-12-16Z.zip

Environment

- Harvester ISO version: v1.1.2-rc3 & v1.1-a72900af-head

- Underlying Infrastructure: bare-metal & qemu/kvm

Additional context

About this issue

- State: open

- Created a year ago

- Comments: 19 (13 by maintainers)

Moving this issue to v1.2.1 to add a note: modifying the default KubeVirt config is not supported at this stage, and because the KubeVirt patch is already included in Harvester v1.2.0, users who applied it manually will need to revert it before upgrading.

This happens when the KubeVirt image is patched manually, as in https://github.com/harvester/harvester/issues/3715#issuecomment-1481965453. @Vicente-Cheng, we need a way to handle this case.