weave: weave-net hangs - leaking IP addresses?

What you expected to happen?

/status etc to work

What happened?

/status (and all other endpoints) hang

Issuance of IP addresses to pods also seemed to stop on the affected nodes

How to reproduce it?

Unknown. Happened on multiple nodes in our cluster

Anything else we need to know?

Versions:

$ weave --local version

weave 2.5.0

$ docker version

$ uname -a

Linux kubernetes-kubernetes-cr0-17-1547767704 4.14.67-coreos #1 SMP Mon Sep 10 23:14:26 UTC 2018 x86_64 Linux

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.10", GitCommit:"098570796b32895c38a9a1c9286425fb1ececa18", GitTreeState:"clean", BuildDate:"2018-08-02T17:19:54Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.10", GitCommit:"098570796b32895c38a9a1c9286425fb1ececa18", GitTreeState:"clean", BuildDate:"2018-08-02T17:11:51Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}

Logs:

or, if using Kubernetes:

$ kubectl logs -n kube-system <weave-net-pod> weave

(After triggering a kill -ABRT)

weave-logs-1548156065-weave-net-4mdf6.txt

Network:

$ ip route

$ ip -4 -o addr

$ sudo iptables-save

About this issue

- State: closed

- Created 5 years ago

- Reactions: 1

- Comments: 29 (15 by maintainers)

Yes, that worked. If I return the error from the CNI plugin, then the kubelet retry fixes the leak. Kubelet does seem to be stateful: even if I restart the kubelet, it re-attempts the CNI DEL. It seems we could rely on kubelet instead of implementing a PruneOwned that works for non-Docker runtimes.
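To make the difference concrete, here is a minimal simulation of the two behaviours: a DEL that swallows the IPAM failure versus one that returns it so the caller retries. This is only a sketch, not Weave's or kubelet's actual code. FakeIpam, cni_del, delete_pod, the pod id and the address 10.32.0.5 are all hypothetical names and values chosen for illustration, and the retry loop assumes the IPAM daemon has recovered by the next attempt.

```python
class IpamUnavailable(Exception):
    """Raised when the (simulated) IPAM daemon does not answer."""


class FakeIpam:
    """Toy stand-in for an IP allocator; not Weave's real IPAM."""

    def __init__(self):
        self.owned = {}         # container id -> IP address
        self.responsive = True  # False simulates a hung daemon

    def allocate(self, container_id, ip):
        self.owned[container_id] = ip

    def release(self, container_id):
        if not self.responsive:
            raise IpamUnavailable("IPAM did not respond")
        self.owned.pop(container_id, None)


def cni_del(ipam, container_id, propagate_errors):
    """Simulated CNI DEL: returns None on success, or the error."""
    try:
        ipam.release(container_id)
    except IpamUnavailable as err:
        if propagate_errors:
            return err  # the caller sees the failure and may retry
        return None     # failure swallowed: the caller believes DEL succeeded
    return None


def delete_pod(ipam, container_id, propagate_errors, max_attempts=3):
    """Simulated runtime: retries DEL only while the plugin reports an error."""
    for attempt in range(max_attempts):
        if cni_del(ipam, container_id, propagate_errors) is None:
            return attempt + 1
        ipam.responsive = True  # assumption: the daemon recovers before the retry
    return max_attempts


def leaked_after_delete(propagate_errors):
    """Return the container ids that still own an IP after the pod is deleted."""
    ipam = FakeIpam()
    ipam.allocate("pod-a", "10.32.0.5")  # hypothetical pod and address
    ipam.responsive = False              # daemon is hung when the pod is deleted
    delete_pod(ipam, "pod-a", propagate_errors)
    return sorted(ipam.owned)
```

With propagate_errors=False the address stays allocated after the delete, which matches the leak reported here. With propagate_errors=True the second attempt releases it.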

I am able to easily reproduce the leaking IP scenario.

So I do

$ kubectl delete pods weave-net-bkxln -n kube-system; kubectl delete pods frontend-69859f6796-nq8d6

for the pods running on the same node. The kubelet and CNI interaction fails as expected, but there is no external attempt (from kubelet) to retry the CNI DEL command, so the IP is leaked.

So if the weave-net pod has crashed or is unresponsive, then IPs are leaked if pods are deleted.

For info, we’ve ended up writing and deploying this: https://github.com/ocadotechnology/weave-wiper

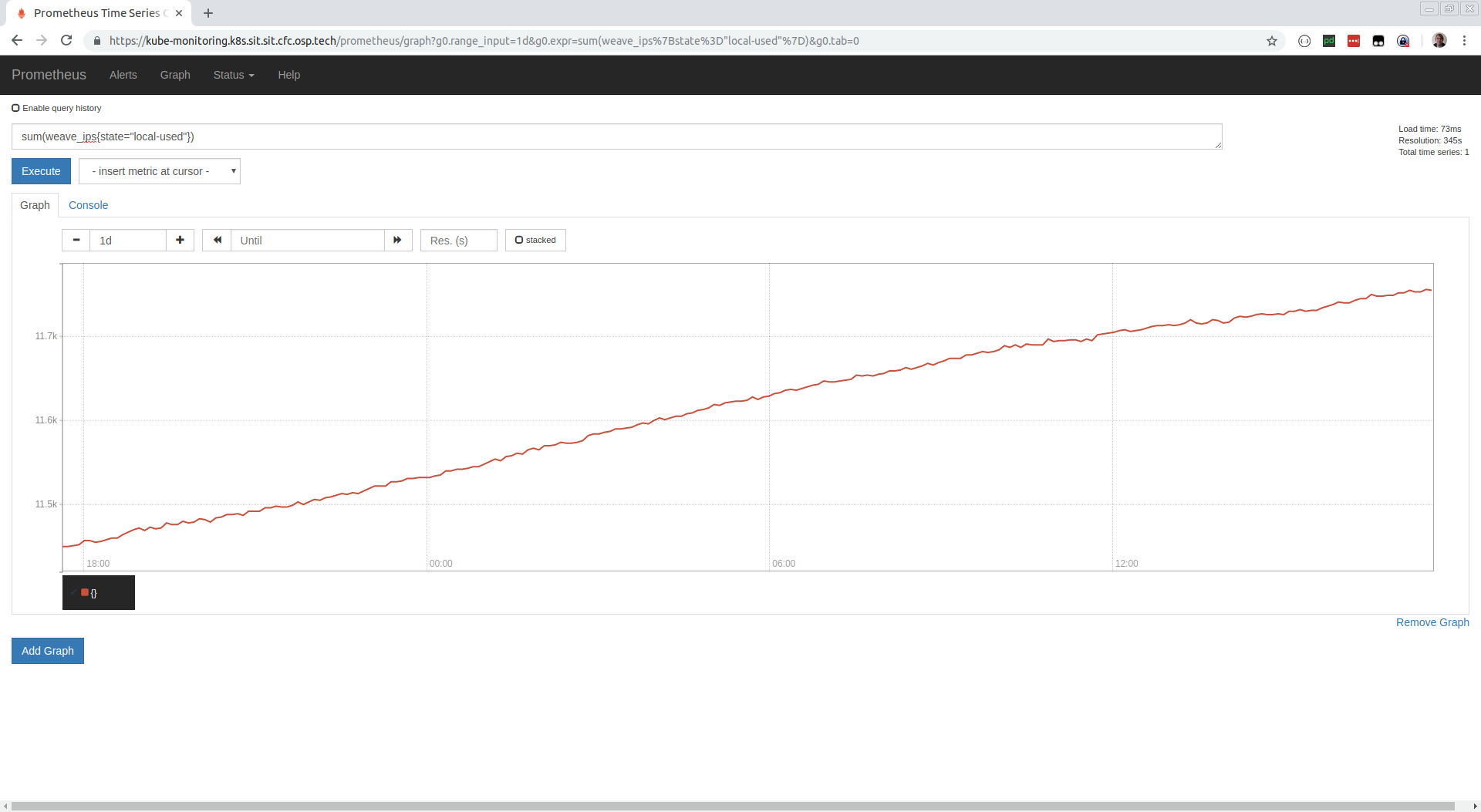

The IP address leak is continuing. We're leaking around 300 addresses per day.