VictoriaMetrics: OOM-killed vmstorage caused by expensive queries

Version: v1.27.3

VM is used as remote storage for Prometheus in k8s.

• the setup is as follows: vmselect x1 (8Gi), vminsert x1 (2Gi), vmstorage x1 (16Gi)

• the number of active time series (`vm_cache_entries{type="storage/hour_metric_ids"}`) is ~3M

• max_allowed_memory is calculated properly for containers
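For context on the memory limits above: VictoriaMetrics components cap their caches at a fraction of the memory they detect, controlled by the `-memory.allowedPercent` flag (60 by default). The sketch below applies that arithmetic to the container limits from this setup. It assumes the detected memory equals the container limit, and `allowed_memory_bytes` is a hypothetical helper, not a VictoriaMetrics function.

```python
GIB = 1 << 30


def allowed_memory_bytes(limit_bytes: int, allowed_percent: float = 60.0) -> int:
    """Hypothetical helper: cache budget = detected memory * allowedPercent / 100.

    Assumption: the detected memory equals the container memory limit.
    """
    return int(limit_bytes * allowed_percent / 100)


# Container memory limits from the setup described in this issue.
limits = {"vmselect": 8 * GIB, "vminsert": 2 * GIB, "vmstorage": 16 * GIB}

for name, limit in limits.items():
    budget = allowed_memory_bytes(limit)
    print(f"{name:<9} limit={limit / GIB:4.1f}Gi  cache budget={budget / GIB:4.1f}Gi")
```

Under this assumption, vmstorage caches could grow to about 9.6Gi. Query processing and other allocations have to fit into the remaining memory, so a heavy query can still push the process past its 16Gi limit even when the caches stay within their budget.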

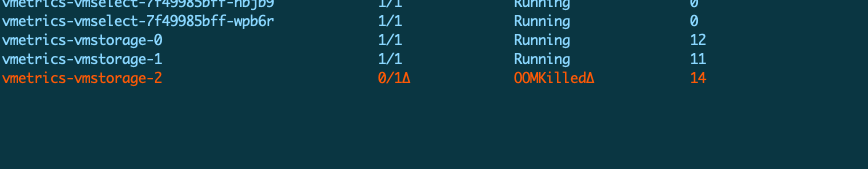

Queries over time ranges longer than 2d cause OOMs in vmstorage, which in turn puts vminsert and vmselect into crash loops.

Here is an example query:

```
sum(rate(http_response_time_seconds_sum{job=~"|skipper-prod|vesld",env=~".*",path!~".+/(health|healthcheck)",namespace=~"kube-system|prod",cluster=~".*"}[10m])) by (job, env)
/
sum(rate(http_response_time_seconds_count{job=~"skipper-prod|vesld",env=~".*",path!~".+/(health|healthcheck)",namespace=~"kube-system|prod",cluster=~".*"}[10m])) by (job, env)
```
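A rough way to see why the time range matters: for a range query, each selector in the expression has to read roughly every raw sample of every matching series over the queried range, extended backwards by the `[10m]` lookbehind of `rate()`. The sketch below does that arithmetic. The series count, the 30s scrape interval and the 16 bytes per decoded sample (an int64 timestamp plus a float64 value) are assumptions, not numbers from this issue, and `estimate_raw_samples` is a hypothetical helper.

```python
BYTES_PER_SAMPLE = 16  # assumption: int64 timestamp + float64 value once decoded


def estimate_raw_samples(series: int, range_s: int, window_s: int, scrape_interval_s: int) -> int:
    """Rough upper bound on the raw samples a single selector reads.

    Every matching series contributes about one sample per scrape interval
    over the queried range plus the rate() lookbehind window.
    """
    return series * (range_s + window_s) // scrape_interval_s


# Hypothetical inputs: the issue does not state these numbers.
SERIES_PER_SELECTOR = 10_000
SCRAPE_INTERVAL_S = 30
WINDOW_S = 10 * 60  # the [10m] window used in the query

for days in (1, 2, 7):
    per_selector = estimate_raw_samples(SERIES_PER_SELECTOR, days * 86_400, WINDOW_S, SCRAPE_INTERVAL_S)
    total = 2 * per_selector  # one selector for _sum, one for _count
    print(f"{days}d: {total:,} samples, ~{total * BYTES_PER_SAMPLE / 2**30:.1f} GiB decoded")
```

Under these assumptions the estimate grows linearly with the range, so a 7d query reads roughly 3.5 times as many samples as a 2d one.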

@tenmozes @valyala @hagen1778: we have already been in touch with you folks about this.

About this issue

- State: closed

- Created 5 years ago

- Comments: 16

Commits related to this issue

- app/vminsert/netstorage: make sure the conn exists before closing it in storageNode.closeBrokenConn The conn can be missing or already closed during the call to storageNode.closeBrokenConn. Prevent `... — committed to VictoriaMetrics/VictoriaMetrics by valyala 5 years ago

- lib/mergeset: reduce the maximum number of cached blocks, since there are reports on OOMs due to too big caches Updates https://github.com/VictoriaMetrics/VictoriaMetrics/issues/189 Updates https://g... — committed to VictoriaMetrics/VictoriaMetrics by valyala 5 years ago

FYI, the following patch reduced vmselect memory usage when processing heavy queries: 56dff57f77e19b342b8b4d5f4795a4b9fb30412c. The upcoming release will contain this patch. As for vmstorage nodes, their memory usage for heavy queries should also be lower in the upcoming release (v1.28.0) compared to v1.27.3, thanks to a reworked and optimized path for looking up time series by label filters.
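To illustrate what looking up time series by label filters involves, here is a toy inverted index that maps each (label name, label value) pair to the set of series IDs carrying it and resolves a selector by intersecting and subtracting those sets. This is a generic sketch, not the VictoriaMetrics indexdb code; the `InvertedIndex` class and its methods are hypothetical, and Python's `re` stands in for the RE2 syntax that PromQL/MetricsQL regex matchers use.

```python
import re
from collections import defaultdict


class InvertedIndex:
    """Toy label index mapping (label name, label value) to series IDs."""

    def __init__(self):
        self.postings = defaultdict(set)  # (name, value) -> series IDs
        self.values = defaultdict(set)    # name -> values seen for that label
        self.all_ids = set()

    def add(self, series_id, labels):
        self.all_ids.add(series_id)
        for name, value in labels.items():
            self.postings[(name, value)].add(series_id)
            self.values[name].add(value)

    def _matching_ids(self, name, predicate):
        """IDs whose value for `name` satisfies predicate; a missing label counts as ""."""
        ids, with_label = set(), set()
        for value in self.values.get(name, ()):
            posting = self.postings[(name, value)]
            with_label |= posting
            if predicate(value):
                ids |= posting
        if predicate(""):
            ids |= self.all_ids - with_label
        return ids

    def select(self, filters):
        """Return IDs matching all (name, op, value) filters; op is =, !=, =~ or !~."""
        result = set(self.all_ids)
        for name, op, value in filters:
            if op in ("=", "!="):
                pred = lambda v, want=value: v == want
            elif op in ("=~", "!~"):
                # Prometheus-style regex matchers are fully anchored.
                pred = lambda v, rx=re.compile(value): rx.fullmatch(v) is not None
            else:
                raise ValueError(f"unsupported operator {op!r}")
            matched = self._matching_ids(name, pred)
            if op.startswith("!"):
                result -= matched
            else:
                result &= matched
        return result


idx = InvertedIndex()
idx.add(1, {"job": "skipper-prod", "path": "/api/users"})
idx.add(2, {"job": "skipper-prod", "path": "/svc/health"})
idx.add(3, {"job": "vesld", "path": "/api/orders"})
idx.add(4, {"job": "other", "path": "/api/x"})
print(sorted(idx.select([("job", "=~", "skipper-prod|vesld"),
                         ("path", "!~", ".+/(health|healthcheck)")])))
# → [1, 3]
```

In this toy every filter walks all known values of its label, so a negative regex on a high-cardinality label such as `path` costs time proportional to the number of distinct values. The comment above does not say exactly how v1.28.0 changed the real lookup path.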