VictoriaMetrics: Error in vmstorage regarding big packetsize

Describe the bug

vmstorage logs errors complaining about packet size:

`app/vmstorage/transport/server.go:160 cannot process vminsert conn from 10.20.11.94:40018: cannot read packet with size 104176512: unexpected EOF`

I assume this also causes data to be dropped.

Expected behavior

Such errors should not occur in vmstorage, and vminsert should write all the data to vmstorage without errors.

Version

Docker image tag: 1.26.0-cluster

Additional context

- We are running clustered VictoriaMetrics in GKE.
- vmstorage data is stored on an HDD persistent disk.
- Each component runs two pods.
- Two Prometheus pods (HA) write data to this VictoriaMetrics cluster.
- CPU and RAM:
  - vmstorage: 3 CPU, 15 Gi memory × 2
  - vmselect: 1 CPU, 10 Gi memory × 2
  - vminsert: 4 CPU, 6 Gi memory × 2

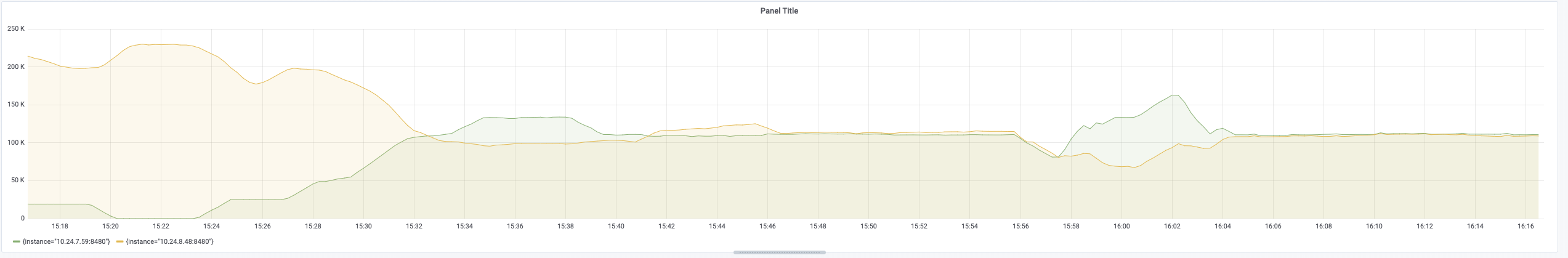

- `sum(rate(vm_rows_inserted_total[5m])) by (instance)`:
  - `{instance="10.24.7.59:8480"}`: 111152.98245614035
  - `{instance="10.24.8.48:8480"}`: 111287.36842105263
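Summing the two per-instance rates from the query gives the total rate of rows inserted across both vminsert instances over the 5-minute window:

$$
R_{\text{total}} = 111152.98 + 111287.37 \approx 222440.35\ \text{rows/s}
$$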

Please let me know if additional information is required.

About this issue


- State: closed

- Created 5 years ago

- Comments: 17

Commits related to this issue

- app/vminsert/netstorage: send per-storageNode bufs to vmstorage nodes in parallel This should improve the maximum ingestion throughput. Updates https://github.com/VictoriaMetrics/VictoriaMetrics/iss... — committed to VictoriaMetrics/VictoriaMetrics by valyala 5 years ago

- all: report the number of bytes read on io.ReadFull error This should simplify error investigation similar to https://github.com/VictoriaMetrics/VictoriaMetrics/issues/175 — committed to VictoriaMetrics/VictoriaMetrics by valyala 5 years ago

- app/vminsert/netstorage: log network errors when sending data to vmstorage nodes Updates https://github.com/VictoriaMetrics/VictoriaMetrics/issues/175 — committed to VictoriaMetrics/VictoriaMetrics by valyala 5 years ago

- app/vminsert/netstorage: mention the data size that cannot be sent to vmstorage Updates https://github.com/VictoriaMetrics/VictoriaMetrics/issues/175 — committed to VictoriaMetrics/VictoriaMetrics by valyala 5 years ago

- README.md: refer to comment about ingestion rate scalability Updates https://github.com/VictoriaMetrics/VictoriaMetrics/issues/175 — committed to VictoriaMetrics/VictoriaMetrics by valyala 5 years ago

Thanks for the update! Leaving the issue open until possible solutions for this issue are documented in README.md for the cluster version.

The solutions:

- Pass the `-rpc.disableCompression` flag to vminsert.

These workarounds are needed because vminsert currently establishes a single connection per configured vmstorage node, and this connection is served by a single CPU core, which can be saturated by high volumes of data. In the future, the number of connections between vminsert and vmstorage nodes may be adjusted automatically depending on the ingestion rate.

vminsert keeps trying to send data to an unhealthy vmstorage node in order to determine when the node becomes healthy again.