fastapi: Gunicorn Workers Hang and Consume Memory Forever

Describe the bug

I have deployed a FastAPI app that queries the database and returns the results. I made sure to close the DB connection and so on. I’m running gunicorn with this command:

gunicorn -w 8 -k uvicorn.workers.UvicornH11Worker -b 0.0.0.0 app:app --timeout 10

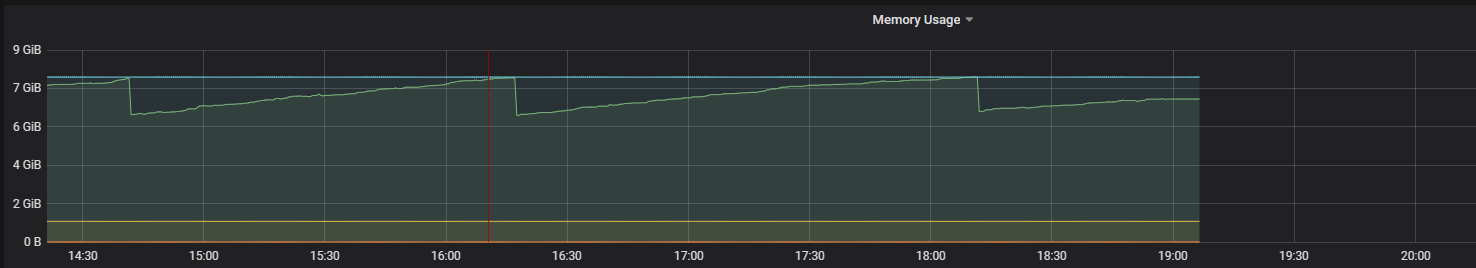

After exposing it to the web, I ran a load test that makes 30 to 40 requests in parallel to the FastAPI app, and this is where the problem starts. Watching htop in the meantime, I see that RAM usage keeps growing; it seems no task is killed after completing its job. I then checked the number of tasks, and the same goes for them: it seems the gunicorn workers do not get killed. After some time RAM usage reaches its maximum and the app starts to throw errors. So I killed the gunicorn app, but the processes spawned by the main gunicorn process did not get killed and were still using all the memory.

Environment:

- OS: Ubuntu 18.04
- FastAPI version: 0.38.1
- Python version: 3.7.4

About this issue

- State: closed

- Created 5 years ago

- Comments: 63 (6 by maintainers)

Hi everyone,

I just read the source code of FastAPI and tested it myself. First of all, this should not be a memory leak issue; the problem is that if your machine has a lot of CPUs, it will occupy a lot of memory.

The only difference is in the `request_response()` method in `starlette/routing.py`. If your REST endpoint is not async, it will run in `loop.run_in_executor`, but Starlette does not specify the executor there, so the default thread pool size is `os.cpu_count() * 5` (the default before Python 3.8). My test machine has 40 CPUs, so I should have 200 threads in the pool. After each request, the objects in these threads are not released unless the thread is reused by the next request, which occupies a lot of memory, but in the end it’s not a memory leak. Below is my test code if you want to reproduce it.

Even though it’s not a memory leak, I still think it’s not a good implementation, because it’s sensitive to your CPU count, and when you run a large deep learning model in FastAPI you will find it occupies a ton of memory. So I suggest making the thread pool size configurable.
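A minimal, standard-library sketch of what a configurable pool could look like. It assumes a Starlette version whose `run_in_threadpool` calls `loop.run_in_executor(None, ...)`, as described above, so that replacing the event loop's default executor caps the number of worker threads. `blocking_handler` is a hypothetical stand-in for a sync endpoint and the limit of 4 is arbitrary. Newer Starlette releases delegate to AnyIO instead, where the comparable setting is the default thread limiter (`anyio.to_thread.current_default_thread_limiter()`), so treat this as an illustration of the idea rather than a drop-in fix.

```python
import asyncio
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_handler(i: int) -> str:
    """Hypothetical stand-in for a synchronous (`def`) endpoint body."""
    time.sleep(0.01)  # simulate blocking work such as a DB query
    return threading.current_thread().name


async def serve(max_threads: int = 4, requests: int = 20) -> set:
    loop = asyncio.get_running_loop()
    # Swap in a bounded default executor: every run_in_executor(None, ...)
    # call on this loop now shares at most `max_threads` worker threads.
    loop.set_default_executor(ThreadPoolExecutor(max_workers=max_threads))
    names = await asyncio.gather(
        *(loop.run_in_executor(None, blocking_handler, i) for i in range(requests))
    )
    return set(names)


thread_names = asyncio.run(serve())
print(f"{len(thread_names)} distinct worker threads served 20 calls")
```

In a real app, the `set_default_executor` call would have to run on the loop that serves requests (for example in a startup hook); that placement is an assumption about your setup.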

If you are interested in how I went through the source code, please refer to my blog and give it a like (https://www.jianshu.com/p/e4595c48d091).

Sorry that I only write blogs in Chinese 😃

Current Solution

Python 3.9 already limits the number of threads in the default thread pool (since Python 3.8 the default is min(32, os.cpu_count() + 4)).

If 32 threads is not too many for your program, you can upgrade Python to 3.9 to avoid this issue.
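The numbers above follow from the documented default `max_workers` of `concurrent.futures.ThreadPoolExecutor`: five threads per CPU on Python 3.5 to 3.7, and `min(32, cpu_count + 4)` from Python 3.8 on (3.13 counts CPUs with `os.process_cpu_count()`). The 3.8 change note in the docs also says the executor now reuses idle worker threads before starting new ones. The helper below only encodes that formula; `default_max_workers` is a hypothetical name, not a stdlib function.

```python
import os
import sys


def default_max_workers(cpu_count: int, version: tuple) -> int:
    """Default ThreadPoolExecutor size per the CPython docs (hypothetical helper)."""
    if version >= (3, 8):
        # Python 3.8+: capped at 32, so large machines no longer get huge pools
        return min(32, cpu_count + 4)
    # Python 3.5 to 3.7: five threads per CPU
    return cpu_count * 5


# The 40-CPU machine from the comment above
print(default_max_workers(40, (3, 7)))  # 200
print(default_max_workers(40, (3, 8)))  # 32

# This machine and interpreter
print(default_max_workers(os.cpu_count() or 1, sys.version_info[:2]))
```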

Alternatively, define your endpoint with async; the request will then run in the event loop, but throughput may be affected.
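To see what this choice changes, here is a standard-library sketch of where each kind of handler ends up running. `dispatch` is a hypothetical stand-in for the framework: it sends the `def` function to the default thread pool (as `run_in_threadpool` does) and awaits the `async def` one directly on the event-loop thread. That is why a blocking call inside an `async def` endpoint holds up every other request on that worker, which is the throughput cost mentioned above.

```python
import asyncio
import threading


def sync_endpoint() -> str:
    # A `def` endpoint: the framework hands this to a thread pool.
    return threading.current_thread().name


async def async_endpoint() -> str:
    # An `async def` endpoint: runs on the event-loop thread itself,
    # so any blocking call in here stalls all other requests.
    return threading.current_thread().name


async def dispatch() -> tuple:
    loop = asyncio.get_running_loop()
    in_pool = await loop.run_in_executor(None, sync_endpoint)
    on_loop = await async_endpoint()
    return in_pool, on_loop, threading.current_thread().name


in_pool, on_loop, loop_thread = asyncio.run(dispatch())
print("def endpoint ran on:", in_pool)
print("async def endpoint ran on:", on_loop)
```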

Some statistics for Python 3.7, Python 3.8, and async:

Hi all, I can reproduce the memory leak.

Memory usage rises to about 700 MB by request 1000.

Just for info: I run the same function under Flask, and the memory is constant (!), so for me there is something wrong with async…
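One way to turn "memory rises by request 1000" into a number is `tracemalloc`, which reports how much memory allocated through Python's allocators is still alive. The sketch below compares a hypothetical handler that keeps a reference to every payload with one that does not; both handlers and `traced_growth` are illustrative names. Note the limits: allocations made by C extensions outside Python's allocators are not counted, and a flat `tracemalloc` figure can coexist with a high RSS in htop, because CPython does not always hand freed memory back to the OS.

```python
import tracemalloc
from typing import Callable


def traced_growth(func: Callable[[], None], calls: int) -> int:
    """Bytes still allocated (per tracemalloc) after `calls` extra invocations."""
    tracemalloc.start()
    func()  # warm-up call so one-time caches are not counted as growth
    baseline, _ = tracemalloc.get_traced_memory()
    for _ in range(calls):
        func()
    current, _ = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return current - baseline


_cache = []


def leaky_handler() -> None:
    _cache.append(bytearray(10_000))  # hypothetical: keeps every payload alive


def clean_handler() -> None:
    bytearray(10_000)  # freed as soon as the call returns


print("leaky:", traced_growth(leaky_handler, 100))
print("clean:", traced_growth(clean_handler, 100))
```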

Hi, I have written a simple test to validate this issue. It seems that Python 3.8 fixes this problem.

Sample Code: https://github.com/kevchentw/fastapi-memory-leak

I still have this problem when using FastAPI with a router function that runs a model. The RAM keeps going up.

The solution here may be:

Update: RAM consumption changed after switching to Python 3.8.

I also found this problem: when I use gunicorn + Flask, memory increases fast, and my application on the k8s platform can handle 1000000 requests. How can I solve this problem?

I have noticed that too: under high load, memory is left allocated, but for single requests memory gets cleared up. I already tried making it async, but it does not deallocate the memory either.

Hmm, reproducing it in Starlette makes sense. I will reproduce the issue and open an issue on the Starlette repo. Thanks for the idea.

@wanaryytel

This is probably an issue with Starlette’s `run_in_threadpool`, or maybe even the Python `ThreadPoolExecutor`. If you port that endpoint to Starlette, I expect you’ll get the same behavior.

Recently the Starlette and uvicorn teams have been pretty good about addressing issues; if you can reproduce the memory leak in Starlette, I’d recommend creating an issue demonstrating it in the Starlette (and possibly uvicorn?) repos.

I still have this problem; memory does not get deallocated. My conditions:

- Python 3.8.9
- `["gunicorn", "-b", "0.0.0.0:8080", "-w", "3",'-k', 'uvicorn.workers.UvicornWorker', "palette.main:app", '--timeout', '0', "--graceful-timeout", "5", '--access-logfile', '-', '--error-logfile', '-', '--log-level', 'error']`

I don’t think this is solved, but it seems like we all found some workarounds. So I think it is up to you to close it, @tiangolo.

I’m seeing the same issue running `python:3.10.1-slim-buster` in k8s with `gunicorn -k uvicorn.workers.UvicornWorker app.api.server:app --workers 1 --bind 0.0.0.0:8000`.

I have solved this issue with the following settings:

@tiangolo would it be appropriate to close this issue as wont-fix? It seems like the memory leak bug arises from uvloop on Python versions older than 3.8, so it is unlikely to be fixed. Regardless, it is external to FastAPI.

Anecdotally, I have encountered memory leaks when mixing uvloop and code with a lot of C extensions in Python 3.8+, outside the context of a web service like FastAPI/uvicorn/gunicorn. In light of this, perhaps an example of how to run FastAPI without uvloop would be appropriate.
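Before switching loops, it can help to confirm which event loop a process is actually using. uvicorn's default `--loop auto` picks uvloop when it is installed, and `--loop asyncio` forces the standard-library loop. The sketch below only reports the module that defines the running loop's class; calling the hypothetical `loop_implementation()` helper inside an `async def` endpoint should show `uvloop` under uvloop and an `asyncio.*` module otherwise.

```python
import asyncio


def loop_implementation() -> str:
    """Name of the module that defines the running event loop's class."""
    loop = asyncio.get_running_loop()  # must be called from inside the loop
    return type(loop).__module__


async def report() -> str:
    # Stand-in for an endpoint that logs which loop is serving it
    return loop_implementation()


module = asyncio.run(report())
print("event loop provided by:", module)
```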

Great, it seems the issue was solved!

@MeteHanC may we close it now?

If anyone else is having other problems, please create a new issue so we can keep the conversation focused 🤓

Yeah, in this case I suggested it specifically because it looked like the function involved would be compatible with a subprocess call.

There is a ProcessPool interface similar to ThreadPool that you can use very similarly to how `run_in_threadpool` works in Starlette. I think that mostly solves the problems related to managing the worker processes. But yes, the arguments/return types need to be picklable, so there are many cases where you wouldn’t want to take this approach.

I would be happy to take a look at it @madkote if you get a simple reproducible example.
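On the ProcessPool idea: `loop.run_in_executor` also accepts a `concurrent.futures.ProcessPoolExecutor`, and that executor pickles the function, its arguments and its return value to move them between processes. A quick way to vet a candidate payload before going down that road is to try pickling it; `is_picklable` is a hypothetical helper, not part of any library.

```python
import pickle
import threading
from typing import Any


def is_picklable(obj: Any) -> bool:
    """True if `obj` can be pickled, i.e. could cross a process boundary."""
    try:
        pickle.dumps(obj)
    except (pickle.PicklingError, AttributeError, TypeError):
        return False
    return True


print(is_picklable({"query": "SELECT 1", "limit": 10}))  # True: plain data
print(is_picklable(threading.Lock()))  # False: locks cannot be pickled
print(is_picklable(lambda row: row))  # False: lambdas cannot be looked up by name
```

Objects like open DB connections, locks, or loaded models with native handles typically fail this check, which is why the thread pool often remains the practical choice.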

Hi @HsengivS, you can add threaded=False for Flask, like `app.run(host='0.0.0.0', port=9333, threaded=False)`. I used it successfully to avoid the Flask memory leak issue.

After further investigation, in my case the memory consumption was normal. For testing, I added more RAM and saw the memory consumption stay pretty stable. I ended up migrating to uvicorn and lowering the number of workers from 4 to 3, which in my case was enough. Thanks for the help!

Similar to @madkote (and I’m using no ctypes): with gunicorn & Flask I see no issue.

Hey @madkote, thanks for asking. That involves a request via `requests` and some `scikit-learn`/`numpy` operations on it, basically. I’ll dig into them, in case they turn out to be the problem!

I just stopped using Python’s multiprocessing pool. I merged the queries I wanted to execute concurrently and let my DB parallelize them. Thanks @tiangolo and everyone here.

I encountered the same problem: with an endpoint defined with `def`, hitting it again and again makes memory leak, but with an endpoint defined with `async`, it does not. Looking forward to the outcome of the discussion.

@dmontagu @euri10 I still have suspicions about two aspects:

Everywhere I use the same model, even though I tried many (custom and freely available) ones… the result is always the same.

So, please give me some more time to make a reasonable example to reproduce the issue.

@madkote this starlette issue includes a good discussion of the problem (and some patterns to avoid that might be exacerbating it): https://github.com/encode/starlette/issues/667

@madkote I’m not 100% confident this explains the issue, but I think this may actually just be how Python works. I think this article does a good job explaining it:

Edit: just realized I already replied in this issue with a link to similar discussions 😄.

Hi all, I have noticed the same issue with FastAPI. The function is `async def`; inside it I load a resource of ~200 MB, do something with it, and return the response. The memory is not freed.

Example:

This occurs with `uvicorn.workers.UvicornWorker`.

So to me it seems to be a bug in Starlette or uvicorn…

@wanaryytel It looks like this might actually be related to how Python manages memory: it’s not guaranteed to release memory back to the OS.

The top two answers to this stack overflow question have a lot of good info on this topic, and might point in the right direction.

That said, given you are just executing the same call over and over, it’s not clear to me why it wouldn’t reuse the memory; there could be something leaking here (possibly related to the ThreadPoolExecutor…). You could check whether it is related to the ThreadPoolExecutor by checking if you get the same behavior with an `async def` endpoint, which would not run in the ThreadPoolExecutor.

If the requests were being made concurrently, I suppose that could explain the use of more memory, which would then feed into the above Stack Overflow answer’s explanation. But if you just kept calling the endpoint one call at a time in sequence, I think it’s harder to explain.

If you really wanted to dig into this, it might be worth looking at the `gc` module and seeing if manually calling the garbage collector helps at all.

@MeteHanC whether this explains/addresses your issue or not definitely depends on what your endpoints are doing.
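On the `gc` suggestion above: whether `gc.collect()` helps depends on why the memory is held. Objects whose reference count drops to zero are freed immediately; the cyclic collector only matters for reference cycles. The sketch below builds cycles of a hypothetical `Node` class and shows `gc.collect()` finding them. If a manual collection in your endpoint finds little, the retained memory is more likely live references or allocator behavior than uncollected cycles.

```python
import gc


class Node:
    """Hypothetical object that ends up in a reference cycle."""

    def __init__(self) -> None:
        self.peer = None


def make_cycles(n: int) -> None:
    for _ in range(n):
        a, b = Node(), Node()
        a.peer, b.peer = b, a  # refcounts never reach zero on their own


gc.collect()  # start from a clean slate
gc.disable()  # keep the automatic collector from running mid-demo
try:
    make_cycles(1_000)
    freed = gc.collect()  # returns the number of unreachable objects found
finally:
    gc.enable()
print("unreachable objects collected:", freed)
```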

You’re using the PyPy-compliant uvicorn worker class; is your system based on PyPy? If you’re running on CPython, then I suggest you try out the CPython implementation `uvicorn.workers.UvicornWorker`.

But in other news, I’m seeing something similar. I just run uvicorn with this:

`uvicorn --host 0.0.0.0 --port 7001 app:api --reload`, but in some cases the memory is never freed up.

For example, this function:

When I hit the endpoint once, the memory is cleared up, but when I hit it 10x, some of the memory is left allocated, and when I hit it another 10x, even more memory is left allocated. This continues until I run out of memory or restart the process. If I change the `get_xoxo` function to be `async`, then the memory is always cleared up, but the function also blocks much more (which makes sense, since I’m not taking advantage of any `await`s in there).

So, is there a memory leak? I’m not sure, but something is handled incorrectly.

My system is running in a `python:3.7` Docker container. Basically the same problem occurs in production, where uvicorn is run with `uvicorn --host 0.0.0.0 --port %(ENV_UVICORN_PORT)s --workers %(ENV_UVICORN_WORKERS)s --timeout-keep-alive %(ENV_UVICORN_KEEPELIVE)s --log-level %(ENV_UVICORN_LOGLEVEL)s app:api`.