thanos: Store: Thanos store consumes lots of memory at startup and causes OOM

Thanos, Prometheus and Golang version used:

thanos:

store/query/compactor: docker.io/bitnami/thanos:0.27.0-scratch-r6

sidecar: quay.io/thanos/thanos:v0.28.0

prometheus: quay.io/prometheus/prometheus:v2.39.0

Object Storage Provider: S3

What happened:

Thanos store is consuming over 64 GB of memory, which causes an OOM. We have two clusters sending Prometheus metrics to S3 via the sidecar, and current S3 usage is around 9 TB. The memory usage of Thanos store has grown from 30 GB to over 60 GB, and it keeps growing until it is OOM-killed.

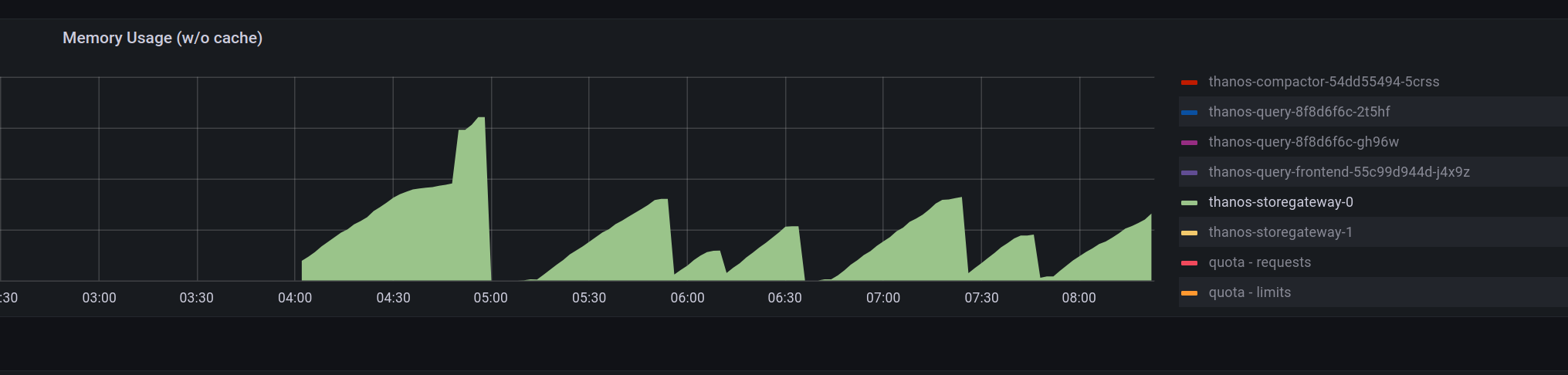

memory usage:

Here are the args:

Prometheus:

- --web.console.templates=/etc/prometheus/consoles

- --web.console.libraries=/etc/prometheus/console_libraries

- --storage.tsdb.retention.time=1h

- --storage.tsdb.retention.size=20GB

- --config.file=/etc/prometheus/config_out/prometheus.env.yaml

- --storage.tsdb.path=/prometheus

- --web.enable-lifecycle

- --web.external-url=http://jina-prom-kube-prometheus-prometheus.monitor:9090

- --web.route-prefix=/

- --storage.tsdb.wal-compression

- --web.config.file=/etc/prometheus/web_config/web-config.yaml

- --storage.tsdb.max-block-duration=2h

- --storage.tsdb.min-block-duration=2h

Thanos sidecar:

- sidecar

- --prometheus.url=http://127.0.0.1:9090/

- '--prometheus.http-client={"tls_config": {"insecure_skip_verify":true}}'

- --grpc-address=:10901

- --http-address=:10902

- --objstore.config=$(OBJSTORE_CONFIG)

- --tsdb.path=/prometheus

- --log.level=info

- --log.format=logfmt

Thanos store:

- store

- --log.level=info

- --log.format=logfmt

- --grpc-address=0.0.0.0:10901

- --http-address=0.0.0.0:10902

- --data-dir=/data

- --objstore.config-file=/conf/objstore.yml

- --sync-block-duration=10m

- --chunk-pool-size=10GB

- --index-cache-size=4GB

Thanos compactor:

- compact

- --log.level=info

- --log.format=logfmt

- --http-address=0.0.0.0:10902

- --data-dir=/data

- --retention.resolution-raw=30d

- --retention.resolution-5m=30d

- --retention.resolution-1h=3y

- --consistency-delay=2h

- --objstore.config-file=/conf/objstore.yml

- --block-viewer.global.sync-block-interval=10m

- --wait

What you expected to happen:

Our node runs out of memory whenever the Thanos store is scheduled onto it, which leaves the Thanos cluster non-functional. Is there any way to reduce the memory consumption?

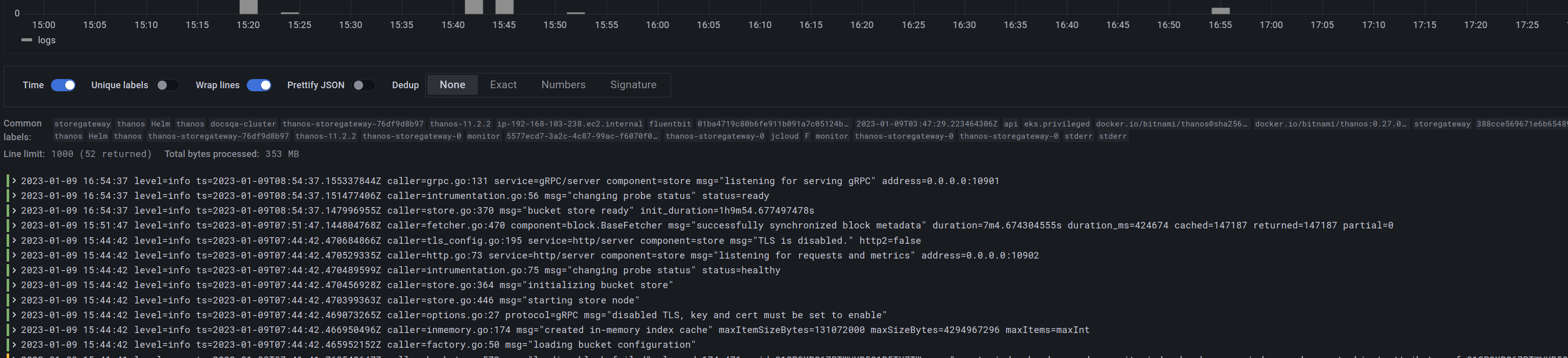

Full logs of relevant components:

It basically just loads new blocks:

level=info ts=2023-01-09T08:29:34.126272387Z caller=bucket.go:575 msg="loaded new block" elapsed=476.84292ms id=01GMERV47B5KM6KSTNB3BBMMJN

level=info ts=2023-01-09T08:29:34.163318785Z caller=bucket.go:575 msg="loaded new block" elapsed=463.926264ms id=01GNNHNHM8SPKMPZY8VYZQ057R

level=info ts=2023-01-09T08:29:34.206577561Z caller=bucket.go:575 msg="loaded new block" elapsed=486.567896ms id=01GMWTEMKCTKQRFPKT65DBTZF7

level=info ts=2023-01-09T08:29:34.222199555Z caller=bucket.go:575 msg="loaded new block" elapsed=441.89455ms id=01GMKJ2PAMS3SZMYV7BZ08B7BB

level=info ts=2023-01-09T08:29:34.301065351Z caller=bucket.go:575 msg="loaded new block" elapsed=340.658541ms id=01GMJD8D6SPMP53PX9DSF4B7ZH

level=info ts=2023-01-09T08:29:34.318040972Z caller=bucket.go:575 msg="loaded new block" elapsed=399.348183ms id=01GP0SN321JW3BNB8079KDTDYR

level=info ts=2023-01-09T08:29:34.341624449Z caller=bucket.go:575 msg="loaded new block" elapsed=609.957282ms id=01GN0A16VYMS3PXCS3GCX8BS53

level=info ts=2023-01-09T08:29:34.367124411Z caller=bucket.go:575 msg="loaded new block" elapsed=392.148426ms id=01GMPNPJ4WZ84MEM4AGWXGJFXJ

level=info ts=2023-01-09T08:29:34.412209514Z caller=bucket.go:575 msg="loaded new block" elapsed=507.208586ms id=01GP4Z3NB3BDX7P0JK74GH1AZQ

level=info ts=2023-01-09T08:29:34.433269798Z caller=bucket.go:575 msg="loaded new block" elapsed=542.990656ms id=01GNF1J0MSXQHCJV9QCYNV3H2X

level=info ts=2023-01-09T08:29:34.43339439Z caller=bucket.go:575 msg="loaded new block" elapsed=338.445853ms id=01GMTK5X8PWG23WP86C8KMW9K4

level=info ts=2023-01-09T08:29:34.505015093Z caller=bucket.go:575 msg="loaded new block" elapsed=298.403406ms id=01GP9RR16SQBRF6K6JCMNW0QHX

level=info ts=2023-01-09T08:29:34.521069715Z caller=bucket.go:575 msg="loaded new block" elapsed=502.271628ms id=01GMPF17VY6SZQHFBPD38Y12C0

level=info ts=2023-01-09T08:29:34.535999439Z caller=bucket.go:575 msg="loaded new block" elapsed=681.687935ms id=01GN420087KDV1DYKZQ5AR36BY

level=info ts=2023-01-09T08:29:34.548036887Z caller=bucket.go:575 msg="loaded new block" elapsed=541.064296ms id=01GNCXS3W4X2W3HQAJAR211677

level=info ts=2023-01-09T08:29:34.56470743Z caller=bucket.go:575 msg="loaded new block" elapsed=438.384449ms id=01GMDCBFK3VAQXCEAP8R48BGQM

level=info ts=2023-01-09T08:29:34.584712046Z caller=bucket.go:575 msg="loaded new block" elapsed=595.623383ms id=01GMHXD6QDJ4G5RBQ6FAYSMTTT

level=info ts=2023-01-09T08:29:34.596543325Z caller=bucket.go:575 msg="loaded new block" elapsed=720.630675ms id=01GN2HW2TGM6FXAHPV2SNKSQ2Y

level=info ts=2023-01-09T08:29:34.607871953Z caller=bucket.go:575 msg="loaded new block" elapsed=770.505425ms id=01GMG02A7J2KYCG5TGFMTDD23D

level=info ts=2023-01-09T08:29:34.622657864Z caller=bucket.go:575 msg="loaded new block" elapsed=459.295922ms id=01GP0FHTDCEPVYT26CFCTH9KDM

level=info ts=2023-01-09T08:29:34.640474406Z caller=bucket.go:575 msg="loaded new block" elapsed=560.334001ms id=01GMGHSY98N6P39F3DFQB3WARW

level=info ts=2023-01-09T08:29:34.65231403Z caller=bucket.go:575 msg="loaded new block" elapsed=430.073873ms id=01GNVFWWJTG27YHY8KWNRGPV9H

level=info ts=2023-01-09T08:29:34.7017599Z caller=bucket.go:575 msg="loaded new block" elapsed=756.442413ms id=01GNWCXD1B495M98DD8BXTMESH

level=info ts=2023-01-09T08:29:34.743980219Z caller=bucket.go:575 msg="loaded new block" elapsed=425.900158ms id=01GMD4A74Y8B6P30S2E2CRSH6V

level=info ts=2023-01-09T08:29:34.759814864Z caller=bucket.go:575 msg="loaded new block" elapsed=965.168982ms id=01GP1KCRVH3DCG72R1Y9JGJ9G5

level=info ts=2023-01-09T08:29:34.77185663Z caller=bucket.go:575 msg="loaded new block" elapsed=430.190252ms id=01GMMRBNMWCGW65TJ7KWXZP3ZG

level=info ts=2023-01-09T08:29:34.786458987Z caller=bucket.go:575 msg="loaded new block" elapsed=374.204128ms id=01GN5TX048Q0ZFEKX4JTZZRVA3

level=info ts=2023-01-09T08:29:34.879001799Z caller=bucket.go:575 msg="loaded new block" elapsed=445.69812ms id=01GP2X0S1ME5A12KG592M9Z037

level=info ts=2023-01-09T08:29:34.8980481Z caller=bucket.go:575 msg="loaded new block" elapsed=349.978491ms id=01GMRNDE60GKCNEYTHFTR9K36K

level=info ts=2023-01-09T08:29:34.911204235Z caller=bucket.go:575 msg="loaded new block" elapsed=375.170347ms id=01GMR5AXAZB11RPNSTD4KMZDXS

level=info ts=2023-01-09T08:29:34.922889582Z caller=bucket.go:575 msg="loaded new block" elapsed=621.790763ms id=01GN6XP948ECG6N5NWPMZR5XJ8

level=info ts=2023-01-09T08:29:34.943017906Z caller=bucket.go:575 msg="loaded new block" elapsed=437.95829ms id=01GMRGTYJZ3JERGBSHD9P81X8N

level=info ts=2023-01-09T08:29:34.960895805Z caller=bucket.go:575 msg="loaded new block" elapsed=593.728364ms id=01GN16HZTR49ESDA12X8ERFJ9J

level=info ts=2023-01-09T08:29:34.976384882Z caller=bucket.go:575 msg="loaded new block" elapsed=542.956595ms id=01GMQVTYWCF1BT7CXX044HVB92

level=info ts=2023-01-09T08:29:35.027938785Z caller=bucket.go:575 msg="loaded new block" elapsed=405.245597ms id=01GNZZADS49SPDZP6X2D9EGJF2

level=info ts=2023-01-09T08:29:35.042191693Z caller=bucket.go:575 msg="loaded new block" elapsed=477.450758ms id=01GN1PNDFZB509MNQCZX9HXA5G

level=info ts=2023-01-09T08:29:35.056635402Z caller=bucket.go:575 msg="loaded new block" elapsed=460.058269ms id=01GP9N03A9MT20KQYBNE0B92KG

level=info ts=2023-01-09T08:29:35.073868412Z caller=bucket.go:575 msg="loaded new block" elapsed=433.355793ms id=01GMA30GWY9Y25NNDE3HC92ZXJ

level=info ts=2023-01-09T08:29:35.085838549Z caller=bucket.go:575 msg="loaded new block" elapsed=477.935575ms id=01GMJCQXVGYQS87HK1B3HNZB5S

level=info ts=2023-01-09T08:29:35.100815055Z caller=bucket.go:575 msg="loaded new block" elapsed=579.704018ms id=01GMCPBE9736CCRARRWPCVAH6Y

level=info ts=2023-01-09T08:29:35.115420458Z caller=bucket.go:575 msg="loaded new block" elapsed=463.072407ms id=01GP59WSQXGJT0SHNKY309JAFH

level=info ts=2023-01-09T08:29:35.203169731Z caller=bucket.go:575 msg="loaded new block" elapsed=618.421554ms id=01GNEVT01MNGEFRS3ERMJ16V87

level=info ts=2023-01-09T08:29:35.279652214Z caller=bucket.go:575 msg="loaded new block" elapsed=400.606937ms id=01GN6NNXK36293W1RADCKEV3JR

level=info ts=2023-01-09T08:29:35.291359844Z caller=bucket.go:575 msg="loaded new block" elapsed=531.511611ms id=01GNCCZPNFE8SP83E4BW9XZ6KP

level=info ts=2023-01-09T08:29:35.310191944Z caller=bucket.go:575 msg="loaded new block" elapsed=523.696002ms id=01GN9WVAH8XVWPAG092SHVA0W0

level=info ts=2023-01-09T08:29:35.322481452Z caller=bucket.go:575 msg="loaded new block" elapsed=620.67756ms id=01GP8PT73SEFD7G9Y4YRKXPEVN

level=info ts=2023-01-09T08:29:35.338789229Z caller=bucket.go:575 msg="loaded new block" elapsed=594.775128ms id=01GMC5PKGGKMNK5JCHBRJ9XZTC

level=info ts=2023-01-09T08:29:35.350509784Z caller=bucket.go:575 msg="loaded new block" elapsed=439.27053ms id=01GMXDM1K3MXNGM2RTPABGJCZR

level=info ts=2023-01-09T08:29:35.365079283Z caller=bucket.go:575 msg="loaded new block" elapsed=404.137871ms id=01GMQQ2ZG0QJP58ARCT13TYGC6

level=info ts=2023-01-09T08:29:35.379899644Z caller=bucket.go:575 msg="loaded new block" elapsed=608.002821ms id=01GMAWPN5EWTF7SYEX77VHKNK7

level=info ts=2023-01-09T08:29:35.403586229Z caller=bucket.go:575 msg="loaded new block" elapsed=460.533742ms id=01GP96C8HB61GQXJEYE82EA75Y

level=info ts=2023-01-09T08:29:35.421131475Z caller=bucket.go:575 msg="loaded new block" elapsed=523.051163ms id=01GN37QJ481E8RRW4MPK2EWC0G

level=info ts=2023-01-09T08:29:35.437835534Z caller=bucket.go:575 msg="loaded new block" elapsed=461.411579ms id=01GP2VFB0MYF043PQJJS59BVQF

level=info ts=2023-01-09T08:29:35.463091339Z caller=bucket.go:575 msg="loaded new block" elapsed=377.223659ms id=01GNM35T6MFRM3D7S05AF40QMP

level=info ts=2023-01-09T08:29:35.48466485Z caller=bucket.go:575 msg="loaded new block" elapsed=383.789358ms id=01GN75F9W7N9DSQTA9HBF548F3

level=info ts=2023-01-09T08:29:35.497009364Z caller=bucket.go:575 msg="loaded new block" elapsed=574.085198ms id=01GN5K24SA68P0XMB5XHE9G5PN

I did some filtering:

Anything else we need to know:

About this issue

- State: open

- Created a year ago

- Comments: 24 (11 by maintainers)

That’s true. We don’t have a way to address this until we have per-metric retention. However, to reduce memory consumption during store gateway startup, time partitioning should be an effective way to cut down the number of blocks each instance has to load. I didn’t go through the whole context, so if we are trying to achieve something else, time partitioning may not help.

@tarrantro You can try deploying Thanos store with time partitioning, so that each store instance loads only the blocks within its specified time range. That sounds like a good fit for your use case.
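A minimal sketch of what time partitioning could look like, assuming two separate store deployments that read the same bucket. `--min-time` and `--max-time` are the `thanos store` flags that restrict which blocks an instance serves; they accept either an RFC3339 timestamp or a duration relative to now (for example `-15d`). The deployment names and the 14/15-day boundary are hypothetical and untuned; the remaining flags are copied from the store args above.

```yaml
# Hypothetical deployment "thanos-store-recent": serves only the last ~15 days.
args:
  - store
  - --log.level=info
  - --log.format=logfmt
  - --grpc-address=0.0.0.0:10901
  - --http-address=0.0.0.0:10902
  - --data-dir=/data
  - --objstore.config-file=/conf/objstore.yml
  - --sync-block-duration=10m
  - --chunk-pool-size=10GB
  - --index-cache-size=4GB
  - --min-time=-15d   # illustrative boundary; only blocks newer than this are loaded
---
# Hypothetical deployment "thanos-store-historical": serves everything older than ~14 days.
args:
  - store
  - --log.level=info
  - --log.format=logfmt
  - --grpc-address=0.0.0.0:10901
  - --http-address=0.0.0.0:10902
  - --data-dir=/data
  - --objstore.config-file=/conf/objstore.yml
  - --sync-block-duration=10m
  - --chunk-pool-size=10GB
  - --index-cache-size=4GB
  - --max-time=-14d   # illustrative boundary; overlaps the recent instance by one day
```

The one-day overlap between the two ranges is intentional. If one instance is down, a block near the boundary can still be served by the other. The best split point depends on which time ranges your queries actually hit, and both instances need to be registered as store endpoints in Thanos Query.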