thanos: compact: downsample stopped making progress (compacted blocks migrated from prometheus too small)

Thanos, Prometheus and Golang version used: Thanos image:tag v0.11.0

Object Storage Provider: GCS

What happened: thanos-compact downsample stopped making progress (5m incomplete, no 1h). The situation has continued for 10 hours.

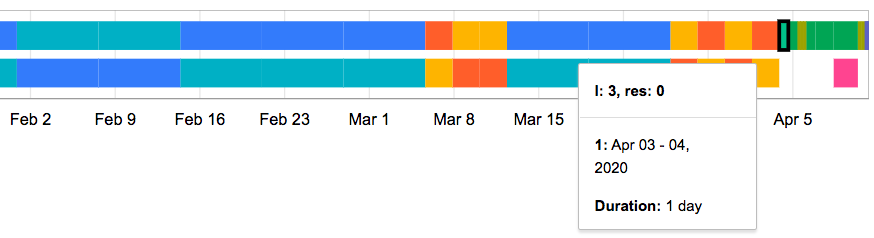

bucket UI apppears as follows:

What you expected to happen: compactor makes progress towards steady-state of downsampled data

How to reproduce it (as minimally and precisely as possible):

newly deployed compactor job against 5 months of data

compact args:

- "compact"

- "--log.level=info"

- "--log.format=logfmt"

- "--http-address=0.0.0.0:10902"

- "--objstore.config-file=/etc/config/object-store.yaml"

- "--data-dir=/var/thanos/compact"

- "--consistency-delay=30m"

- "--retention.resolution-raw=0d"

- "--retention.resolution-5m=0d"

- "--retention.resolution-1h=0d"

- "--block-sync-concurrency=20"

- "--compact.concurrency=1"

- "--wait"

Full logs to relevant components:

Compactor iterations have settled to doing no work. There are no warnings or errors in the logs. Compaction operations are still happening normally.

Log for latest iteration:

E 2020-04-10T23:40:59.535995275Z level=info ts=2020-04-10T23:40:59.535727872Z caller=clean.go:50 msg="cleaning of aborted partial uploads done"

E 2020-04-10T23:40:59.535910379Z level=info ts=2020-04-10T23:40:59.535671469Z caller=fetcher.go:368 component=block.MetaFetcher msg="successfully fetched block metadata" duration=51.342219ms cached=52 returned=52 partial=0

E 2020-04-10T23:40:59.484518859Z level=info ts=2020-04-10T23:40:59.484316539Z caller=clean.go:25 msg="started cleaning of aborted partial uploads"

E 2020-04-10T23:40:59.484512229Z level=info ts=2020-04-10T23:40:59.484300199Z caller=retention.go:41 msg="optional retention apply done"

E 2020-04-10T23:40:59.484473403Z level=info ts=2020-04-10T23:40:59.484219675Z caller=fetcher.go:368 component=block.MetaFetcher msg="successfully fetched block metadata" duration=61.096018ms cached=52 returned=52 partial=0

E 2020-04-10T23:40:59.423193158Z level=info ts=2020-04-10T23:40:59.423100065Z caller=retention.go:20 msg="start optional retention"

E 2020-04-10T23:40:59.423178026Z level=info ts=2020-04-10T23:40:59.423057958Z caller=compact.go:324 msg="downsampling iterations done"

E 2020-04-10T23:40:59.422756013Z level=info ts=2020-04-10T23:40:59.422495525Z caller=fetcher.go:368 component=block.MetaFetcher msg="successfully fetched block metadata" duration=55.296998ms cached=52 returned=52 partial=0

E 2020-04-10T23:40:59.367230069Z level=info ts=2020-04-10T23:40:59.367100699Z caller=compact.go:319 msg="start second pass of downsampling"

E 2020-04-10T23:40:59.366817119Z level=info ts=2020-04-10T23:40:59.366579816Z caller=fetcher.go:368 component=block.MetaFetcher msg="successfully fetched block metadata" duration=51.449956ms cached=52 returned=52 partial=0

E 2020-04-10T23:40:59.315252887Z level=info ts=2020-04-10T23:40:59.315067975Z caller=compact.go:313 msg="start first pass of downsampling"

E 2020-04-10T23:40:59.315221167Z level=info ts=2020-04-10T23:40:59.314961081Z caller=compact.go:910 msg="compaction iterations done"

E 2020-04-10T23:40:59.213787324Z level=info ts=2020-04-10T23:40:59.213601901Z caller=compact.go:872 msg="start of compaction"

E 2020-04-10T23:40:59.195223946Z level=info ts=2020-04-10T23:40:59.195049536Z caller=compact.go:246 msg="deleting outdated block" block=01E59XWF1XDQFG2AY9TR6TVX6P

E 2020-04-10T23:40:59.174106196Z level=info ts=2020-04-10T23:40:59.173935931Z caller=compact.go:246 msg="deleting outdated block" block=01E59Q0N419Y9RAZWG2FKEBEFB

E 2020-04-10T23:40:59.156003984Z level=info ts=2020-04-10T23:40:59.155810736Z caller=compact.go:246 msg="deleting outdated block" block=01E59G5090ZVF1AX61E07W50CJ

E 2020-04-10T23:40:59.135748608Z level=info ts=2020-04-10T23:40:59.135366491Z caller=compact.go:246 msg="deleting outdated block" block=01E59996ZPQWGGQ03M007CNB4P

E 2020-04-10T23:40:59.135736558Z level=info ts=2020-04-10T23:40:59.135351688Z caller=compact.go:864 msg="start of GC"

E 2020-04-10T23:40:59.135674222Z level=info ts=2020-04-10T23:40:59.135304544Z caller=fetcher.go:368 component=block.MetaFetcher msg="successfully fetched block metadata" duration=145.544367ms cached=52 returned=52 partial=0

E 2020-04-10T23:40:58.989982600Z level=info ts=2020-04-10T23:40:58.989582621Z caller=compact.go:858 msg="start sync of metas"

Anything else we need to know:

I have not yet tried restarting the compact job. I’ll wait up to 1 day for advice in case there is something to inspect on the running instance.

I’ve ruled out it being a Bucket UI issue by comparing increase(thanos_compact_downsample_total) to number of downsampled blocks shown in the UI, and they match as expected.

About this issue

- Original URL

- State: closed

- Created 4 years ago

- Comments: 23 (18 by maintainers)

Commits related to this issue

- reduce DownsampleRange1 to 6d The intention is to accommodate blocks migrated from prometheus-server, which has a different compaction schedule ending at 6.75 days. Fixes #2413. — committed to belm0/thanos by belm0 4 years ago

- reduce DownsampleRange1 to 6d The intention is to accommodate blocks migrated from prometheus-server, which has a different compaction schedule ending at 6.75 days. Fixes #2413. — committed to belm0/thanos by belm0 4 years ago

- reduce DownsampleRange1 to 6d The intention is to accommodate blocks migrated from prometheus-server, which has a different compaction schedule ending at 6.75 days. Fixes #2413. Signed-off-by: John... — committed to belm0/thanos by belm0 4 years ago

- reduce DownsampleRange1 to 6d The intention is to accommodate blocks migrated from prometheus-server, which has a different compaction schedule ending at 6.75 days. Fixes #2413. Signed-off-by: John... — committed to belm0/thanos by belm0 4 years ago

- reduce DownsampleRange1 to 3d The intention is to accommodate blocks migrated from prometheus-server, which has a different compaction schedule ending at 6.75 days. Fixes #2413. Signed-off-by: John... — committed to belm0/thanos by belm0 4 years ago

- reduce DownsampleRange1 to 3d The intention is to accommodate blocks migrated from prometheus-server, which has a different compaction schedule ending at 6.75 days. Fixes #2413. Signed-off-by: John... — committed to belm0/thanos by belm0 4 years ago

Why migrated data is not compacted to 2w or 8d blocks then?

I ran

block verify, it is reporting an overlap between one 8h block and four 2h blocks:however this time range is very recent (starting 04/12/2020 4:00pm UTC), so it seems unrelated to the downsampling problem

it appears to be a bug in v0.12.0-rc.1

bucket verify--> filed #2420