tensorflow: ValueError: No gradients provided for any variable: ['conv2d/kernel:0', 'conv2d/bias:0',

System information: Colab, TensorFlow 2.2.0

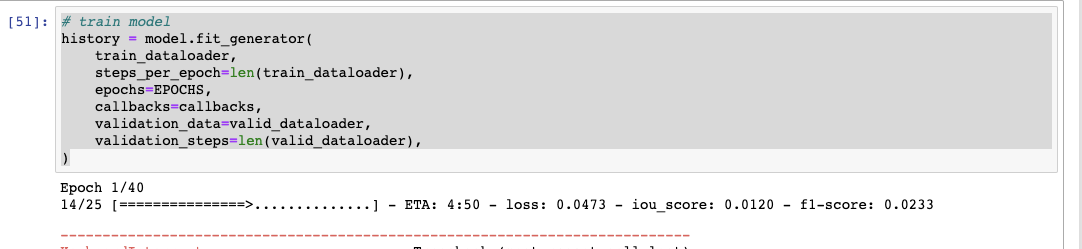

Describe the current behavior: I hit this error while working with my own data on a multi-label semantic segmentation task. When I ran the code in a Jupyter notebook on my local MacBook with standalone Keras installed (keras only, not tf.keras), the model trained normally, as expected.

However, my local MacBook did not have enough memory or compute to train the whole model, so I switched to Colab (TensorFlow version 2.2.0-rc2), where I got this error:

ValueError: No gradients provided for any variable: ['conv2d/kernel:0', 'conv2d/bias:0', 'conv2d_1/kernel:0', 'conv2d_1/bias:0', 'conv2d_2/kernel:0', 'conv2d_2/bias:0', 'conv2d_3/kernel:0', 'conv2d_3/bias:0', 'conv2d_4/kernel:0', 'conv2d_4/bias:0', 'conv2d_5/kernel:0', 'conv2d_5/bias:0', 'conv2d_6/kernel:0', 'conv2d_6/bias:0', 'conv2d_7/kernel:0', 'conv2d_7/bias:0', 'conv2d_8/kernel:0', 'conv2d_8/bias:0', 'conv2d_9/kernel:0', 'conv2d_9/bias:0', 'conv2d_transpose/kernel:0', 'conv2d_transpose/bias:0', 'conv2d_10/kernel:0', 'conv2d_10/bias:0', 'conv2d_11/kernel:0', 'conv2d_11/bias:0', 'conv2d_transpose_1/kernel:0', 'conv2d_transpose_1/bias:0', 'conv2d_12/kernel:0', 'conv2d_12/bias:0', 'conv2d_13/kernel:0', 'conv2d_13/bias:0', 'conv2d_transpose_2/kernel:0', 'conv2d_transpose_2/bias:0', 'conv2d_14/kernel:0', 'conv2d_14/bias:0', 'conv2d_15/kernel:0', 'conv2d_15/bias:0', 'conv2d_transpose_3/kernel:0', 'conv2d_transpose_3/bias:0', 'conv2d_16/kernel:0', 'conv2d_16/bias:0', 'conv2d_17/kernel:0', 'conv2d_17/bias:0', 'conv2d_18/kernel:0', 'conv2d_18/bias:0'].

Full error:

Epoch 1/40

ValueError Traceback (most recent call last)

<ipython-input-27-f05367a1db71> in <module>()

5 callbacks=callbacks,

6 validation_data=valid_dataloader,

----> 7 validation_steps=(no_of_validation_images//1),verbose=1

8 )

10 frames

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py in _method_wrapper(self, *args, **kwargs)

64 def _method_wrapper(self, *args, **kwargs):

65 if not self._in_multi_worker_mode(): # pylint: disable=protected-access

---> 66 return method(self, *args, **kwargs)

67

68 # Running inside run_distribute_coordinator already.

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py in fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_batch_size, validation_freq, max_queue_size, workers, use_multiprocessing, **kwargs)

783 batch_size=batch_size):

784 callbacks.on_train_batch_begin(step)

--> 785 tmp_logs = train_function(iterator)

786 # Catch OutOfRangeError for Datasets of unknown size.

787 # This blocks until the batch has finished executing.

/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/def_function.py in call(self, *args, **kwds)

578 xla_context.Exit()

579 else:

--> 580 result = self._call(*args, **kwds)

581

582 if tracing_count == self._get_tracing_count():

/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/def_function.py in _call(self, *args, **kwds)

625 # This is the first call of call, so we have to initialize.

626 initializers = []

--> 627 self._initialize(args, kwds, add_initializers_to=initializers)

628 finally:

629 # At this point we know that the initialization is complete (or less

/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/def_function.py in _initialize(self, args, kwds, add_initializers_to)

504 self._concrete_stateful_fn = (

505 self._stateful_fn._get_concrete_function_internal_garbage_collected( # pylint: disable=protected-access

--> 506 *args, **kwds))

507

508 def invalid_creator_scope(*unused_args, **unused_kwds):

/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/function.py in _get_concrete_function_internal_garbage_collected(self, *args, **kwargs)

2444 args, kwargs = None, None

2445 with self._lock:

-> 2446 graph_function, _, _ = self._maybe_define_function(args, kwargs)

2447 return graph_function

2448

/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/function.py in _maybe_define_function(self, args, kwargs)

2775

2776 self._function_cache.missed.add(call_context_key)

-> 2777 graph_function = self._create_graph_function(args, kwargs)

2778 self._function_cache.primary[cache_key] = graph_function

2779 return graph_function, args, kwargs

/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/function.py in _create_graph_function(self, args, kwargs, override_flat_arg_shapes)

2665 arg_names=arg_names,

2666 override_flat_arg_shapes=override_flat_arg_shapes,

-> 2667 capture_by_value=self._capture_by_value),

2668 self._function_attributes,

2669 # Tell the ConcreteFunction to clean up its graph once it goes out of

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/func_graph.py in func_graph_from_py_func(name, python_func, args, kwargs, signature, func_graph, autograph, autograph_options, add_control_dependencies, arg_names, op_return_value, collections, capture_by_value, override_flat_arg_shapes)

979 _, original_func = tf_decorator.unwrap(python_func)

980

--> 981 func_outputs = python_func(*func_args, **func_kwargs)

982

983 # invariant: func_outputs contains only Tensors, CompositeTensors,

/usr/local/lib/python3.6/dist-packages/tensorflow/python/eager/def_function.py in wrapped_fn(*args, **kwds)

439 # wrapped allows AutoGraph to swap in a converted function. We give

440 # the function a weak reference to itself to avoid a reference cycle.

--> 441 return weak_wrapped_fn().wrapped(*args, **kwds)

442 weak_wrapped_fn = weakref.ref(wrapped_fn)

443

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/func_graph.py in wrapper(*args, **kwargs)

966 except Exception as e: # pylint:disable=broad-except

967 if hasattr(e, "ag_error_metadata"):

--> 968 raise e.ag_error_metadata.to_exception(e)

969 else:

970 raise

ValueError: in user code:

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py:505 train_function *

outputs = self.distribute_strategy.run(

/usr/local/lib/python3.6/dist-packages/tensorflow/python/distribute/distribute_lib.py:951 run **

return self._extended.call_for_each_replica(fn, args=args, kwargs=kwargs)

/usr/local/lib/python3.6/dist-packages/tensorflow/python/distribute/distribute_lib.py:2290 call_for_each_replica

return self._call_for_each_replica(fn, args, kwargs)

/usr/local/lib/python3.6/dist-packages/tensorflow/python/distribute/distribute_lib.py:2649 _call_for_each_replica

return fn(*args, **kwargs)

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py:475 train_step **

self.trainable_variables)

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py:1741 _minimize

trainable_variables))

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/optimizer_v2/optimizer_v2.py:525 _aggregate_gradients

filtered_grads_and_vars = _filter_grads(grads_and_vars)

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/optimizer_v2/optimizer_v2.py:1203 _filter_grads

([v.name for _, v in grads_and_vars],))

ValueError: No gradients provided for any variable: ['conv2d/kernel:0', 'conv2d/bias:0', 'conv2d_1/kernel:0', 'conv2d_1/bias:0', 'conv2d_2/kernel:0', 'conv2d_2/bias:0', 'conv2d_3/kernel:0', 'conv2d_3/bias:0', 'conv2d_4/kernel:0', 'conv2d_4/bias:0', 'conv2d_5/kernel:0', 'conv2d_5/bias:0', 'conv2d_6/kernel:0', 'conv2d_6/bias:0', 'conv2d_7/kernel:0', 'conv2d_7/bias:0', 'conv2d_8/kernel:0', 'conv2d_8/bias:0', 'conv2d_9/kernel:0', 'conv2d_9/bias:0', 'conv2d_transpose/kernel:0', 'conv2d_transpose/bias:0', 'conv2d_10/kernel:0', 'conv2d_10/bias:0', 'conv2d_11/kernel:0', 'conv2d_11/bias:0', 'conv2d_transpose_1/kernel:0', 'conv2d_transpose_1/bias:0', 'conv2d_12/kernel:0', 'conv2d_12/bias:0', 'conv2d_13/kernel:0', 'conv2d_13/bias:0', 'conv2d_transpose_2/kernel:0', 'conv2d_transpose_2/bias:0', 'conv2d_14/kernel:0', 'conv2d_14/bias:0', 'conv2d_15/kernel:0', 'conv2d_15/bias:0', 'conv2d_transpose_3/kernel:0', 'conv2d_transpose_3/bias:0', 'conv2d_16/kernel:0', 'conv2d_16/bias:0', 'conv2d_17/kernel:0', 'conv2d_17/bias:0', 'conv2d_18/kernel:0', 'conv2d_18/bias:0'].

Describe the expected behavior: The model should train normally, as it did with standalone Keras.

Standalone code to reproduce the issue

Thank you for looking into this.

About this issue

- State: closed

- Created 4 years ago

- Comments: 19 (3 by maintainers)

@phuongchi911, can you describe the solution in more detail?

Hi, as I mentioned, it was a bug in my own data generator code, not in TensorFlow. The dataloader should return a tuple (inputs, masks), not a list. But I am not sure whether this applies to your dataset.
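A minimal sketch of why a list can cause this error. As far as I can tell from the TensorFlow 2.2 source, `fit()` splits each batch from a generator or `Sequence` into inputs, targets and sample weights only when the batch is a `tuple`; anything else, including a `list`, is treated as inputs only. With no targets, I believe the compiled loss then has no term that depends on the weights, which would explain "No gradients provided for any variable". The function `unpack_batch_tf22_style` below is a hypothetical, simplified stand-in for that check, not TensorFlow's own code, and newer TensorFlow releases may handle lists differently.

```python
# Hypothetical, simplified stand-in for the check tf.keras 2.2 appears to apply
# to each batch from a generator/Sequence. This is NOT TensorFlow's own code.

def unpack_batch_tf22_style(data):
    """Split one batch into (x, y, sample_weight), treating only tuples as packed."""
    if not isinstance(data, tuple):
        # Any non-tuple, including a list [x, y], is taken as the inputs only.
        return data, None, None
    if len(data) == 1:
        return data[0], None, None
    if len(data) == 2:
        return data[0], data[1], None
    if len(data) == 3:
        return data[0], data[1], data[2]
    raise ValueError("Data not understood.")


images, masks = "images", "masks"  # stand-ins for the image and mask arrays

# A list: both arrays end up in x and there are no targets (y is None).
print(unpack_batch_tf22_style([images, masks]))  # (['images', 'masks'], None, None)

# A tuple: images become x and masks become y, as intended.
print(unpack_batch_tf22_style((images, masks)))  # ('images', 'masks', None)
```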

Can you explain how to change it from a list to a tuple?
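One way to make the change, sketched under assumptions: the original dataloader code is not shown in this thread, so the `Dataloader` class, its `dataset` argument (an indexable collection of `(image, mask)` pairs) and the batching logic below are hypothetical. To keep the sketch runnable without TensorFlow it does not subclass anything; in real code it would subclass `tf.keras.utils.Sequence` (or `keras.utils.Sequence`) so that `model.fit` can consume it. The relevant line is the last one in `__getitem__`, which converts the list of stacked arrays into a tuple.

```python
import math

import numpy as np


class Dataloader:
    """Hypothetical batch loader; in real code this would subclass
    tf.keras.utils.Sequence so that model.fit can consume it."""

    def __init__(self, dataset, batch_size=1):
        self.dataset = dataset  # indexable, item j -> (image, mask)
        self.batch_size = batch_size

    def __len__(self):
        # Number of batches per epoch.
        return math.ceil(len(self.dataset) / self.batch_size)

    def __getitem__(self, i):
        start = i * self.batch_size
        stop = min(start + self.batch_size, len(self.dataset))
        data = [self.dataset[j] for j in range(start, stop)]

        # zip(*data) regroups [(img, mask), ...] into (all images, all masks);
        # np.stack turns each group into one batch array.
        batch = [np.stack(samples, axis=0) for samples in zip(*data)]

        # Return a tuple rather than the list itself, so fit() sees (x, y).
        return tuple(batch)


# Usage with dummy data: four 8x8 RGB images with 3-channel masks.
dataset = [(np.zeros((8, 8, 3)), np.zeros((8, 8, 3))) for _ in range(4)]
loader = Dataloader(dataset, batch_size=2)
x, y = loader[0]
print(type(loader[0]).__name__, x.shape, y.shape)  # tuple (2, 8, 8, 3) (2, 8, 8, 3)
```

Writing `return batch[0], batch[1]` would work equally well, since a comma-separated return value in Python is already a tuple.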