tensorflow: TOCO fails to handle Dilated Convolution properly

System information

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): N/A

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Linux Ubuntu 16.04

- Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device: N/A

- TensorFlow installed from (source or binary): source

- TensorFlow version (use command below): 1.10.0

- Python version: 2.7.12

- Bazel version (if compiling from source): 0.15.0

- GCC/Compiler version (if compiling from source): 5.4.0

- CUDA/cuDNN version: N/A

- GPU model and memory: N/A

- Exact command to reproduce: N/A

Problem description

I have a frozen_model.pb that works well.

I built toco with the command:

bazel build -c opt //tensorflow/contrib/lite/toco:toco

Then I try to convert the model to TFLite:

toco --allow_custom_ops --input_file='frozen_model.pb' --output_file='wavenet.tflite' --input_arrays='original_input' --input_shapes='1,48000,1' --output_arrays='activation_2/Softmax' --dump_graphviz=graph --dump_graphviz_video=true

and get this error:

2018-09-07 17:45:53.870648: I tensorflow/contrib/lite/toco/graph_transformations/graph_transformations.cc:39] Before Removing unused ops: 412 operators, 674 arrays (0 quantized)

2018-09-07 17:45:53.876846: I tensorflow/contrib/lite/toco/graph_transformations/graph_transformations.cc:39] Before general graph transformations: 412 operators, 674 arrays (0 quantized)

2018-09-07 17:45:53.877237: I tensorflow/contrib/lite/toco/graph_transformations/identify_dilated_conv.cc:161] Identified sub-network emulating dilated convolution.

2018-09-07 17:45:53.877266: I tensorflow/contrib/lite/toco/graph_transformations/identify_dilated_conv.cc:209] Replaced with Dilated Conv2D op outputting "dilated_conv_2_tanh/convolution/Conv2D".

2018-09-07 17:45:53.877487: I tensorflow/contrib/lite/toco/graph_transformations/identify_dilated_conv.cc:161] Identified sub-network emulating dilated convolution.

2018-09-07 17:45:53.877512: I tensorflow/contrib/lite/toco/graph_transformations/identify_dilated_conv.cc:209] Replaced with Dilated Conv2D op outputting "dilated_conv_2_sigm/convolution/Conv2D".

2018-09-07 17:45:53.877852: F tensorflow/contrib/lite/toco/graph_transformations/propagate_fixed_sizes.cc:116] Check failed: dim_x == dim_y (48002 vs. 48000)Dimensions must match

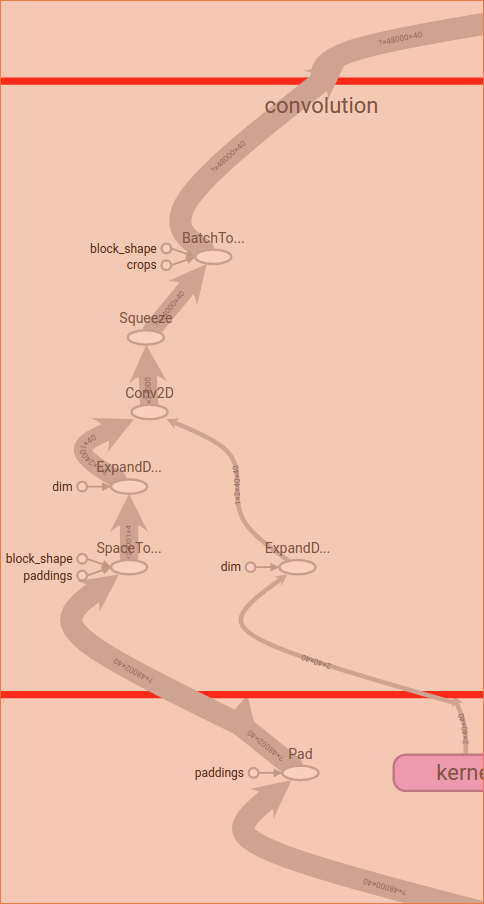

This is the Dilated Convolution block in the original frozen_model.pb, visualized in TensorBoard. Its input and output shape is [?, 48000, 40]:

[?, 48000, 40] ->Pad->[?, 48002, 40]->SpaceToBatchND -> ExpandDims -> Conv2D -> Squeeze -> BatchToSpaceND->[?, 48000, 40]

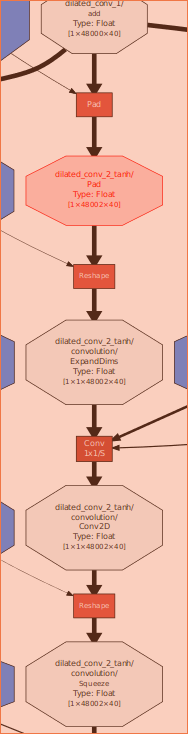

TOCO tries to substitute it with

[?, 48000, 40] ->Pad->[?, 48002, 40]->Reshape -> Conv1x1/S -> Squeeze->[?, 48002, 40]

so the output of the resulting block is [?, 48002, 40] instead of [?, 48000, 40], which causes the error in subsequent operations.
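The mismatch can be illustrated with plain shape arithmetic. This is only a sketch: the kernel size and dilation rate (2 and 2) are assumed values chosen so that the padding equals the 2 extra samples reported above; they are not read from the model. The helper names are hypothetical, the original block is modelled as a stride-1 VALID dilated convolution, and the fused op is assumed to preserve the padded length.

```python
def valid_conv_length(length, kernel_size, dilation):
    """Output length of a stride-1 VALID convolution with the given dilation."""
    effective_kernel = (kernel_size - 1) * dilation + 1
    return length - effective_kernel + 1


def same_conv_length(length):
    """Output length of a stride-1 SAME convolution (length is preserved)."""
    return length


input_len = 48000
kernel_size, dilation = 2, 2  # assumed values: (2 - 1) * 2 == 2 padded samples
padded_len = input_len + (kernel_size - 1) * dilation  # length after the Pad op

# Original block: the convolution consumes the padding again.
original_block_len = valid_conv_length(padded_len, kernel_size, dilation)
# Assumption: the substituted op keeps the padded length unchanged.
fused_block_len = same_conv_length(padded_len)

print(padded_len, original_block_len, fused_block_len)
```

Under these assumptions the original block returns to the input length, while the substituted block keeps the two padded samples, which matches the `48002 vs. 48000` check failure in the log.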

I managed to avoid this issue by removing line 102:

transformations->Add(new IdentifyDilatedConv);

from tensorflow/contrib/lite/toco/toco_tooling.cc and rebuilding TOCO,

but unfortunately this causes new problems, because some operations like SpaceToBatchND and BatchToSpaceND don’t support 3D tensors in the current TFLite version.

About this issue

- State: closed

- Created 6 years ago

- Reactions: 2

- Comments: 29 (13 by maintainers)

@karolbadowski: Thanks for sharing the details. I have verified TCN conversion and inference in TFLite. My 3 PRs (#28410, #27867 and #28179) solve the blocking issue you are facing. Since they are taking a long time to get merged, I suggest you set up a local build environment with the latest TensorFlow code and merge the 3 PRs mentioned above. You will then be able to convert TCN models and run inference as well. That is a one-time effort.

NOTE: I used the mnist_example TCN to verify my changes, and I believe it matches your requirements as well.

Please let me know if you need anything else, Thanks!

Hello. Do you know for which release version of TensorFlow this change is planned?

@mohantym I notice that this issue also happens with quantization in TF 2.6 when using atrous convolution. For reference, I tried the MLIR-based converter: the model converts, but the dilated convolution is broken down into spacetodepth, conv2d and depth to space, and quantization is then not applied to the conv2d. I am not sure whether that is caused by the MLIR conversion or whether the QAT quant nodes are not inserted properly. However, I did not have this issue with TF 1.15.

Thank you @ANSHUMAN87. Do you know if the change has already been merged into a release version of TensorFlow (and if so, since which one)?

Hello. I believe I have a similar issue. My TCN-based model has 2 issues, the last of which is probably similar to what is mentioned here (WaveNet is a kind of TCN, and my problem occurs with ‘causal’ padding). I would highly appreciate any advice.

First my environment:

Now the problems: I am using the keras-tcn library (version 2.3.5, but the newest version has the same problem) from https://github.com/philipperemy/keras-tcn to create a Temporal Convolutional Neural Network. My model has 2 issues when converting to TFLite.

The error says: “A merge layer should be called on a list of inputs.”

2. Another error shows up whenever any of the dilations is >1 while kernel_size is also >1. So for example:

If the previous layer had a time dimension of 31, the error would look like: “Check failed: dim_x == dim_y (31 vs. 35)Dimensions must match”

EDIT: The problem does not occur when I set padding to ‘same’, but that gives completely different logic that is not applicable to a real-time solution. I really need it to work with ‘causal’ padding.
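For reference, ‘causal’ padding left-pads the time axis with (kernel_size - 1) * dilation_rate zeros, so each output step depends only on current and past inputs. The pure-Python sketch below is illustrative and is not keras-tcn or TOCO code; the function name is hypothetical, and kernel_size = 3 with dilation = 2 are assumed values that happen to give 4 padded samples, the same gap as 31 vs. 35 in the error above.

```python
def causal_dilated_conv1d(signal, kernel, dilation):
    """Stride-1 causal dilated 1D convolution (cross-correlation) over a list."""
    pad = (len(kernel) - 1) * dilation
    padded = [0.0] * pad + list(signal)  # zeros are added on the left only
    out = []
    for t in range(len(signal)):
        # Output t reads padded[t], padded[t + dilation], ..., padded[t + pad].
        # padded[t + pad] is signal[t], so no future samples are used.
        out.append(sum(w * padded[t + i * dilation] for i, w in enumerate(kernel)))
    return padded, out


signal = [float(i) for i in range(31)]  # time dimension of 31, as in the report
padded, out = causal_dilated_conv1d(signal, kernel=[1.0, 1.0, 1.0], dilation=2)
print(len(padded), len(out))
```

The padded tensor has 35 steps and the convolution output has 31 again. A converter that keeps the padded length instead of the convolved length would then see exactly the two sizes quoted in the check failure.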

When is the new set of improvements expected to show up in a release? I will gladly update then. Is there any temporary hack I can apply to work around this issue until then? I would really like to make at least a PoC for a demonstration now and get the “official solution” whenever it is ready.

This error always appears when you try to convert a Keras model with Conv1D when dilation_rate > 1 and padding != ‘same’

Still on TF 1.13