tensorflow: [TF2.0]: Skipping optimization due to error while loading function


System information

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): No

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Windows 10

- Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device: No

- TensorFlow installed from (source or binary): source

- TensorFlow version (use command below): 2.0.0-beta1

- Python version: 3.6.0

Describe the current behavior

I’m trying to reproduce the results from the tutorial example “Text classification with an RNN” provided by TensorFlow at: https://www.tensorflow.org/beta/tutorials/text/text_classification_rnn

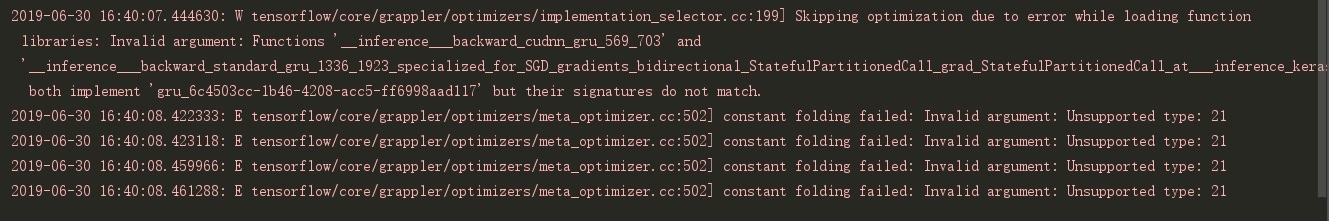

However, the following warning message appears constantly: "Skipping optimization due to error while loading function libraries: Invalid argument: … "

I tried other optimizers, and LSTM or GRU architectures but nothing changes!

Code to reproduce the issue

from __future__ import absolute_import, division, print_function, unicode_literals

import matplotlib.pyplot as plt
import tensorflow as tf
import tensorflow_datasets as tfds

print(tf.__version__)

# Plot a training metric together with its validation counterpart
def plot_graph(history, string):
    plt.plot(history.history[string])
    plt.plot(history.history['val_' + string])
    plt.xlabel('Epochs')
    plt.ylabel(string)
    plt.legend([string, 'val_' + string])
    plt.show()

# See available datasets
# print(tfds.list_builders())
dataset, info = tfds.load('imdb_reviews/subwords8k', with_info=True,
                          as_supervised=True)
train_dataset, test_dataset = dataset['train'], dataset['test']
tokenizer = info.features['text'].encoder

# print('Vocabulary size: {}'.format(tokenizer.vocab_size))
# sample_string = 'TensorFlow is cool'
# tokenized_string = tokenizer.encode(sample_string)
# print ('Tokenized string is {}'.format(tokenized_string))
# original_string = tokenizer.decode(tokenized_string)
# print ('The original string: {}'.format(original_string))
# assert original_string == sample_string
# for ts in tokenized_string:
#     print('{} -------> {}'.format(ts, tokenizer.decode([ts])))

BUFFER_SIZE = 10000
BATCH_SIZE = 64

train_dataset = train_dataset.shuffle(BUFFER_SIZE)
train_dataset = train_dataset.padded_batch(BATCH_SIZE, train_dataset.output_shapes)
test_dataset = test_dataset.padded_batch(BATCH_SIZE, test_dataset.output_shapes)

# Build the model
EM_SIZE = 64

model = tf.keras.Sequential([
    tf.keras.layers.Embedding(tokenizer.vocab_size, 64),
    tf.keras.layers.Bidirectional(tf.keras.layers.GRU(64)),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(1, activation='sigmoid')
])

model.compile(loss='mse',
              optimizer='sgd',
              metrics=['accuracy'])

history = model.fit(train_dataset, epochs=1, validation_data=test_dataset)

test_loss, test_acc = model.evaluate(test_dataset)
print('Test Loss: {}'.format(test_loss))
print('Test Accuracy: {}'.format(test_acc))

It seems that many other users are experiencing similar issues on TF2.0-beta

About this issue

- State: closed

- Created 5 years ago

- Reactions: 6

- Comments: 52 (24 by maintainers)

@ravikyram I think you are missing the fact that although it reportedly works on your machine, it does not on a bunch of others. This bug has been experienced by multiple users, and I just ran the exact same code again in TF 2.0-GPU-beta1, which yields the same issue as that first reported by @Slacker-WY.

I get that TF is a very large (and, let me stress, pretty amazing) project that receives a huge number of issues, some of which are user errors rather than actual software problems. But systematically answering “it works on my computer” in order to close issues, when there are multiple reports of the same problem, does not feel like a very good software maintenance policy. In my humble opinion, the fact that the problem does not show up on your machine actually underlines that there is something tricky to investigate.

Again, TF 2.0 is a big release with a lot of work and changes; it is bound to include some issues, and that’s what beta versions are for. But please stop denying that there is something broken here: similar issues have been raised for about a month now, and we are talking about Google’s own tutorial code for something “basic” (in terms of use - I totally understand that the underlying mechanics and implementation are not exactly simple) not working (at all) on a number of configurations. It might be due to an unnoticed detail (GPU driver version, support of a given type of CPU instructions… I have honestly no idea), or to an actual software issue (by the way, I tested that the code runs properly - except for a compatibility issue with using test_dataset as validation_data in model.fit - on the same system using TF 1.14-GPU in a different virtual environment, so TF 2.0 really seems to be at fault). At any rate, it really seems worth investigating by TF developers.

Hi all, it seems that the issue (which I had too) is correlated with the activation function of the LSTM/GRU/RNN layer. Changing the activation from the default ‘tanh’ to ‘sigmoid’ made the warning disappear. I hope this helps with fixing the problem. Have a good day!

FYI I run tensorflow-2.0.0-beta1 on CPU

Hi all:

First, let’s set aside the constant folding issue, which is tracked in another ticket.

Secondly, let me give more details about why the warning message can be ignored, and why you should still use the default activation function (tanh).

In 2.0, we implemented an improvement for RNN layers that selects a different kernel based on GPU availability. Previously, we expected users to build the model with the CudnnLSTM or CudnnGRU layer explicitly; now you only need the LSTM/GRU layer, and it will choose the cudnn kernel when the graph is placed on a GPU. Under the hood, we have grappler stages that rewrite the graph. They are supposed to optimize the graph when possible. When a grappler stage fails, it leaves the existing graph as is, and the unoptimized graph should still behave correctly (just slower).

In this particular case, the implementation_selector failed to optimize the function because it was run on a function that had already been optimized (we run several rounds of optimization). It is fine for it to fail at this stage; the function is simply left unchanged.

When you change the activation function (from tanh to sigmoid or any other function), we can no longer use the cudnn kernel (which uses tanh and is not configurable). The graph rewrite does not happen, since the cudnn kernel is not an option here. This means you force your graph to be executed with the normal kernel and cannot benefit from the speed of the cudnn kernel.

Thirdly, we expect the graph to be mathematically equivalent before and after the optimization, and we have unit tests that cover this. @Slacker-WY, if your model somehow does not train correctly, you should probably check the model structure. Btw, since you previously switched to the sigmoid activation function, did it improve the accuracy?
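To make the kernel selection described above more concrete, here is a minimal sketch of the argument-level conditions under which a Keras LSTM layer can use the cudnn kernel. The helper `cudnn_blockers` is hypothetical (it is not a TensorFlow API), and the list of conditions is an assumption based on the tf.keras.layers.LSTM documentation rather than anything stated in this thread; the documentation also lists conditions on input masking that are not modelled here.

```python
# Hypothetical helper, NOT part of TensorFlow. The required values below are an
# assumption taken from the tf.keras.layers.LSTM documentation.
CUDNN_LSTM_REQUIREMENTS = {
    "activation": "tanh",
    "recurrent_activation": "sigmoid",
    "recurrent_dropout": 0.0,
    "unroll": False,
    "use_bias": True,
}


def cudnn_blockers(**layer_kwargs):
    """Return the names of the arguments that would rule out the cudnn kernel."""
    blockers = []
    for name, required in CUDNN_LSTM_REQUIREMENTS.items():
        # Arguments that are not passed keep their (cudnn-compatible) default.
        if layer_kwargs.get(name, required) != required:
            blockers.append(name)
    return blockers


print(cudnn_blockers())                      # []
print(cudnn_blockers(activation="sigmoid"))  # ['activation']
```

With the default arguments nothing blocks the fast kernel, whereas switching the activation to sigmoid does, which would be consistent with the slower training reported elsewhere in this thread.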

Based on all this, here are some personal thoughts on TF 2.0, with hope that they can form part of all of the feedback TF developers are getting which may help guide development/release strategy:

For the moment, I am going to stick with my source-compiled 2.0b1 installation which does not have Eager execution enabled by default. To be honest, I feel like Google is pushing this feature forward (for obvious (and arguable) user-friendliness and pytorch-competing reasons) a bit too fast, when you consider the bugs that remain and the cost (plus, at some points, blurriness) of optimizing code once you are done with the bare design (for which Eager is rather helpful - although it really was not that painful to use session.run in 0.x and 1.x versions…).

I really appreciate the effort to clean up the package in 2.0, and although I was reluctant to use tf.keras so extensively, I have to admit it comes with a lot of great mechanics. The documentation (I am talking about the docstrings - the new tutorials on the website are very nice), however, is not yet well adjusted (again, it is normal for this to take time on such a huge project, but why not wait a bit longer before making 2.0 the norm?), and the work to preserve some compatibility between versions inevitably creates some confusion, although the effort here is greatly appreciated.

Finally, the (superficial?) multiplication of engines to create backend graphs (tf.function with and without autograph; tf.keras.backend.function; tf.keras.Model which I guess makes use of the previous) does not help make it clear which to prefer, nor how and when to optimize code. For example, I recently developed a specific kind of layer I found in the literature, and did it by subclassing tf.keras.layers.Layer. Now, if I decorate the call method with tf.function, I get a very significant runtime speedup when feeding it eager tensors and such during development; however, when I build a tf.keras.Model using one or more such layers, the tf.function decoration no longer has any effect on runtime. On the one hand, this is great in the sense that I seemingly do not need to think about decorating my code. On the other hand, this creates even more confusion as to when to use tf.function and when not to (in addition to questions as to what should be optimized to avoid overheads due to some suboptimal conversions within Autograph, as reported in the academic paper which presents it)…

In the end, I feel that while the entry cost of TF might have decreased thanks to Eager and keras, it is getting even harder than in 0.x to gain a clear understanding of the lower-level mechanics and the skill to optimize code, which I find really sad. The graph mechanics allow TF to outperform other frameworks in terms of performance, and while I get that it can be good to set them partly aside when sketching things out, it would be a shame to see TF lose some of its power “just” to capture some more users, even more so when it is probably already the predominant framework.

Can also confirm. Switching LSTM activation to “sigmoid” stopped the “skipping optimization” issue, but “Unsupported type: 21” appears in that case.

Further, by switching to sigmoid the model trains about half as fast. However, leaving it at the default tanh does not seem to affect validation convergence (backprop is still working).

I’m running tensorflow-gpu 2.0.0-beta1 on Windows 10 with a 2080 Ti.

This is what prints when I set LSTM activation to tanh (the default):

2019-07-05 19:20:02.138247: W tensorflow/core/grappler/optimizers/implementation_selector.cc:199] Skipping optimization due to error while loading function libraries: Invalid argument: Functions '__inference___backward_cudnn_lstm_374_550_specialized_for_Nadam_gradients_recurrent1_StatefulPartitionedCall_grad_StatefulPartitionedCall_at___inference_keras_scratch_graph_2911' and '__inference___backward_standard_lstm_974_1476' both implement 'lstm_3f0d94f5-99f8-4499-8983-151b467a13d9' but their signatures do not match.

Hi all:

This warning message is a red herring and can be ignored. It is raised when we do the implementation selection for RNN based on the availability of GPU for cudnn kernel. It is already suppressed in https://github.com/tensorflow/tensorflow/commit/d8379699d3cf5e951e03e70fcc5335726955f260.

Btw, you shouldn’t switch to another activation function just to avoid this warning.

Constant folding might be some other issue, but should affect how the final graph.

All the warning/error messages here come from the graph rewrite stage, which is an optimization process. If the optimization step fails, the unoptimized graph is used instead, and it is still a valid one.
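The fallback behaviour described in this comment can be illustrated with a toy sketch. Everything below is hypothetical (the function names, the list-of-strings "graph", the error type); it is not how grappler is implemented, only an illustration of "a failing pass logs a warning and leaves the graph as is" when passes are run for several rounds.

```python
import logging

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("toy_optimizer")


def run_passes(graph, passes, rounds=2):
    """Apply each pass for several rounds; a failing pass leaves the graph unchanged."""
    for _ in range(rounds):
        for optimize in passes:
            try:
                graph = optimize(graph)
            except ValueError as err:
                # The previous graph is kept, so the result is still valid.
                log.warning("Skipping optimization due to error: %s", err)
    return graph


def select_implementation(graph):
    # Toy pass: swaps a generic op for a faster one and refuses to run twice.
    if "fast_lstm" in graph:
        raise ValueError("function was already optimized")
    return ["fast_lstm" if op == "lstm" else op for op in graph]


result = run_passes(["embedding", "lstm", "dense"], [select_implementation])
print(result)  # ['embedding', 'fast_lstm', 'dense']
```

In this toy version the second round fails and logs a warning, yet the graph produced by the first round is returned untouched, which mirrors why the warning is described as harmless.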

@durandg12, ah, thanks for the notice. It seems that my change only made it into 1.15 and barely missed the 2.0 branch cut. I guess it will be released in 2.1, which will come out very soon. For now you can safely ignore the warning message.
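For readers who would rather hide the message than ignore it, one option is TensorFlow's TF_CPP_MIN_LOG_LEVEL environment variable. This is a sketch, not something suggested in the thread, and it is a blunt tool: it filters every INFO and WARNING message from the C++ runtime, not only this one.

```python
import os

# Must be set before `import tensorflow`. "2" filters out INFO and WARNING
# messages emitted by TensorFlow's C++ runtime; errors remain visible.
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

# import tensorflow as tf  # import only after the variable has been set
```

The same effect can be obtained by exporting the variable in the shell before launching Python.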

I installed tensorflow-gpu==2.0.0-rc0, but the issue still exists.

I ran the code (corrected to use the proper loss function) again in two separate virtual environments (still on the same computer), one with TF 1.14 (installed from pip), the other with TF 2.0b1 compiled from source (from the current r2.0 branch). Both installations include GPU support, but I ran tests with and without the GPU. Additionally, Eager execution is by default not enabled on either of those installations (I do not get why this is the case in TF 2.0b1, but that actually served well), so I tested the behaviours with and without it. Spoiler alert: Eager execution unsurprisingly appears to be at fault.

Initial issue (is definitely weird)

The “skipping optimization” warning does not show up in either of these two installations. So, could it be that there is some form of mismatch between the pre-compiled version’s configuration and my (and @Slacker-WY’s) system?

Convergence issues (are solved)

Using Adam optimizer instead of SGD solves convergence issues.

Using sigmoid activation for the GRU layers instead of the default tanh does not appear to change much; depending on the initial set of weights, it may result in faster or slower convergence speed (as measured by the accuracy reached on the test set after the first training epoch, using the same initial weights and training samples’ ordering) and does not alter running times in a significant manner.
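As a side note on the convergence point, the comment does not say which loss replaced 'mse'. Assuming it was binary cross-entropy (an assumption, not confirmed here), the small calculation below sketches why that choice can matter with a sigmoid output: for a confidently wrong prediction, the MSE gradient with respect to the pre-activation is scaled down by the sigmoid derivative, while the cross-entropy gradient is not.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def grad_mse(z, y):
    # d/dz of (sigmoid(z) - y)**2
    p = sigmoid(z)
    return 2.0 * (p - y) * p * (1.0 - p)


def grad_bce(z, y):
    # d/dz of -[y*log(p) + (1 - y)*log(1 - p)] with p = sigmoid(z)
    return sigmoid(z) - y


# Assumed example values: a confidently wrong prediction for a positive label.
z, y = -4.0, 1.0
print(abs(grad_mse(z, y)), abs(grad_bce(z, y)))
```

The first number printed is much smaller than the second, so plain SGD on the MSE loss takes much smaller steps in exactly the cases where the model is most wrong.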

Eager (breaks everything)

When Eager execution is enabled (which is not the default on either of my two installations):

- the constant folding error shows up at each and every training step, whatever the rest of the setup details

- there seems to be a memory leak, as I see my RAM usage increase throughout training (around +20M per training step, which stacks up to be a lot); this increase also occurs when running evaluation

- using the GPU increases training time by a factor of 20~25 compared with using the CPU, whereas when Eager is not enabled, using the GPU decreases training time by around 40 % in TF 2.0b1 and does not change much in TF 1.14 (in 2.0b1, with tanh GRU activation: approx. 1 s/step without GPU, 25 s/step with GPU and Eager, and 620 ms/step with GPU but without Eager)

@qlzh727, you mentioned that the constant folding error (which appears to be at the core of the issues I am now reporting) is being dealt with through other issues, but can you confirm that there is some actual activity on the developer side? I know TensorFlow is a huge project and that tracking and fixing bugs, then integrating those fixes, is bound to take a long time sometimes, but there has not been much activity over the past few weeks on the GitHub issues I found (and sometimes participated in) about this problem, and it seems to have been hard to get past the barrier of “front-row” issue readers/testers (which was also the case for the present ticket), so it would be reassuring to know that people are actually working on it.