tensorflow: Tensorflow2.0 distributed training gives error :- A non-DistributedValues value 8 cannot be reduced with the given reduce op ReduceOp.SUM.

Getting the error when i am running distributed training as described in this tutorial (https://colab.research.google.com/github/tensorflow/docs/blob/r2.0rc/site/en/r2/guide/distribute_strategy.ipynb#scrollTo=IlYVC0goepdk)

System information

- OS Platform: - Linux Ubuntu 16.04):

- TensorFlow installed from (source or binary): binary

- TensorFlow version: 2.0-beta

- Python version: 3.7

- Bazel version (if compiling from source):

- GCC/Compiler version (if compiling from source):

- CUDA/cuDNN version: 10.01

- GPU model and memory: p100, 16GB

@tf.function

def train_step(dist_inputs):

def step_fn(inputs):

features, labels = inputs

with tf.GradientTape() as tape:

logits = model(features)

#print(logits)

cross_entropy = tf.nn.softmax_cross_entropy_with_logits(

logits=logits, labels=labels)

loss = tf.reduce_sum(cross_entropy) * (1.0 / global_batch_size)

grads = tape.gradient(loss, model.trainable_variables)

optimizer.apply_gradients(list(zip(grads, model.trainable_variables)))

return cross_entropy

per_example_losses = mirrored_strategy.experimental_run_v2(

step_fn, args=(dist_inputs,))

mean_loss = mirrored_strategy.reduce(

tf.compat.v2.distribute.ReduceOp.MEAN, per_example_losses, axis=0)

return mean_loss

I am getting this error while running abobe distributed code. `ValueError: A non-DistributedValues value 8 cannot be reduced with the given reduce op ReduceOp.SUM.

But it works when I use tf.distribute.ReduceOp.SUM instead of tf.distribute.ReduceOp.MEAN `

About this issue

- Original URL

- State: closed

- Created 5 years ago

- Comments: 23 (8 by maintainers)

Hi, @samuelmat19 You can still train your model on distributed GPU, I am able to train using ReduceOp.SUM only but after that, you have to divide by global batch size you will get the same value which ReduceOp.mean would give.

Hi @gadagashwini

It works on colab because you are not running on multiple GPU, ReduceOp.MEAN is working when I am running on a single GPU, but not working when I am running on multiple GPU.

I also have this bug. Anyone gets proper solution or fixes yet?

@CheungZeeCn @akanyaani

The above code run perfectly in

3-GPU configuration The code fails when theglobal_batch_sizeis perfectly dividable by no.of GPUs.For example, if the no. of GPUs is 4. with global_batch_size 20. Each replica will have batch size of 5 each. This will fail with error

ValueError: A non-DistributedValues value 5 cannot be reduced with the given reduce op ReduceOp.SUM.If 3-GPU batch size across replicas is 7, 7, 6. which will train perfectly

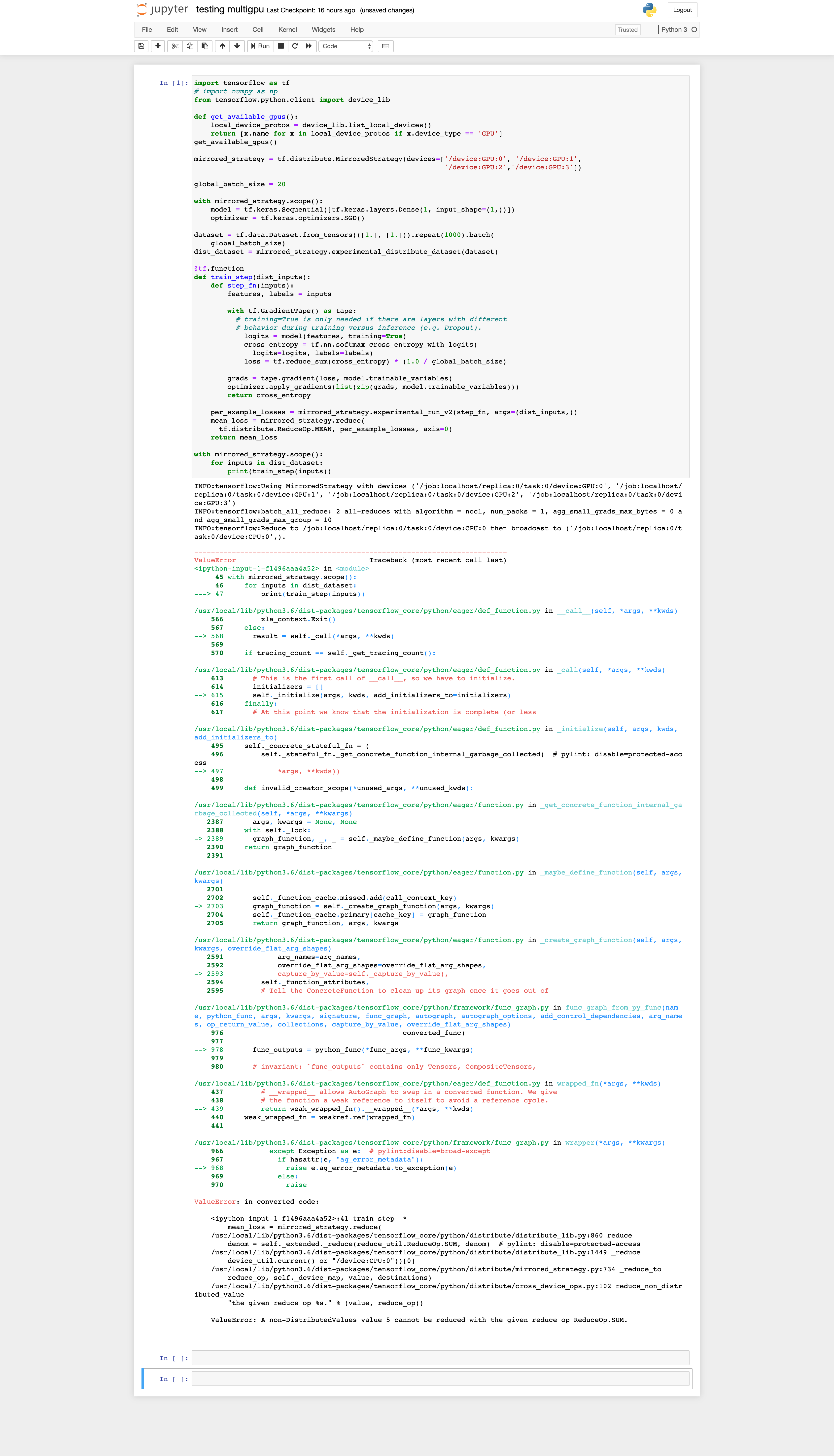

Screenshot of code running in 3 and 4 GPU configuration

3-GPU4-GPU