tensorflow: TensorArray objects improperly handled as tf.function arguments or return values


System information

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): Yes

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Linux, Ubuntu 16.04

- TensorFlow installed from (source or binary): tensorflow-gpu from binary

- TensorFlow version (use command below): 2.0.0

- Python version: 3.6.7

- CUDA/cuDNN version: CUDA 10.0, cuDNN 7.6.0.64

- GPU model and memory: GeForce GTX 1080

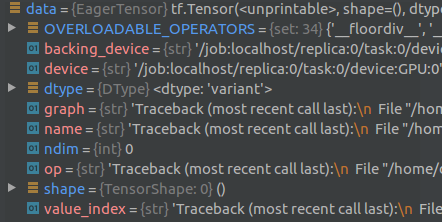

Describe the current behavior

When you return a TensorArray created inside a function decorated with tf.function, the returned object is not a TensorArray. It is a scalar variant tensor in an unusable state, printed as tf.Tensor(<unprintable>, shape=(), dtype=variant).

Any attempt to use this object raises an exception. See the attached image for more details.

For example, calling its concat method raises: AttributeError: 'tensorflow.python.framework.ops.EagerTensor' object has no attribute 'concat'

Describe the expected behavior

The returned object should be a valid TensorArray instance, as it is when only eager-mode functions are used. In that case the object prints as <tensorflow.python.ops.tensor_array_ops.TensorArray object at 0x7f73b0386208>.

The code works fine if all functions run in eager mode. It also works if you concat the TensorArray before returning it (see the code example).

Code to reproduce the issue

```python
import tensorflow as tf


@tf.function
def accumulate_no_error_graph(d):
    arr = tf.TensorArray(tf.float32, num_rows)
    for i in range(num_rows):
        arr = arr.write(i, d[i])
    # Concatenating inside the graph returns an ordinary dense tensor.
    return arr.concat()


def accumulate_no_error_eager(d):
    arr = tf.TensorArray(tf.float32, num_rows)
    for i in range(num_rows):
        arr = arr.write(i, d[i])
    # Without tf.function, the TensorArray itself can be returned.
    return arr


@tf.function
def accumulate_error_graph(d):
    arr = tf.TensorArray(tf.float32, num_rows)
    for i in range(num_rows):
        arr = arr.write(i, d[i])
    # Returning the TensorArray itself from a tf.function is what fails.
    return arr


num_rows = 10

a = tf.random.uniform([num_rows, 2])

# Works well
data = accumulate_no_error_eager(a)
print(f'From eager: {data}')

data = accumulate_no_error_graph(a)
print(f'From Graph with concat: {data}')

# Doesn't work!
data = accumulate_error_graph(a)
print(f'From Graph no concat: {data}')
```

Other info / logs

Output of the code:

```
From eager: <tensorflow.python.ops.tensor_array_ops.TensorArray object at 0x7ff67c5ac470>
From Graph with concat: [0.6765479 0.28778458 0.85561883 0.41792393 0.667024 0.35702074
 0.33666503 0.6575141 0.05998921 0.06930244 0.05852807 0.65689635
 0.4156996 0.39695132 0.78036475 0.79794145 0.96339357 0.49462914
 0.885311 0.04960382]
Traceback (most recent call last):
ValueError: Tensorflow type 21 not convertible to numpy dtype.
```

About this issue


- State: closed

- Created 5 years ago

- Reactions: 5

- Comments: 29 (8 by maintainers)

TensorArrays have completely different representations in graph and eager modes. Inside graphs (tf.function), a TensorArray is a `DT_VARIANT` tensor wrapping a `vector<Tensor*>`, whereas in eager mode it is just a Python list of EagerTensors, so the two are not really interoperable. This was done to avoid keeping a copy of the entire TensorArray when performing an operation in eager mode.

A workaround for now may be to return `arr.stack()` from the tf.function and unstack the returned value using `tf.TensorArray(tf.float32, num_rows).unstack(accumulate_error_graph(a))`. If we support returning TensorArrays from tf.function, this is what we might end up doing under the hood. Even if we don't support this, we should at least raise a better error message, perhaps at function tracing time.
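The stack/unstack workaround described above might look roughly like the following sketch. It reuses `num_rows = 10` and the `[num_rows, 2]` input from the reproduction; the function name `accumulate_stacked` is hypothetical. The graph function returns a plain dense tensor, and the caller rebuilds an eager TensorArray from it.

```python
import tensorflow as tf

num_rows = 10


@tf.function
def accumulate_stacked(d):
    # Build the TensorArray inside the graph exactly as in the repro...
    arr = tf.TensorArray(tf.float32, size=num_rows)
    for i in range(num_rows):
        arr = arr.write(i, d[i])
    # ...but hand back a dense tensor instead of the TensorArray itself.
    return arr.stack()


a = tf.random.uniform([num_rows, 2])

# Back in eager mode: a dense tensor of shape [num_rows, 2].
stacked = accumulate_stacked(a)

# Rebuild an eager TensorArray by splitting the stacked tensor along axis 0.
arr = tf.TensorArray(tf.float32, size=num_rows).unstack(stacked)

# The rebuilt TensorArray supports the usual methods, e.g. concat.
print(arr.concat())
```

The cost is one extra dense copy of the data at the function boundary. The same idea could plausibly be applied to tf.function arguments (pass `arr.stack()` in and call `unstack` inside the function), but the comment above only describes the return direction.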