serving: Memory leak when reloading model config

Bug Report

System information

- OS Platform and Distribution: Ubuntu 18.04
- TensorFlow Serving installed from: binary
- TensorFlow Serving version: 2.1.0
- Bug reproduced using the TFS Docker image: tensorflow/serving:2.1.0-gpu

Describe the Problem

We used the gRPC model management endpoint to load and unload models, specifically the HandleReloadConfigRequest RPC, which takes a ReloadConfigRequest. We loaded 22 copies of the same model, each 208 MiB in size, and then unloaded all of them.

With all the models loaded, docker stats reported about 10 GiB of memory usage. We expected usage to drop back close to the baseline once every model was unloaded.

However, after unloading them, usage was still 8.153 GiB. We made no changes to the TFS code.
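For scale, the models' on-disk size can be compared with the docker stats figures reported above. The sketch below does that arithmetic using only this report's numbers: 22 copies × 208 MiB, about 10 GiB while loaded, and 8.153 GiB after unloading. The function name is illustrative.

```python
MIB_PER_GIB = 1024


def summarize(num_models, model_mib, loaded_gib, unloaded_gib):
    """Compare the models' total on-disk size with observed container memory."""
    on_disk_gib = num_models * model_mib / MIB_PER_GIB
    return {
        "on_disk_gib": on_disk_gib,                      # total size of all model copies
        "released_gib": loaded_gib - unloaded_gib,       # memory freed by unloading
        "retained_fraction": unloaded_gib / loaded_gib,  # share still in use afterwards
    }


stats = summarize(num_models=22, model_mib=208, loaded_gib=10.0, unloaded_gib=8.153)
```

With these inputs, the models total 4,576 MiB (about 4.47 GiB) on disk. Only about 1.85 GiB of the roughly 10 GiB was released after unloading.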

Exact Steps to Reproduce

- Pull the Docker image:

    sudo docker pull tensorflow/serving:2.1.0-gpu

- Run the Docker image:

    sudo docker run -it --rm -v "/local/models:/models" -e MODEL_NAME=model_name tensorflow/serving:2.1.0-gpu

- In a separate window, install tensorflow_serving_api==2.1.0 (binary)
- Add the Python client-side gRPC code (shown below) to tensorflow_serving
- Load 22 models (different copies of the same model) using the Python client script
- Record memory usage
- Unload all models
- Record memory usage
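The "Record memory usage" steps can be scripted so that readings are comparable between runs. This is a minimal sketch, assuming Python 3.7+ and a container identifier taken from `docker ps`; the stock command above uses `--rm` without `--name`, so the name is assigned by Docker. It calls `docker stats --no-stream --format "{{.MemUsage}}"`, which prints something like `8.153GiB / 31.27GiB`, and converts the "used" part to bytes. The helper names are hypothetical. Depending on your setup, docker may need `sudo`.

```python
import re
import subprocess

# Binary units as printed by docker stats in the MemUsage column.
_UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}
# Captures the "used" number and unit before the " / limit" part.
_USAGE_RE = re.compile(r"^\s*([\d.]+)\s*([KMGT]?i?B)\s*/")


def parse_mem_usage(mem_usage):
    """Return the 'used' part of a docker stats MemUsage string, in bytes."""
    match = _USAGE_RE.match(mem_usage)
    if not match or match.group(2) not in _UNITS:
        raise ValueError("unrecognized MemUsage value: %r" % mem_usage)
    value, unit = match.groups()
    return float(value) * _UNITS[unit]


def record_memory_usage(container):
    """Take a single docker stats sample for `container` and return bytes used."""
    out = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{.MemUsage}}", container],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return parse_mem_usage(out)
```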

Source code / logs

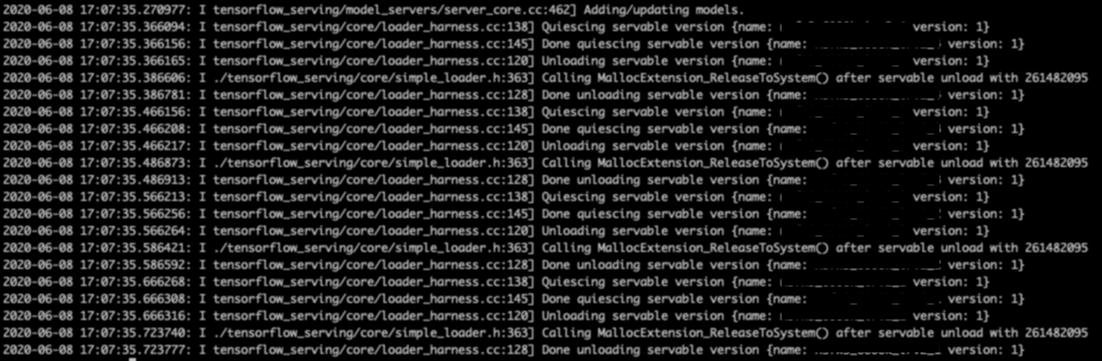

Server side logs

gRPC Client-Side Code

import grpc

from tensorflow_serving.apis import model_management_pb2
from tensorflow_serving.apis import model_service_pb2_grpc
from tensorflow_serving.config import model_server_config_pb2

# Replace with the address of your server (TF Serving's default gRPC port is 8500).
server_address = "0.0.0.0:1234"


def handle_reload_config_request(stub):
    # Build a ModelServerConfig whose model_config_list is empty. The new
    # config replaces the old one, so every currently loaded model is unloaded.
    model_server_config = model_server_config_pb2.ModelServerConfig()
    config_list = model_server_config_pb2.ModelConfigList()
    model_server_config.model_config_list.CopyFrom(config_list)

    request = model_management_pb2.ReloadConfigRequest()
    request.config.CopyFrom(model_server_config)

    response = stub.HandleReloadConfigRequest(request)
    print("Response: %s" % response)


def run():
    with grpc.insecure_channel(server_address) as channel:
        stub = model_service_pb2_grpc.ModelServiceStub(channel)
        print("-------------Handle Reload Config Request--------------")
        handle_reload_config_request(stub)


if __name__ == '__main__':
    run()
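The script above only sends an empty model list, which covers the unload step. For the load step, the request needs one ModelConfig entry per copy. The sketch below builds that config in protobuf text format. It assumes each copy lives in its own directory, /models/model_<i> (a hypothetical layout), containing the usual numeric version subdirectories. build_load_config_text is an illustrative helper, not part of TensorFlow Serving.

```python
def build_load_config_text(base_path, num_copies, name_prefix="model"):
    """Return a ModelServerConfig in text format that loads num_copies models.

    Assumes copy i is stored at <base_path>/<name_prefix>_<i> (hypothetical layout).
    """
    entries = []
    for i in range(num_copies):
        name = "%s_%d" % (name_prefix, i)
        entries.append(
            "  config {\n"
            '    name: "%s"\n'
            '    base_path: "%s/%s"\n'
            '    model_platform: "tensorflow"\n'
            "  }\n" % (name, base_path, name)
        )
    return "model_config_list {\n" + "".join(entries) + "}\n"


# Usage with the client above (requires tensorflow_serving_api):
#   from google.protobuf import text_format
#   request = model_management_pb2.ReloadConfigRequest()
#   text_format.Parse(build_load_config_text("/models", 22), request.config)
#   stub.HandleReloadConfigRequest(request)
```

Generating text format keeps the helper free of protobuf dependencies. The same string could also be saved as a model config file.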

About this issue

- State: closed

- Created 4 years ago

- Comments: 23 (5 by maintainers)

Why? Can anyone explain why using jemalloc fixes this problem?

You could try using jemalloc via LD_PRELOAD to replace the default malloc; this may resolve the problem.
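The LD_PRELOAD suggestion can be applied to the Docker command from the reproduction steps. This is a minimal sketch with several assumptions. First, jemalloc must already be installed inside the image, for example in a derived image, because the stock tensorflow/serving image is not assumed to ship it. Second, the library path below is a guess that depends on the distro package. Third, the image name in the usage line is hypothetical.

```python
import shlex

# Assumed location of jemalloc inside the image; adjust to the installed package.
JEMALLOC_PATH = "/usr/lib/x86_64-linux-gnu/libjemalloc.so.2"


def docker_run_with_jemalloc(image, models_dir, model_name, jemalloc_path=JEMALLOC_PATH):
    """Build a docker run command that preloads jemalloc in the server process."""
    return [
        "docker", "run", "-it", "--rm",
        "-v", "%s:/models" % models_dir,
        "-e", "MODEL_NAME=%s" % model_name,
        # LD_PRELOAD makes the dynamic loader resolve malloc/free from jemalloc
        # before glibc, so allocations in the model server go through jemalloc.
        "-e", "LD_PRELOAD=%s" % jemalloc_path,
        image,
    ]


# Hypothetical derived image with jemalloc installed.
cmd = docker_run_with_jemalloc("my-serving-jemalloc:2.1.0-gpu", "/local/models", "model_name")
print(shlex.join(cmd))
```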

Thanks @thomasdhc! I can reproduce the problem with the updated steps. I am looking into the issue.

Hi @thomasdhc, it looks to me like the issue is caused by memory caching behavior in Docker. When I loaded and unloaded the model multiple times, the reported memory usage did not increase continuously. Specifically, if you limit the container's memory with ‘-m 2GB’ when starting the server, the models can be loaded and unloaded many times without problems.
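The test described in this comment separates a leak, where usage climbs on every load/unload cycle, from caching, where usage levels off. The sketch below implements that check. It assumes one memory reading is recorded after each unload, in GiB, in cycle order. The function name and the 0.1 GiB default tolerance are arbitrary choices for illustration.

```python
def grows_every_cycle(readings_gib, tolerance_gib=0.1):
    """Return True if post-unload memory rises by more than the tolerance on every cycle.

    readings_gib: memory usage recorded after each load/unload cycle, in order.
    A True result suggests a steady leak; False means usage levelled off at
    least once, which is consistent with caching rather than a leak.
    """
    if len(readings_gib) < 2:
        raise ValueError("need at least two cycles to compare")
    return all(
        later - earlier > tolerance_gib
        for earlier, later in zip(readings_gib, readings_gib[1:])
    )
```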

Hi @mihaimaruseac,

Yes, we were able to reproduce this with our own models as well as with models provided by TensorFlow.