spinnaker: Find image from Tags stage randomly fails with new AMIs

Issue Summary:

As part of our build pipeline, we build new AMIs with Packer in Jenkins. A Spinnaker pipeline with a cron trigger (every 15 minutes) deploys to our staging environment.

The AMIs are built in our “production” AWS account, then shared with and tagged in our “development” account, which is where our staging environment is deployed.

Occasionally, after we build a new AMI, our pipeline fails at a Find Image from Tags stage every time it searches for that image.

Cloud Provider(s):

AWS

Environment:

Installation on Spinnaker-Ubuntu-14.04-50 ami-6486dc73

```
spinnaker/trusty,now 0.83.0 all [installed]
spinnaker-clouddriver/trusty,now 2.78.0 all [installed,upgradable to: 2.84.2]
spinnaker-deck/trusty,now 2.1196.0 all [installed,automatic]
spinnaker-echo/trusty,now 1.570.0 all [installed,upgradable to: 1.571.1]
spinnaker-fiat/trusty,now 0.49.9 all [installed,upgradable to: 0.50.0]
spinnaker-front50/trusty,now 1.130.1 all [installed,upgradable to: 1.131.0]
spinnaker-gate/trusty,now 4.37.0 all [installed,upgradable to: 4.40.0]
spinnaker-igor/trusty,now 2.4.2 all [installed,upgradable to: 2.4.3]
spinnaker-orca/trusty,now 6.92.0 all [installed,upgradable to: 6.93.0]
spinnaker-rosco/trusty,now 0.107.0 all [installed,upgradable to: 0.108.0]
```

Feature Area:

Pipelines. Stage type: Find Image from Tags

Description:

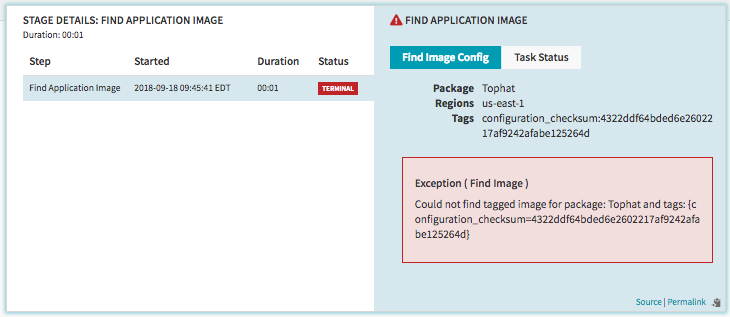

The stage error we see is:

```
Exception ( Find Image )

Could not find tagged image for package: Tophat and tags: {configuration_checksum=4322ddf64bded6e2602217af9242afabe125264d}
```
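When comparing failures, it can help to pull the package name and tags out of these messages programmatically. For example, the stage error above and the Orca log below quote different `configuration_checksum` values. The following is a minimal sketch, not part of Spinnaker. It assumes the message format is exactly the one quoted in this issue and that the tags are rendered in Java `Map.toString()` style (`{k1=v1, k2=v2}`). The function name `parse_find_image_error` is hypothetical.

```python
import re
from typing import Dict, Tuple

# Matches the message format quoted in this issue (assumed stable):
#   "Could not find tagged image for package: <pkg> and tags: {k1=v1, k2=v2}"
_PATTERN = re.compile(
    r"Could not find tagged image for package: (?P<package>\S+) "
    r"and tags: \{(?P<tags>[^}]*)\}"
)


def parse_find_image_error(message: str) -> Tuple[str, Dict[str, str]]:
    """Return (package, tags) parsed from a Find Image from Tags error message."""
    match = _PATTERN.search(message)
    if match is None:
        raise ValueError("not a Find Image from Tags error message")
    tags: Dict[str, str] = {}
    body = match.group("tags").strip()
    if body:
        # Assumption: entries are separated the way Java's Map.toString() does it.
        for pair in body.split(", "):
            key, _, value = pair.partition("=")
            tags[key] = value
    return match.group("package"), tags


if __name__ == "__main__":
    stage_error = (
        "Could not find tagged image for package: Tophat and tags: "
        "{configuration_checksum=4322ddf64bded6e2602217af9242afabe125264d}"
    )
    print(parse_find_image_error(stage_error))
```

Because the regex uses `search`, the same function also accepts the full Orca log line prefixed with `java.lang.IllegalStateException:`.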

Orca throws the following error:

```
2018-09-18 18:38:51.694 ERROR 7767 --- [ handlers-18] c.n.s.orca.q.handler.RunTaskHandler : [] Error running FindImageFromTagsTask for pipeline[01CQPZKC4HS2500PKXCPZRYB4R]

java.lang.IllegalStateException: Could not find tagged image for package: Tophat and tags: {configuration_checksum=c31586f20995f58d90aecb6a785753fcced61189}
at com.netflix.spinnaker.orca.clouddriver.tasks.image.FindImageFromTagsTask.execute(FindImageFromTagsTask.java:58)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler$handle$1$2.invoke(RunTaskHandler.kt:95)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler$handle$1$2.invoke(RunTaskHandler.kt:54)
at com.netflix.spinnaker.orca.q.handler.AuthenticationAware$sam$java_util_concurrent_Callable$0.call(AuthenticationAware.kt)
at com.netflix.spinnaker.security.AuthenticatedRequest.lambda$propagate$0(AuthenticatedRequest.java:94)
at com.netflix.spinnaker.orca.q.handler.AuthenticationAware$DefaultImpls.withAuth(AuthenticationAware.kt:49)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler.withAuth(RunTaskHandler.kt:54)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler$handle$1.invoke(RunTaskHandler.kt:94)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler$handle$1.invoke(RunTaskHandler.kt:54)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler$withTask$1.invoke(RunTaskHandler.kt:176)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler$withTask$1.invoke(RunTaskHandler.kt:54)
at com.netflix.spinnaker.orca.q.handler.OrcaMessageHandler$withTask$1.invoke(OrcaMessageHandler.kt:48)
at com.netflix.spinnaker.orca.q.handler.OrcaMessageHandler$withTask$1.invoke(OrcaMessageHandler.kt:32)
at com.netflix.spinnaker.orca.q.handler.OrcaMessageHandler$withStage$1.invoke(OrcaMessageHandler.kt:58)
at com.netflix.spinnaker.orca.q.handler.OrcaMessageHandler$withStage$1.invoke(OrcaMessageHandler.kt:32)
at com.netflix.spinnaker.orca.q.handler.OrcaMessageHandler$DefaultImpls.withExecution(OrcaMessageHandler.kt:67)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler.withExecution(RunTaskHandler.kt:54)
at com.netflix.spinnaker.orca.q.handler.OrcaMessageHandler$DefaultImpls.withStage(OrcaMessageHandler.kt:54)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler.withStage(RunTaskHandler.kt:54)
at com.netflix.spinnaker.orca.q.handler.OrcaMessageHandler$DefaultImpls.withTask(OrcaMessageHandler.kt:41)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler.withTask(RunTaskHandler.kt:54)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler.withTask(RunTaskHandler.kt:169)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler.handle(RunTaskHandler.kt:67)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler.handle(RunTaskHandler.kt:54)
at com.netflix.spinnaker.q.MessageHandler$DefaultImpls.invoke(MessageHandler.kt:36)
at com.netflix.spinnaker.orca.q.handler.OrcaMessageHandler$DefaultImpls.invoke(OrcaMessageHandler.kt)
at com.netflix.spinnaker.orca.q.handler.RunTaskHandler.invoke(RunTaskHandler.kt:54)
at com.netflix.spinnaker.orca.q.audit.ExecutionTrackingMessageHandlerPostProcessor$ExecutionTrackingMessageHandlerProxy.invoke(ExecutionTrackingMessageHandlerPostProcessor.kt:47)
at com.netflix.spinnaker.q.QueueProcessor$pollOnce$1$1.run(QueueProcessor.kt:82)
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
at java.lang.Thread.run(Thread.java:748)
```

When I look in the Redis cache for our development AWS account, the image exists and its tags exactly match what the error says it cannot find:

```
$ redis-cli -h spinnaker-redis.xxxxxx.0001.use1.cache.amazonaws.com get com.netflix.spinnaker.clouddriver.aws.provider.AwsProvider:images:attributes:aws:images:development:us-east-1:ami-<image id>
"{
  "architecture": "x86_64",
  "blockDeviceMappings": [
    {
      "deviceName": "/dev/sda1",
      "ebs": {
        "deleteOnTermination": true,
        "encrypted": false,
        "snapshotId": "snap-02c02fd43c65000ec",
        "volumeSize": 8,
        "volumeType": "gp2"
      }
    },
    {
      "deviceName": "/dev/sdb",
      "virtualName": "ephemeral0"
    },
    {
      "deviceName": "/dev/sdc",
      "virtualName": "ephemeral1"
    }
  ],
  "creationDate": "2018-09-17T15:56:46.000Z",
  "enaSupport": true,
  "hypervisor": "xen",
  "imageId": "ami-<image id>",
  "imageLocation": "<Production AWS account id>/App Tophat bump-th-authorization2018-09-17T15-41-57Z-00",
  "imageType": "machine",
  "name": "App Tophat bump-th-authorization2018-09-17T15-41-57Z-00",
  "ownerId": "<Production AWS account id>",
  "productCodes": [],
  "public": false,
  "rootDeviceName": "/dev/sda1",
  "rootDeviceType": "ebs",
  "sriovNetSupport": "simple",
  "state": "available",
  "tags": [
    {
      "key": "configuration_checksum",
      "value": "4322ddf64bded6e2602217af9242afabe125264d"
    }
  ],
  "virtualizationType": "hvm"
}"
```
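The manual check above can be scripted so it is quick to repeat the next time the stage fails. This is a minimal sketch under two assumptions. First, the key layout is inferred from the single `redis-cli` key shown above and may not hold for other Clouddriver versions. Second, the input is the raw cached value, for example as returned by a Redis client library, without the outer quoting that `redis-cli` adds. The helper names `image_cache_key` and `mismatched_tags` are hypothetical. The script only confirms what is in the cache. It does not reproduce Clouddriver's actual search, which also filters by package name.

```python
import json
from typing import Dict, Optional

# Prefix copied from the redis-cli key above (assumed, not a documented schema).
_KEY_PREFIX = (
    "com.netflix.spinnaker.clouddriver.aws.provider.AwsProvider"
    ":images:attributes:aws:images"
)


def image_cache_key(account: str, region: str, image_id: str) -> str:
    """Build the Redis key under which the image attributes appear to be cached."""
    return f"{_KEY_PREFIX}:{account}:{region}:{image_id}"


def mismatched_tags(cached_json: str, wanted: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Return wanted tags that are absent or differ in the cached image attributes.

    Maps each mismatched tag key to its cached value (None if the tag is absent).
    An empty result means every wanted tag matches the cache exactly.
    """
    attributes = json.loads(cached_json)
    cached = {tag["key"]: tag["value"] for tag in attributes.get("tags", [])}
    return {key: cached.get(key) for key, value in wanted.items() if cached.get(key) != value}


if __name__ == "__main__":
    print(image_cache_key("development", "us-east-1", "ami-<image id>"))
```

An empty result from `mismatched_tags` for the checksum in the stage error would confirm, as described above, that the cache contains a matching image even though the stage reports none.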

When we run into this bug, our workaround has been to build a new image the same way and restart the pipeline. Then, ideally, the stage passes.

Steps to Reproduce:

Cannot reproduce on demand.

Additional Details:

Screenshots:

Please let me know if you have any questions or need extra details.

Note: I have seen discussion of this bug in the Spinnaker Slack workspace, but the history has been erased.

About this issue

- State: closed

- Created 6 years ago

- Reactions: 7

- Comments: 42 (2 by maintainers)

This is still a major problem for us. It keeps happening no matter what we clean up or archive. We currently have only 500 AMIs, with matching references for those images in our Redis cache, but the stage still fails to find the tagged image.

We are still seeing this issue. @spinnakerbot Remove stale label

This issue is tagged as ‘stale’ and hasn’t been updated in 45 days, so we are tagging it as ‘to-be-closed’. It will be closed in 45 days unless updates are made. If you want to remove this label, comment: