bottleneck: Big queue OOM issue

Hi! I’m facing OOM issues while trying to add lot’s of jobs to the queue. Let’s say I have a list of 10M links. I need to visit each at allowed 50 requests per sec rate limit.

Here is what I’m doing:

const getData = (url) => {

const res = await fetch(url)

...

}

//I'm not utilizing 50 requests per sec here, but that's not the case

const limiter = new Bottleneck({

maxConcurrent: 10,

minTime: 100,

});

for (link of links) {

limiter.schedule(() => getData(link));

}

If links contains a considerably small amount of urls, everything works like a charm. But as we remember, links is supposed to contain 10M urls. As you may have guessed, I receive FATAL ERROR: CALL_AND_RETRY_LAST Allocation failed - JavaScript heap out of memory shortly after start (because I’m trying to add 10M of functions (but not the links) to the queue).

Another choice is to do like this:

for (link of links) {

await limiter.schedule(() => getData(link));

}

but it kills the whole idea of 50 requests per sec, as it adds the next url only after the previous one was fetched.

Am I doing it wrong? Is there any way to accomplish what I need? Can I just provide a worker function and a list of inputs, so Bottleneck won’t be trying to create a huge number of jobs at the same time, but will create a job for each input item only when it’s time comes (like here https://caolan.github.io/async/docs.html#queue for example)?

Thanks in advance

About this issue

- Original URL

- State: open

- Created 6 years ago

- Comments: 23 (14 by maintainers)

v2.18.1 has been released with these optimizations. It uses 40% less memory per job in the

QUEUEDstate. 🎉I’m still refactoring to enable new features such as #90 and that may yield more memory savings.

I’m still working on this. v2.15.3 fixes a few memory issues.

@SGrondin This is not directly related to this issue, but we’re having a similar memory issues with Probot apps after >2.9.0. I looked at the

limiter.on('debug')messages and can see events that claim it freed the memory, but when I take a heap snapshot I can see that all the objects are still in memory:This is how we’re implementing the rate limiter in probot:

https://github.com/probot/probot/blob/58559ff22d4524a32fa099db1483fd2c9ac54ca0/src/github/rate-limiting.ts

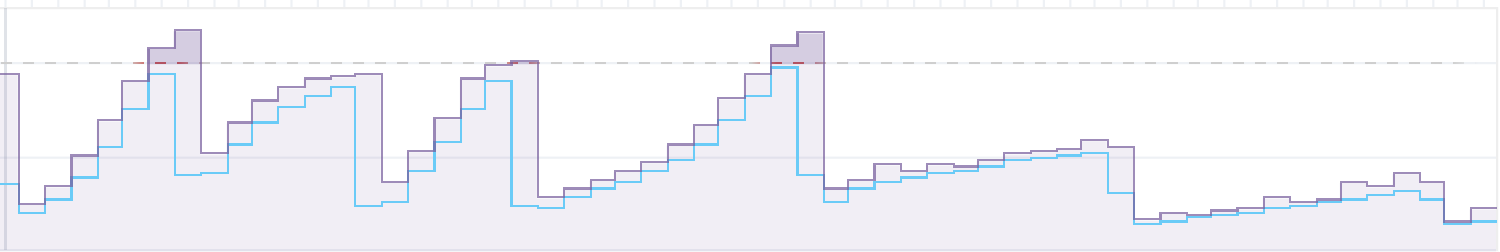

Basically it’s supposed to schedule a call to GitHub once per second. The memory grows pretty consistently based on how much activity there is on the instance (it grows a lot slower on holidays and weekends):

Any tips here? I tried looking through release notes for 2.9.0, but nothing looked like it should cause this change in behavior.

@tcbyrd Did v2.13.1 remove the need to pin against v2.9.0?

@eawer Thank you for the repo, it allowed me really drill down on the issue. It’s a different one from @tcbyrd 's. I believe I have identified what’s going on and it’s not a memory leak: the code is simply not efficient enough. I’ve narrowed down the main cause to the extensive use of

apply(). In my experiments, everyapplyI replaced reduced the noticeably memory usage, and they’re all in the hot path. After replacing about 10 of them, I was able to run your test into the millions of queued items.applyshort-circuits some of V8’s optimizations and each use will create a new closure that captures its scope. That was always the intended behavior, and Bottleneck has been usingapplyto launch jobs since the very first version, but newer versions of JS have changed the balance between code clarity and efficiency, andapplyis no longer on the winning side for a project like this one. Replacing them all isn’t very complicated (and has been on my mind for several months), but it will require significant testing/QA, so it’s something I’m not going to rush. I’m aiming for “late December” to release this.@SGrondin Thanks! I’m unleashing the chaos moneky on them now! I’ll have some data for your tomorrow.

Perfect. I’ll have those branches up tonight. I’m hoping I can get the hotfix released first, afterwards I can take whatever time I need to find a reliable way to reproduce it locally. Then I’ll turn it into a test using a child process.

Hi,

Your code will use a lot less memory if you do this instead:

That way JS doesn’t need to create a closure and keep 50M copies of your current state in memory.

Please let me know if that fixes it!