scikit-learn: The fit performance of LinearRegression is sub-optimal

It seems that the performance of Linear Regression is sub-optimal when the number of samples is very large.

sklearn_benchmarks measures a speedup of 48 compared to an optimized implementation from scikit-learn-intelex on a 1000000x100 dataset. For a given set of parameters and a given dataset, we compute the speed-up time scikit-learn / time sklearnex. A speed-up of 48 means that sklearnex is 48 times faster than scikit-learn on the given dataset.

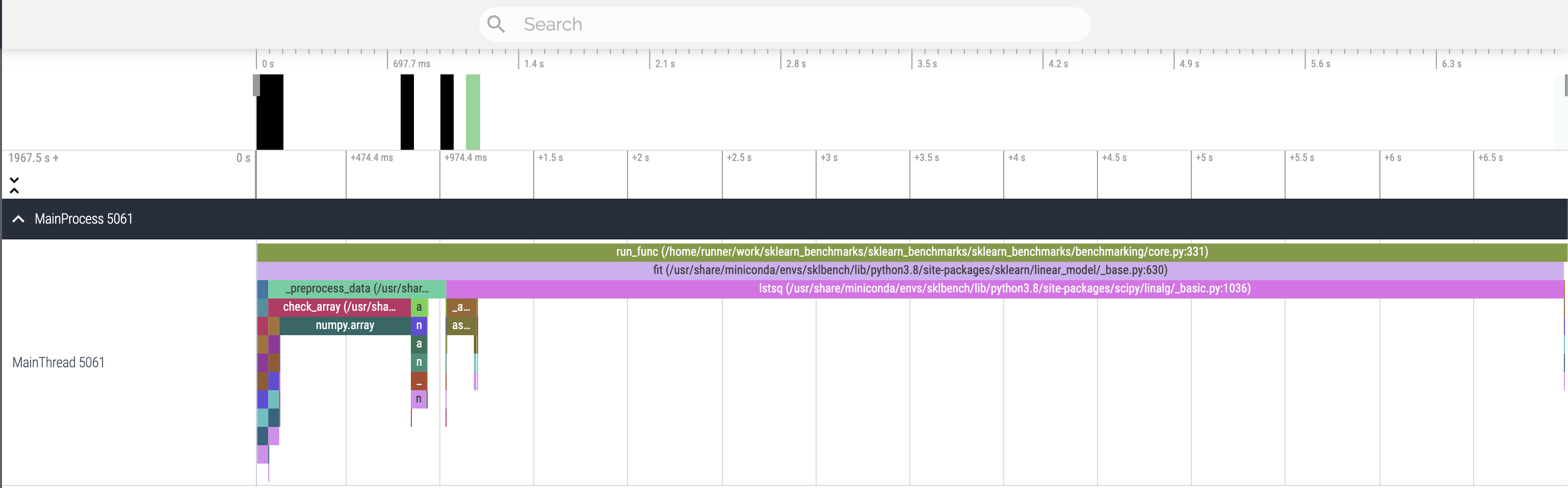

Profiling allows a more detailed analysis of the execution of the algorithm. We observe that most of the execution time is spent in the lstsq solver of scipy.

The profiling reports of sklearn_benchmarks can be viewed with Perfetto UI.

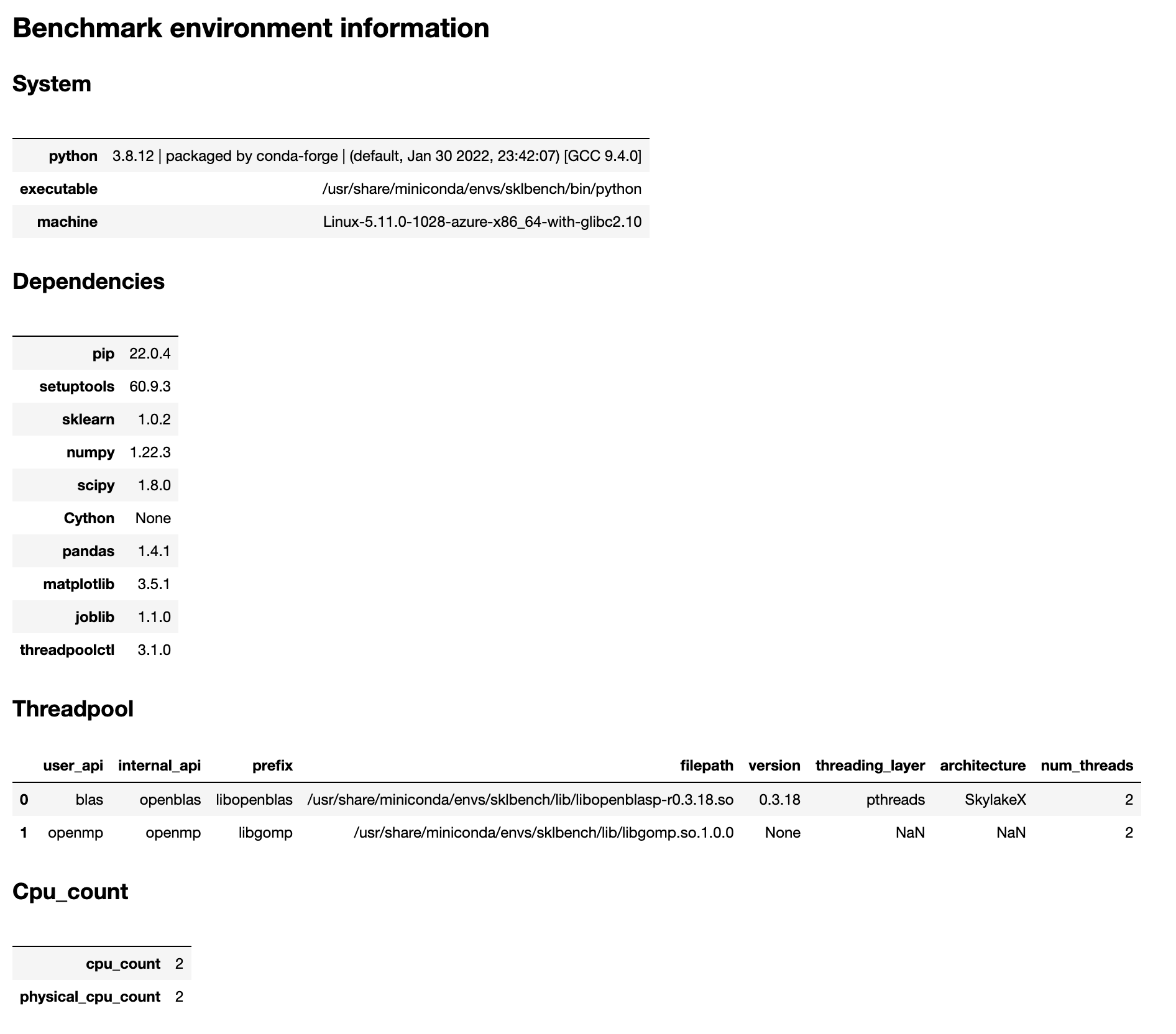

See benchmark environment information

It seems that the solver could be better chosen when the number of samples is very large. Perhaps Ridge’s solver with a zero penalty could be chosen in this case. On the same dimensions, it shows better performance.

Speedups can be reproduced with the following code:

conda create -n lr_perf -c conda-forge scikit-learn scikit-learn-intelex numpy jupyter

conda activate lr_perf

from sklearn.linear_model import LinearRegression as LinearRegressionSklearn

from sklearnex.linear_model import LinearRegression as LinearRegressionSklearnex

from sklearn.datasets import make_regression

import time

import numpy as np

X, y = make_regression(n_samples=1_000_000, n_features=100, n_informative=10)

def measure(estimator, X, y, n_executions=10):

times = []

while len(times) < n_executions:

t0 = time.perf_counter()

estimator.fit(X, y)

t1 = time.perf_counter()

times.append(t1 - t0)

return np.mean(times)

mean_time_sklearn = measure(

estimator=LinearRegressionSklearn(),

X=X,

y=y

)

mean_time_sklearnex = measure(

estimator=LinearRegressionSklearnex(),

X=X,

y=y

)

speedup = mean_time_sklearn / mean_time_sklearnex

speedup

About this issue

- Original URL

- State: open

- Created 2 years ago

- Reactions: 4

- Comments: 23 (23 by maintainers)

It is what I found reading your comment afterwards 😃 Hoping that

LinearRegressioncan forgive me for this comment 😃Regarding using the LAPACK

_gesvwithnp.linagl.solve:It is then as efficient than LBFGS.

Indeed, one can get a good speedup (x13 on my machine) with

Ridge(alpha=0).Arguably,

Ridgeshould always be preferred instead ofLinearRegressionanyway.In particular: https://github.com/oneapi-src/oneDAL/tree/master/cpp/daal/src/algorithms/linear_regression

EDIT which is called by (based on a

py-spy --nativetrace output by Julien):this C++ call, comes from the following Python wrapper:

https://github.com/intel/scikit-learn-intelex/blob/f542d87b3d131fd839a2d4bcb86c849d77aae2b8/daal4py/sklearn/linear_model/_linear.py#L60

Note the use of the

"defaultDense"and"qrDense"method names.