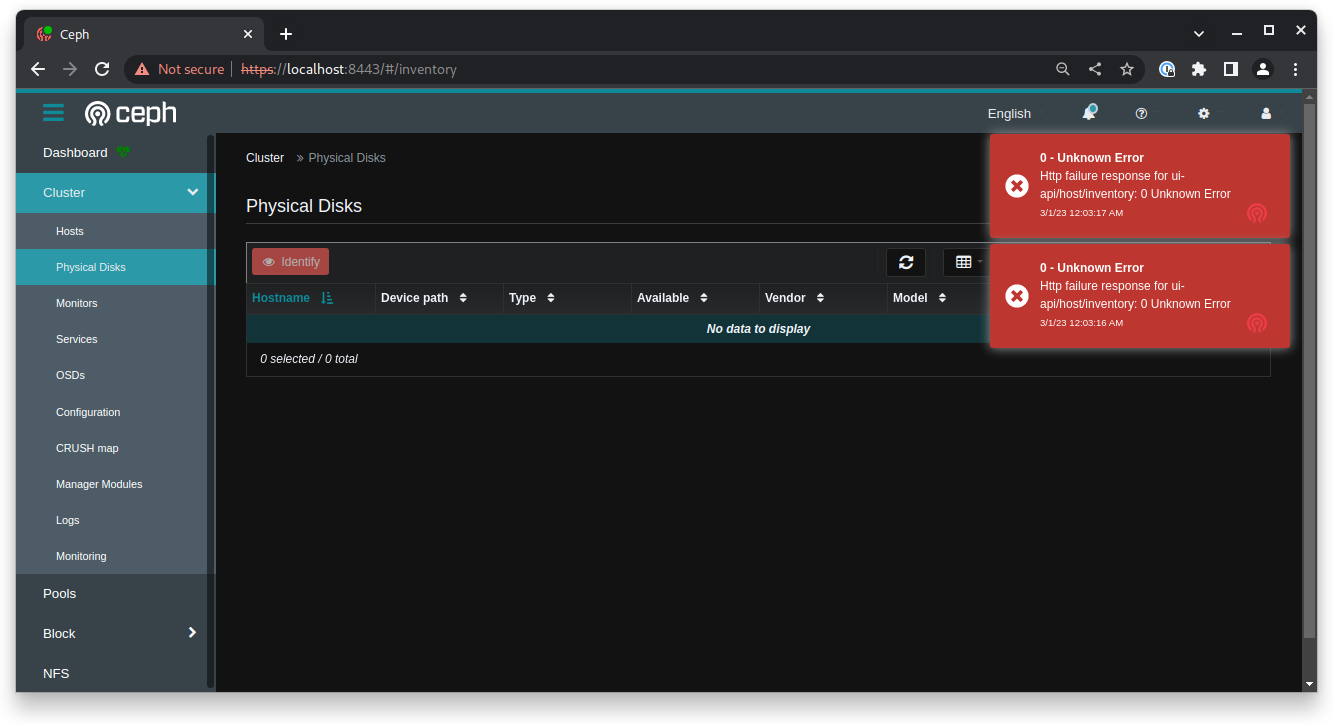

rook: dashboard will not load inventory

Is this a bug report or feature request?

- Bug Report

Deviation from expected behavior:

rook-ceph-mgr-a-896487475-2rmnr mgr debug 2023-02-28T23:58:17.739+0000 7fe2d15e3700 0 [rook ERROR rook.rook_cluster] No storage class exists matching configured Rook orchestrator storage class which currently is <local>. This storage class can be set in ceph config (mgr/rook/storage_class)

rook-ceph-mgr-a-896487475-2rmnr mgr debug 2023-02-28T23:58:17.755+0000 7fe2d15e3700 0 [rook ERROR orchestrator._interface] No storage class exists matching name provided in ceph config at mgr/rook/storage_class

rook-ceph-mgr-a-896487475-2rmnr mgr Traceback (most recent call last):

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/orchestrator/_interface.py", line 125, in wrapper

rook-ceph-mgr-a-896487475-2rmnr mgr return OrchResult(f(*args, **kwargs))

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/rook/module.py", line 229, in get_inventory

rook-ceph-mgr-a-896487475-2rmnr mgr discovered_devs = self.rook_cluster.get_discovered_devices(host_list)

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/rook/rook_cluster.py", line 713, in get_discovered_devices

rook-ceph-mgr-a-896487475-2rmnr mgr storage_class = self.get_storage_class()

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/rook/rook_cluster.py", line 709, in get_storage_class

rook-ceph-mgr-a-896487475-2rmnr mgr raise Exception('No storage class exists matching name provided in ceph config at mgr/rook/storage_class')

rook-ceph-mgr-a-896487475-2rmnr mgr Exception: No storage class exists matching name provided in ceph config at mgr/rook/storage_class

rook-ceph-mgr-a-896487475-2rmnr mgr debug 2023-02-28T23:58:17.791+0000 7fe2d15e3700 0 [dashboard ERROR exception] Internal Server Error

rook-ceph-mgr-a-896487475-2rmnr mgr Traceback (most recent call last):

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/dashboard/services/exception.py", line 47, in dashboard_exception_handler

rook-ceph-mgr-a-896487475-2rmnr mgr return handler(*args, **kwargs)

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/lib/python3.6/site-packages/cherrypy/_cpdispatch.py", line 54, in __call__

rook-ceph-mgr-a-896487475-2rmnr mgr return self.callable(*self.args, **self.kwargs)

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/dashboard/controllers/_base_controller.py", line 258, in inner

rook-ceph-mgr-a-896487475-2rmnr mgr ret = func(*args, **kwargs)

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/dashboard/controllers/orchestrator.py", line 33, in _inner

rook-ceph-mgr-a-896487475-2rmnr mgr return method(self, *args, **kwargs)

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/lib64/python3.6/contextlib.py", line 52, in inner

rook-ceph-mgr-a-896487475-2rmnr mgr return func(*args, **kwds)

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/dashboard/controllers/host.py", line 506, in inventory

rook-ceph-mgr-a-896487475-2rmnr mgr return get_inventories(None, refresh)

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/dashboard/controllers/host.py", line 251, in get_inventories

rook-ceph-mgr-a-896487475-2rmnr mgr for host in orch.inventory.list(hosts=hosts, refresh=do_refresh)]

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/dashboard/services/orchestrator.py", line 38, in inner

rook-ceph-mgr-a-896487475-2rmnr mgr raise_if_exception(completion)

rook-ceph-mgr-a-896487475-2rmnr mgr File "/usr/share/ceph/mgr/orchestrator/_interface.py", line 228, in raise_if_exception

rook-ceph-mgr-a-896487475-2rmnr mgr raise e

rook-ceph-mgr-a-896487475-2rmnr mgr Exception: No storage class exists matching name provided in ceph config at mgr/rook/storage_class

rook-ceph-mgr-a-896487475-2rmnr mgr debug 2023-02-28T23:58:17.795+0000 7fe2d15e3700 0 [dashboard ERROR request] [::ffff:127.0.0.1:36992] [GET] [500] [0.523s] [admin] [513.0B] /ui-api/host/inventory

rook-ceph-mgr-a-896487475-2rmnr mgr debug 2023-02-28T23:58:17.795+0000 7fe2d15e3700 0 [dashboard ERROR request] [b'{"status": "500 Internal Server Error", "detail": "The server encountered an unexpected condition which prevented it from fulfilling the request.", "request_id": "71801d2f-fb67-41f9-ac63-cf39936fe400"}
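For triage, the failing dashboard requests can be pulled out of a long mgr log with a small filter. The following is only a sketch: the regular expression is inferred from the request lines shown above (client, method, status, duration, user, size, path), and the names `REQUEST_RE` and `failed_requests` are made up for this example and are not part of Ceph or Rook.

```python
import re

# Pattern inferred from lines like:
#   [dashboard ERROR request] [::ffff:127.0.0.1:36992] [GET] [500] [0.523s] [admin] [513.0B] /ui-api/host/inventory
REQUEST_RE = re.compile(
    r"\[dashboard (?P<level>\w+) request\] "
    r"\[(?P<client>[^\]]+)\] "
    r"\[(?P<method>[A-Z]+)\] "
    r"\[(?P<status>\d{3})\] "
    r"\[(?P<seconds>[\d.]+)s\] "
    r"\[(?P<user>[^\]]*)\] "
    r"\[(?P<size>[^\]]+)\] "
    r"(?P<path>\S+)"
)


def failed_requests(lines):
    """Yield (method, status, path) for dashboard requests that ended in a 5xx status."""
    for line in lines:
        match = REQUEST_RE.search(line)
        if match and match.group("status").startswith("5"):
            yield match.group("method"), int(match.group("status")), match.group("path")
```

Feeding it the output of `kubectl -n <namespace> logs <pod name>` line by line should list every request that returned a server error, which makes it easier to see whether `/ui-api/host/inventory` is the only affected endpoint.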

Expected behavior:

The dashboard should load the inventory.

How to reproduce it (minimal and precise):

I don’t know, but these are all the manifests used to create the cluster: https://github.com/uhthomas/automata/tree/0f3a179552717c8f59e2abc76ed52eab124e6d01/k8s/unwind/rook_ceph

File(s) to submit:

- Cluster CR (custom resource), typically called cluster.yaml, if necessary

Logs to submit:

- Operator’s logs, if necessary

- Crashing pod(s) logs, if necessary

To get logs, use kubectl -n <namespace> logs <pod name>

When pasting logs, always surround them with backticks or use the insert code button from the GitHub UI. Read GitHub documentation if you need help.

Cluster Status to submit:

- Output of krew commands, if necessary

To get the health of the cluster, use kubectl rook-ceph health

To get the status of the cluster, use kubectl rook-ceph ceph status

For more details, see the Rook Krew Plugin

Environment:

- OS (e.g. from /etc/os-release):

NAME="Talos"

ID=talos

VERSION_ID=v1.3.5

PRETTY_NAME="Talos (v1.3.5)"

HOME_URL="https://www.talos.dev/"

BUG_REPORT_URL="https://github.com/siderolabs/talos/issues"

- Kernel (e.g. uname -a):

❯ k get no -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

talos-6xb-myy Ready control-plane 7d21h v1.26.1 10.0.0.241 <none> Talos (v1.3.5) 5.15.94-talos containerd://1.6.18

talos-8op-x2k Ready control-plane 7d21h v1.26.1 10.0.0.243 <none> Talos (v1.3.5) 5.15.94-talos containerd://1.6.18

talos-nlu-hin Ready control-plane 7d21h v1.26.1 10.0.0.250 <none> Talos (v1.3.5) 5.15.94-talos containerd://1.6.18

talos-rh9-xsk Ready control-plane 7d21h v1.26.1 10.0.0.249 <none> Talos (v1.3.5) 5.15.94-talos containerd://1.6.18

talos-x7c-56v Ready control-plane 7d21h v1.26.1 10.0.0.246 <none> Talos (v1.3.5) 5.15.94-talos containerd://1.6.18

- Cloud provider or hardware configuration: 3 Dell R720s, 2 Dell R430s. 10xHGST 8TB drives.

- Rook version (use rook version inside of a Rook Pod):

❯ k -n rook-ceph exec rook-ceph-operator-6b67cc67fd-97rh7 -- rook version

rook: v1.10.11

go: go1.19.5

- Storage backend version (e.g. for ceph do ceph -v): ceph version 17.2.5 (98318ae89f1a893a6ded3a640405cdbb33e08757) quincy (stable)

- Kubernetes version (use kubectl version):

Client Version: version.Info{Major:"1", Minor:"26", GitVersion:"v1.26.1", GitCommit:"8f94681cd294aa8cfd3407b8191f6c70214973a4", GitTreeState:"archive", BuildDate:"2023-01-18T22:38:55Z", GoVersion:"go1.19.5", Compiler:"gc", Platform:"linux/amd64"}

Kustomize Version: v4.5.7

Server Version: version.Info{Major:"1", Minor:"26", GitVersion:"v1.26.1", GitCommit:"8f94681cd294aa8cfd3407b8191f6c70214973a4", GitTreeState:"clean", BuildDate:"2023-01-18T15:51:25Z", GoVersion:"go1.19.5", Compiler:"gc", Platform:"linux/amd64"}

- Kubernetes cluster type (e.g. Tectonic, GKE, OpenShift): Bare Metal (Talos).

- Storage backend status (e.g. for Ceph use ceph health in the Rook Ceph toolbox):

bash-4.4$ ceph status

cluster:

id: 617e4bed-456a-4b91-8cdb-c3a92e62c6a5

health: HEALTH_OK

services:

mon: 5 daemons, quorum b,c,d,e,f (age 2s)

mgr: a(active, since 7h), standbys: b

osd: 10 osds: 10 up (since 7h), 10 in (since 3d)

data:

pools: 3 pools, 3 pgs

objects: 11 objects, 897 KiB

usage: 138 MiB used, 73 TiB / 73 TiB avail

pgs: 3 active+clean

About this issue


- State: open

- Created a year ago

- Reactions: 4

- Comments: 30 (16 by maintainers)

@kpoos @vyas-n the PR https://github.com/ceph/ceph/pull/54151 should fix this issue

@kpoos the fix has already been merged into the reef branch and should come with v18.2.1 (the reef release that should be out soon). If no major issues prevent it, the next version of rook should use this ceph release as default.

@rkachach Do you have an estimate of which version this will be released in? The latest version still does not solve this issue in our cluster. We are already on rook version rook/ceph:v1.12.8 and ceph image quay.io/ceph/ceph:v18.2.0-20231114, but the issue is still with us.

@rkachach awesome, thanks a lot

awesome, thank you @kpoos

@rkachach I guess this is still related to the operator-config configmap not being found:

debug 2023-10-23T16:53:37.094+0000 7f6cb308e700 0 [dashboard INFO request] [10.45.13.198:47130] [GET] [500] [0.461s] [admin] [513.0B] /ui-api/host/inventory

debug 2023-10-23T16:53:37.354+0000 7f6cb4911700 0 [dashboard INFO orchestrator] is orchestrator available: True,

debug 2023-10-23T16:53:37.362+0000 7f6cb4911700 0 [rook ERROR orchestrator._interface] (404)

Reason: Not Found

HTTP response headers: HTTPHeaderDict({'Audit-Id': 'edcce5d9-aac4-444b-a41c-bc3dac5fe137', 'Cache-Control': 'no-cache, private', 'Content-Type': 'application/json', 'X-Kubernetes-Pf-Flowschema-Uid': '9f387737-9477-445c-bb49-fa789d327f35', 'X-Kubernetes-Pf-Prioritylevel-Uid': '6be5645b-708e-41d0-8ac4-696234270e1b', 'Date': 'Mon, 23 Oct 2023 16:53:37 GMT', 'Content-Length': '230'})

HTTP response body: {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"configmaps \"rook-ceph-operator-config\" not found","reason":"NotFound","details":{"name":"rook-ceph-operator-config","kind":"configmaps"},"code":404}

Traceback (most recent call last):

File "/usr/share/ceph/mgr/orchestrator/_interface.py", line 125, in wrapper

return OrchResult(f(*args, **kwargs))

File "/usr/share/ceph/mgr/rook/module.py", line 229, in get_inventory

discovered_devs = self.rook_cluster.get_discovered_devices(host_list)

File "/usr/share/ceph/mgr/rook/rook_cluster.py", line 762, in get_discovered_devices

op_settings = self.coreV1_api.read_namespaced_config_map(name="rook-ceph-operator-config", namespace='rook-ceph').data

File "/usr/lib/python3.6/site-packages/kubernetes/client/api/core_v1_api.py", line 18390, in read_namespaced_config_map

(data) = self.read_namespaced_config_map_with_http_info(name, namespace, **kwargs) # noqa: E501

File "/usr/lib/python3.6/site-packages/kubernetes/client/api/core_v1_api.py", line 18481, in read_namespaced_config_map_with_http_info

collection_formats=collection_formats)

File "/usr/lib/python3.6/site-packages/kubernetes/client/api_client.py", line 345, in call_api

_preload_content, _request_timeout)

File "/usr/lib/python3.6/site-packages/kubernetes/client/api_client.py", line 176, in __call_api

_request_timeout=_request_timeout)

File "/usr/lib/python3.6/site-packages/kubernetes/client/api_client.py", line 366, in request

headers=headers)

File "/usr/lib/python3.6/site-packages/kubernetes/client/rest.py", line 241, in GET

query_params=query_params)

File "/usr/lib/python3.6/site-packages/kubernetes/client/rest.py", line 231, in request

raise ApiException(http_resp=r)

kubernetes.client.rest.ApiException: (404)

Reason: Not Found

HTTP response headers: HTTPHeaderDict({'Audit-Id': 'edcce5d9-aac4-444b-a41c-bc3dac5fe137', 'Cache-Control': 'no-cache, private', 'Content-Type': 'application/json', 'X-Kubernetes-Pf-Flowschema-Uid': '9f387737-9477-445c-bb49-fa789d327f35', 'X-Kubernetes-Pf-Prioritylevel-Uid': '6be5645b-708e-41d0-8ac4-696234270e1b', 'Date': 'Mon, 23 Oct 2023 16:53:37 GMT', 'Content-Length': '230'})

HTTP response body: {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"configmaps \"rook-ceph-operator-config\" not found","reason":"NotFound","details":{"name":"rook-ceph-operator-config","kind":"configmaps"},"code":404}

However, here are the full logs of both mgrs with all containers. Hope it helps.

rook-ceph-mgr-a-7f7b88c67f-zs5lk.log

rook-ceph-mgr-b-65d5569fc8-b4tkj.log
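When scanning logs like these, the useful part of the `ApiException` text is the JSON `Status` object that follows `HTTP response body:`. Below is a minimal sketch of extracting it. It assumes the raw log text with plain ASCII quotes (a copy that went through a rich-text renderer may contain typographic quotes and will not parse), and `status_from_api_error` is a hypothetical helper name, not something provided by the kubernetes client.

```python
import json


def status_from_api_error(text):
    """Return the Kubernetes Status dict embedded after 'HTTP response body:', or None."""
    _, marker, body = text.partition("HTTP response body:")
    if not marker:
        return None
    try:
        # raw_decode stops at the end of the first JSON value and ignores trailing text.
        status, _ = json.JSONDecoder().raw_decode(body.lstrip())
    except json.JSONDecodeError:
        return None
    return status
```

For the error above, the returned dict should carry `reason`, `code`, and `details` (name and kind of the missing object), which is enough to tell that the lookup failed for the `rook-ceph-operator-config` configmap rather than for a storage class.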

@kpoos Do you have the discovery daemon enabled? And is your cluster in the rook-ceph namespace or a different namespace? @rkachach fyi

@jmolmo the manager module was enabled, but apparently not through the CRD, and the discovery daemon was disabled (the default). Both are now changed to match the docs. After each setting I waited for reconciliation to finish and, when it didn’t work right away, I also restarted the mgr pod. I still get the same result:

@LittleFox94, @uhthomas have you properly set the operator settings needed to fill the inventory with physical disk info?

https://rook.io/docs/rook/v1.11/Storage-Configuration/Monitoring/ceph-dashboard/?h=dashboard#visualization-of-physical-disks-section-in-the-dashboard

@LittleFox94 You have the rook-ceph-operator-config configmap in the rook-system namespace, right? Looks like the mgr module is assuming that the configmap is found in the same namespace as the rook cluster. @jmolmo Could you take a look?
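To illustrate the namespace assumption described in the last comment, here is a hedged sketch of a lookup that tries several candidate namespaces instead of a single one. It is not the change made in the linked Ceph PR and not code from the mgr module. The function name `find_config_map`, the `read_config_map` callable, and the in-memory `fake_cluster` are all hypothetical; in the real module the read is done through the kubernetes client's `read_namespaced_config_map`, which raises `ApiException` rather than `LookupError`.

```python
def find_config_map(read_config_map, name, namespaces):
    """Try each namespace in order and return (namespace, data) for the first hit.

    read_config_map(name, namespace) must return the configmap data or raise
    LookupError when the configmap does not exist in that namespace.
    """
    for namespace in namespaces:
        try:
            return namespace, read_config_map(name, namespace)
        except LookupError:
            continue
    raise LookupError(f"configmap {name!r} not found in any of {list(namespaces)}")


# In-memory stand-in for the API server: the operator configmap lives in
# rook-system, while the cluster itself runs in rook-ceph.
fake_cluster = {("rook-system", "rook-ceph-operator-config"): {"example-key": "example-value"}}


def fake_read(name, namespace):
    # A missing key raises KeyError, which is a subclass of LookupError.
    return fake_cluster[(namespace, name)]


namespace, data = find_config_map(
    fake_read, "rook-ceph-operator-config", ["rook-ceph", "rook-system"]
)
print(namespace, data)
```

Passing the reader in as a callable keeps the sketch runnable without a cluster; a lookup hard-coded to `rook-ceph` would fail in this setup in the same way the traceback above does.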