ioredis: Failed to refresh slots cache

Hi,

We are running ioredis 4.0.0 against an AWS ElastiCache replication group with cluster mode on (2 nodes, 1 shard) and using the cluster configuration address to connect to the cluster.

client = new Redis.Cluster([config.redis]);

and the config part:

redis: Config({
  host: {
    env: 'REDIS_HOST',
    ssm: '/elasticache/redis/host'
  },
  port: {
    env: 'REDIS_PORT',
    ssm: '/elasticache/redis/port'
  }
}),

Every time we start the application, the logs show:

{
  "stack": "Error: Failed to refresh slots cache.\n at tryNode (/app/node_modules/ioredis/built/cluster/index.js:371:25)\n at /app/node_modules/ioredis/built/cluster/index.js:383:17\n at Timeout.<anonymous> (/app/node_modules/ioredis/built/cluster/index.js:594:20)\n at Timeout.run (/app/node_modules/ioredis/built/utils/index.js:144:22)\n at ontimeout (timers.js:486:15)\n at tryOnTimeout (timers.js:317:5)\n at Timer.listOnTimeout (timers.js:277:5)",
  "message": "Failed to refresh slots cache.",
  "lastNodeError": {
    "stack": "Error: timeout\n at Object.exports.timeout (/app/node_modules/ioredis/built/utils/index.js:147:38)\n at Cluster.getInfoFromNode (/app/node_modules/ioredis/built/cluster/index.js:591:34)\n at tryNode (/app/node_modules/ioredis/built/cluster/index.js:376:15)\n at Cluster.refreshSlotsCache (/app/node_modules/ioredis/built/cluster/index.js:391:5)\n at Cluster.<anonymous> (/app/node_modules/ioredis/built/cluster/index.js:171:14)\n at new Promise (<anonymous>)\n at Cluster.connect (/app/node_modules/ioredis/built/cluster/index.js:125:12)\n at new Cluster (/app/node_modules/ioredis/built/cluster/index.js:81:14)\n at Object.<anonymous> (/app/node_modules/@myapp/myapp-core/core/redis.js:10:12)\n at Module._compile (module.js:652:30)",
    "message": "timeout"
  },
  "level": "error"
}

What more information do you need so we can get this fixed?

About this issue

- Original URL

- State: closed

- Created 6 years ago

- Comments: 63 (3 by maintainers)

Please enable the debug mode

DEBUG=ioredis:* node yourapp.js

and post the logs here.

Here is what I used to get a redis cluster connection using aws elasticache with auth.

I am also having this issue with the latest ioredis. I have tried setting slotsRefreshTimeout to 2000 and 5000. I have tried adding the cluster endpoints directly. Tried a combination of all. I have tried without cluster config and nothing is working for me. I am using redis 5.0.4 on ElastiCache on AWS. Has anyone gotten this working already? I even tried the redis-clustr package but it does not work either.

Same problem happening for me as well. ElastiCache engine 6.2 (cluster) and ioredis@4.28.2.

Our services use AWS ElastiCache with cluster mode and auto-failover enabled. The application is deployed as a Docker container in an AWS ECS cluster.

After we upgraded ioredis from 2.4.2 to 4.16.1, two issues appeared:

1). ClusterAllFailedError: Failed to refresh slots cache.

2). CPU utilization is high in the application.

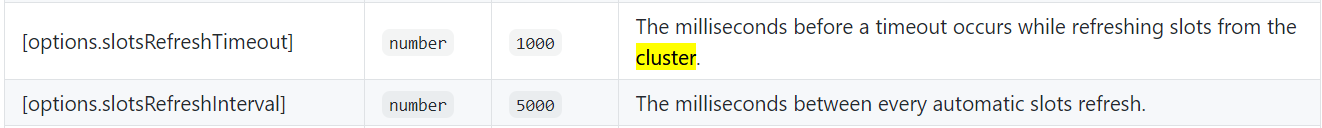

Why didn’t the above issues happen in ioredis v2.4.2? The Cluster instance in that version does not offer the properties below as configurable options, and I do not know what the defaults were.

IOREDIS – v3.1.0+ Cluster introduced configurable Property.

IOREDIS – v4.0.0-0+ Cluster introduced configurable Property.

The ioredis v4.0.0-0 documentation gives the default values mentioned below.

ClusterAllFailedError: Failed to refresh slots cache. When the slotsRefreshInterval elapses, ioredis tries to refresh the slots, and the error occurs because the default timeout for the refresh is too short.

CPU utilization is high in the application. The ioredis client tries to refresh the slots every 5 seconds, because the slotsRefreshInterval default is 5 seconds (5000 ms), so the application's CPU utilization was high.

So we configured the following values in our application to fix the issue. slotsRefreshTimeout is set to 10 seconds instead of the default of 1 second. slotsRefreshInterval is set to 5 minutes instead of the default of 5 seconds.
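As a rough illustration of the two settings described in this comment, here is a minimal sketch. The option names slotsRefreshTimeout and slotsRefreshInterval are ioredis Cluster options; the helper names buildClusterOptions and createCluster are made up for this example, and the Redis argument is assumed to be the ioredis export.

```javascript
// Values from the comment above: 10 s timeout, 5 min interval.
const SLOTS_REFRESH_TIMEOUT_MS = 10 * 1000; // default per the comment: 1000
const SLOTS_REFRESH_INTERVAL_MS = 5 * 60 * 1000; // default per the comment: 5000

// Hypothetical helper: returns the Cluster options, letting callers override.
function buildClusterOptions(overrides = {}) {
  return {
    slotsRefreshTimeout: SLOTS_REFRESH_TIMEOUT_MS,
    slotsRefreshInterval: SLOTS_REFRESH_INTERVAL_MS,
    ...overrides,
  };
}

// Hypothetical wiring: `Redis` is assumed to be require('ioredis').
function createCluster(Redis, host, port) {
  return new Redis.Cluster([{ host, port }], buildClusterOptions());
}

// Usage (assumes ioredis is installed and the env vars are set):
// const Redis = require('ioredis');
// const cluster = createCluster(Redis, process.env.REDIS_HOST, Number(process.env.REDIS_PORT));
```

Passing the constructor in keeps the sketch independent of a particular ioredis version; whether these values suit a given workload still has to be checked against its own latency and CPU numbers.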

Hope this information would help you to fix the issue.

I was able to connect to aws with increased slotsRefreshTimeout

- Elasticache engine: Clustered Redis
- Elasticache engine version: 6.2.5
- ioredis version: 4.28.3
- Cluster: 3 shards, 9 nodes
- Redis Auth: None

I have one cluster in two different environments that are nearly the same. One cluster has encryption at-rest and in-transit disabled. I have been able to connect to this cluster just fine with:

However, the other cluster with encryption enabled fails to establish a connection with this same code and displayed the ClusterAllFailedError: Failed to refresh slots cache. error. Interestingly, if I instead pass in every, or some, Redis node in the cluster to the Redis.Cluster constructor as a { host, port }[] object it establishes a connection just fine.

I wanted to take a step back to see if the encryption was the issue and re-provisioned this cluster with encryption disabled and sure enough it works.
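For clusters with in-transit encryption, the ioredis README has a note on ElastiCache with TLS that suggests passing tls in redisOptions together with a pass-through dnsLookup. The sketch below follows that note as a starting point only; the helper names and the default port are assumptions, and the dnsLookup option needs an ioredis version that supports it.

```javascript
// Hypothetical helper: Cluster options for an encryption-in-transit cluster.
function buildTlsClusterOptions() {
  return {
    // Hand the hostname through unchanged instead of resolving it to an IP,
    // so the TLS certificate can be checked against the hostname.
    dnsLookup: (address, callback) => callback(null, address),
    // Enable TLS on every node connection (empty object = default TLS options).
    redisOptions: { tls: {} },
  };
}

// `Redis` is assumed to be require('ioredis'); port 6379 is an assumed default.
function createTlsCluster(Redis, configurationEndpoint, port = 6379) {
  return new Redis.Cluster(
    [{ host: configurationEndpoint, port }],
    buildTlsClusterOptions()
  );
}

// Usage (the endpoint value is a placeholder):
// const Redis = require('ioredis');
// const cluster = createTlsCluster(Redis, process.env.REDIS_HOST);
```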

We can still see this issue with ElastiCache engine 6.2 (cluster) and ioredis@4.28.2. Any workaround ?

@MatteoGioioso Yes, that is correct. The below blog has this outlined as well as the docs.

Redis Cluster mode: Use Cluster-aware Redis clients and connect to the cluster using the configuration endpoint. This allows the client to automatically discover the shard and slot mappings. Redis Cluster mode also provides online resharding (scale in/out) for resizing your cluster, and allows you to complete planned maintenance and node replacements without any write interruptions. The Redis Cluster client can discover the primary and replica nodes and appropriately direct client-specific read and write traffic.

Non-Redis Cluster mode: Use the primary endpoint for all write traffic. During any configuration changes or failovers, Amazon ElastiCache ensures that the DNS of the primary endpoint is updated to always point to the primary node. Use the reader endpoint to direct all read traffic. Amazon ElastiCache ensures that the reader endpoint is kept up-to-date with the cluster changes in real time as replicas are added or removed. Individual node endpoints are also available but using reader endpoint frees up your application from tracking any individual node endpoint changes. Hence, it’s best to use primary endpoint for writes and single reader endpoint for reads.

Configuring the Redis client https://aws.amazon.com/blogs/database/configuring-amazon-elasticache-for-redis-for-higher-availability/

@ion-willo your configuration appears to be with Cluster mode disabled. In that case, you should directly connect to the Reader Endpoint using new Redis(...) and not use new Redis.Cluster(...). That will do the split for you to evenly read from all read replicas. If you want to update the cache, then using Primary Endpoint is the correct one to use for write operations.

This information is available on the docs too - https://docs.aws.amazon.com/AmazonElastiCache/latest/red-ug/Endpoints.html

You can try setting up another ElastiCache Redis cluster but make sure to select Cluster Mode enabled (Scale Out). Then try with the Configuration Endpoint using new Redis.Cluster(...) and you should be good to go.

Tip: As @luin suggested in some other replies, you can try to connect to redis using redis-cli on a temporary EC2 instance within the same VPC, Security Group as your ElastiCache Redis. Then try to issue a CLUSTER SLOTS command. This command will work only if you use Configuration Endpoint available in Cluster Mode enabled. But this will not work on a Primary Endpoint nor Reader Endpoint since you are directly talking to a single redis node.

Hope this helps.
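The endpoint choice described in this reply could be sketched roughly as follows. The shape of the endpoints object (clusterModeEnabled, configuration, reader, primary, port) and the function name createClients are invented for illustration; Redis is assumed to be the ioredis export.

```javascript
// Hypothetical helper: pick the client type based on the ElastiCache mode.
function createClients(Redis, endpoints) {
  if (endpoints.clusterModeEnabled) {
    // Cluster mode enabled: one cluster-aware client on the Configuration Endpoint.
    const cluster = new Redis.Cluster([
      { host: endpoints.configuration, port: endpoints.port },
    ]);
    return { reader: cluster, writer: cluster };
  }
  // Cluster mode disabled: plain clients, reads via the Reader Endpoint,
  // writes via the Primary Endpoint.
  return {
    reader: new Redis({ host: endpoints.reader, port: endpoints.port }),
    writer: new Redis({ host: endpoints.primary, port: endpoints.port }),
  };
}

// Usage (hostnames are placeholders):
// const Redis = require('ioredis');
// const { reader, writer } = createClients(Redis, {
//   clusterModeEnabled: false,
//   reader: 'my-group-ro.example.cache.amazonaws.com',
//   primary: 'my-group.example.cache.amazonaws.com',
//   port: 6379,
// });
```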

Works 👍

ElastiCache Redis in Cluster Mode

- Engine Version Compatibility: 5.0.6
- Encryption in-transit: yes (hence tls: true needed for redisOptions shown in Lambda snippet below)
- Nodes: 9
- Shards: 3
- Primary Endpoint: - (since this is a Cluster, so Configuration Endpoint is relevant instead)

My Lambda running on NodeJS 12x, ioredis@4.16.1

Lambda code used

I get the below response for my Lambda execution

Tip:

- Failed to refresh slots cache) since it cannot connect to ElastiCache anyways. Needless to say slotsRefreshTimeout was un-necessary in my case.
- REDIS_CLUSTER_CONFIGURATION_ENDPOINT, REDIS_CLUSTER_AUTH passed as environment variables to Lambda. REDIS_CLUSTER_AUTH can be empty if no redis AUTH is set on ElastiCache Redis cluster.
- ioredis dependency in my snippet so I can update to newer versions of ioredis without breaking my Lambda unintentionally.

Hope this helps!
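The Lambda snippet itself did not survive in this thread, so the following is only a guess at its general shape, built from the details that did: the REDIS_CLUSTER_CONFIGURATION_ENDPOINT and REDIS_CLUSTER_AUTH environment variables and TLS in redisOptions. The function names, port 6379, the pass-through dnsLookup (taken from the ioredis README note on ElastiCache with TLS) and the use of tls: {} where the comment writes tls: true are all assumptions.

```javascript
// Hypothetical helper: derive Redis.Cluster arguments from Lambda env vars.
function buildLambdaClusterArgs(env) {
  const redisOptions = { tls: {} }; // encryption in-transit is enabled
  if (env.REDIS_CLUSTER_AUTH) {
    // Only send AUTH when a token is configured on the cluster.
    redisOptions.password = env.REDIS_CLUSTER_AUTH;
  }
  return {
    startupNodes: [
      { host: env.REDIS_CLUSTER_CONFIGURATION_ENDPOINT, port: 6379 }, // assumed port
    ],
    options: {
      dnsLookup: (address, callback) => callback(null, address),
      redisOptions,
    },
  };
}

// Hypothetical handler factory: `Redis` is assumed to be require('ioredis').
function makeHandler(Redis, env = process.env) {
  const { startupNodes, options } = buildLambdaClusterArgs(env);
  // Created once per container and reused by later invocations.
  const cluster = new Redis.Cluster(startupNodes, options);
  return async () => ({ ping: await cluster.ping() });
}

// Usage in a Lambda module (assumes ioredis is bundled with the function):
// exports.handler = makeHandler(require('ioredis'));
```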

I need help drilling down into the following issue. Please see the information below for more context.

I’m facing the same issue where the new lambda version release started throwing a massive number of errors

- ioredis version 4.27.6
- AWS Lambda nodejs12.x
- Redis 6.0.5

The AWS Redis cluster metrics look good and healthy, and AWS tech support confirmed this too. I have not been able to root-cause the issue.

Redis Cluster Initialization

We were having intermittent issues with this error, using Elasticache Redis Cluster and Lambdas. After trying some different config settings, we settled on this one, which has been working 100% without any intermittent slot refresh errors any longer.

We essentially disabled the periodic refreshing of the slots cache since we only use each connection for a short period and any issues that would arise are less severe than the intermittent timeouts. Setting a high timeout value for connections and the initial slots cache fetching isn’t ideal but we haven’t had any significant spikes in runtime.
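The actual settings from this comment are not preserved in the thread, so here is only a sketch of the pattern it describes: generous timeouts, a caller-chosen slots refresh interval, and (as the comment goes on to explain) lazyConnect with an explicit connect and a disconnect at the end. All numbers are placeholders, the helper names are invented, and the comment does not say which slotsRefreshInterval value switches the periodic refresh off, so that value is left to the caller.

```javascript
// Hypothetical helper: options for a short-lived, per-invocation Cluster.
function buildShortLivedClusterOptions(slotsRefreshInterval) {
  return {
    lazyConnect: true, // do not connect in the constructor
    slotsRefreshTimeout: 10000, // placeholder for "a high timeout value"
    slotsRefreshInterval, // caller-supplied; check the ioredis docs for how to disable
    redisOptions: { connectTimeout: 10000 }, // placeholder
  };
}

// Hypothetical helper: connect, run `work`, and always clean up afterwards.
// `Redis` is assumed to be require('ioredis').
async function withCluster(Redis, startupNodes, options, work) {
  const cluster = new Redis.Cluster(startupNodes, options);
  try {
    await cluster.connect(); // with lazyConnect, this is what opens the connection
    return await work(cluster);
  } finally {
    // Mirror the comment: only disconnect instances still in the ready state.
    if (cluster.status === 'ready') {
      cluster.disconnect();
    }
  }
}

// Usage (endpoint and interval are placeholders):
// const Redis = require('ioredis');
// const value = await withCluster(
//   Redis,
//   [{ host: process.env.REDIS_HOST, port: 6379 }],
//   buildShortLivedClusterOptions(60 * 60 * 1000),
//   (cluster) => cluster.get('some-key')
// );
```

The retry logic and the caching by awsRequestId mentioned in the comment are left out here to keep the sketch short.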

We are re-using the same Cluster instance for each unique Lambda invocation by caching the instances by awsRequestId to ensure the same instances aren’t re-used across lambda invocations. We set lazyConnect and call connect manually and then await the ready event to fire before attempting to use the instance. We have some retrying logic of our own wrapped around this connection. We also ensure that every instance is disconnected at the end of every Lambda invocation by calling disconnect if the instance is still in the ready state.

Since implementing this setup we dropped the avg currConnections value per min down to <1% of what it was previously, which is what seemed to be the main cause of the intermittent issues.

I found the solution 😄

Since AWS ElastiCache provides a single endpoint to connect to the cluster we can not use

new Redis.Cluster([{HOSt:'', PORT:''}, {HOSt:'', PORT:''}])

and also not use new Redis(ENDPOINT), but I managed to get a connection by

new Redis.Cluster(['${redisAddress}:${redisPort}'], { scaleReads: 'slave' });

Just noticed that this fix will not work on Redis engine 5.0 on Elasticache. Downgrade to 4.0.10 to make it work.

Works for me when I changed slotsRefreshTimeout to 5000

Any updates on this? I’m still experiencing this issue with Redis version 5.0.5 on AWS ElastiCache Clustered, ioredis version: 4.16.1

Edit: Previously said I was using 4.16.0 version of ioredis while it was actually 4.16.1.

Still happening for me as well. Elasticache engine 5.0.3 (cluster) and ioredis@4.9.0

I think I found something that will help. I have been passing the single Configuration Endpoint URL to Redis.Cluster() (as the original author of this issue has been doing).

I tried instead passing the endpoints directly and it appears to work.

I obtained the above by running the following (I only have one cluster with three nodes).

Funny thing is that it (“Redis.Cluster”) sometimes does work with the configuration endpoint–it just seems to have some race condition that prevents it from always working.
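The endpoint list from this comment is missing here, so as an illustration only: passing the individual node endpoints as startup nodes might look like the sketch below. The hostnames are placeholders, toStartupNodes is a made-up helper, and Redis is assumed to be the ioredis export.

```javascript
// Hypothetical helper: turn "host:port" strings into ioredis startup nodes.
function toStartupNodes(endpoints, defaultPort = 6379) {
  return endpoints.map((endpoint) => {
    const [host, port] = endpoint.split(':');
    return { host, port: port ? Number(port) : defaultPort };
  });
}

// Placeholder node endpoints; real ones come from the ElastiCache console or API.
const nodeEndpoints = [
  'node-0001.example.cache.amazonaws.com:6379',
  'node-0002.example.cache.amazonaws.com:6379',
  'node-0003.example.cache.amazonaws.com',
];

// Usage (assumes ioredis is installed):
// const Redis = require('ioredis');
// const cluster = new Redis.Cluster(toStartupNodes(nodeEndpoints));
```

One trade-off to keep in mind: a hard-coded node list needs updating when nodes are replaced, which the configuration endpoint is meant to avoid.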

Out of curiosity I tried using “new Redis()” passing the cluster configuration endpoint and got: