ray: [Ray 2.3 Release] Failure in `dataset_shuffle_sort_1tb` "no locations were found for the object"

Marking as a release blocker until we think otherwise. BuildKite: https://buildkite.com/ray-project/release-tests-branch/builds/1333#0186130c-207a-4e3e-a563-a8f2f329b498

```
ray.exceptions.RayTaskError: ray::_sample_block() (pid=143721, ip=172.31.205.93)
At least one of the input arguments for this task could not be computed:
ray.exceptions.ObjectFetchTimedOutError: Failed to retrieve object f57510499cea6ea7ffffffffffffffffffffffff0300000002000000. To see information about where this ObjectRef was created in Python, set the environment variable RAY_record_ref_creation_sites=1 during `ray start` and `ray.init()`.
Fetch for object f57510499cea6ea7ffffffffffffffffffffffff0300000002000000 timed out because no locations were found for the object. This may indicate a system-level bug.
```

About this issue

- State: closed

- Created a year ago

- Comments: 27 (24 by maintainers)

Commits related to this issue

- Use retriable_lifo policy for shuffle 1tb nightly test (#32417) Fix release blocker issue: #32203 Ran 6 times and all of them passed. Signed-off-by: jianoaix <iamjianxiao@gmail.com> — committed to ray-project/ray by jianoaix a year ago

- Use retriable_lifo policy for shuffle 1tb nightly test (#32417) Fix release blocker issue: #32203 Ran 6 times and all of them passed. Signed-off-by: jianoaix <iamjianxiao@gmail.com> — committed to cadedaniel/ray by jianoaix a year ago

- Use retriable_lifo policy for shuffle 1tb nightly test (#32417) (#32445) Fix release blocker issue: #32203 Ran 6 times and all of them passed. Signed-off-by: jianoaix <iamjianxiao@gmail.com> C... — committed to ray-project/ray by cadedaniel a year ago

- Use retriable_lifo policy for shuffle 1tb nightly test (#32417) Fix release blocker issue: #32203 Ran 6 times and all of them passed. Signed-off-by: jianoaix <iamjianxiao@gmail.com> — committed to cadedaniel/ray by jianoaix a year ago

- Use retriable_lifo policy for shuffle 1tb nightly test (#32417) Fix release blocker issue: #32203 Ran 6 times and all of them passed. Signed-off-by: jianoaix <iamjianxiao@gmail.com> Signed-off-by: ... — committed to edoakes/ray by jianoaix a year ago

- Use retriable_lifo policy for shuffle 1tb nightly test (#32417) Fix release blocker issue: #32203 Ran 6 times and all of them passed. Signed-off-by: jianoaix <iamjianxiao@gmail.com> — committed to scottsun94/ray by jianoaix a year ago

- Use retriable_lifo policy for shuffle 1tb nightly test (#32417) Fix release blocker issue: #32203 Ran 6 times and all of them passed. Signed-off-by: jianoaix <iamjianxiao@gmail.com> — committed to cassidylaidlaw/ray by jianoaix a year ago
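The commits above switch the test to the `retriable_lifo` worker-killing policy of Ray's memory monitor. Our understanding is that this policy, when memory runs low, prefers killing workers whose tasks can still be retried and, among those, kills the most recently started one (last in, first out). We also believe it was selected via the `RAY_worker_killing_policy` environment variable, but treat that as an assumption. The snippet below is a simplified model of that victim choice only. `Worker` and `pick_worker_to_kill` are hypothetical names for illustration, not Ray's implementation or API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Worker:
    # Hypothetical record of a running task's worker (illustration only).
    worker_id: str
    retriable: bool    # True if the task still has retries left
    start_time: float  # when the task started running, in seconds


def pick_worker_to_kill(workers: List[Worker]) -> Optional[Worker]:
    """Simplified model of a "retriable LIFO" victim choice.

    Prefer retriable workers over non-retriable ones; within the same
    group, pick the most recently started one (last in, first out).
    """
    if not workers:
        return None
    # Key (retriable, start_time): True > False, so any retriable worker
    # outranks every non-retriable one; ties go to the latest start time.
    return max(workers, key=lambda w: (w.retriable, w.start_time))


if __name__ == "__main__":
    running = [
        Worker("a", retriable=True, start_time=10.0),
        Worker("b", retriable=False, start_time=30.0),
        Worker("c", retriable=True, start_time=20.0),
    ]
    # "b" started last but is not retriable, so "c" is chosen.
    print(pick_worker_to_kill(running).worker_id)
```

Preferring retriable work means a killed task can be re-executed instead of failing the whole job, which is why this kind of policy suits a long shuffle that may hit OOM pressure.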

OK, got a log from a failure now: https://console.anyscale.com/o/anyscale-internal/projects/prj_FKRmeV5pA6X72aVscFALNC32/clusters/ses_jxk4uxrewk4lvkstygni9q6641

It’s failing on OOM:

I think @clarng is working on a change to avoid throwing this error, which should fix this test.

One remaining follow-up for us may be to determine whether there is a regression in memory efficiency.

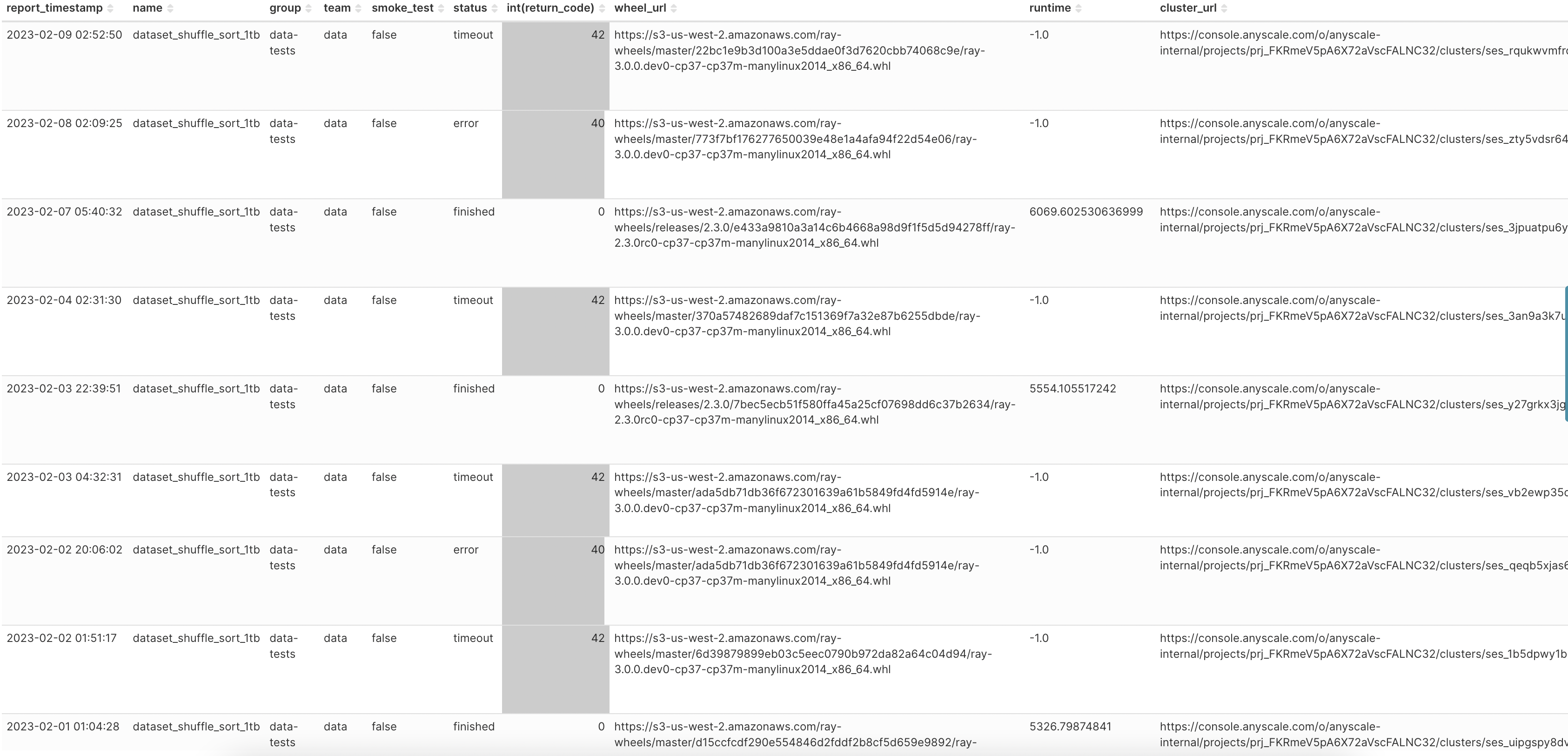

The failure/flakiness in master's recent nightly runs has been consistent, so it's likely reproducible (it just needs a few more tries):

master. Sounds good; let's start a few runs on the 2.3 branch.

I haven't reproduced a failure yet, so I can't see the log. I'm running two concurrently now and should have results in about half an hour. Previously, it didn't log anything about the failure before it re-ran the execution with `ds.stats()`, which then hit the timeout.

That PR is a bug fix, so we'll need to keep it (unless we revert even more). We'll work on debugging/fixing it today.