ray: [Core] Job gets stuck when submitting multiple jobs to the Ray cluster

What happened + What you expected to happen

I interact with the Ray cluster by port forwarding from my local machine.

Step 1: Run a script locally that submits jobs via JobSubmissionClient:

    from ray.job_submission import JobSubmissionClient

    client = JobSubmissionClient("http://127.0.0.1:8265")

    for i in range(20):
        job_id = client.submit_job(
            entrypoint="python script.py",
        )
        print(job_id)

Step 2: Each submitted job runs the following script.py on the head node (pod):

    import ray
    import time

    @ray.remote(num_cpus=1, max_calls=1)
    def hello_world():
        time.sleep(60)
        return "hello world"

    ray.init()
    print(ray.get(hello_world.remote()))

Step 3:

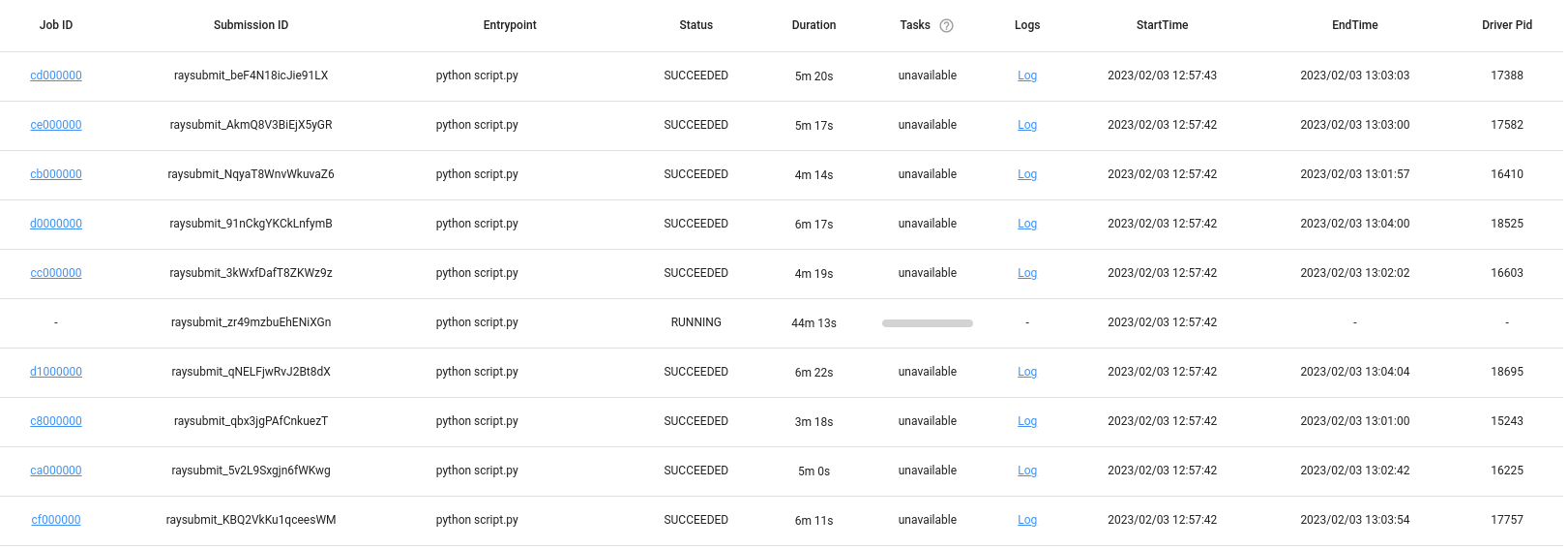

One job stays stuck in the RUNNING status.

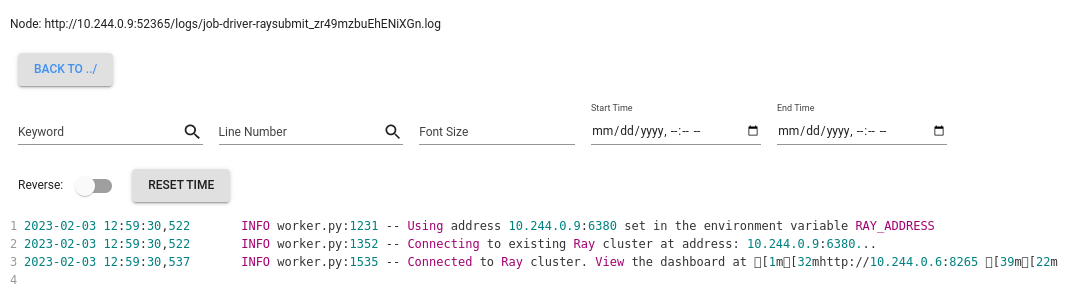

Logs from the head node

There is a gap in the timestamps of the dashboard_agent.log entries for that worker (nothing is logged between 12:58:16 and 13:17:04):

2023-02-03 12:57:43,048 INFO job_manager.py:683 -- Head node ID found in GCS; scheduling job driver on head node 039b7cf8253bd6f32069bc7b76140448e7437836a6b9d772a9035f79

2023-02-03 12:57:43,052 INFO web_log.py:206 -- 10.244.0.9 [03/Feb/2023:20:57:43 +0000] "POST /api/job_agent/jobs/ HTTP/1.1" 200 244 "-" "Python/3.7 aiohttp/3.8.3"

2023-02-03 12:58:00,266 WARNING worker.py:1851 -- WARNING: 4 PYTHON worker processes have been started on node: 039b7cf8253bd6f32069bc7b76140448e7437836a6b9d772a9035f79 with address: 10.244.0.9. This could be a result of using a large number of actors, or due to tasks blocked in ray.get() calls (see https://github.com/ray-project/ray/issues/3644 for some discussion of workarounds).

2023-02-03 12:58:04,126 WARNING worker.py:1851 -- WARNING: 6 PYTHON worker processes have been started on node: 039b7cf8253bd6f32069bc7b76140448e7437836a6b9d772a9035f79 with address: 10.244.0.9. This could be a result of using a large number of actors, or due to tasks blocked in ray.get() calls (see https://github.com/ray-project/ray/issues/3644 for some discussion of workarounds).

2023-02-03 12:58:07,926 WARNING worker.py:1851 -- WARNING: 8 PYTHON worker processes have been started on node: 039b7cf8253bd6f32069bc7b76140448e7437836a6b9d772a9035f79 with address: 10.244.0.9. This could be a result of using a large number of actors, or due to tasks blocked in ray.get() calls (see https://github.com/ray-project/ray/issues/3644 for some discussion of workarounds).

2023-02-03 12:58:11,963 WARNING worker.py:1851 -- WARNING: 10 PYTHON worker processes have been started on node: 039b7cf8253bd6f32069bc7b76140448e7437836a6b9d772a9035f79 with address: 10.244.0.9. This could be a result of using a large number of actors, or due to tasks blocked in ray.get() calls (see https://github.com/ray-project/ray/issues/3644 for some discussion of workarounds).

2023-02-03 12:58:16,235 WARNING worker.py:1851 -- WARNING: 12 PYTHON worker processes have been started on node: 039b7cf8253bd6f32069bc7b76140448e7437836a6b9d772a9035f79 with address: 10.244.0.9. This could be a result of using a large number of actors, or due to tasks blocked in ray.get() calls (see https://github.com/ray-project/ray/issues/3644 for some discussion of workarounds).

2023-02-03 13:17:04,143 INFO web_log.py:206 -- 10.244.0.9 [03/Feb/2023:21:17:04 +0000] "GET /logs HTTP/1.1" 200 629 "http://localhost:8265/" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"

2023-02-03 13:17:05,825 INFO web_log.py:206 -- 10.244.0.9 [03/Feb/2023:21:17:05 +0000] "GET /logs/dashboard_agent.log HTTP/1.1" 200 239 "http://localhost:8265/" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"

2023-02-03 13:21:44,888 INFO web_log.py:206 -- 10.244.0.9 [03/Feb/2023:21:21:44 +0000] "GET /logs HTTP/1.1" 200 629 "http://localhost:8265/" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"

2023-02-03 13:21:46,667 INFO web_log.py:206 -- 10.244.0.9 [03/Feb/2023:21:21:46 +0000] "GET /logs/dashboard_agent.log HTTP/1.1" 200 239 "http://localhost:8265/" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"

2023-02-03 13:22:52,427 INFO web_log.py:206 -- 10.244.0.9 [03/Feb/2023:21:22:52 +0000] "GET /logs HTTP/1.1" 200 629 "http://localhost:8265/" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"

2023-02-03 13:22:55,041 INFO web_log.py:206 -- 10.244.0.9 [03/Feb/2023:21:22:55 +0000] "GET /logs/debug_state.txt HTTP/1.1" 200 238 "http://localhost:8265/" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"

2023-02-03 13:22:56,167 INFO web_log.py:206 -- 10.244.0.9 [03/Feb/2023:21:22:56 +0000] "GET /logs HTTP/1.1" 200 629 "http://localhost:8265/" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"
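A gap like this can also be located without reading the log by eye, by parsing the leading timestamps and reporting the largest jump between consecutive entries. The sketch below is only illustrative: the helper name `largest_gap` is made up for this example, and the sample lines are abbreviated from the head node log.

```python
import re
from datetime import datetime

# Matches the leading "YYYY-MM-DD HH:MM:SS,mmm" timestamp of a log line.
TS_RE = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3})")


def largest_gap(lines):
    """Return (gap_seconds, earlier, later) for the biggest jump between
    consecutive timestamped lines, or None if fewer than two lines match."""
    stamps = []
    for line in lines:
        match = TS_RE.match(line)
        if match:
            stamps.append(
                datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S,%f")
            )
    best = None
    for earlier, later in zip(stamps, stamps[1:]):
        gap = (later - earlier).total_seconds()
        if best is None or gap > best[0]:
            best = (gap, earlier, later)
    return best


# Abbreviated sample lines (message bodies shortened for the example).
sample = [
    "2023-02-03 12:58:11,963 WARNING worker.py:1851 -- WARNING: 10 PYTHON worker processes ...",
    "2023-02-03 12:58:16,235 WARNING worker.py:1851 -- WARNING: 12 PYTHON worker processes ...",
    "2023-02-03 13:17:04,143 INFO web_log.py:206 -- 10.244.0.9 ...",
]
gap, earlier, later = largest_gap(sample)
print(f"largest gap: {gap:.0f}s between {earlier} and {later}")
```

On these sample lines the largest gap falls between the last worker warning and the first dashboard request, roughly 19 minutes later.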

Versions / Dependencies

- Ray 2.2.0

- Python 3.7.13

- 2 workers, 1 CPU each

- 1 head node, 1 CPU

Reproduction script

-

The reproduction script is shown above. I took a look at the https://github.com/ray-project/ray/issues/3644. But in the case, it seems that there is no nested Ray remote functions (the executed function is very simple).

-

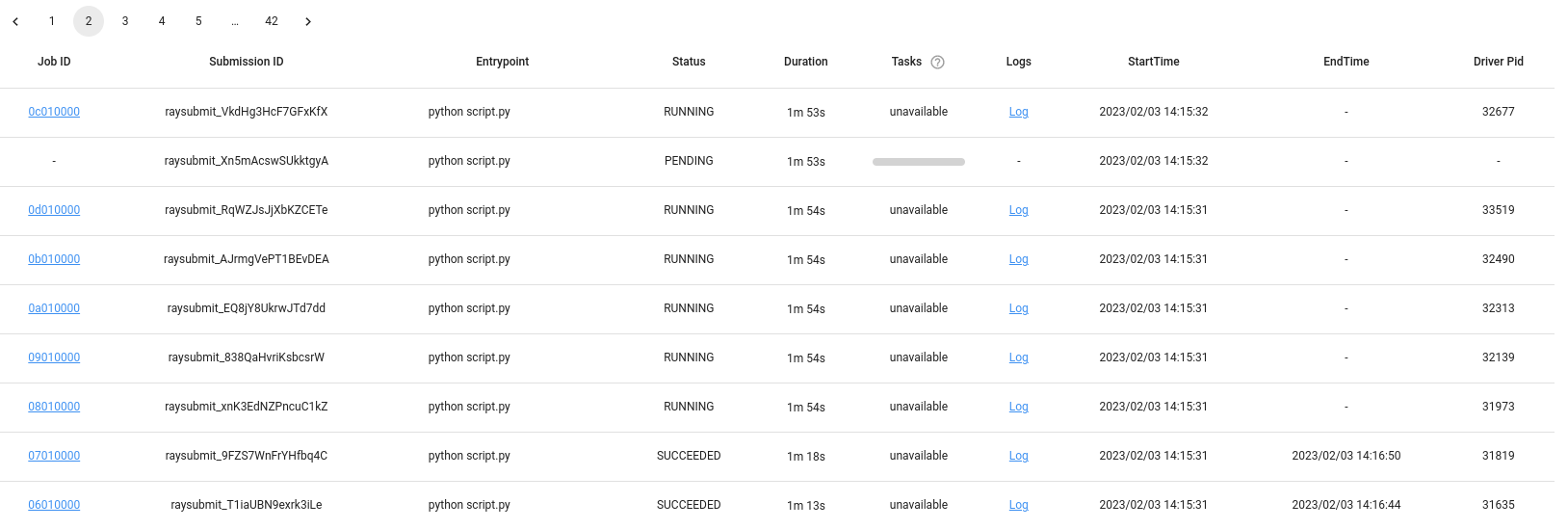

It seems things get worse with the time.sleep(60) in the remote function. I am guessing that it is because that leads to more concurrent running jobs?

-

Is it expected that there are 6 jobs in running status given the fact that I only have 2 workers and I call the remote function using

@ray.remote(num_cpus=1, max_calls=1)?

-

Is there a way to resolve such issue (prevent job to be stuck) when we have a lot of pending tasks in the head?

Issue Severity

High: It blocks me from completing my task.

About this issue

- Original URL

- State: closed

- Created a year ago

- Reactions: 1

- Comments: 18 (18 by maintainers)

Commits related to this issue

- [K8s] [Doc] remove ports limitations (#32831) We have observed that there are usually some jobs hanging if only a small range of ports are opened in the K8S networkpolicies. This PR allows all ports ... — committed to ray-project/ray by YQ-Wang a year ago


It seems the job is submitted correctly by the job supervisor, but the driver is not started for some reason. The user has an unconventional setup in which the min-to-max worker port range is very small (19), and I suspect that's the root cause. @YQ-Wang will try a larger port range.
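To make the suspected cause concrete: as far as I understand, each Ray worker process on a node takes one port from the range set by `--min-worker-port` and `--max-worker-port` in `ray start`, so the size of that range caps how many such processes can run there at once. The sketch below only does that arithmetic. The narrow port numbers are hypothetical (chosen to give a 19-port range, reading "19" as the number of ports), the default range is quoted from memory of the Ray docs, and the process count is a rough assumption rather than a measurement from this cluster.

```python
def worker_port_capacity(min_port: int, max_port: int) -> int:
    """Number of ports in the inclusive range [min_port, max_port]."""
    if max_port < min_port:
        raise ValueError("max_port must be >= min_port")
    return max_port - min_port + 1


def has_enough_ports(min_port: int, max_port: int, expected_processes: int) -> bool:
    """True if the range has at least one port per expected worker process."""
    return worker_port_capacity(min_port, max_port) >= expected_processes


# Hypothetical narrow range containing 19 ports.
narrow = worker_port_capacity(10002, 10020)
# Assumed Ray default worker port range (10002 to 19999).
default = worker_port_capacity(10002, 19999)

# Assumption: 20 submitted jobs, each needing at least one process on the head node.
expected = 20
print(narrow, has_enough_ports(10002, 10020, expected))
print(default, has_enough_ports(10002, 19999, expected))
```

Under these assumptions the narrow range falls short of the 20 submitted jobs, while the default range leaves ample headroom.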

This issue has been resolved by #32831. Please feel free to close.

Regarding the question about six jobs being in running status with only two workers, I think it’s expected. The job entrypoint script has started running, so the job is considered “RUNNING” even if the remote functions in the tasks have not started running.
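Since RUNNING only means the entrypoint has started, a client-side time limit is one way to flag a job that never reaches a terminal state. The sketch below is an illustration, not part of Ray: `find_stuck_jobs` and its `get_status` callback are hypothetical names, and the terminal status strings (SUCCEEDED, FAILED, STOPPED) are assumed to match the job status values. The status lookup is passed in as a callable so the logic can be tried without a cluster.

```python
import time
from typing import Callable, Iterable, List

# Assumed terminal job statuses.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "STOPPED"}


def find_stuck_jobs(
    job_ids: Iterable[str],
    get_status: Callable[[str], str],
    timeout_s: float,
    poll_s: float = 5.0,
) -> List[str]:
    """Poll until every job is terminal or timeout_s elapses.

    Returns the IDs that are still non-terminal at the deadline.
    """
    pending = list(job_ids)
    deadline = time.monotonic() + timeout_s
    while pending:
        # Keep only the jobs that have not reached a terminal status yet.
        pending = [j for j in pending if get_status(j) not in TERMINAL_STATUSES]
        if not pending or time.monotonic() >= deadline:
            break
        time.sleep(poll_s)
    return pending


# Stand-in for a real cluster: job "b" never leaves RUNNING.
fake_statuses = {"a": "SUCCEEDED", "b": "RUNNING", "c": "FAILED"}
stuck = find_stuck_jobs(fake_statuses, fake_statuses.get, timeout_s=0.2, poll_s=0.05)
print(stuck)
```

Against a real cluster, `get_status` could plausibly wrap `JobSubmissionClient.get_job_status`, for example `lambda j: client.get_job_status(j).value`, with a timeout comfortably longer than the 60-second sleep in script.py.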