rancher: Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid

All of a sudden my single-container Rancher deployment stopped working (it refused connections to the UI).

When I tried to restart the container, I noticed the following log and a message that repeats forever:

Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid

Here is the full start up log:

```
2019/05/06 16:08:05 [INFO] Rancher version v2.1.8 is starting
2019/05/06 16:08:05 [INFO] Rancher arguments {ACMEDomains:[] AddLocal:auto Embedded:false KubeConfig: HTTPListenPort:80 HTTPSListenPort:443 K8sMode:auto Debug:false NoCACerts:false ListenConfig:<nil> AuditLogPath:/var/log/auditlog/rancher-api-audit.log AuditLogMaxage:10 AuditLogMaxsize:100 AuditLogMaxbackup:10 AuditLevel:0}
2019/05/06 16:08:05 [INFO] Listening on /tmp/log.sock
2019/05/06 16:08:05 [INFO] Running etcd --peer-client-cert-auth --client-cert-auth --advertise-client-urls=https://127.0.0.1:2379,https://127.0.0.1:4001 --trusted-ca-file=/etc/kubernetes/ssl/kube-ca.pem --peer-trusted-ca-file=/etc/kubernetes/ssl/kube-ca.pem --data-dir=/var/lib/rancher/etcd/ --initial-cluster-token=etcd-cluster-1 --name=etcd-master --initial-cluster-state=new --heartbeat-interval=500 --election-timeout=5000 --initial-advertise-peer-urls=https://127.0.0.1:2380 --initial-cluster=etcd-master=https://127.0.0.1:2380 --cert-file=/etc/kubernetes/ssl/kube-etcd-127-0-0-1.pem --key-file=/etc/kubernetes/ssl/kube-etcd-127-0-0-1-key.pem --listen-client-urls=https://0.0.0.0:2379 --listen-peer-urls=https://0.0.0.0:2380 --peer-cert-file=/etc/kubernetes/ssl/kube-etcd-127-0-0-1.pem --peer-key-file=/etc/kubernetes/ssl/kube-etcd-127-0-0-1-key.pem
2019-05-06 16:08:05.518007 I | etcdmain: etcd Version: 3.2.13
2019-05-06 16:08:05.518026 I | etcdmain: Git SHA: Not provided (use ./build instead of go build)
2019-05-06 16:08:05.518030 I | etcdmain: Go Version: go1.11
2019-05-06 16:08:05.518033 I | etcdmain: Go OS/Arch: linux/amd64
2019-05-06 16:08:05.518037 I | etcdmain: setting maximum number of CPUs to 4, total number of available CPUs is 4
2019-05-06 16:08:05.518105 N | etcdmain: the server is already initialized as member before, starting as etcd member...
2019-05-06 16:08:05.518133 I | embed: peerTLS: cert = /etc/kubernetes/ssl/kube-etcd-127-0-0-1.pem, key = /etc/kubernetes/ssl/kube-etcd-127-0-0-1-key.pem, ca = , trusted-ca = /etc/kubernetes/ssl/kube-ca.pem, client-cert-auth = true
2019-05-06 16:08:05.518573 I | embed: listening for peers on https://0.0.0.0:2380
2019-05-06 16:08:05.518618 I | embed: listening for client requests on 0.0.0.0:2379
2019-05-06 16:08:06.521742 W | etcdserver: another etcd process is using "/var/lib/rancher/etcd/member/snap/db" and holds the file lock.
2019-05-06 16:08:06.521759 W | etcdserver: waiting for it to exit before starting...
2019-05-06 16:08:15.600099 I | etcdserver: recovered store from snapshot at index 74100742
2019-05-06 16:08:15.650151 I | mvcc: restore compact to 69505013
2019-05-06 16:08:15.837148 I | etcdserver: name = etcd-master
2019-05-06 16:08:15.837172 I | etcdserver: data dir = /var/lib/rancher/etcd/
2019-05-06 16:08:15.837179 I | etcdserver: member dir = /var/lib/rancher/etcd/member
2019-05-06 16:08:15.837182 I | etcdserver: heartbeat = 500ms
2019-05-06 16:08:15.837186 I | etcdserver: election = 5000ms
2019-05-06 16:08:15.837189 I | etcdserver: snapshot count = 100000
2019-05-06 16:08:15.837230 I | etcdserver: advertise client URLs = https://127.0.0.1:2379,https://127.0.0.1:4001
2019-05-06 16:08:19.910817 I | etcdserver: restarting member e92d66acd89ecf29 in cluster 7581d6eb2d25405b at commit index 74171321
2019-05-06 16:08:19.912831 I | raft: e92d66acd89ecf29 became follower at term 150
2019-05-06 16:08:19.912853 I | raft: newRaft e92d66acd89ecf29 [peers: [e92d66acd89ecf29], term: 150, commit: 74171321, applied: 74100742, lastindex: 74171321, lastterm: 150]
2019-05-06 16:08:19.912969 I | etcdserver/api: enabled capabilities for version 3.2
2019-05-06 16:08:19.912981 I | etcdserver/membership: added member e92d66acd89ecf29 [https://127.0.0.1:2380] to cluster 7581d6eb2d25405b from store
2019-05-06 16:08:19.912988 I | etcdserver/membership: set the cluster version to 3.2 from store
2019-05-06 16:08:19.927814 I | mvcc: restore compact to 69505013
2019-05-06 16:08:20.107151 W | auth: simple token is not cryptographically signed
2019-05-06 16:08:20.120515 I | etcdserver: starting server... [version: 3.2.13, cluster version: 3.2]
2019-05-06 16:08:20.120884 I | embed: ClientTLS: cert = /etc/kubernetes/ssl/kube-etcd-127-0-0-1.pem, key = /etc/kubernetes/ssl/kube-etcd-127-0-0-1-key.pem, ca = , trusted-ca = /etc/kubernetes/ssl/kube-ca.pem, client-cert-auth = true
2019-05-06 16:08:24.413293 I | raft: e92d66acd89ecf29 is starting a new election at term 150
2019-05-06 16:08:24.413364 I | raft: e92d66acd89ecf29 became candidate at term 151
2019-05-06 16:08:24.413400 I | raft: e92d66acd89ecf29 received MsgVoteResp from e92d66acd89ecf29 at term 151
2019-05-06 16:08:24.413419 I | raft: e92d66acd89ecf29 became leader at term 151
2019-05-06 16:08:24.413426 I | raft: raft.node: e92d66acd89ecf29 elected leader e92d66acd89ecf29 at term 151
2019-05-06 16:08:24.415037 I | etcdserver: published {Name:etcd-master ClientURLs:[https://127.0.0.1:2379 https://127.0.0.1:4001]} to cluster 7581d6eb2d25405b
2019-05-06 16:08:24.415066 I | embed: ready to serve client requests
2019-05-06 16:08:24.415270 I | embed: serving client requests on [::]:2379
WARNING: 2019/05/06 16:08:24 Failed to dial 0.0.0.0:2379: connection error: desc = "transport: authentication handshake failed: remote error: tls: bad certificate"; please retry.
2019/05/06 16:08:25 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:26 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:27 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:28 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:29 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:30 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:31 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:32 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:33 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:34 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:35 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:36 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
2019/05/06 16:08:37 [INFO] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
...
```
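The repeating message is Go's TLS client rejecting a certificate presented on `localhost:2379` (or one in its chain) because the current clock lies outside that certificate's validity window, i.e. before its `notBefore` or after its `notAfter` date. As a minimal sketch of that comparison (not Rancher's or Go's actual code), the hypothetical helper `validity_status` below classifies a timestamp against both bounds, which RFC 5280 defines as inclusive. The validity dates are made up for illustration.

```python
from datetime import datetime, timezone


def validity_status(not_before: datetime, not_after: datetime, now: datetime) -> str:
    """Classify `now` against an X.509 validity window (bounds are inclusive, per RFC 5280)."""
    if now < not_before:
        return "not yet valid"
    if now > not_after:
        return "expired"
    return "valid"


# Hypothetical one-year certificate; these dates are illustrative, not from this thread.
issued = datetime(2018, 5, 6, 16, 0, tzinfo=timezone.utc)
expires = datetime(2019, 5, 6, 16, 0, tzinfo=timezone.utc)

print(validity_status(issued, expires, datetime(2019, 5, 6, 16, 8, 25, tzinfo=timezone.utc)))
print(validity_status(issued, expires, datetime(2019, 5, 1, tzinfo=timezone.utc)))
```

Both failure cases produce the same Go error text, which is why the log cannot tell you on its own whether the certificate is old or the host clock is wrong.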

How can I recover my Rancher Server?

Thanks and Best Regards, Paul

About this issue


- State: closed

- Created 5 years ago

- Reactions: 6

- Comments: 28 (2 by maintainers)

We had the same issue. The rancher-server UI no longer came up, and the container kept restarting itself because it was trying to reach the API server at localhost:6443. We exec'd into the Docker container, looked through the server's file system, and found the directory `/var/lib/rancher/management-state/tls/`, which contained a `localhost.crt` that had expired (check with `openssl x509 -enddate -noout -in localhost.crt`). We renamed it to `localhost.crt_back` and restarted the container, and voilà, the certificate was regenerated and re-signed. The UI went live again.

P.S. If you struggle to complete all these steps within the brief window before the container restarts, simply set your host's date back into the past so that the internal certificate is considered valid again.
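The `openssl x509 -enddate -noout` check above prints a single line of the form `notAfter=<date> GMT`. A minimal sketch, assuming that output format, of turning it into an expiry decision with only the Python standard library: `ssl.cert_time_to_seconds` parses exactly this date layout. The helper names `parse_enddate` and `is_expired` are hypothetical, and the sample date is not from this thread.

```python
import ssl
import time
from typing import Optional

PREFIX = "notAfter="


def parse_enddate(openssl_output: str) -> int:
    """Convert 'notAfter=Jan  5 09:34:43 2018 GMT' into seconds since the epoch (UTC)."""
    line = openssl_output.strip()
    if not line.startswith(PREFIX):
        raise ValueError(f"unexpected openssl output: {line!r}")
    return ssl.cert_time_to_seconds(line[len(PREFIX):])


def is_expired(openssl_output: str, now: Optional[float] = None) -> bool:
    """True if notAfter is strictly in the past relative to `now` (defaults to the system clock)."""
    if now is None:
        now = time.time()
    return now > parse_enddate(openssl_output)


sample = "notAfter=Jan  5 09:34:43 2018 GMT"
print(parse_enddate(sample))  # 1515144883
print(is_expired(sample, now=1515144883 + 1))  # True
```

If it reports an expired certificate, the rename-and-restart fix above applies. Because the check depends only on `now`, it also shows why the date-rollback trick in the P.S. works: with an earlier clock, the same certificate is no longer treated as expired.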

@azbpa what can we do to resolve this issue?

We are experiencing this same issue. We upgraded to 2.2.2, but we are still seeing similar issues.

@de-robat Thank you! Thank you. Your comment needs to be elevated and included within some documentation. Saved my bacon! Solution worked perfectly.

I was running v2.2.8 and updated to v2.2.10 and moved localhost.crt to localhost.crt_back!!! Everything returned as expected. Problem solved.

Thanks again

Unfortunately, upgrading to 2.2.2 did not solve the issue for me. I am able to log in, but etcd is still not OK. I suspect this could be related to the custom GoDaddy wildcard certificate installed on the standalone Rancher admin node.

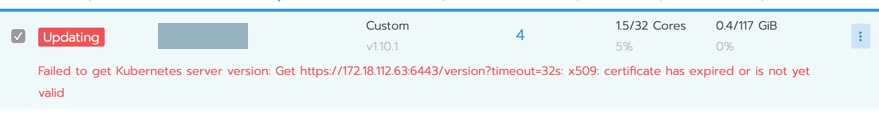

logs: 2019/05/08 20:30:00 [ERROR] ClusterController c-zsdvm [cluster-provisioner-controller] failed with : failed to get Kubernetes server version: Get https://172.18.112.63:6443/version?timeout=32s: x509: certificate has expired or is not yet valid

Screenshots: the Rancher global view and the certificate on the etcd and worker nodes.

Maybe one way to solve this would be to start Rancher with the standard Rancher certificates so that agent-to-server communication works again. How can I override the custom certificates I supplied earlier with the standard Rancher certificates/CA?

I can also confirm that upgrading to v2.2.2 resolves the issue.

Versions 2.2.2, 2.1.9, and 2.0.14 automatically rotate the self-signed server certificate they generate before it expires. If you are on an older version, upgrade.
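Rotating "before it expires" means replacing the certificate while it is still valid, once its remaining lifetime falls under some safety margin. A minimal sketch of that decision, assuming a hypothetical 30-day margin; Rancher's actual threshold and implementation are not described in this thread, and `needs_rotation` is an illustrative name only.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical safety margin; not taken from Rancher's source.
ROTATION_MARGIN = timedelta(days=30)


def needs_rotation(not_after: datetime, now: datetime,
                   margin: timedelta = ROTATION_MARGIN) -> bool:
    """Rotate when the certificate expires within `margin` of `now`, or has already expired."""
    return not_after - now <= margin


expiry = datetime(2019, 5, 6, tzinfo=timezone.utc)
print(needs_rotation(expiry, datetime(2019, 3, 1, tzinfo=timezone.utc)))   # False: 66 days left
print(needs_rotation(expiry, datetime(2019, 4, 20, tzinfo=timezone.utc)))  # True: 16 days left
```

Checking against a margin rather than the exact expiry leaves time for a failed rotation to be retried before clients start rejecting the certificate.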

If you provided your own cert, you need to generate a new one and provide it to the server.

If you’re using LetsEncrypt, it should already be renewing itself.