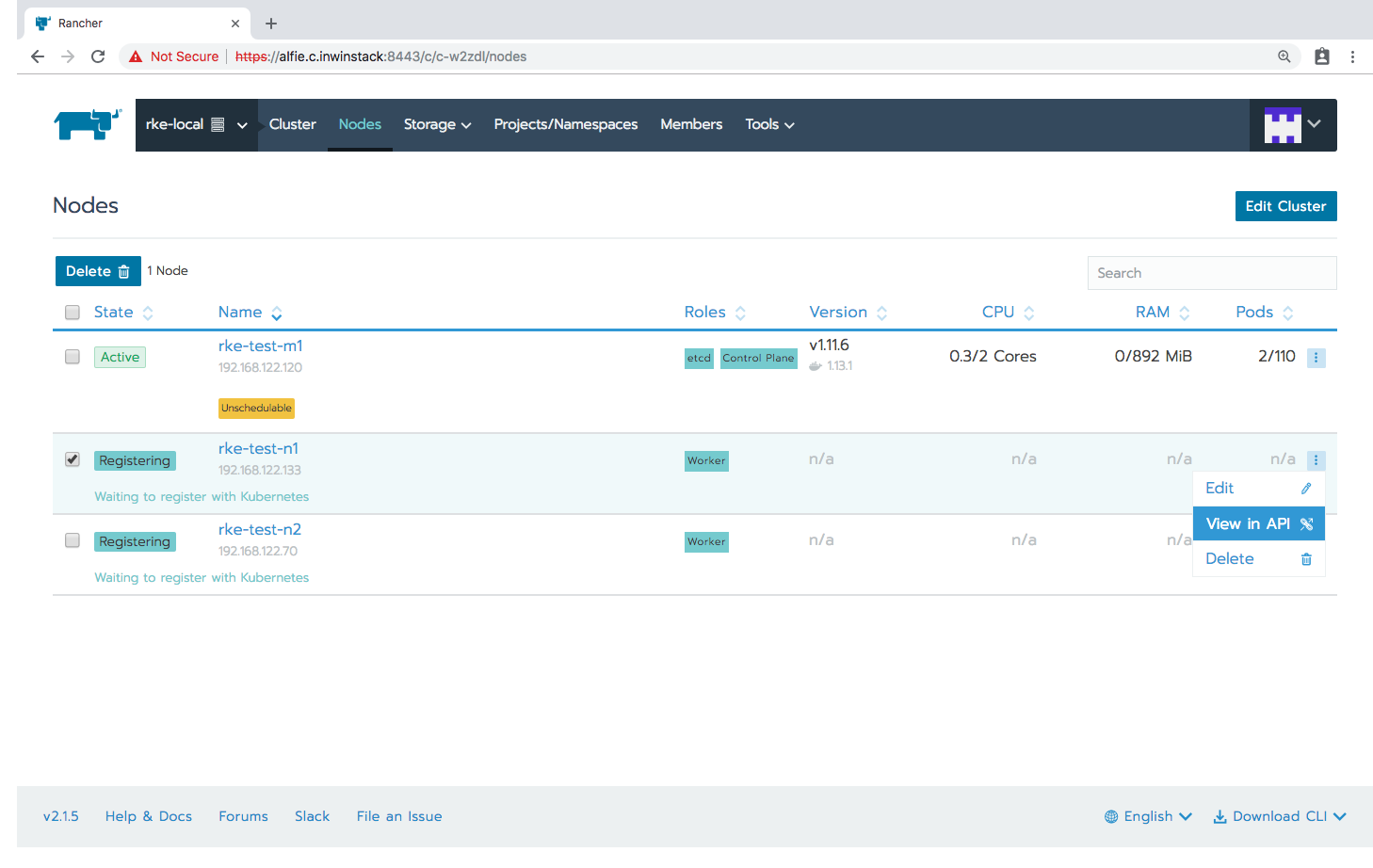

rancher: Stuck on "Waiting to register with Kubernetes"

After adding a node, its state is stuck on “Waiting to register with Kubernetes”, although the kubelet log (viewed with `docker logs`) shows that it has registered.

I can also deploy a new workload: I deployed WordPress and it works well.

I cannot see any information about the nodes and cluster because the cluster has only this node.

I have checked that every required port is open, and the WSS connection works well too.

| Field | Value |
|---|---|
| Versions | Rancher v2.0.2, UI v2.0.48 |
| Access | local admin |
| Route | authenticated.cluster.nodes.index |

About this issue

- State: closed

- Created 6 years ago

- Reactions: 5

- Comments: 37 (8 by maintainers)

Just wait a while… it will be OK.

I appear to have just run into the same problem. I have 4 physical machines: 1 Rancher server and 3 nodes in 1 cluster.

Adding the first node to the cluster is fine, but adding any additional node to the same cluster fails with “Waiting to register with Kubernetes”.

RancherOS v1.5.0, Rancher v2.1.6, Docker 18.06.1-ce (build e68fc7a)

Error From Rancher Server UI: This cluster is currently Updating; areas that interact directly with it will not be available until the API is ready. [network] Host [10.10.0.6] is not able to connect to the following ports: [10.10.0.7:2379, 10.10.0.8:2379, 10.10.0.8:2380, 10.10.0.6:2379]. Please check network policies and firewall rules
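The error above lists specific host:port pairs, so one way to narrow it down is to try those TCP connections by hand from the affected host. The following is a minimal sketch, not Rancher's own check: the function names `check_port` and `report_ports` are made up for illustration, and it assumes `bash` and coreutils `timeout` are available on the node.

```shell
# Try a TCP connection to host ($1) and port ($2); optional timeout in seconds ($3).
# Succeeds only if something accepts the connection.
# Relies on bash's /dev/tcp pseudo-device, so bash is invoked explicitly.
check_port() {
  timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Print one line per host:port argument saying whether it could be reached.
report_ports() {
  for target in "$@"; do
    if check_port "${target%:*}" "${target##*:}"; then
      echo "$target reachable"
    else
      echo "$target NOT reachable"
    fi
  done
}
```

For the error above, this could be run on 10.10.0.6 as `report_ports 10.10.0.7:2379 10.10.0.8:2379 10.10.0.8:2380 10.10.0.6:2379`. A port shows as not reachable both when a firewall blocks it and when nothing is listening on it yet, so the result only narrows the problem down.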

Is 2379 only listening on IPv6 here in RancherOS? I ran `sudo iptables -A INPUT -i eth0 -p tcp --dport 2379 --src 10.10.0.0/24 -j ACCEPT` and then `sudo iptables-save`.

But it doesn’t appear to persist between reboots, so I’m thinking this could be the problem. Will report back.
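One detail that may explain the lost rule: `iptables-save` only prints the current ruleset to standard output and does not store it anywhere by itself. A small sketch for checking whether the ACCEPT rule is still present after a reboot follows; the helper name `has_accept_rule` is hypothetical, and it simply scans `iptables-save` output supplied on stdin.

```shell
# Read `iptables-save` output on stdin and succeed if at least one rule
# for destination port $1 ends in an ACCEPT target.
has_accept_rule() {
  grep -E -- "--dport $1( |\$)" | grep -q -- '-j ACCEPT'
}
```

Typical use would be `sudo iptables-save | has_accept_rule 2379 && echo "rule present"`, run once before and once after the reboot. To keep rules across reboots, the dump has to be written to a file and fed back with `iptables-restore` at boot; how to hook that into RancherOS startup is not covered here.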

@portfield-slash Aha, I have tried. But it’s still registering. 😦

I’m stuck in a similar situation and believe that I’ve reproduced the issue.

At first, I had local KVM virtual machines with 2 cores and 2 GB of RAM each. When running the Node Run Command provided in the Rancher UI:

I get the “Waiting to register with Kubernetes” message and the node stays stuck.

Not sure if this helps but here’s everything from the “View in API” tab:

Heading to the machine `rke-test-n1`, `docker logs` for the `rancher/rancher-agent` container repeatedly shows:

Referring to @superseb’s comment

I later shut down the workers (`rke-test-n1` and `rke-test-n2`), upgraded the specs in KVM to 2 cores and 4 GB for both of them, then rebooted. `docker ps` shows the containers restarted into a fresh Up status, yet the logs still remain as before.

Requested info from issue template (for worker nodes):

- Docker version: (`docker version`, `docker info` preferred)

- Operating system and kernel: (`cat /etc/os-release`, `uname -r` preferred)

- Type/provider of hosts: (VirtualBox/Bare-metal/AWS/GCE/DO) KVM

- Host specification (CPU/mem): 2 Cores, 4096MiB
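The details requested by the issue template can be gathered in one pass on each node. This is only a convenience sketch: the function name `collect_node_info` is made up, it uses just the commands the template names plus `nproc` and `/proc/meminfo`, and it prints a note instead of failing when a command is missing.

```shell
# Print the node details asked for by the issue template.
collect_node_info() {
  echo "== Docker =="
  if command -v docker >/dev/null 2>&1; then
    docker version 2>&1 || echo "docker version failed"
    docker info 2>&1 || echo "docker info failed"
  else
    echo "docker not found"
  fi

  echo "== Operating system =="
  if [ -r /etc/os-release ]; then
    cat /etc/os-release
  else
    echo "/etc/os-release not readable"
  fi

  echo "== Kernel =="
  uname -r

  echo "== CPU / memory =="
  if command -v nproc >/dev/null 2>&1; then
    echo "CPUs: $(nproc)"
  fi
  if [ -r /proc/meminfo ]; then
    grep '^MemTotal:' /proc/meminfo
  fi
}
```

Running `collect_node_info > node-info.txt 2>&1` on a worker gives a file that can be pasted into the issue.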

P.S. The master VM (2 Cores, 2048MiB) works fine, and `kubectl get nodes` also shows `rke-test-m1` is `Ready`.

As described on https://rancher.com/docs/rancher/v2.x/en/tasks/clusters/creating-a-cluster/create-cluster-custom/, the recommendation for small clusters is 2 vCPU and 4GB RAM, also because the k8s components need to run and take a chunk of the system.
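A quick way to compare a node against the recommendation quoted above is sketched below. The helper names are hypothetical, 4GB is taken to mean 4096 MiB, and the local readers assume a Linux host with `nproc`, `awk`, and `/proc/meminfo`.

```shell
# Succeed if the CPU count ($1) and memory in MiB ($2) meet the minimums.
# The defaults of 2 CPUs and 4096 MiB can be overridden with $3 and $4.
meets_minimum() {
  [ "$1" -ge "${3:-2}" ] && [ "$2" -ge "${4:-4096}" ]
}

# Read the values for the local machine.
local_cpus() { nproc; }
local_mem_mib() { awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo; }
```

For example, `meets_minimum "$(local_cpus)" "$(local_mem_mib)" || echo "below recommendation"`. Note that `MemTotal` is usually somewhat lower than the memory configured for the VM, because the kernel reserves part of it, so a VM set to exactly 4096 MiB can fail a strict comparison; passing a slightly lower threshold as the fourth argument avoids that false alarm.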

We have an open issue regarding incomplete kernels here: https://github.com/rancher/rancher/issues/10499

As this is far off topic, @YangKeao, if you can provide the information requested in https://github.com/rancher/rancher/issues/13689#issuecomment-396901983, we can investigate further.