rancher: RKE2 | Custom Windows 2022 cluster | Windows worker is "Unavailable" after snapshot restore

Rancher Server Setup

- Rancher version: v2.6-head 39befda

- Installation option (Docker install/Helm Chart): Helm

- If Helm Chart, Kubernetes Cluster and version (RKE1, RKE2, k3s, EKS, etc): RKE2

- Proxy/Cert Details:

Information about the Cluster

- Kubernetes version: v1.21.7 and v1.22.10

- Cluster Type (Local/Downstream): Downstream

- If downstream, what type of cluster? (Custom/Imported or specify provider for Hosted/Infrastructure Provider): Custom

Describe the bug

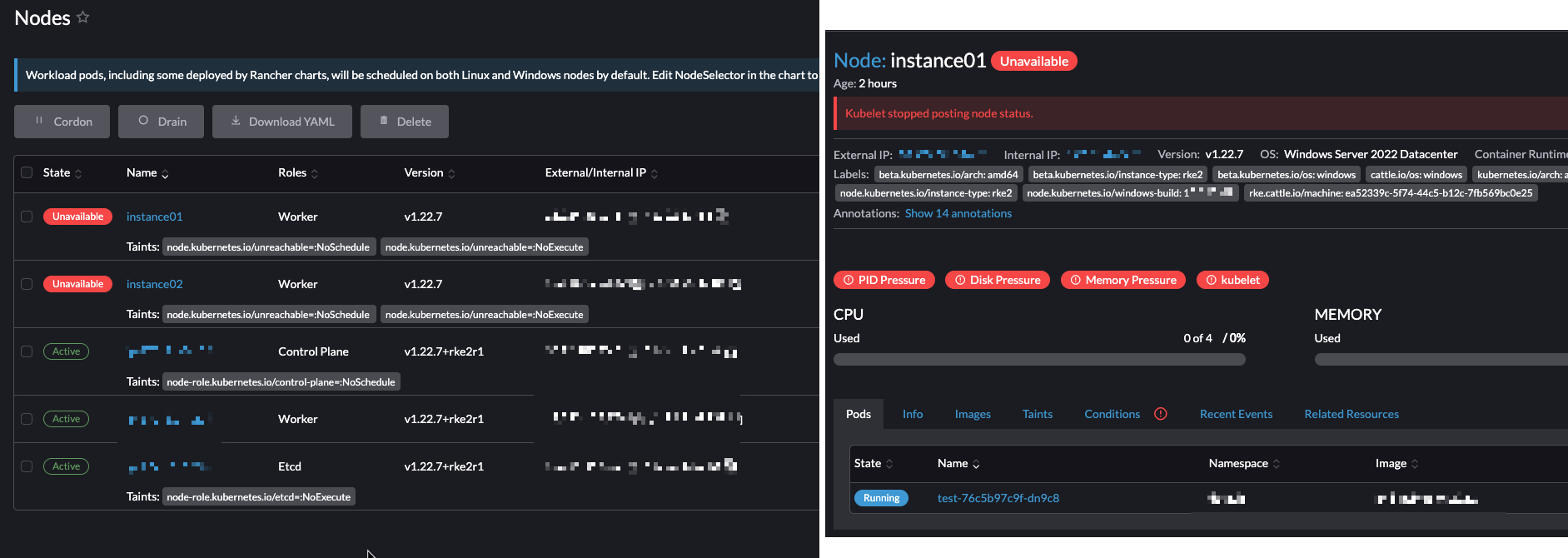

Windows worker node(s) becomes “Unavailable” with “Kubelet stopped posting node status.” message

To Reproduce

- Provision RKE2 custom Windows 2022 cluster (1 linux etcd + cp, 1 linux worker + 2 Windows 2022 workers)

- Wait for the cluster to provision

- Navigate to Cluster Management

- Take Snapshot of the etcd (three dots menu on the cluster)

- Wait for the snapshot to be created

- Restore “Restore Snaphot” -> Etcd only

Result Tried 3 different clusters. In two of those only 1 windows node became unavailable but in the third cluster all two workers became unavailable. Tried having nodes with separate roles (etcd and cp) - same result

Expected Result

All nodes are in active and working state after restore

Screenshots

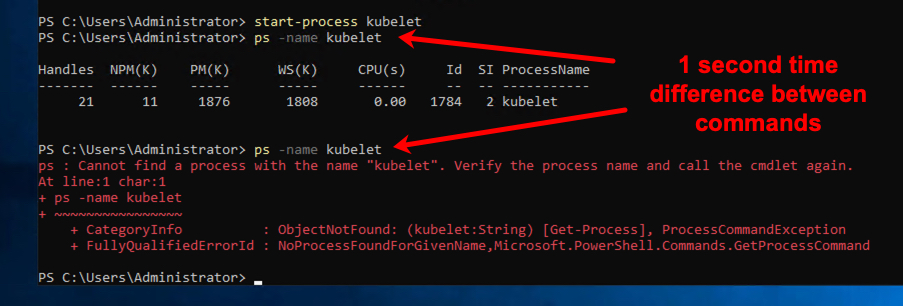

kubelet is not running on the node

when trying to start it - it run for a few seconds

Additional context

when kubectl get node

conditions:

- lastHeartbeatTime: "2022-03-03T22:10:39Z"

lastTransitionTime: "2022-03-03T22:12:40Z"

message: Kubelet stopped posting node status.

reason: NodeStatusUnknown

status: Unknown

type: MemoryPressure

- lastHeartbeatTime: "2022-03-03T22:10:39Z"

lastTransitionTime: "2022-03-03T22:12:40Z"

message: Kubelet stopped posting node status.

reason: NodeStatusUnknown

status: Unknown

type: DiskPressure

- lastHeartbeatTime: "2022-03-03T22:10:39Z"

lastTransitionTime: "2022-03-03T22:12:40Z"

message: Kubelet stopped posting node status.

reason: NodeStatusUnknown

status: Unknown

type: PIDPressure

- lastHeartbeatTime: "2022-03-03T22:10:39Z"

lastTransitionTime: "2022-03-03T22:12:40Z"

message: Kubelet stopped posting node status.

reason: NodeStatusUnknown

status: Unknown

type: Ready

Rancher logs

2022/03/03 22:12:23 [INFO] Handling backend connection request [c-m-msqrtvgq]

W0303 22:12:23.376225 32 reflector.go:442] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: watch of *v1.Secret ended with: an error on the server ("unable to decode an event from the watch stream: tunnel disconnect") has prevented the request from succeeding

W0303 22:12:23.376433 32 reflector.go:442] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: watch of *v1.ServiceAccount ended with: an error on the server ("unable to decode an event from the watch stream: tunnel disconnect") has prevented the request from succeeding

W0303 22:12:23.376654 32 reflector.go:442] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: watch of *v1.Role ended with: an error on the server ("unable to decode an event from the watch stream: tunnel disconnect") has prevented the request from succeeding

W0303 22:12:23.376836 32 reflector.go:442] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: watch of *v1.Namespace ended with: an error on the server ("unable to decode an event from the watch stream: tunnel disconnect") has prevented the request from succeeding

W0303 22:12:23.376838 32 reflector.go:442] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: watch of *v1.ResourceQuota ended with: an error on the server ("unable to decode an event from the watch stream: tunnel disconnect") has prevented the request from succeeding

W0303 22:12:23.376887 32 reflector.go:442] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: watch of *v1.ClusterRoleBinding ended with: an error on the server ("unable to decode an event from the watch stream: tunnel disconnect") has prevented the request from succeeding

2022/03/03 22:12:24 [INFO] Handling backend connection request [stv-cluster-c-m-msqrtvgq]

I0303 22:12:46.445297 32 trace.go:205] Trace[1856044629]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:12:03.193) (total time: 43252ms):

Trace[1856044629]: ---"Objects listed" 43252ms (22:12:46.445)

Trace[1856044629]: [43.252175226s] [43.252175226s] END

I0303 22:12:46.445935 32 trace.go:205] Trace[75811888]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:12:03.035) (total time: 43410ms):

Trace[75811888]: ---"Objects listed" 43410ms (22:12:46.445)

Trace[75811888]: [43.410276704s] [43.410276704s] END

2022/03/03 22:12:46 [INFO] [snapshotbackpopulate] rkecluster fleet-default/rhel122: processing configmap kube-system/rke2-etcd-snapshots

I0303 22:12:46.450192 32 trace.go:205] Trace[1398562026]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:12:03.199) (total time: 43250ms):

Trace[1398562026]: ---"Objects listed" 43250ms (22:12:46.450)

Trace[1398562026]: [43.250663856s] [43.250663856s] END

I0303 22:12:46.451737 32 trace.go:205] Trace[753672252]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:12:03.149) (total time: 43302ms):

Trace[753672252]: ---"Objects listed" 43302ms (22:12:46.451)

Trace[753672252]: [43.302648437s] [43.302648437s] END

I0303 22:12:46.451900 32 trace.go:205] Trace[742760657]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:12:03.118) (total time: 43332ms):

Trace[742760657]: ---"Objects listed" 43332ms (22:12:46.451)

Trace[742760657]: [43.332992701s] [43.332992701s] END

I0303 22:12:46.451737 32 trace.go:205] Trace[971905992]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:12:02.961) (total time: 43490ms):

Trace[971905992]: ---"Objects listed" 43490ms (22:12:46.451)

Trace[971905992]: [43.490083996s] [43.490083996s] END

I0303 22:12:46.719634 32 trace.go:205] Trace[780757849]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:12:03.577) (total time: 43142ms):

Trace[780757849]: ---"Objects listed" 43142ms (22:12:46.719)

Trace[780757849]: [43.142167239s] [43.142167239s] END

2022/03/03 22:12:46 [INFO] [snapshotbackpopulate] rkecluster fleet-default/rhel122: processing configmap kube-system/rke2-etcd-snapshots

I0303 22:12:54.905072 32 trace.go:205] Trace[2109130654]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:12:44.904) (total time: 10000ms):

Trace[2109130654]: [10.000954349s] [10.000954349s] END

E0303 22:12:54.905106 32 reflector.go:139] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: Failed to watch *v1.Node: failed to list *v1.Node: Get "https://XXXX/k8s/clusters/c-m-msqrtvgq/api/v1/nodes?resourceVersion=109801&timeout=10s": context deadline exceeded

I0303 22:13:56.542803 32 trace.go:205] Trace[1602264513]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:13:46.542) (total time: 10000ms):

Trace[1602264513]: [10.000719583s] [10.000719583s] END

E0303 22:13:56.542831 32 reflector.go:139] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: Failed to watch *v1.Node: failed to list *v1.Node: Get "https://XXXX/k8s/clusters/c-m-msqrtvgq/api/v1/nodes?resourceVersion=109801&timeout=10s": context deadline exceeded

I0303 22:14:42.020467 32 trace.go:205] Trace[331678667]: "Reflector ListAndWatch" name:github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168 (03-Mar-2022 22:14:32.019) (total time: 10001ms):

Trace[331678667]: [10.001042789s] [10.001042789s] END

E0303 22:14:42.020500 32 reflector.go:139] github.com/rancher/rancher/go/pkg/mod/github.com/rancher/client-go@v1.22.3-rancher.1/tools/cache/reflector.go:168: Failed to watch *v1.Node: failed to list *v1.Node: Get "https://XXXX/k8s/clusters/c-m-msqrtvgq/api/v1/nodes?resourceVersion=109801&timeout=10s": context deadline exceeded

About this issue

- Original URL

- State: closed

- Created 2 years ago

- Comments: 16 (10 by maintainers)

The blocker has been removed and I just tested this morning with the latest 2.6-head. Everything restored just fine with a Windows worker present.