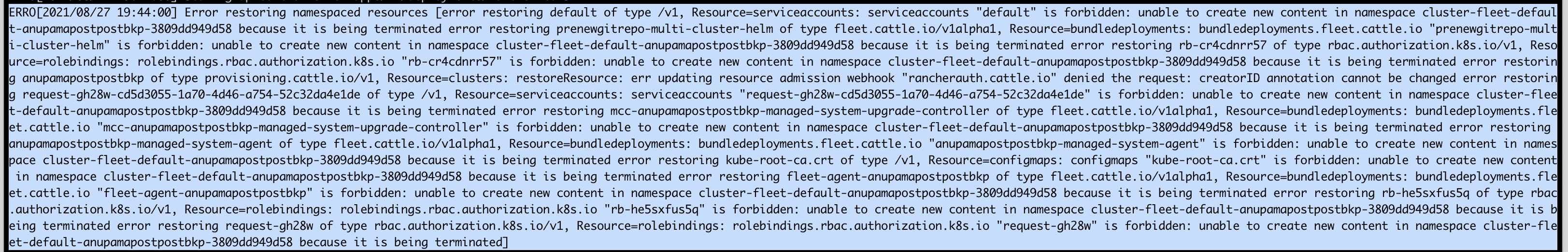

rancher: Restoring Rancher from a backup on a migrated Rancher server fails with the errors `unable to create new content in namespace cluster-fleet-default-anupamapostbkp because it is being terminated` and `error restoring`

Rancher Server Setup

- Rancher version: v2.6.0-rc8

- Installation option (Docker install/Helm Chart): Helm

- If Helm Chart, Kubernetes Info:

  - Cluster Type (RKE1, RKE2, k3s, EKS, etc): RKE1
  - Node Setup: 3 nodes, all roles
  - Version: v1.21.4


- Proxy/Cert Details: Self-signed

Information about the Cluster

- Kubernetes version:

- Cluster Type (Local/Downstream): Downstream (node driver RKE1 and RKE2 clusters)

- If downstream, what type of cluster? (Custom/Imported or specify provider for Hosted/Infrastructure Provider):

Describe the bug

Restoring the Rancher server fails when the backup was created on a Rancher server that had been migrated to a new RKE cluster.

To Reproduce

- On the Rancher server, created an RKE1 and an RKE2 node driver cluster [3 worker, 1 etcd, 1 cp node in each cluster].

- Installed the backup chart, version 2.0.0+up2.0.0-rc11, in the local cluster.

- From Continuous Delivery, created a git repo, fleet1 [url: https://github.com/rancher/fleet-examples, path: multi-cluster/helm].

- Took a backup of the Rancher server, bkp1.

- Created another git repo, fleet2 [url: https://github.com/rancher/fleet-examples, path: single-cluster/helm].

- Created another backup, bkp2 [reused after migration].

- Created a new RKE1 cluster and installed the Rancher backup chart, version 2.0.0+up2.0.0-rc11.

- Restored the backup on the new RKE cluster, migrating Rancher to that cluster. Ref: https://rancher.com/docs/rancher/v2.x/en/backups/v2.5/migrating-rancher/

- All clusters come up fine. Verify that there are no errors in the fleet logs on the downstream clusters and that the clusters in Continuous Delivery are all up and active.

- Create a new RKE2 cluster on this new Rancher server.

- Take a backup, bkp3, once the cluster is up.

- Perform a restore from backup bkp2 [from the steps above].

- All clusters come up fine. Verify that there are no errors in the fleet logs on the downstream clusters and that the clusters in Continuous Delivery are all up and active.

- Perform another restore, using bkp3.
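The steps above create Fleet `GitRepo` objects and rancher-backup `Backup`/`Restore` objects through the UI. As a sketch only, assuming the `fleet.cattle.io/v1alpha1` and `resources.cattle.io/v1` field names used below (check them against the CRDs installed in your cluster), the same objects could be generated as JSON and piped to `kubectl apply -f -`. The helper names are hypothetical, the `fleet-default` workspace is an assumption, and storage-location and encryption settings are left out.

```python
import json

# Hypothetical helpers: field names are assumed from the Fleet and
# rancher-backup CRDs; verify against the CRDs in your cluster.
FLEET_EXAMPLES = "https://github.com/rancher/fleet-examples"


def git_repo(name: str, path: str, namespace: str = "fleet-default") -> dict:
    """Fleet GitRepo for one path of fleet-examples (workspace is an assumption)."""
    return {
        "apiVersion": "fleet.cattle.io/v1alpha1",
        "kind": "GitRepo",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"repo": FLEET_EXAMPLES, "paths": [path]},
    }


def backup(name: str) -> dict:
    """One-time Backup using the default Rancher resource set."""
    return {
        "apiVersion": "resources.cattle.io/v1",
        "kind": "Backup",
        "metadata": {"name": name},
        "spec": {"resourceSetName": "rancher-resource-set"},
    }


def restore(name: str, backup_filename: str, prune: bool = True) -> dict:
    """Restore from a backup file; storage and encryption settings omitted."""
    return {
        "apiVersion": "resources.cattle.io/v1",
        "kind": "Restore",
        "metadata": {"name": name},
        "spec": {"backupFilename": backup_filename, "prune": prune},
    }


if __name__ == "__main__":
    # Objects for the steps before the migration.
    manifests = [
        git_repo("fleet1", "multi-cluster/helm"),
        backup("bkp1"),
        git_repo("fleet2", "single-cluster/helm"),
        backup("bkp2"),
    ]
    # kubectl apply -f accepts a v1 List wrapping several objects.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

The restores from bkp2 and bkp3 would call `restore(...)` with the file name the operator generated for that backup (shown on the Backup resource); the migration guide linked above restores with `prune` set to false.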

Result

- The restore fails, with the errors seen continuously in the logs of the backup resource `rancher-backup-xxx`.

- The Rancher server retries multiple times. After some time it comes up, but the cluster created after the migration to the new RKE cluster is stuck in the `Wait Check-In` state.

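The error in the title reports that the namespace `cluster-fleet-default-anupamapostbkp` is being terminated. A minimal sketch for spotting namespaces stuck in that state, assuming the JSON printed by `kubectl get namespaces -o json` as input; the function name and the sample data are hypothetical and were not captured from the affected cluster.

```python
import json


def terminating_namespaces(namespace_list_json: str) -> list[str]:
    """Return names of namespaces whose status.phase is "Terminating".

    Expects the JSON printed by `kubectl get namespaces -o json`.
    """
    data = json.loads(namespace_list_json)
    return [
        item["metadata"]["name"]
        for item in data.get("items", [])
        if item.get("status", {}).get("phase") == "Terminating"
    ]


if __name__ == "__main__":
    # Hypothetical sample input, not taken from the affected cluster.
    sample = json.dumps({"items": [
        {"metadata": {"name": "fleet-default"}, "status": {"phase": "Active"}},
        {"metadata": {"name": "cluster-fleet-default-anupamapostbkp"},
         "status": {"phase": "Terminating"}},
    ]})
    print(terminating_namespaces(sample))  # ['cluster-fleet-default-anupamapostbkp']
```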

Expected Result

The restore should succeed with no errors, and the downstream clusters should come up active.

Additional Info:

The issue is seen for both RKE1 and RKE2 downstream clusters.

Related: https://github.com/rancher/rancher/issues/33954

SURE-5358

About this issue


- State: closed

- Created 3 years ago

- Comments: 19 (13 by maintainers)

@anupama2501 just pushed https://github.com/rancherlabs/release-notes/pull/328/commits/c94295f8dbcac8bc79bd637452110ce61aa4328d to address your comment

cc @btat @martyav Release note for this ticket needs to be updated as well.