quarkus: Vert.x Context doesn't get propagated when using Infrastructure.getDefaultWorkerPool()

Describe the bug

Vert.x Context doesn’t get propagated when using Infrastructure.getDefaultWorkerPool()

Whenever you use reactive programming with Mutiny and run a pipeline with runSubscriptionOn(Infrastructure.getDefaultWorkerPool()), the Vert.x context doesn’t get propagated, whereas with Infrastructure.getDefaultExecutor() it does.

This impacts OpenTelemetry, MDC, and any other feature that relies on the Vert.x context. Their context data is stored inside the Vert.x Context, so it is lost when that context isn't propagated.
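The following Java sketch makes the failure mode concrete without pulling in Quarkus. It uses only the standard library. A plain ThreadLocal stands in for the thread-bound Vert.x Context; this is an analogy, not how Vert.x stores it. The names ContextLossDemo, REQUEST_CONTEXT and readOnWorker are hypothetical and are not Quarkus or Mutiny APIs. A value bound to the submitting thread is not visible to a task that an executor runs on another thread, unless something carries it across:

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ContextLossDemo {
    // Hypothetical stand-in for the per-request Vert.x Context (or MDC data):
    // a value visible only to the thread that set it.
    static final ThreadLocal<String> REQUEST_CONTEXT = new ThreadLocal<>();

    // Sets the "context" on the calling thread, then reads it from a task running on
    // a plain worker pool that performs no propagation (analogous to an undecorated
    // executor passed to runSubscriptionOn).
    static String readOnWorker() throws Exception {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            REQUEST_CONTEXT.set("trace-123");
            Future<String> seen = pool.submit(() -> REQUEST_CONTEXT.get());
            return seen.get(); // value observed on the worker thread
        } finally {
            REQUEST_CONTEXT.remove();
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("worker sees: " + readOnWorker()); // prints "worker sees: null"
    }
}
```

In the issue, the same thing happens to the OpenTelemetry span context. The task on the worker pool cannot find its parent, so it starts a new trace.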

Expected behavior

Using Infrastructure.getDefaultWorkerPool() with Mutiny should behave the same as using Infrastructure.getDefaultExecutor(): the Vert.x Context should be set.

Actual behavior

When using Infrastructure.getDefaultWorkerPool(), the Vert.x Context is null.

How to Reproduce?

OPENTELEMETRY IS ONLY BEING USED AS AN EXAMPLE AND REPRODUCER, THE ISSUE IS NOT RESTRICTED TO IT

https://github.com/luneo7/opentelemetry-reproducer

Just run ./mvnw clean test and you will see that the Vert.x Context doesn’t get propagated. As a result, the OTel span doesn’t have the right parent ID when the worker pool is used.

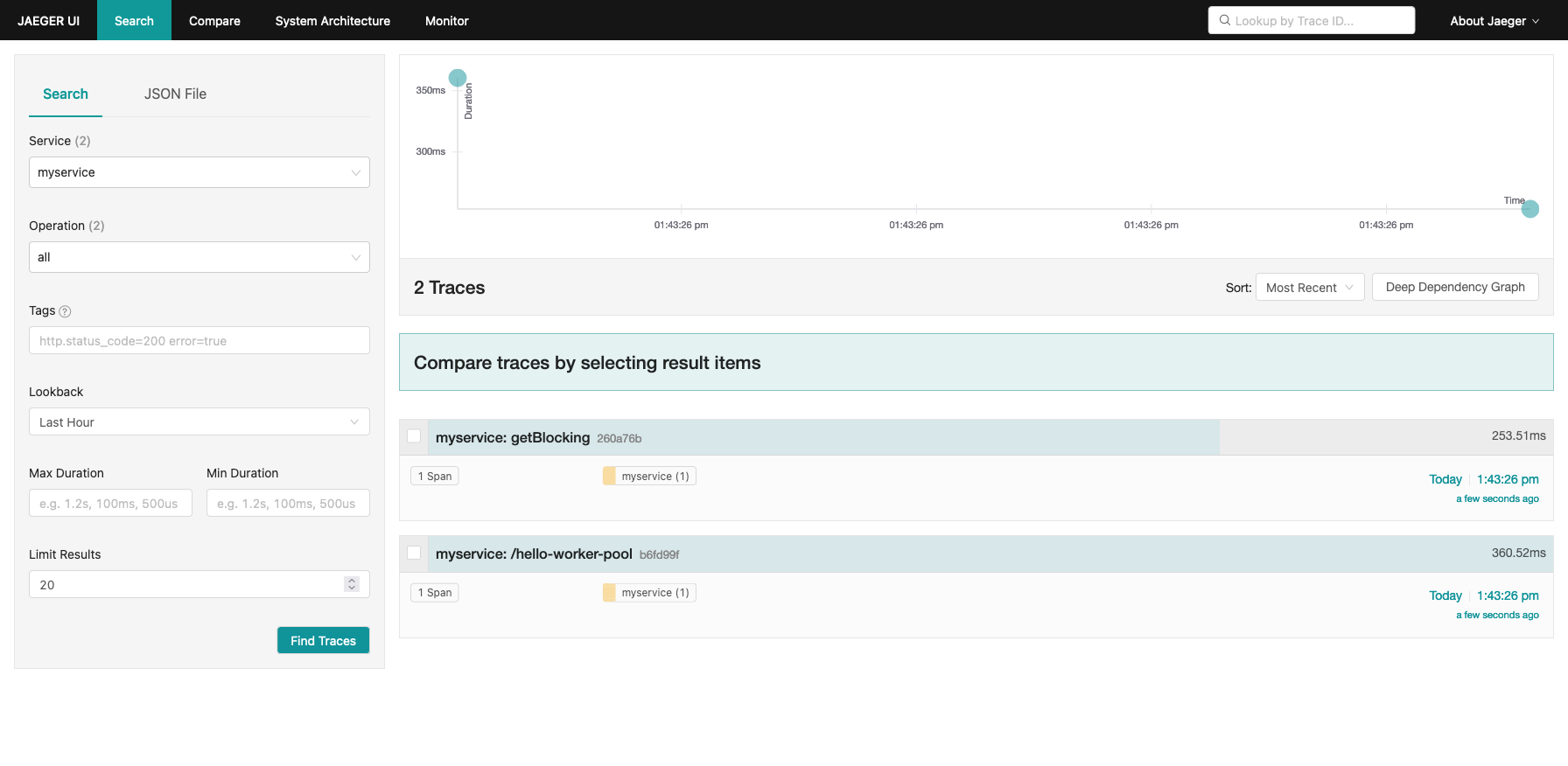

When running curl http://localhost:8080/hello-worker-pool, you will see that two distinct, unrelated spans are created:

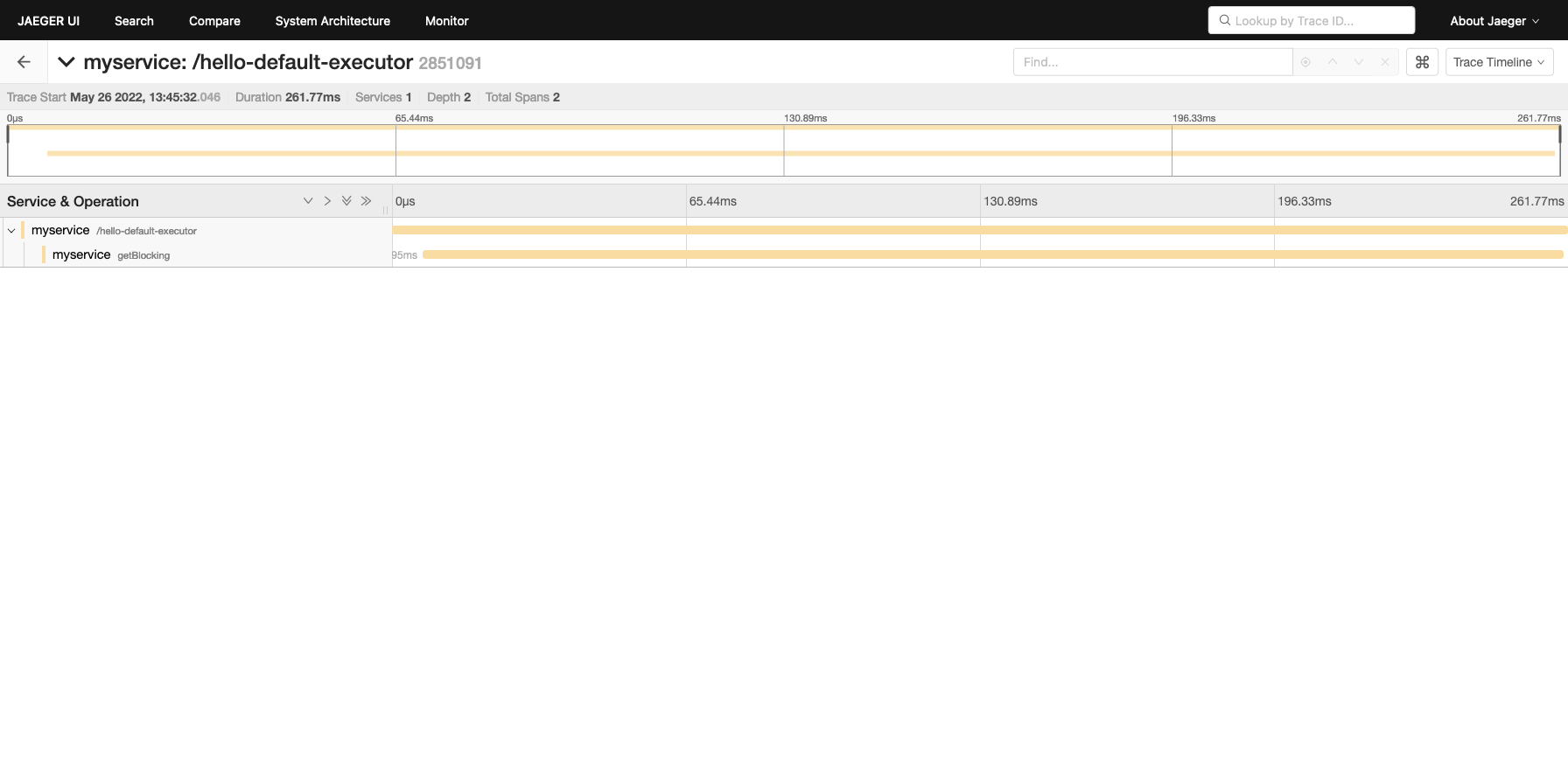

When running curl http://localhost:8080/hello-default-executor, you will see that the spans are created correctly: the two spans are correlated with each other.

Output of uname -a or ver

No response

Output of java -version

No response

GraalVM version (if different from Java)

No response

Quarkus version or git rev

2.9.2.Final

Build tool (i.e., output of mvnw --version or gradlew --version)

No response

Additional information

No response

About this issue

- State: closed

- Created 2 years ago

- Comments: 31 (30 by maintainers)

Commits related to this issue

- Decorate callbacks for Infrastructure.getDefaultWorkerPool() See https://github.com/quarkusio/quarkus/issues/25818 for the original bug report. — committed to smallrye/smallrye-mutiny by jponge 2 years ago

- Avoid wrapping scheduled executors This is part of a larger bug fix in Quarkus, see https://github.com/quarkusio/quarkus/issues/25818 — committed to smallrye/smallrye-mutiny by jponge 2 years ago

- Avoid wrapping scheduled executors This is part of a larger bug fix in Quarkus, see https://github.com/quarkusio/quarkus/issues/25818 (cherry picked from commit cceccf5c40f1a0de244aea66af709a1dcad82... — committed to smallrye/smallrye-mutiny by jponge 2 years ago

- Fix for #25818 and upgrade to Mutiny 1.6.0 This is part of a coordinated fix across Quarkus and Mutiny where scheduler wrapping would cause Vert.x context propagation not to be done. See https://git... — committed to jponge/quarkus by jponge 2 years ago

- Capture context across all scheduler methods Adapted from the draft code from @luneo7 in the discussions of - https://github.com/quarkusio/quarkus/pull/26242 - https://github.com/quarkusio/quarkus/is... — committed to jponge/quarkus by jponge 2 years ago

- Mutiny 1.6.0 upgrade and capture Vert.x contexts across all Mutiny schedulers Fixes #25818 This is part of a coordinated fix across Quarkus and Mutiny where scheduler wrapping would cause Vert.x con... — committed to jponge/quarkus by jponge 2 years ago

- Mutiny 1.6.0 upgrade and capture Vert.x contexts across all Mutiny schedulers Fixes #25818 This is part of a coordinated fix across Quarkus and Mutiny where scheduler wrapping would cause Vert.x con... — committed to gsmet/quarkus by jponge 2 years ago
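According to the commit titles, the fix decorates the callbacks submitted to the worker pool so that the Vert.x context is captured across all scheduler methods. The general technique can be sketched in plain Java. This is an analogue under stated assumptions, not the actual Mutiny or Quarkus code. A ThreadLocal again stands in for the Vert.x Context, and decorate, runTwoTasks and REQUEST_CONTEXT are hypothetical names. The context is captured when the task is submitted and installed on the worker thread only for the duration of the task:

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class ContextPropagationDemo {
    // Hypothetical stand-in for the thread-bound Vert.x Context.
    static final ThreadLocal<String> REQUEST_CONTEXT = new ThreadLocal<>();

    // Hypothetical decorator: capture the caller's context at submission time,
    // install it on the worker while the task runs, then restore the worker's own value.
    static <T> Callable<T> decorate(Callable<T> task) {
        final String captured = REQUEST_CONTEXT.get(); // evaluated on the submitting thread
        return () -> {
            String previous = REQUEST_CONTEXT.get(); // evaluated on the worker thread
            REQUEST_CONTEXT.set(captured);
            try {
                return task.call();
            } finally {
                if (previous == null) {
                    REQUEST_CONTEXT.remove();
                } else {
                    REQUEST_CONTEXT.set(previous);
                }
            }
        };
    }

    // Returns {value seen by a decorated task, value seen by a later plain task}.
    // Both tasks run on the same single worker thread.
    static String[] runTwoTasks() throws Exception {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            REQUEST_CONTEXT.set("trace-123");
            Callable<String> read = REQUEST_CONTEXT::get;
            String decorated = pool.submit(decorate(read)).get();
            String later = pool.submit(read).get(); // context must not leak into this task
            return new String[] {decorated, later};
        } finally {
            REQUEST_CONTEXT.remove();
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        String[] seen = runTwoTasks();
        System.out.println("decorated task sees: " + seen[0]); // prints "decorated task sees: trace-123"
        System.out.println("later plain task sees: " + seen[1]); // prints "later plain task sees: null"
    }
}
```

Restoring the worker's previous value in the finally block matters because pool threads are reused. Without it, one request's context could leak into an unrelated task that runs later on the same thread.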

@luneo7 We’ve had a look with @cescoffier and he’s found a potential solution across the Mutiny and Quarkus code bases. I’ll get back to you once I have PRs.

Nope, this is actually another bug in Quarkus where we are using `quarkus-resteasy-reactive` and not `quarkus-resteasy-mutiny` (https://github.com/quarkusio/quarkus/issues/16884 - this was closed but wasn’t fixed)… just changing the pom to use `quarkus-rest-client-reactive` instead of `quarkus-rest-client` makes that warning vanish…