pytest: Tests collection is slow on high number of tests

Hi,

We have over 15k tests in the repository, and recently switched from nosetests to pytest. Apparently, tests collection in pytest is way slower than in nosetests - 130s vs 30s. Also, I need to mention that norecursedirs and testpaths parameters are both set, just to exclude the case of walking in .git or other unrelated directories.

It’s also interesting, that the number of collected tests is asymptotic to time:

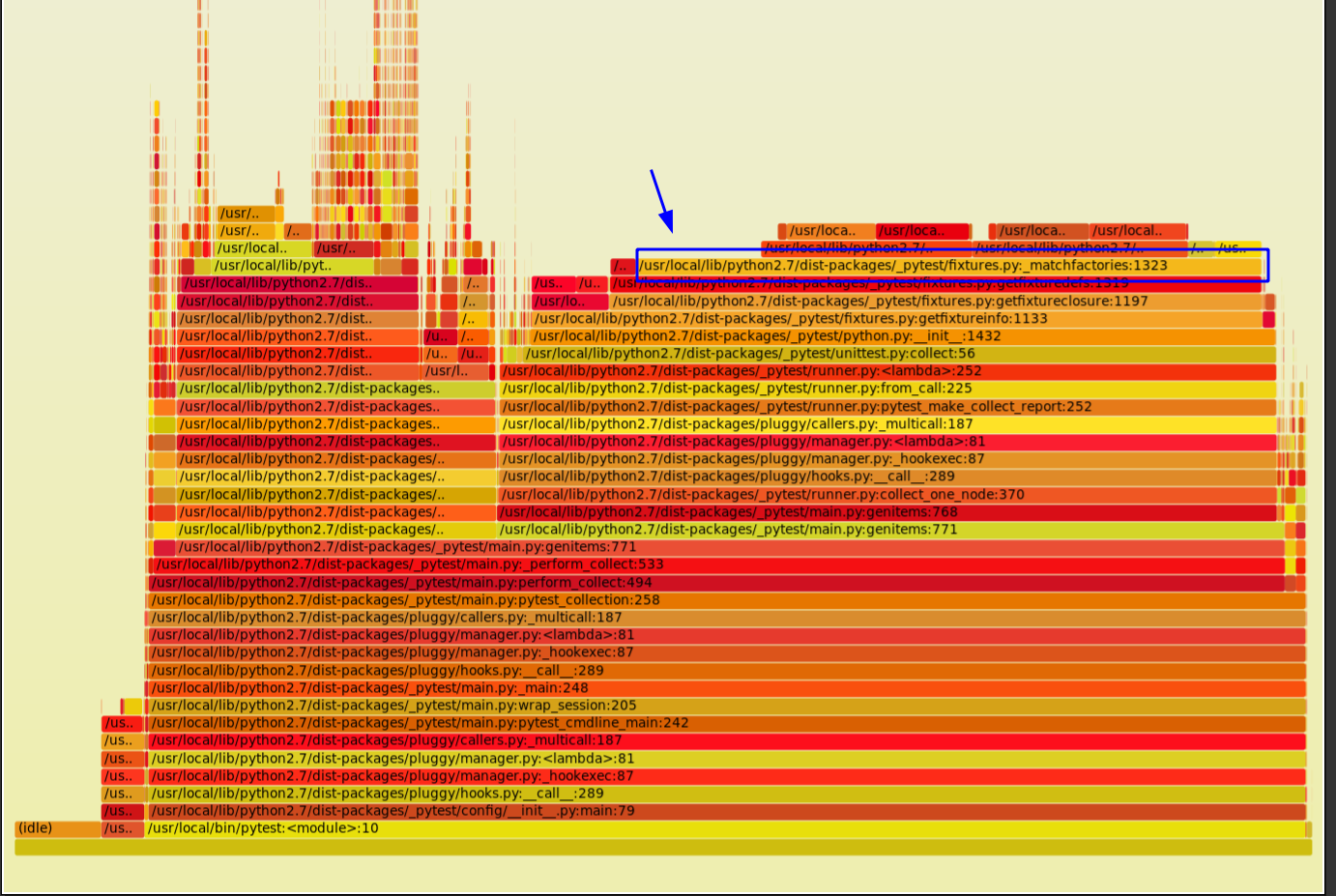

I tried to profile it using pyflame and cProfile, it seems like the slowest part is calling node.py:FixtureManager._matchfactories method call:

Here is a callgraph:

When I tried to log, what’s is happening, it seems like, the more tests, the more method is called with polynomial dependency.

Going deeper into _matchfactories method, I was able to reduce collection time from 130s to 85s just by caching the results of _splitnode method:

diff --git a/src/_pytest/nodes.py b/src/_pytest/nodes.py

index 491cf9d2c..62eb66086 100644

--- a/src/_pytest/nodes.py

+++ b/src/_pytest/nodes.py

@@ -13,6 +13,9 @@ SEP = "/"

tracebackcutdir = py.path.local(_pytest.__file__).dirpath()

+_node_cache = {}

+

+

def _splitnode(nodeid):

"""Split a nodeid into constituent 'parts'.

@@ -31,9 +34,13 @@ def _splitnode(nodeid):

if nodeid == "":

# If there is no root node at all, return an empty list so the caller's logic can remain sane

return []

- parts = nodeid.split(SEP)

- # Replace single last element 'test_foo.py::Bar' with multiple elements 'test_foo.py', 'Bar'

- parts[-1:] = parts[-1].split("::")

+

+ parts = _node_cache.get(nodeid)

+ if not parts:

+ parts = nodeid.split(SEP)

+ # Replace single last element 'test_foo.py::Bar' with multiple elements 'test_foo.py', 'Bar'

+ parts[-1:] = parts[-1].split("::")

+ _node_cache[nodeid] = parts

return parts

It doesn’t solve the asymptotic problem, but just making _splitnode and thus _matchfactories method faster:

Please let me know if you think there could be a better way to fix the problem.

Thanks! Alex

About this issue

- Original URL

- State: closed

- Created 5 years ago

- Reactions: 3

- Comments: 46 (36 by maintainers)

Commits related to this issue

- Merge #90 90: Update pytest to 5.1.0 r=rehandalal a=pyup-bot This PR updates [pytest](https://pypi.org/project/pytest) from **5.0.1** to **5.1.0**. <details> <summary>Changelog</summary> ... — committed to rehandalal/therapist by bors[bot] 5 years ago

- Merge #1963 1963: Scheduled weekly dependency update for week 35 r=jaredkerim a=pyup-bot ### Update [botocore](https://pypi.org/project/botocore) from **1.12.200** to **1.12.220**. <details> ... — committed to mozilla/normandy by bors[bot] 5 years ago

- Merge #32 32: Pin pytest to latest version 5.1.3 r=duckinator a=pyup-bot This PR pins [pytest](https://pypi.org/project/pytest) to the latest release **5.1.3**. <details> <summary>Changelog</s... — committed to duckinator/bork by bors[bot] 5 years ago

- Merge #1 1: Initial Update r=aragilar a=pyup-bot This PR sets up pyup.io on this repo and updates all dependencies at once, in a single branch. Subsequent pull requests will update one dependency... — committed to aragilar/pytest-info-collector by bors[bot] 4 years ago

- Merge #1 1: Initial Update r=aragilar a=pyup-bot This PR sets up pyup.io on this repo and updates all dependencies at once, in a single branch. Subsequent pull requests will update one dependency... — committed to aragilar/spaceplot by bors[bot] 4 years ago

- Merge #1 1: Initial Update r=aragilar a=pyup-bot This PR sets up pyup.io on this repo and updates all dependencies at once, in a single branch. Subsequent pull requests will update one dependency... — committed to aragilar/venv_tools by bors[bot] 4 years ago

- Merge #5 5: Initial Update r=aragilar a=pyup-bot This PR sets up pyup.io on this repo and updates all dependencies at once, in a single branch. Subsequent pull requests will update one dependency... — committed to aragilar/root-solver by bors[bot] 4 years ago

Note that on Python 3.7+, you can run

python3 -X importtime -m pytestto potentially find the worst offenders contributing to this.python-importtime-graph (hosted version, or you can download the

index.htmland use it locally) and importtime-waterfall (with a HAR viewer) can be useful in visualizing the information.Hi @ashb,

Thanks for posting the results.

Seems like a lot of time is spent in the import mechanism:

What is not clear sometimes is that, in order to import test modules, pytest needs to import the top-level imports in the file, which often import from other modules in the project and its dependencies, which can be costly.

A way to explore that is to remove all top-level imports from all the test files, and run

pytest --collect-only; this way we can actually measure only pytest’s collection overhead.@boxed pytest==5.2.2 collects 15k tests for 70 seconds. That’s the result of tests collection, when splitnode optimization PR is merged. However, collection time still seem to asymptotically depend on the number of tests, as the first 5k are collected just for 15 seconds.

Thanks @nicoddemus It will take me a while to obfuscate data, but I’ll prepare the output soon.

Thanks!

I tend to agree here, unfortunately the AST is not enough to collect all tests: besides perametrization and marks as mentioned by @the-compiler and @ashb, the entire collection mechanism is flexible enough to allow for plugins and normal testing code to generate tests dynamically. It could of course be made to work with the 99% of cases, but as @The-Compiler mentions I’m not sure it is worth the trouble.

@atzorvas Instead of using

-m pytest, you can provide the full path to thepytestscript. For example, on my machine (Linux), in a project which is using a virtualenv calledvenv, pytest is foundvenv/bin/pytest, so the command is:If you are using a unix-like OS, you can find where your pytest by running

which pytest.Sure, let me generate and obfuscate the report.

On Tue, May 5, 2020 at 4:45 PM Ran Benita notifications@github.com wrote:

Tested our case, without the fix - it’s 1m 33 seconds, with the fix - 1m 29 seconds (20k tests).

On Wed, Apr 29, 2020 at 11:11 AM Alexey Zankevich zancudero@gmail.com wrote:

Last I looked it hadn’t been fixed at least. Maybe 6 seconds is a lot better than it was but I’d bet it’s about 5 seconds too much 😃

Hi @boxed,

Thanks for getting back. Let me check the performance with the most recent pytest version.

@bluetech I’ll try to create a fake repo, which is slow to collect.

@nicoddemus great thanks for guidance in that PR!