puppeteer: page.setRequestInterception causes network requests to hang/timeout

Steps to reproduce

Tell us about your environment:

- Puppeteer version: 1.20.0

- Platform / OS version: macOS (10.14.5 (18F203))

- URLs (if applicable): https://www.mapbox.com/gallery/#ice-cream

- Node.js version: 10.15.2

What steps will reproduce the problem?

Setting request interception causes certain requests to never completely resolve (though the goto method doesn’t seem to mind).

Try the following:

    const puppeteer = require('puppeteer');

    async function run() {
      let browser = null;

      try {
        browser = await puppeteer.launch();
        const page = await browser.newPage();

        // No request interception here: the page and its map tiles load normally.
        await page.goto('https://www.mapbox.com/gallery/#ice-cream', { waitUntil: 'networkidle0' });
        await page.screenshot({ path: './temp.png' });
      } catch (e) {
        console.error(`Saw error:`, e);
      } finally {
        if (browser) {
          // Await the close so the process exits only after Chromium has shut down.
          await browser.close();
        }
      }
    }

    run();

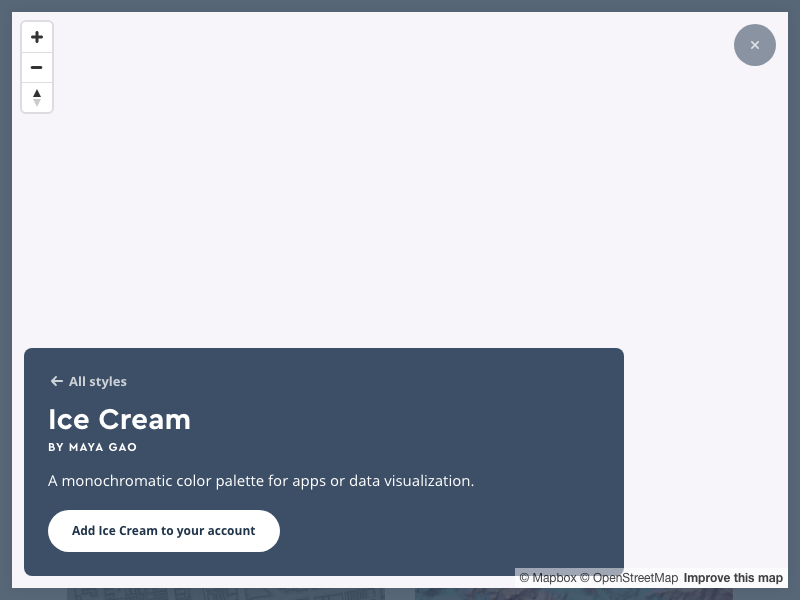

This returns a screenshot of:

Now, turn on request interception:

    const puppeteer = require('puppeteer');

    async function run() {
      let browser = null;

      try {
        browser = await puppeteer.launch();
        const page = await browser.newPage();

        // Enable interception and continue every request before navigating.
        await page.setRequestInterception(true);
        page.on('request', r => r.continue());

        await page.goto('https://www.mapbox.com/gallery/#ice-cream', { waitUntil: 'networkidle0' });
        await page.screenshot({ path: './temp.png' });
      } catch (e) {
        console.error(`Saw error:`, e);
      } finally {
        if (browser) {
          // Await the close so the process exits only after Chromium has shut down.
          await browser.close();
        }
      }
    }

    run();

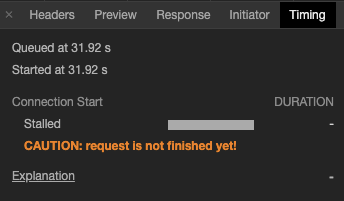

This produces the following, as mapping tiles don’t load (they hang)

What is the expected result? All network requests should resolve when the condition matches.

What happens instead? Network requests never resolve completely.

My suspicion is that this has to do with the version of Chromium bundled with pptr, but I haven’t checked prior versions just yet.
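To narrow down which requests never finish, one option is to record every request that has started but has not yet reported completion or failure. The sketch below assumes Puppeteer's request, requestfinished and requestfailed page events and the request.url() method; trackPendingRequests is a hypothetical helper name, not part of Puppeteer.

```javascript
// Hypothetical helper: records requests that started but never finished or failed.
// `page` is assumed to be a Puppeteer Page (any object with an .on(event, fn) method works).
function trackPendingRequests(page) {
  const pending = new Set();
  page.on('request', (request) => pending.add(request));
  page.on('requestfinished', (request) => pending.delete(request));
  page.on('requestfailed', (request) => pending.delete(request));
  // Returns the URLs of the requests that are still in flight.
  return () => Array.from(pending, (request) => request.url());
}

// Usage (assumes an existing `page`); call it before page.goto():
//   const getPending = trackPendingRequests(page);
//   await page.goto(url);
//   console.log('Still pending:', getPending());
```

Comparing the pending list with and without interception enabled should show whether the stalled requests are limited to the map tiles.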

About this issue

- State: closed

- Created 5 years ago

- Reactions: 24

- Comments: 25 (2 by maintainers)

Facing the issue in 2021 as well

Calling await page.setRequestInterception(true); stops further execution, and removing that call makes the script run smoothly. There is an issue with page.setRequestInterception(true).

Just in case you make the same mistake as me

Make sure you set up -

Before -

The docs state -

“Once request interception is enabled, every request will stall unless it’s continued, responded or aborted.”
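A minimal sketch of a handler that follows this rule, assuming Puppeteer's request.resourceType(), request.abort() and request.continue() methods. The names makeInterceptHandler and blockedTypes are hypothetical and used only for illustration. Every request takes exactly one of the two branches, and a rejected continue() or abort() call is caught rather than left as an unhandled rejection.

```javascript
// Hypothetical factory: returns a 'request' handler that resolves every request,
// either by aborting it (blocked resource types) or by continuing it.
function makeInterceptHandler(blockedTypes = []) {
  const blocked = new Set(blockedTypes);
  return async function handleRequest(request) {
    try {
      if (blocked.has(request.resourceType())) {
        await request.abort();
      } else {
        await request.continue();
      }
    } catch (err) {
      // continue()/abort() can fail, for example when the request was already handled.
      console.warn('Could not resolve request:', request.url(), err.message);
    }
  };
}

// Usage (assumes an existing `page`); register the handler before page.goto():
//   await page.setRequestInterception(true);
//   page.on('request', makeInterceptHandler(['image']));
```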

So page.on("request", (request) => request.continue()) is mandatory for every request you want to continue.

We are using Mapbox as our map provider. The map is not loaded when using an interceptor, similar to @joelgriffith’s issue described above. Map requests get the warning message

this is my interceptor code:

I tried with Puppeteer 1.19.0 and Puppeteer 2.1.1. There is no difference between those versions.

Facing the issue still.

Hey y’all, I found the solution to this. The download script for Chrome was pulling the latest nightly version, which is clearly full of bugs. I downloaded a stable binary of Chrome and symlinked it to the downloaded binary location in the ~/.local folder, and everything went smoothly.
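An alternative to symlinking, sketched under the assumption that a stable Chrome binary is already installed: pass its path through the executablePath option of puppeteer.launch(). The CHROME_STABLE_PATH variable and the buildLaunchOptions helper are hypothetical names chosen for illustration.

```javascript
// Hypothetical helper: builds launch options, pointing Puppeteer at a specific
// Chrome binary when CHROME_STABLE_PATH (an assumed variable name) is set.
function buildLaunchOptions(env = process.env) {
  const options = {};
  if (env.CHROME_STABLE_PATH) {
    options.executablePath = env.CHROME_STABLE_PATH;
  }
  return options;
}

// Usage (assumes puppeteer is installed):
//   const browser = await puppeteer.launch(buildLaunchOptions());
```

When the variable is unset, the options object stays empty and Puppeteer falls back to its bundled browser.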

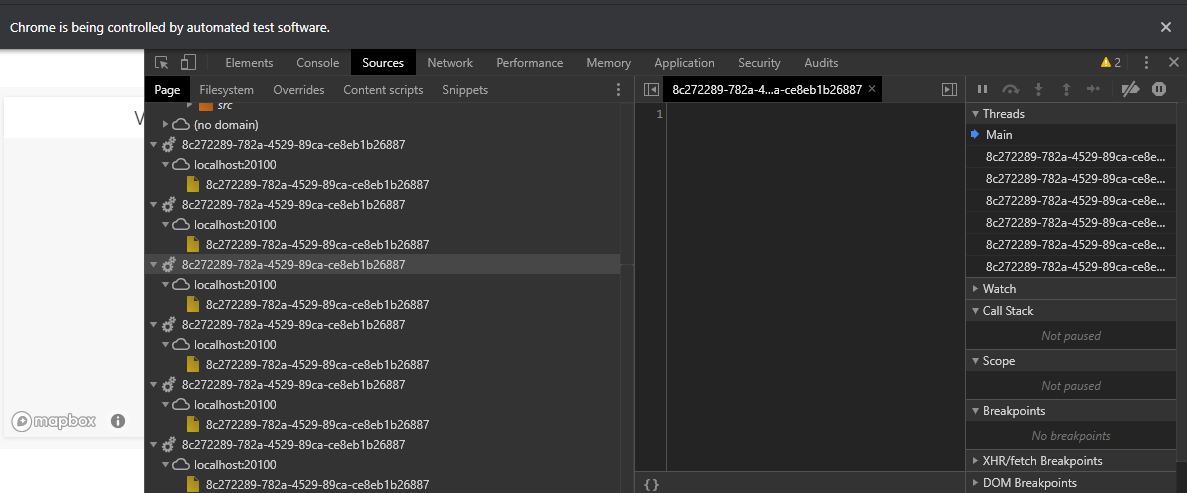

Tested with v2.1.1. Now the problem seems a bit different. Blob URLs are already loaded and there’s no “CAUTION: request is not finished yet!” in Timing panel.

However, workers are still empty.

Seems to be an issue with blob: protocol URLs.

Are y’all still experiencing this with puppeteer 2.0.0 or 2.1.0?
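To check whether the stalled requests really are blob: URLs, a list of request URLs can be grouped by protocol. This is only a diagnostic sketch; countByProtocol is a hypothetical helper, and the input is assumed to be an array of URL strings collected from request.url().

```javascript
// Hypothetical helper: counts URLs per protocol (for example 'https:' or 'blob:').
function countByProtocol(urls) {
  const counts = {};
  for (const url of urls) {
    let protocol;
    try {
      protocol = new URL(url).protocol;
    } catch (err) {
      protocol = 'invalid'; // the string could not be parsed as a URL
    }
    counts[protocol] = (counts[protocol] || 0) + 1;
  }
  return counts;
}
```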

Hello, any ideas here? having same issue. Thx

Check that you’re not using Google Fonts on the page. Google Fonts (and, I’m assuming, other Google properties?) have an implicit rate limit. I’ve just debugged it to removing Google Fonts from my tested pages and the network timeout disappeared. They don’t reject your request, they time out (at least at the time of this writing).
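If editing the page to remove Google Fonts is not practical, one possible workaround is to abort the font requests from the interception handler. This is an assumption, not something the comment above tested. The sketch assumes the fonts are served from fonts.googleapis.com and fonts.gstatic.com; isBlockedHost and BLOCKED_HOSTS are hypothetical names.

```javascript
// Assumed Google Fonts hosts; adjust to whatever the page actually requests.
const BLOCKED_HOSTS = ['fonts.googleapis.com', 'fonts.gstatic.com'];

// Hypothetical helper: true when the URL's hostname is in the block list.
function isBlockedHost(url, blockedHosts = BLOCKED_HOSTS) {
  try {
    return blockedHosts.includes(new URL(url).hostname);
  } catch (err) {
    return false; // not a parseable URL, so do not block it
  }
}

// Usage (assumes an existing `page` with request interception enabled):
//   page.on('request', (request) =>
//     isBlockedHost(request.url()) ? request.abort() : request.continue());
```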

Same problem with Google + puppeteer 11.0.0, despite using request.continue(). Rolling back to 7.1.0 does not fix the issue. Other websites such as https://httpbin.org/ip work well. I’m still investigating this issue, will report progress.

Reproduction code

Edit: hacky solution