prisma: Error in PostgreSQL connection: Error { kind: Closed, cause: None }

Since upgrading to 2.18.0 (now on 2.19.0) I have been experiencing a large number (10+/day per ~1k req/day) of the below error, my application is running on Google Cloud Run and I am using Postgres 12 via Cloud SQL.

These errors happen for all different queries/mutations and there is no discernible pattern that triggers the error, they happen regardless of cold-start, mem/cpu usage, etc.

Hi Prisma Team! My Prisma Client just crashed. This is the report:

Versions

| Name | Version |

|---|---|

| Node | v14.16.0 |

| OS | linux-musl |

| Prisma Client | 2.19.0 |

| Query Engine | query-engine c1455d0b443d66b0d9db9bcb1bb9ee0d5bbc511d |

| Database | postgres 12 (Cloud SQL) |

Query

mutation {

updateOneBooking(

where: {

id: "X"

}

data: {

status: Reserved

events: {

create: []

}

jobs: {

upsert: [

{

where: {

id: "X"

}

create: {

id: "X"

type: Email

payload: "X"

}

update: {

}

}

]

}

}

) {

id

...

}

}

Logs

ing the database: connection closed

at NodeEngine.graphQLToJSError (/usr/src/app/node_modules/@prisma/client/runtime/index.js:29799:14)

at NodeEngine.request (/usr/src/app/node_modules/@prisma/client/runtime/index.js:29695:24)

at runMicrotasks (<anonymous>)

at processTicksAndRejections (internal/process/task_queues.js:93:5)

at async cb (/usr/src/app/node_modules/@prisma/client/runtime/index.js:34744:26)

at async Object.getCurrentUser (/usr/src/app/dist/services/auth.js:65:43)

at async ApolloServer.createContext [as context] (/usr/src/app/dist/context.js:43:20) {

clientVersion: '2.19.0'

}

}

prisma:engine stdout Error in PostgreSQL connection: Error { kind: Closed, cause: None }

prisma:engine {

error: PrismaClientUnknownRequestError2 [PrismaClientUnknownRequestError]: Error in connector: Error querying the database: connection closed

at NodeEngine.graphQLToJSError (/usr/src/app/node_modules/@prisma/client/runtime/index.js:29799:14)

at NodeEngine.request (/usr/src/app/node_modules/@prisma/client/runtime/index.js:29695:24)

at runMicrotasks (<anonymous>)

at processTicksAndRejections (internal/process/task_queues.js:93:5)

at async cb (/usr/src/app/node_modules/@prisma/client/runtime/index.js:34744:26)

at async Object.onBookingPayment (/usr/src/app/dist/services/booking/index.js:472:9)

at async onPaymentIntentSucceeded (/usr/src/app/dist/webhooks/stripe.js:118:5)

at async stripeWebhook (/usr/src/app/dist/webhooks/stripe.js:59:13) {

clientVersion: '2.19.0'

}

}

prisma:engine {

error: PrismaClientUnknownRequestError2 [PrismaClientUnknownRequestError]: Error in connector: Error querying the database: connection closed

at NodeEngine.graphQLToJSError (/usr/src/app/node_modules/@prisma/client/runtime/index.js:29799:14)

at NodeEngine.request (/usr/src/app/node_modules/@prisma/client/runtime/index.js:29695:24)

at runMicrotasks (<anonymous>)

at processTicksAndRejections (internal/process/task_queues.js:93:5)

at async cb (/usr/src/app/node_modules/@prisma/client/runtime/index.js:34744:26)

at async Object.onBookingPayment (/usr/src/app/dist/services/booking/index.js:472:9)

at async onPaymentIntentSucceeded (/usr/src/app/dist/webhooks/stripe.js:118:5)

at async stripeWebhook (/usr/src/app/dist/webhooks/stripe.js:59:13) {

clientVersion: '2.19.0'

}

}

prisma:engine {

error: PrismaClientUnknownRequestError2 [PrismaClientUnknownRequestError]: Error in connector: Error querying the database: connection closed

at NodeEngine.graphQLToJSError (/usr/src/app/node_modules/@prisma/client/runtime/index.js:29799:14)

at NodeEngine.request (/usr/src/app/node_modules/@prisma/client/runtime/index.js:29695:24)

at runMicrotasks (<anonymous>)

at processTicksAndRejections (internal/process/task_queues.js:93:5)

at async cb (/usr/src/app/node_modules/@prisma/client/runtime/index.js:34744:26)

at async Object.onBookingPayment (/usr/src/app/dist/services/booking/index.js:472:9)

at async onPaymentIntentSucceeded (/usr/src/app/dist/webhooks/stripe.js:118:5)

at async stripeWebhook (/usr/src/app/dist/webhooks/stripe.js:59:13) {

clientVersion: '2.19.0'

}

}

Client Snippet

// PLEASE FILL YOUR CODE SNIPPET HERE

Schema

// PLEASE ADD YOUR SCHEMA HERE IF POSSIBLE

About this issue

- Original URL

- State: closed

- Created 3 years ago

- Reactions: 1

- Comments: 20 (9 by maintainers)

Since I suspect many here will want to retry on failed connections, I wrote a prisma middleware for that:

I tested extensively with the next release (2.21) and it works as expected. @janpio might want to add it somewhere else so other users find it.

You can use it like:

Do not try to use something similar before the next 2.21 release as connections are not discarded on error (that is point 3 of https://github.com/prisma/prisma/issues/6329#issuecomment-811097812), so this would just loop for no benefit.

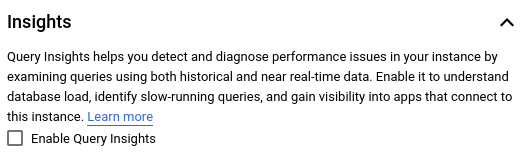

I disabled

Query Insightsin Google Cloud SQL database. Just go into DB settings and turn off the settings here:You do lose Query monitoring, which does seem like a very nice feature, but not as important as stability. Hopefully with next version I can enable it again.

Ok, so the upcoming update will change the following:

P1017: Server has closed the connection.If you have enabled Query Insights, this is maybe relevant for you:

I found out through help from Google Cloud Customer support that Query Insights if enabled have crashed databases.

Our logs was showing that our database was

the database system is in recovery modeI pushed the fix for problem 2 today, so next dev build will allow new connections parameters so we can set the idle lifetime to 2m, it should help a bit but won’t solve all issues. Problem 3 is still being worked on.

We are aware of that problem and are working on a fix, part of that fix was released in 2.20 so if you can test that it would be appreciated. To recap for everybody (since it is a complex problem with multiple sources):

Error { kind: Closed, cause: None }. There an issue tracking a possible solution to that https://github.com/prisma/quaint/issues/273 and @pimeys will be working on that.As for the connection limit of 100 and the calculation you are doing. From my personal experience, Google allows a surge to go over the limit when deploying a new version of the application. I had some issues on smaller databases (staging) of connection exhaustion. So just be conservative with the upper limit or put a pgbouncer between your instances and the DB (unfortunately there is no managed service for that on Cloud SQL, but aiven has one).

@Sytten I use the uptime check as well on Cloud Run and for an application handling ~5-10k req/day I see at least 10+ of these errors per day.

Having the same issue with Google App Engine and can confirm that upgrading to

2.20.1didn’t resolve it.@Sytten Do the read/writes get interrupted/lost once we get this error, do we need to manually handle it and do retries? Asking because I do not want the data to be lost on production.

Thanks for the detailed follow up, I will upgrade to 2.20.x and see if we notice any differences.

Our Cloud Run service only has a max instance count of 4 so it’s likely due to one of the other issues you mentioned.

Thanks for all the hard work!

Thanks, we seems to have some problem there in relation to Google Cloud’s something, see the labels I applied to this issue and the issues in there. It has been pretty spotty in the past, but seems to be more present now. We have a hunch that it is about connection management with the Cloud SQL instance, and are working on a fix already - but as we have no real reproduction (which we can reproduce all the time, reliably) this is pretty hard.