prefect: `ValueError: Path /root/.prefect/storage/...` does not exist

Prefect 2.0b15 has an issue where a task will occasionally fail if it can't find the serialized file. It almost looks like a race condition: is the task starting before the file is written? The error appears only occasionally, after ~100 or more tasks have executed, not consistently.

    18:55:13.371 | DEBUG | prefect.engine - Reported crashed task run 'mouse_detection-49dd9cf8-37-b6710e8b93d84a3ea96ea1d76f546466-1' successfully.
    18:55:13.371 | INFO | Task run 'mouse_detection-49dd9cf8-75' - Crash detected! Execution was interrupted by an unexpected exception.
    18:55:13.372 | DEBUG | Task run 'mouse_detection-49dd9cf8-75' - Crash details:
    Traceback (most recent call last):
      File "/root/venv/lib/python3.8/site-packages/prefect_dask/task_runners.py", line 236, in wait
        return await future.result(timeout=timeout)
      File "/root/venv/lib/python3.8/site-packages/distributed/client.py", line 294, in _result
        raise exc.with_traceback(tb)
      File "/root/venv/lib/python3.8/site-packages/prefect/engine.py", line 957, in begin_task_run
        task_run.state.data._cache_data(await _retrieve_result(task_run.state))
      File "/root/venv/lib/python3.8/site-packages/prefect/results.py", line 38, in _retrieve_result
        serialized_result = await _retrieve_serialized_result(state.data)
      File "/root/venv/lib/python3.8/site-packages/prefect/client.py", line 104, in with_injected_client
        return await fn(*args, **kwargs)
      File "/root/venv/lib/python3.8/site-packages/prefect/results.py", line 34, in _retrieve_serialized_result
        return await filesystem.read_path(result.key)
      File "/root/venv/lib/python3.8/site-packages/prefect/filesystems.py", line 79, in read_path
        raise ValueError(f"Path {path} does not exist.")
    ValueError: Path /root/.prefect/storage/b371ce283437427e8f66dc8bb6de5d7c does not exist.
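The race-condition question above can be checked outside Prefect. The sketch below is a hypothetical diagnostic helper (`wait_for_path` is not part of Prefect, and its `timeout` and `interval` defaults are arbitrary): it polls for the storage file for a short period. If the file appears after a brief delay, a write/read race is plausible; if it never appears, the file was probably never written on, or is not visible from, the machine doing the read.

```python
import os
import time


def wait_for_path(path: str, timeout: float = 5.0, interval: float = 0.1) -> bool:
    """Poll until `path` exists or `timeout` seconds have elapsed.

    Hypothetical helper for diagnosis only; not a Prefect API.
    Returns True if the path was seen, False if the deadline passed first.
    """
    deadline = time.monotonic() + timeout
    while True:
        if os.path.exists(path):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Running this against the path from the error message, on the same worker or pod that raised it, should help separate "written late" from "never there".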

About this issue

- State: closed

- Created 2 years ago

- Reactions: 3

- Comments: 29 (11 by maintainers)

Commits related to this issue

- Add debug level log for task run rehydration Additional information is needed to debug cases like https://github.com/PrefectHQ/prefect/issues/6086 — committed to PrefectHQ/prefect by zanieb 2 years ago

- Add debug level log for task run rehydration (#6307) Additional information is needed to debug cases like https://github.com/PrefectHQ/prefect/issues/6086 — committed to PrefectHQ/prefect by zanieb 2 years ago

- Publish docs from release 2.0.4 (#6366) * Update task runner docs * Add map and async examples * Update docs/concepts/task-runners.md Co-authored-by: Jeff Hale <discdiver@users.noreply.githu... — committed to PrefectHQ/prefect by github-actions[bot] 2 years ago

UPDATE: Turns out the error was entirely due to the way we configured our k8s container. Really sorry for the trouble! We customized the k8s container spec with a `readinessProbe` and `livenessProbe`. These are the default settings we use for our FastAPI k8s deployment. However, it seems that they do not play well with Prefect, and the k8s cluster prematurely destroys “successful” pods. The symptom of k8s destroying the pod is the `ValueError: Path /root/.prefect/storage/... does not exist` error (and a “crashed” state for the very first task).

We removed the two probes and our flow runs were working again. From this experience here (and this thread from Slack), perhaps this specific `ValueError: Path... does not exist` is the first exception that is raised whenever the flow run’s server crashes?

Also getting this same error (Prefect 2.2.0, KubernetesJob, tested on both default and Dask task runners). Not sure if this helps, but I noticed that I only get this error for flows when a task takes longer than ~5:30 min to run… Are there any timeout / magic config numbers related to `anyio` or `prefect.engine` at the `task` level that might be set to 330 seconds?

NOTE: I do not get this error when running the flow locally.
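The fix described in the update (removing the two probes from the container spec) can be sketched in Python. This is a minimal illustration, assuming the job is described by a standard Kubernetes Job manifest held as a dict, with containers under `spec.template.spec.containers`. The helper name `strip_probes` and the example manifest (image name, probe path, and port) are hypothetical and not taken from the original deployment.

```python
from copy import deepcopy

# Probe fields named in the update above.
PROBE_KEYS = ("readinessProbe", "livenessProbe")


def strip_probes(job_manifest: dict) -> dict:
    """Return a copy of a Job manifest with the probes removed from every container.

    Hypothetical helper; the input is left unmodified.
    """
    manifest = deepcopy(job_manifest)
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        for key in PROBE_KEYS:
            container.pop(key, None)
    return manifest


# Illustrative manifest only; all values are placeholders.
example = {
    "apiVersion": "batch/v1",
    "kind": "Job",
    "spec": {"template": {"spec": {"containers": [
        {
            "name": "flow-run",
            "image": "example/image:tag",
            "readinessProbe": {"httpGet": {"path": "/health", "port": 8000}},
            "livenessProbe": {"httpGet": {"path": "/health", "port": 8000}},
        }
    ]}}},
}
cleaned = strip_probes(example)
```

The reasoning is that probes written for a long-running web service expect an HTTP endpoint to answer, which a batch-style flow-run container may never expose, so the cluster can treat a healthy run as failed.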

Traceback:

I have been working on a Prefect 2.0 POC deployed to OpenShift with:

Using this test flow deployed to an S3 bucket on the standalone Minio instance I was able to reproduce it quite consistently:

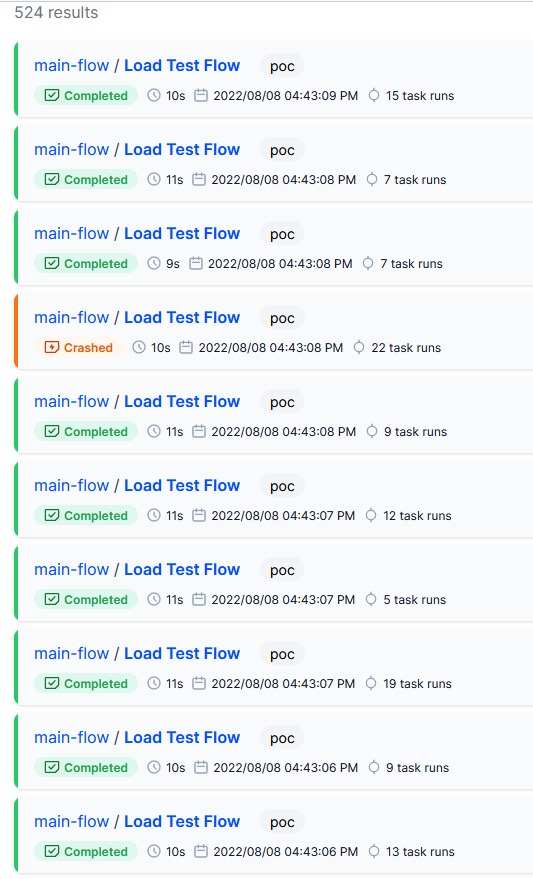

For example, using Postman to trigger the flow 10 times in quick succession to `{{prefect_host}}/api/deployments/:id/create_flow_run` with this payload:

I got a crash on 1/10 flows:

Here is the debug output: