RootEncoder: Application crashes with RTMP || IP camera

Hi all.

I’m writing an application that uses your library to send video to a server over RTMP.

I have added everything that is required to Gradle and the manifest.

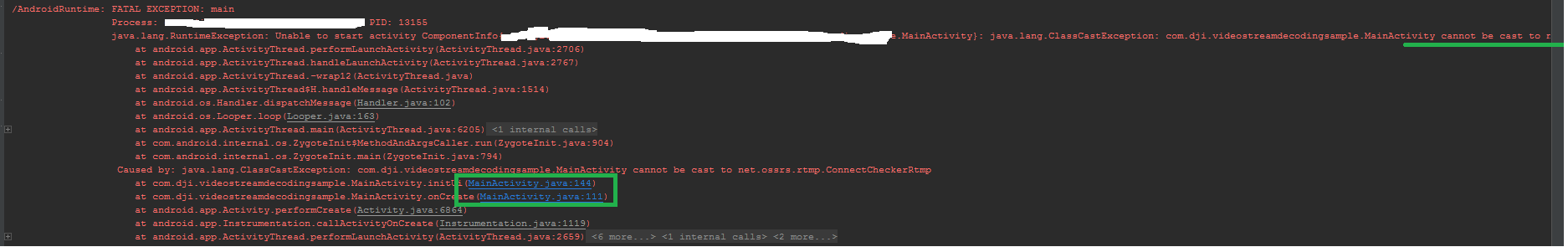

There is a MainActivity with a SurfaceView. I wanted to stream video from the SurfaceView directly to the server, as in your examples, but an error occurs and the application closes as soon as it opens.

It complains about two lines:

```java
// create builder
RtmpCamera1 rtmpCamera1 = new RtmpCamera1(videostreamPreviewSf, (ConnectCheckerRtmp) this);

// start stream
rtmpCamera1.startStream("rtmp://adress...");
```

About this issue

- State: closed

- Created 6 years ago

- Comments: 33 (16 by maintainers)

This could be useful for you. It is a short snippet that should work (of course, I can’t test it, so maybe not 😦). Read the comments, because you need to create two new methods in the VideoDecoder class for it to work:

It looks like the audio permission is missing. Remember to request permissions at runtime if you use API 23+.
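On API 23+ the CAMERA and RECORD_AUDIO permissions declared in the manifest also have to be granted at runtime before the camera and microphone can be opened. The sketch below is plain Java so it runs anywhere; `StreamPermissions` and `missing` are hypothetical helpers, not part of the library. In a real Activity you would build the `granted` array with `ContextCompat.checkSelfPermission(...)` and pass a non-empty result to `ActivityCompat.requestPermissions(this, missing, requestCode)`, then only create and start `RtmpCamera1` once the grant callback arrives.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class StreamPermissions {
    // Dangerous permissions needed to capture video and audio (assumed set for RtmpCamera1).
    static final String CAMERA = "android.permission.CAMERA";
    static final String RECORD_AUDIO = "android.permission.RECORD_AUDIO";
    static final String[] REQUIRED = {CAMERA, RECORD_AUDIO};

    // Runtime permissions were introduced in API 23 (Android 6.0);
    // below that, manifest permissions are granted at install time.
    static final int RUNTIME_PERMISSIONS_API = 23;

    // Returns the required permissions that still have to be requested at runtime.
    static String[] missing(int sdkInt, String[] granted) {
        if (sdkInt < RUNTIME_PERMISSIONS_API) {
            return new String[0];
        }
        List<String> grantedList = Arrays.asList(granted);
        List<String> result = new ArrayList<>();
        for (String permission : REQUIRED) {
            if (!grantedList.contains(permission)) {
                result.add(permission);
            }
        }
        return result.toArray(new String[0]);
    }
}
```

A missing RECORD_AUDIO grant is easy to overlook because the preview may still appear; requesting both permissions together avoids a crash when audio capture starts.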

AndroidViewFilter works with any view that draws something in its onDraw method (meaning the library gets the canvas from the view and draws that canvas), except a GLSurfaceView.

If your camera is the device camera, there is nothing you can do by default: your app is already using the camera, so the library can’t open it, and you would need to modify the library. If the camera is something like an IP camera and you want to re-stream it to another server, you can try AndroidViewFilter as I said. If that doesn’t work, you can modify the project to stream by using OpenGL to copy the surface frames to the surface of the VideoEncoder class (like I do with OpenglView, which copies camera frames to the SurfaceView surface and to the VideoEncoder surface), or send the H.264 and AAC ByteBuffers directly to the RTMP or RTSP modules.
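If you take the last route and feed already-encoded H.264 to the RTMP module yourself, the video usually arrives as an Annex-B byte stream (NAL units separated by `00 00 01` or `00 00 00 01` start codes), and you typically need to pick out the SPS (type 7), PPS (type 8) and IDR (type 5) units. The plain-Java sketch below uses a hypothetical `AnnexB` helper that is not part of the library; how the resulting units are then handed to the library’s RTMP module depends on the library version, so check its sources for that part.

```java
import java.util.ArrayList;
import java.util.List;

class AnnexB {
    // Splits an Annex-B buffer into NAL units.
    // Each entry is {offset, length} of the NAL payload, start code excluded.
    static List<int[]> split(byte[] data) {
        List<int[]> units = new ArrayList<>();
        int start = -1;
        int i = 0;
        while (i + 2 < data.length) {
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
                if (start >= 0) {
                    int end = i;
                    // Drop zero bytes belonging to a 4-byte start code or trailing padding.
                    while (end > start && data[end - 1] == 0) {
                        end--;
                    }
                    units.add(new int[]{start, end - start});
                }
                i += 3;
                start = i;
            } else {
                i++;
            }
        }
        if (start >= 0 && start < data.length) {
            units.add(new int[]{start, data.length - start});
        }
        return units;
    }

    // nal_unit_type is the low 5 bits of the first NAL header byte (7 = SPS, 8 = PPS, 5 = IDR).
    static int nalType(byte[] data, int offset) {
        return data[offset] & 0x1F;
    }
}
```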

Another way (maybe not useful for you) is to stream your device screen, as in the DisplayRtmpActivity class.

You can also do it the “hacky way”: stream with OpenglView and use AndroidViewFilter to send your other view. Of course this still uses the camera, but your view will overlay it, so if you give the OpenglView the same size as your SurfaceView, all of the camera content is covered. See OpenglRtmpActivity for how to use OpenglView and AndroidViewFilter. For more info, see this issue (already closed): https://github.com/pedroSG94/rtmp-rtsp-stream-client-java/issues/125

The error is here:

You need to implement ConnectCheckerRtmp in your class.
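This explains why the app closes immediately: `(ConnectCheckerRtmp) this` compiles because MainActivity is not a final class, but at runtime the cast throws a `ClassCastException` when the activity does not implement the interface. The plain-Java sketch below reproduces that with a simplified, hypothetical `ConnectChecker` interface standing in for ConnectCheckerRtmp (the real interface declares more callbacks, and the exact list depends on your library version). In the app, the fix is to declare `implements ConnectCheckerRtmp` on MainActivity, override every callback it declares, and pass `this` without a cast.

```java
// Hypothetical, simplified stand-in for the library's ConnectCheckerRtmp.
interface ConnectChecker {
    void onConnectionSuccess();
    void onConnectionFailed(String reason);
}

// Mirrors the crashing setup: the class does NOT implement the interface.
class BrokenActivity {
    Object checker() {
        // Compiles (the class is not final) but throws ClassCastException at runtime.
        return (ConnectChecker) this;
    }
}

// The fix: implement the interface, so no cast is needed.
class FixedActivity implements ConnectChecker {
    String lastEvent = "none";

    public void onConnectionSuccess() {
        lastEvent = "connected";
    }

    public void onConnectionFailed(String reason) {
        lastEvent = "failed: " + reason;
    }

    ConnectChecker checker() {
        return this;
    }
}

class CastDemo {
    // Returns true if the broken cast fails the way the app does on startup.
    static boolean castFails() {
        try {
            new BrokenActivity().checker();
            return false;
        } catch (ClassCastException e) {
            return true;
        }
    }
}
```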