openvino: [Bug] Memory leak on inference

System information (version)

- OpenVINO = 2022.1.0.643

- Operating System / Platform => Windows 64 Bit

- Compiler => Visual Studio 2019

- Problem classification: Model Conversion

- Framework: PyTorch (if applicable)

- Model name: OCR (if applicable)

Detailed description

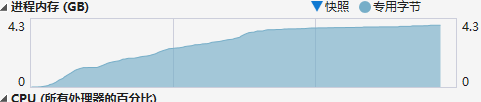

When I use OpenVINO 2022.1.0.643 to run inference on a text image, memory leaks every time `infer_request.infer();` is called. The code is listed under Steps to reproduce.
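A first check is whether memory grows with every call or levels off after the first inference. One way to test this is to count live heap bytes around a repeated step. The sketch below is a minimal illustration that assumes only standard C++17. The names `g_live_bytes`, `kHeader` and `live_bytes_growth` are hypothetical and are not part of OpenVINO.

The counter only sees allocations that go through this executable's replaceable global `operator new`. Some memory is invisible to it:
- memory that OpenVINO plugins or TBB obtain by other means;
- on Windows, memory that DLLs allocate from their own CRT heap.

A process-level counter, such as private bytes in Task Manager, therefore remains the ground truth.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <new>

// Net bytes currently allocated through this program's replaceable global operator new.
static std::atomic<long long> g_live_bytes{0};

// Each block carries a header that stores its requested size, so operator delete
// can subtract exactly what operator new added. The header is at least
// alignof(std::max_align_t) bytes, which keeps the returned pointer suitably aligned.
constexpr std::size_t kHeader =
    alignof(std::max_align_t) > sizeof(std::size_t) ? alignof(std::max_align_t)
                                                    : sizeof(std::size_t);

void* operator new(std::size_t n) {
    void* raw = std::malloc(n + kHeader);
    if (raw == nullptr) throw std::bad_alloc();
    *static_cast<std::size_t*>(raw) = n;
    g_live_bytes += static_cast<long long>(n);
    return static_cast<char*>(raw) + kHeader;
}

void operator delete(void* p) noexcept {
    if (p == nullptr) return;
    void* raw = static_cast<char*>(p) - kHeader;
    g_live_bytes -= static_cast<long long>(*static_cast<std::size_t*>(raw));
    std::free(raw);
}

// Route sized deallocation through the same bookkeeping.
void operator delete(void* p, std::size_t) noexcept { ::operator delete(p); }

// Runs `step` once as a warm-up, so one-time caches are excluded from the count.
// Then runs it `iterations` more times and returns the net change in live bytes.
// A result that keeps growing with `iterations` points to a per-call leak.
template <typename Step>
long long live_bytes_growth(Step step, int iterations) {
    assert(iterations > 0);
    step();
    const long long before = g_live_bytes.load();
    for (int i = 0; i < iterations; ++i) step();
    return g_live_bytes.load() - before;
}
```

In the reporter's setup, the step might be `[&] { infer_request400.infer(); }` with the input tensor held fixed. The width dimension is dynamic, so it is also worth comparing that run against one that cycles through several widths. This helps separate per-call growth from growth tied to new input shapes.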

Steps to reproduce

```cpp
// C++ code example. Variables such as core400, reco_network400, compiled_model400,
// infer_request400, modelPath, modelPathBin, image, width and height are declared elsewhere.
#include <memory>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include <openvino/openvino.hpp>

using namespace std;

// One-time setup: load the model, make the width dynamic, add preprocessing, compile.
reco_network400 = core400.read_model(string(modelPath), modelPathBin);

auto d400 = reco_network400->input(0).get_partial_shape();
d400[3] = -1;  // dimension 3 (W in the model's NCHW layout) becomes dynamic
reco_network400->reshape(d400);

const ov::Layout tensor_layout400{ "NHWC" };
ov::preprocess::PrePostProcessor ppp400(reco_network400);
ov::preprocess::InputInfo& input_info400 = ppp400.input();
input_info400.tensor().set_element_type(ov::element::f32).set_layout(tensor_layout400);
input_info400.model().set_layout("NCHW");
ppp400.output().tensor().set_element_type(ov::element::f32);
reco_network400 = ppp400.build();

compiled_model400 = core400.compile_model(reco_network400, "CPU");
infer_request400 = compiled_model400.create_infer_request();

......

// Per image: normalize, resize into a caller-owned buffer, wrap it as a tensor, infer.
image.convertTo(image, CV_32FC1, 1.0f / 255.0f);
image = (image - 0.588) / 0.193;

vector<vector<float>> output;

size_t size = static_cast<size_t>(width) * height * image.channels();
std::shared_ptr<float> _data(new float[size], std::default_delete<float[]>());
cv::Mat resized(cv::Size(width, height), image.type(), _data.get());
cv::resize(image, resized, cv::Size(width, height));  // writes into _data

ov::element::Type input_type = ov::element::f32;
ov::Shape input_shape = { 1, static_cast<size_t>(height), static_cast<size_t>(width), 1 };  // NHWC
//ov::Shape input_shape = { 1, 1, static_cast<size_t>(height), static_cast<size_t>(width) };

// The tensor wraps _data without copying it, so _data must outlive the inference call.
ov::Tensor input_tensor(input_type, input_shape, _data.get());
infer_request400.set_input_tensor(input_tensor);
infer_request400.infer();

// get_output_tensor() returns by value; binding the result to a non-const ov::Tensor& does not compile.
ov::Tensor output_tensor = infer_request400.get_output_tensor();
```
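The preprocessing in this code does three things:
1. Scales pixels to [0, 1].
2. Normalizes them with mean 0.588 and standard deviation 0.193.
3. Packs a single-channel height × width image into a dense buffer viewed as NHWC shape {1, height, width, 1}.

Below is a plain C++ sketch of that arithmetic, without OpenCV or OpenVINO. `normalize_pixel`, `nhwc_offset` and `pack_gray_nhwc` are hypothetical helpers. The input is assumed to be 8-bit and single-channel, matching the one-channel input shape.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Mirrors convertTo(..., 1.0f / 255.0f) followed by (x - 0.588) / 0.193.
inline float normalize_pixel(std::uint8_t v) {
    return (static_cast<float>(v) / 255.0f - 0.588f) / 0.193f;
}

// Flat offset of element (n, h, w, c) in a dense NHWC buffer of shape {N, H, W, C}.
inline std::size_t nhwc_offset(std::size_t n, std::size_t h, std::size_t w, std::size_t c,
                               std::size_t H, std::size_t W, std::size_t C) {
    return ((n * H + h) * W + w) * C + c;
}

// Builds the float buffer for a row-major single-channel image of size height x width,
// laid out as NHWC {1, height, width, 1}: width * height * 1 elements, like `size` above.
std::vector<float> pack_gray_nhwc(const std::vector<std::uint8_t>& pixels,
                                  std::size_t height, std::size_t width) {
    assert(pixels.size() == height * width);
    std::vector<float> buf(height * width);
    for (std::size_t h = 0; h < height; ++h)
        for (std::size_t w = 0; w < width; ++w)
            buf[nhwc_offset(0, h, w, 0, height, width, 1)] =
                normalize_pixel(pixels[h * width + w]);
    return buf;
}
```

With one channel, NHWC {1, H, W, 1} and NCHW {1, 1, H, W} store the elements in the same memory order. That is why the commented-out NCHW shape describes the same buffer. Which shape is correct depends on the layout declared with `set_layout` on the input tensor, which is NHWC in this code.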

Issue submission checklist

- I am reporting an issue, not asking a question

- I checked the documentation, FAQ, open issues, Stack Overflow, etc., and have not found a solution

- There is reproducer code and related data files: images, videos, models, etc.

About this issue

- State: closed

- Created 2 years ago

- Comments: 46 (17 by maintainers)

Most upvoted comments

Is there any memory optimization we can do?

+1

cxf2015 on Jul 7, 2022