opentelemetry-collector-contrib: [processor/attributes] resource attributes sent to incorrect tenant

Describe the bug

Multi-tenant use cases in the Loki exporter were introduced through https://github.com/open-telemetry/opentelemetry-collector-contrib/pull/12415, which allows setting and creating tenant IDs from resource attribute values. This is awesome and is the exact functionality we are looking for, but we are somehow seeing logs being sent to the wrong tenant, especially when logging at scale.

For example, if we configure the Loki exporter to set the tenant ID from the namespace attribute (namespace_name in the config below), many logs from namespace foo show up in the bar tenant in Loki.

Steps to reproduce

This has been occurring using the OpenTelemetry Collector Helm chart with the following configuration. We are following the recommendations in the documentation to use the groupbyattrs processor, as well as batching.

    config:
      receivers:
        fluentforward:
          endpoint: 0.0.0.0:8006
      processors:
        groupbyattrs:
          keys:
            - namespace_name
        filter/exclude:
          logs:
            exclude:
              match_type: regexp
              resource_attributes:
                - Key: namespace_name
                  Value: istio-system|kube-system
      exporters:
        loki:
          endpoint: http://loki-distributed-gateway.loki.svc.cluster.local/loki/api/v1/push
          format: json
          tenant:
            source: attributes
            value: namespace_name
          labels:
            resource:
              namespace_name: ""
            attributes:
              stream: ""
              app: ""
              pod_name: ""
              account: ""
              cluster_name: ""
              region: ""
              container_name: ""
      service:
        pipelines:
          logs:
            exporters: [loki]
            processors: [memory_limiter, batch, groupbyattrs, filter/exclude]
            receivers: [fluentforward]

We noticed this occurs more frequently during spiky, high-volume loads, but it also sometimes occurred when the OpenTelemetry Collector was killed or rescheduled onto a new node.

As part of troubleshooting we have also tested multiple combinations of this config, such as batching and grouping after the filter as well as before it, and not batching at all. None of these combinations routed logs to the correct tenants.

What did you expect to see?

We expect to see all logs from namespace foo only be in tenant foo and all logs from namespace bar only be in tenant bar.

What did you see instead?

Logs from every namespace appear in other tenants.

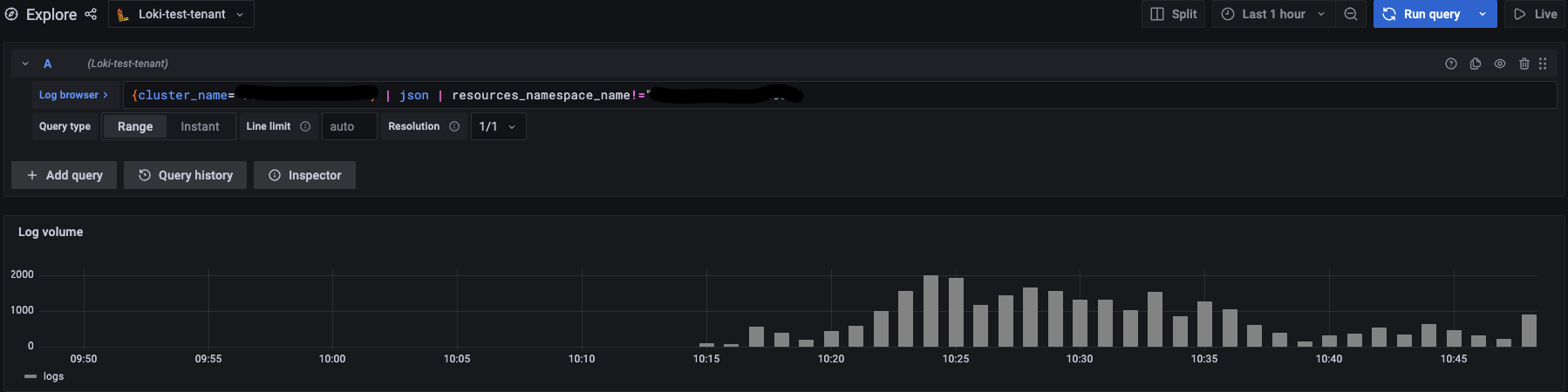

Below are some screenshots from Grafana where we expect to see only logs from the foo namespace.

Instead, we see many logs from other namespaces as well (even though our X-Scope-OrgID is set to the foo tenant).

What version did you use?

Version: v0.57.2

Additional context

We noticed in testing environments that with small, non-spiky loads the logs were grouped and exported to the correct tenant, but in high-volume, spiky environments they were not.

About this issue

- State: closed

- Created 2 years ago

- Reactions: 1

- Comments: 19 (19 by maintainers)

Despite the title, this isn’t about the Loki exporter but probably a problem with the attributes processor.

That’s true, but only because the Loki exporter received different tenants as part of the same batch. The groupbyattrs processor should have split this into 10 batches, one per tenant, resulting in 10 calls to the Loki exporter, each building one HTTP request with one stream to Loki.
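The per-tenant split described above can be sketched roughly as follows. This is only an illustration under stated assumptions: `ResourceLogs` here is a simplified, hypothetical stand-in rather than the collector's pdata type, and `splitByTenant` is a made-up helper, not code from the groupbyattrs processor or the Loki exporter.

```go
package main

import (
	"fmt"
	"sort"
)

// ResourceLogs is a simplified, hypothetical stand-in for one resource's
// logs. It is NOT the collector's pdata type.
type ResourceLogs struct {
	Attributes map[string]string // resource attributes, e.g. namespace_name
	Records    []string          // log bodies
}

// splitByTenant (hypothetical helper) groups resources by the value of
// tenantKey, so that each returned batch holds exactly one tenant.
func splitByTenant(batch []ResourceLogs, tenantKey string) map[string][]ResourceLogs {
	out := make(map[string][]ResourceLogs)
	for _, rl := range batch {
		tenant := rl.Attributes[tenantKey] // "" when the attribute is missing
		out[tenant] = append(out[tenant], rl)
	}
	return out
}

func main() {
	mixed := []ResourceLogs{
		{Attributes: map[string]string{"namespace_name": "foo"}, Records: []string{"a"}},
		{Attributes: map[string]string{"namespace_name": "bar"}, Records: []string{"b"}},
		{Attributes: map[string]string{"namespace_name": "foo"}, Records: []string{"c"}},
	}
	perTenant := splitByTenant(mixed, "namespace_name")

	// Sort tenant names so the printed order is deterministic.
	tenants := make([]string, 0, len(perTenant))
	for t := range perTenant {
		tenants = append(tenants, t)
	}
	sort.Strings(tenants)
	for _, t := range tenants {
		fmt.Printf("tenant=%s resources=%d\n", t, len(perTenant[t]))
	}
}
```

With one batch per tenant, each export call would carry a single tenant, which is the behavior expected in this report.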

Sorry, I should have been clearer here. I’m calling a “batch” an instance of `plog.Logs` as received by the Loki Exporter from the previous component (likely the groupbyattr). One batch contains multiple ResourceLogs, and we assume all ResourceLogs within the same batch belong to the same tenant. So, a call to `plog.Logs.ResourceLogs[0].Resource.Attributes["tenant"]` would yield the same result for every other `ResourceLogs` on the same `plog.Logs`.

Pinged you there 😃
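To make the batch assumption above concrete, here is a minimal sketch of what happens when the tenant is read from the first resource only and the batch turns out to be mixed. The types and both helpers (`tenantOfFirst`, `misrouted`) are hypothetical and use plain Go maps instead of the real pdata API; this is not the exporter's actual code.

```go
package main

import "fmt"

// ResourceLogs is a simplified, hypothetical stand-in for one resource's
// logs. It is NOT the collector's pdata type.
type ResourceLogs struct {
	Attributes map[string]string // resource attributes, e.g. namespace_name
	Records    []string          // log bodies
}

// tenantOfFirst (hypothetical) mirrors the assumption from the comment:
// the tenant for the whole batch is read from the first resource only.
func tenantOfFirst(batch []ResourceLogs, tenantKey string) string {
	if len(batch) == 0 {
		return ""
	}
	return batch[0].Attributes[tenantKey]
}

// misrouted (hypothetical) returns the resources whose own tenant differs
// from the tenant the whole batch would be sent under.
func misrouted(batch []ResourceLogs, tenantKey string) []ResourceLogs {
	chosen := tenantOfFirst(batch, tenantKey)
	var wrong []ResourceLogs
	for _, rl := range batch {
		if rl.Attributes[tenantKey] != chosen {
			wrong = append(wrong, rl)
		}
	}
	return wrong
}

func main() {
	mixed := []ResourceLogs{
		{Attributes: map[string]string{"namespace_name": "foo"}, Records: []string{"a"}},
		{Attributes: map[string]string{"namespace_name": "bar"}, Records: []string{"b"}},
	}
	fmt.Println("batch tenant:", tenantOfFirst(mixed, "namespace_name"))
	for _, rl := range misrouted(mixed, "namespace_name") {
		fmt.Println("would land in the wrong tenant:", rl.Attributes["namespace_name"])
	}
}
```

Under this assumption, a mixed batch sends the bar resource under the foo tenant, which matches the symptom reported in the issue. Whether that is the actual cause is not established by this sketch.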

@jpkrohling you mean like:

as described in the first comment? 😉

Please do let us know if we do something wrong regardless. Thanks for taking a look 👍