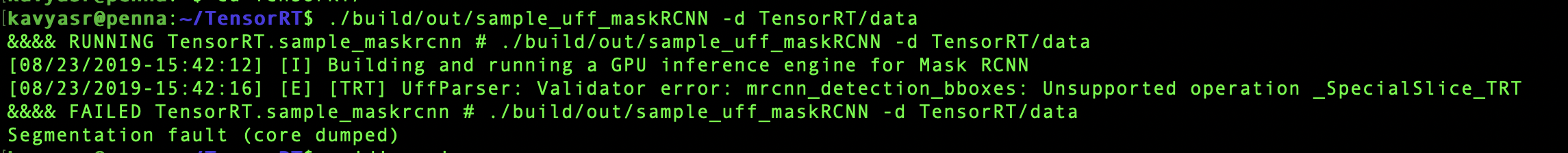

TensorRT: [TRT] UffParser: Validator error: mrcnn_detection_bboxes: Unsupported operation _SpecialSlice_TRT
&&&& FAILED TensorRT.sample_maskrcnn # ./build/out/sample_uff_maskRCNN -d TensorRT/data

I get the error above when I try to run the MaskRCNN sample.

I have also installed libnvinfer 6:

$ apt list --installed | grep -i -e nvinfer -e nvparser

It gives the following output:

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

libnvinfer-dev/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]

libnvinfer-plugin-dev/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]

libnvinfer-plugin6/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]

libnvinfer5/unknown,unknown,now 5.1.5-1+cuda10.1 amd64 [installed]

libnvinfer6/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]

libnvparsers-dev/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]

libnvparsers6/unknown,now 6.0.1-1+cuda10.1 amd64 [installed,automatic]

python-libnvinfer/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]

python-libnvinfer-dev/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]

python3-libnvinfer/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]

python3-libnvinfer-dev/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]
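The listing above shows libnvinfer5 (5.1.5) still installed next to the 6.0.1 packages. One quick way to spot that kind of mix is to group the packages by major version. This is a minimal sketch, assuming the `apt list --installed` line format shown above; `tensorrt_versions` is a hypothetical helper, and having two major versions installed is not by itself proof of the error, just something worth checking.

```python
import re
import subprocess
from collections import defaultdict

# Matches package lines in the format shown above, e.g.
#   libnvinfer6/unknown,now 6.0.1-1+cuda10.1 amd64 [installed]
# Assumption: `apt list --installed` prints "name/suites version arch [flags]".
LINE_RE = re.compile(r"^(?P<name>[^/\s]+)/\S+\s+(?P<version>\S+)\s+\S+\s+\[")


def tensorrt_versions(apt_output: str) -> dict:
    """Group installed nvinfer/nvparsers packages by the major part of their version."""
    by_major = defaultdict(list)
    for line in apt_output.splitlines():
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # e.g. the "WARNING: apt does not have a stable CLI interface" line
        name = m.group("name")
        if "nvinfer" not in name and "nvparsers" not in name:
            continue
        major = m.group("version").split(".", 1)[0]  # "6.0.1-1+cuda10.1" -> "6"
        by_major[major].append(name)
    return dict(by_major)


def main() -> None:
    try:
        out = subprocess.run(["apt", "list", "--installed"],
                             capture_output=True, text=True, check=False).stdout
    except FileNotFoundError:
        print("apt is not available on this system")
        return
    versions = tensorrt_versions(out)
    for major, pkgs in sorted(versions.items()):
        print(f"major version {major}: {', '.join(sorted(pkgs))}")
    if len(versions) > 1:
        print("warning: packages from more than one TensorRT major version are installed")


if __name__ == "__main__":
    main()
```

Run against the output above, this would report libnvinfer5 under major version 5 and the remaining packages under major version 6.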

About this issue

- State: closed

- Created 5 years ago

- Comments: 32 (3 by maintainers)

I downgraded Keras to 2.1.6, and after that the model converted to UFF successfully. Hope this helps anyone here. @SongXia84 @fuman5000

If you installed TensorRT before trying this sample, you may fail to build and run it, because your machine already has a library called libnvinfer_plugin.so that does not contain the plugins this sample needs. So I think you should reinstall TensorRT, or at least make sure the executable does not link against the wrong .so.

I think the problem is that the executable does not link against the correct libnvinfer_plugin.so.
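One way to see which libnvinfer_plugin.so the executable actually resolves is to inspect it with ldd. This is a minimal sketch, assuming a Linux system with ldd on the PATH; the default binary path comes from the failing command at the top of this thread, and `resolved_libs` is a hypothetical helper name.

```python
import re
import subprocess
import sys

# ldd prints one dependency per line, for example:
#   libnvinfer_plugin.so.6 => /usr/lib/x86_64-linux-gnu/libnvinfer_plugin.so.6 (0x00007f...)
#   libnvinfer_plugin.so.6 => not found
LDD_RE = re.compile(
    r"^\s*(?P<soname>\S+)\s+=>\s+(?P<target>.+?)(?:\s+\(0x[0-9a-fA-F]+\))?\s*$"
)


def resolved_libs(ldd_output: str, prefix: str) -> dict:
    """Return {soname: resolved path or 'not found'} for sonames starting with prefix."""
    result = {}
    for line in ldd_output.splitlines():
        m = LDD_RE.match(line)
        if m and m.group("soname").startswith(prefix):
            result[m.group("soname")] = m.group("target")
    return result


def main(binary: str) -> None:
    try:
        out = subprocess.run(["ldd", binary], capture_output=True,
                             text=True, check=True).stdout
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"could not run ldd on {binary}: {exc}")
        return
    for soname, target in resolved_libs(out, "libnvinfer").items():
        print(f"{soname} -> {target}")


if __name__ == "__main__":
    main(sys.argv[1] if len(sys.argv) > 1 else "./build/out/sample_uff_maskRCNN")
```

If libnvinfer_plugin.so resolves to an older system copy rather than the library built for this sample, that would match the mislinking described above.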

I finally succeeded in running the sampleUffMaskRCNN example by using https://github.com/NVIDIA/TensorRT/issues/123#issuecomment-551269792. Many thanks to @rmccorm4.

@ShawnNew In what environment are you converting UFF -> TRT, as in your screenshot? That still looks like the automatic conversion from add -> addV2, which I think happens in TF >= 1.15.

I think you need to use TF <= 1.14 for the whole TF -> UFF -> TRT pipeline, but you only did the TF -> UFF part in your container.

Once you’ve converted UFF -> TRT, your TensorFlow version shouldn’t matter anymore, because at that point you should be using only the TensorRT API to run inference on your TRT engine.
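Since the TensorFlow version only matters in the environment that does the conversion, it can help to check it there before starting. This is a minimal sketch, assuming TensorFlow was installed with pip under the `tensorflow` or `tensorflow-gpu` distribution name; the helper names are hypothetical, and the 1.14 cutoff is the one suggested in this thread, not an official compatibility table.

```python
from importlib import metadata


def version_tuple(version: str, parts: int = 2) -> tuple:
    """Turn '1.14.0' into (1, 14); only the leading digits of each part are used."""
    nums = []
    for piece in version.split(".")[:parts]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break  # stop at suffixes like "rc1"
            digits += ch
        nums.append(int(digits) if digits else 0)
    return tuple(nums)


def tf_ok_for_uff(version: str, max_version=(1, 14)) -> bool:
    """True if the TensorFlow version is <= 1.14, the limit suggested in this thread."""
    return version_tuple(version) <= max_version


def installed_version(*dist_names: str):
    """Return the version of the first installed distribution among dist_names, or None."""
    for name in dist_names:
        try:
            return metadata.version(name)
        except metadata.PackageNotFoundError:
            continue
    return None


if __name__ == "__main__":
    tf_version = installed_version("tensorflow", "tensorflow-gpu")
    if tf_version is None:
        print("TensorFlow not found in this environment")
    elif tf_ok_for_uff(tf_version):
        print(f"TensorFlow {tf_version} is within the TF <= 1.14 range")
    else:
        print(f"TensorFlow {tf_version} is newer than 1.14; add may be exported as addV2")
```

The comparison uses plain integer tuples so that "1.9" sorts below "1.14", which a string comparison would get wrong.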

You might be able to also convert UFF -> TRT in that tensorflow/tensorflow:1.9.0-gpu-py3 container, but I’m not sure. If you’re going to try using a container for parsing UFF -> TRT, I would suggest going with nvcr.io/nvidia/tensorflow:19.10-py3 at the moment.