TensorRT: TensorRT 7.1 produces incorrect results when batch size > 1

Description

Environment

- TensorRT Version: 7.1.3.0
- GPU Type: Jetson TX2 (Jetpack 4.4)
- Nvidia Driver Version:
- CUDA Version: 10.1
- CUDNN Version:
- Operating System + Version: (LTS from Jetpack 4.4)
- Python Version (if applicable): 3.6.9
- PyTorch Version (if applicable): 1.6.0

Relevant Files

    |----rfb.onnx
    |----trt_utils.py
    |----produce_bug.py

These files can be downloaded from Google Drive.

I reproduce produce_bug.py below:

    import os
    import sys
    import pickle
    import argparse
    import numpy as np
    from pprint import pprint
    import pdb

    import torch
    import torch.backends.cudnn as cudnn
    cudnn.benchmark = True

    import pycuda.autoinit
    import tensorrt as trt
    import trt_utils as tu # trt utils

    TRT_LOGGER = trt.Logger()

    def test_bs_1():
        #engine, context, h_input, d_input, h_output, d_output, stream = onnx_2_tensorrt.main()
        onnx_file_path = 'rfb.onnx'
        fp16_mode = False#args.fp16
        int8_mode = False#args.int8
        batchsize = 1
        engine_file_path = "rfb_fp16_{}_int8_{}_bs_{}.trt".format(fp16_mode,int8_mode,batchsize)
        print("Building Engine")
        engine = tu.get_engine(batchsize,onnx_file_path,engine_file_path,fp16_mode=fp16_mode,int8_mode=int8_mode)
        #pdb.set_trace()
        context = engine.create_execution_context()
        inputs, outputs, bindings, stream = tu.allocate_buffers(engine) # input, output: host # bindings
        trt_outputs = []
        #img = np.random.randn(1,3,512,512) + 100
        #img = img.astype(np.uint8)
        global img
        x = img
        inputs[0].host = x.reshape(-1)
        trt_outputs = tu.do_inference(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream)
        #
        locs, scores = tu.process_outputs(trt_outputs,batchsize, 6) # The locs is corr
        #print(np.mean(locs))
        #print(np.mean(scores))
        pdb.set_trace()

    def test_bs_2():
        onnx_file_path = 'rfb.onnx'
        fp16_mode = False#args.fp16
        int8_mode = False#args.int8
        batchsize = 2
        engine_file_path = "rfb_fp16_{}_int8_{}_bs_{}.trt".format(fp16_mode,int8_mode,batchsize)
        print("Building Engine")
        engine = tu.get_engine(batchsize,onnx_file_path,engine_file_path,fp16_mode=fp16_mode,int8_mode=int8_mode)
        #pdb.set_trace()
        context = engine.create_execution_context()
        inputs, outputs, bindings, stream = tu.allocate_buffers(engine) # input, output: host # bindings
        trt_outputs = []
        #img = np.random.randn(1,3,512,512) + 100
        #img = img.astype(np.uint8)
        global img
        x = np.concatenate([img,img])
        inputs[0].host = x.reshape(-1)
        trt_outputs = tu.do_inference(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream)
        #
        locs, scores = tu.process_outputs(trt_outputs, batchsize, 6) # The locs is c
        #print(np.mean(locs))
        #print(np.mean(scores))
        pdb.set_trace()

    if __name__ == '__main__':
        img = np.random.randn(1,3,512,512) + 100
        img = img.astype(np.uint8)
        test_bs_1()
        test_bs_2()

Steps To Reproduce

python produce_bug.py

There are two test functions in produce_bug.py.

In test_bs_1(), the code generates an engine whose maxBatchSize is 1. I generate a random input img and set the input to x = img (batch size = 1).

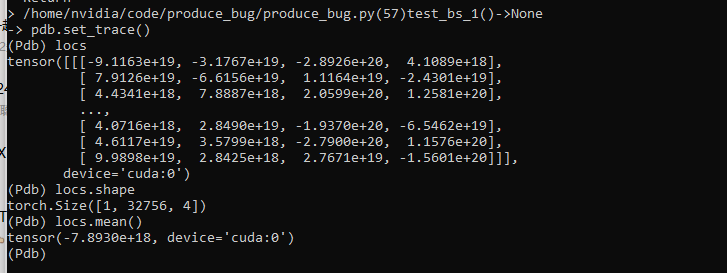

The shape of locs is [1, 32756, 4] and the mean of locs is -7.8930e+18 (non-zero).

In test_bs_2(), the code generates an engine whose maxBatchSize is 2, and the input becomes the concatenation of img with itself, x = np.concatenate([img, img]) (batch size = 2).

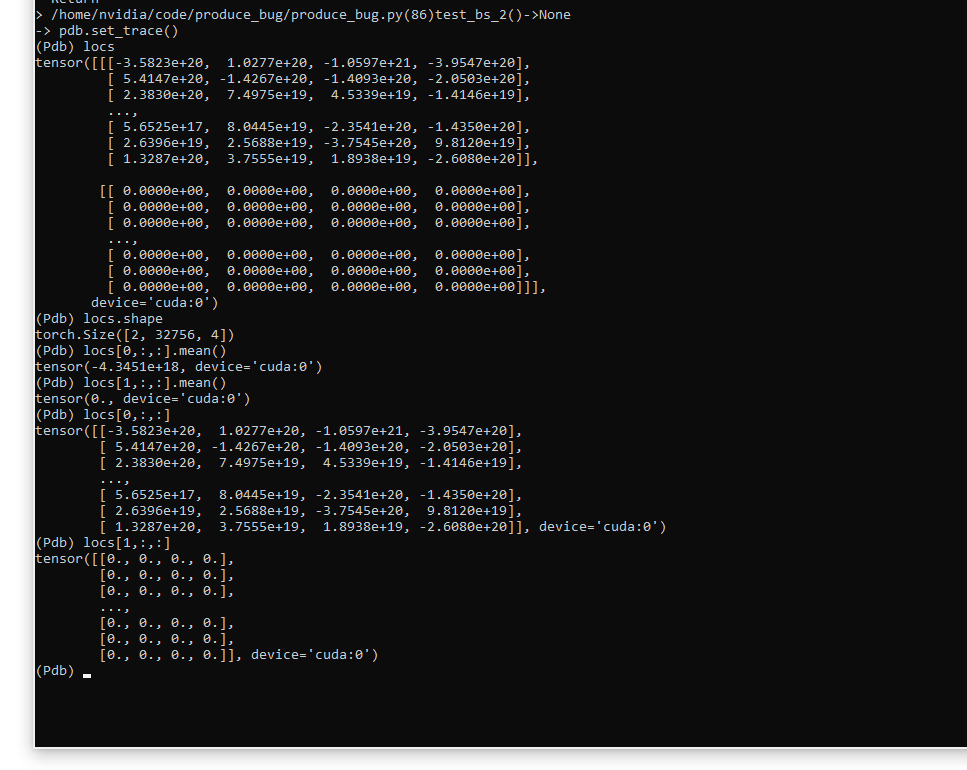

If the engine works correctly, the mean of locs should match the batch size 1 result, because both entries in the batch are the same image.

We can see from the screenshot above that the output locs contains a lot of zero values.

The first output in the batch, locs[0, :, :], seems normal, but the second output, locs[1, :, :], is all zeros.

Besides, the other output, boxes, also has a lot of zero values when batch size > 1.

Therefore, the TensorRT engine does not work properly.

If I use TensorRT 6.0 to run the same code, locs[1, :, :] is normal (non-zero).

This problem is very urgent for me. Please help! I would appreciate any suggestions.
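Because both batch entries in test_bs_2() are the same image, the symptom can be checked numerically instead of by screenshot. The following is a minimal sketch, not part of the original script: the helper name summarize_batch_outputs is hypothetical, and the demo array is synthetic data standing in for the real locs output.

```python
import numpy as np

def summarize_batch_outputs(locs, atol=1e-5):
    """For each sample in the batch, report the fraction of exact zeros
    and whether the sample agrees with sample 0 (expected when every
    batch entry is the same input image)."""
    locs = np.asarray(locs)
    report = []
    for i in range(locs.shape[0]):
        sample = locs[i]
        report.append({
            "index": i,
            "zero_fraction": float(np.mean(sample == 0)),
            "matches_first": bool(np.allclose(sample, locs[0], atol=atol)),
        })
    return report

# Synthetic stand-in for the reported symptom: sample 1 is all zeros.
rng = np.random.default_rng(0)
good = rng.standard_normal((32756, 4)).astype(np.float32)
demo = np.stack([good, np.zeros_like(good)])

for row in summarize_batch_outputs(demo):
    print(row)
```

Running the same helper on the real locs from both TensorRT 6.0 and 7.1 would give a compact, comparable summary of which batch entries are affected.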

About this issue

- Original URL

- State: closed

- Created 4 years ago

- Comments: 22

@NgTuong Same issue. I have tried many methods, but all failed.

Hi @dongyi-kim, there will be a problem. If you want to create a TRT engine with batch size 2, you have to export an ONNX model whose batch size is -1 or 2. Thanks!
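A batch size of -1 is usually read as a dynamic batch dimension. With PyTorch, one common way to get that is the dynamic_axes argument of torch.onnx.export. The sketch below assumes a PyTorch model is available for re-export; the tensor names "input", "locs" and "scores", the opset version, and both helper names are illustrative assumptions, not taken from rfb.onnx.

```python
def dynamic_batch_axes(input_names, output_names, axis_label="batch"):
    """Mark dimension 0 of every named tensor as a symbolic batch axis."""
    names = list(input_names) + list(output_names)
    return {name: {0: axis_label} for name in names}

def export_dynamic_batch(model, dummy_input, onnx_path,
                         input_names=("input",),
                         output_names=("locs", "scores")):
    """Export `model` to ONNX with a dynamic batch dimension.

    The tensor names are assumptions; use the names of the real model.
    """
    import torch  # imported lazily so the helper above runs without PyTorch

    model.eval()
    torch.onnx.export(
        model,
        dummy_input,
        onnx_path,
        input_names=list(input_names),
        output_names=list(output_names),
        dynamic_axes=dynamic_batch_axes(input_names, output_names),
        opset_version=11,  # assumed; pick one supported by your parser
    )

axes = dynamic_batch_axes(["input"], ["locs", "scores"])
print(axes)
```

On the TensorRT 7 side, a model exported this way is parsed into an explicit-batch network, and the engine typically needs an optimization profile covering the batch sizes you intend to run.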

@NgTuong BTW, besides reading the code, you can also run trtexec, following https://github.com/NVIDIA/TensorRT/tree/master/samples/opensource/trtexec#example-4-running-an-onnx-model-with-full-dimensions-and-dynamic-shapes, to first verify that batch size = 2 works when using trtexec.
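As a rough sketch of that trtexec check, the helper below only assembles a command line and prints it; it does not run anything. It assumes the ONNX model has a dynamic batch dimension and that its input tensor is named "input" (an assumption, check the real name in rfb.onnx). The helper names shape_arg and build_trtexec_cmd are hypothetical.

```python
import shlex

def shape_arg(tensor_name, shape):
    """Format a shape the way trtexec expects, e.g. input:1x3x512x512."""
    return "{}:{}".format(tensor_name, "x".join(str(d) for d in shape))

def build_trtexec_cmd(onnx_path, tensor_name, min_shape, opt_shape, max_shape):
    """Assemble a trtexec invocation for an explicit-batch ONNX model
    with a dynamic-shape range on one input tensor."""
    return [
        "trtexec",
        "--onnx={}".format(onnx_path),
        "--explicitBatch",
        "--minShapes=" + shape_arg(tensor_name, min_shape),
        "--optShapes=" + shape_arg(tensor_name, opt_shape),
        "--maxShapes=" + shape_arg(tensor_name, max_shape),
    ]

# "input" is an assumed tensor name; 3x512x512 matches the test image above.
cmd = build_trtexec_cmd(
    "rfb.onnx", "input",
    min_shape=(1, 3, 512, 512),
    opt_shape=(2, 3, 512, 512),
    max_shape=(2, 3, 512, 512),
)
print(" ".join(shlex.quote(part) for part in cmd))
```

If trtexec builds and runs the model at batch size 2 without errors, the remaining difference is more likely in the custom engine-building or buffer-handling code than in the model itself.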