napari: Color mapping on label images using color dictionaries displays wrong colors for some objects

🐛 Bug

Assigning unique colors to labels via a label_layer.color dictionary does not work as expected: some objects are displayed in the color that belongs to other objects.

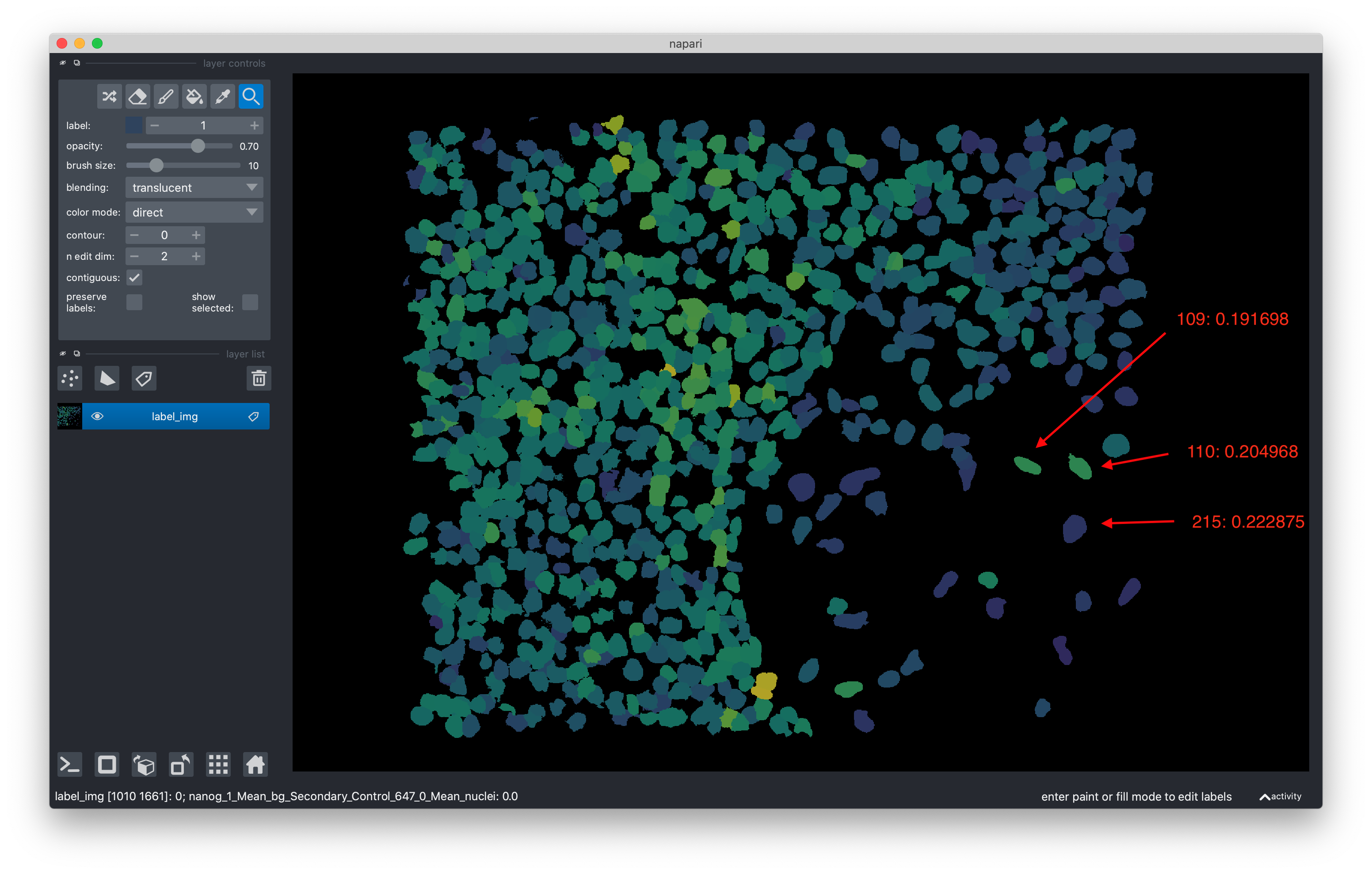

I color-code a label image using a color dictionary (e.g. {1: [0.3, 0.3, 0.5, 1.0], 2: …}). Most labels are displayed in the correct color, but a handful of labels show a wrong one. I think this only occurs when the image contains many labels (in the hundreds); at least, I've never observed it in my smaller test cases. The affected objects also vary with the colormap that is provided: for example, when the features are rescaled differently before applying a colormap, a given object is displayed wrongly with one rescaling setting but correctly with another. With the same setting, however, the error is reproducible.

It's hard to map the scope of the issue, because it's not always obvious whether the correct or a wrong color is displayed. By hard-coding a single label to a color that is very different from the rest of the colormap, though, I can see that color pop up in a handful of other label objects.

To Reproduce

Steps to reproduce the behavior:

- Load the example label image (below) into napari

- Create a color dictionary for the label image using the csv file, rescaling the values & mapping them to a colormap

- Assign that dictionary to label_layer.color.

- Check whether colors are displayed correctly. E.g. cell 109 displays the correct color when the features were rescaled between 0 & 40, but not when rescaling is done between 0 and 50.

- (Optionally hard-code a single label to a very different color to see where that color is used mistakenly)

Sample code:

```python
import imageio
import matplotlib.pyplot as plt
import napari
import numpy as np
import pandas as pd

label_img = imageio.imread('Example_img.png')
df = pd.read_csv('Example_data.csv')

lower_contrast_limit = 0.0
upper_contrast_limit = 50.0  # vary the upper limit to see changes

# Rescale the feature to [0, 1] and clip values outside the contrast limits
df['feature_scaled'] = (
    (df['feature'] - lower_contrast_limit) / (upper_contrast_limit - lower_contrast_limit)
)
df.loc[df['feature_scaled'] < 0, 'feature_scaled'] = 0
df.loc[df['feature_scaled'] > 1, 'feature_scaled'] = 1

# Create the colormap based on the feature measurements
# (plt.get_cmap also works on matplotlib versions where plt.cm.get_cmap was removed)
colors = plt.get_cmap('viridis')(df['feature_scaled'])
colormap = dict(zip(df['label'], colors))

# Also calculate a properties dict that displays the actual measurement on hover
properties_array = np.zeros(int(df['label'].max() + 1))
properties_array[df['label'].astype(int)] = df['feature']
label_properties = {'feature': np.round(properties_array, decimals=2)}

viewer = napari.Viewer()
label_layer = viewer.add_labels(label_img)
label_layer.color = colormap
label_layer.properties = label_properties

napari.run()  # start the Qt event loop when running this as a script
```

Expected behavior

Cells 109 and 110 have lower feature values than cell 215 below them, so I'd expect them to be darker in the viridis colormap. The colors passed to them in the colormap dictionary (and stored in label_layer.color) are ordered that way, and the display matches them for some rescalings, but not for all of them.

When rescaling between 0 and 40, the colors are displayed correctly (label: color):

- 109: [0.235526, 0.309527, 0.542944, 1.0] for a scaled feature value of 0.239
- 110: [0.227802, 0.326594, 0.546532, 1.0] for a scaled feature value of 0.256
- 215: [0.21621, 0.351535, 0.550627, 1.0] for a scaled feature value of 0.279

But when the feature rescaling is changed only slightly (upper_contrast_limit = 50 instead of 40), the colors are displayed wrongly.

The colors in the colormap dictionary still describe 109 and 110 as darker than 215, but that is not how they are displayed:

- 109: [0.257322, 0.25613, 0.526563, 1.0] for a scaled feature value of 0.192
- 110: [0.252194, 0.269783, 0.531579, 1.0] for a scaled feature value of 0.205
- 215: [0.243113, 0.292092, 0.538516, 1.0] for a scaled feature value of 0.223
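As a quick sanity check on the numbers above, the sketch below undoes the rescaling and checks the color ordering. The feature values quoted for cells 109, 110 and 215 are rescaled values, so multiplying them back by the upper contrast limit should give the same raw feature for both settings. The dictionary colors should also keep the same order in both. It only uses values copied from this report; `REPORTED`, `raw_feature` and `summarize` are hypothetical names for illustration.

```python
# Scaled feature values and dictionary colors copied from the report above.
REPORTED = {
    40.0: {
        109: (0.239, [0.235526, 0.309527, 0.542944, 1.0]),
        110: (0.256, [0.227802, 0.326594, 0.546532, 1.0]),
        215: (0.279, [0.21621, 0.351535, 0.550627, 1.0]),
    },
    50.0: {
        109: (0.192, [0.257322, 0.25613, 0.526563, 1.0]),
        110: (0.205, [0.252194, 0.269783, 0.531579, 1.0]),
        215: (0.223, [0.243113, 0.292092, 0.538516, 1.0]),
    },
}


def raw_feature(scaled, upper, lower=0.0):
    """Undo the min-max rescaling used in the sample code."""
    return lower + scaled * (upper - lower)


def summarize(upper):
    cells = REPORTED[upper]
    # Labels sorted by scaled feature value (darkest expected first).
    order = sorted(cells, key=lambda label: cells[label][0])
    raw = {label: raw_feature(scaled, upper) for label, (scaled, _) in cells.items()}
    # In this part of viridis the green channel rises with the value.
    green = [cells[label][1][1] for label in order]
    return order, raw, green == sorted(green)
```

Both settings give raw features of roughly 9.6, 10.2 and 11.2, and both keep the dictionary colors in the order 109, 110, 215. So the dictionary itself looks consistent, and the mismatch only appears in what is displayed.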

Additional examples

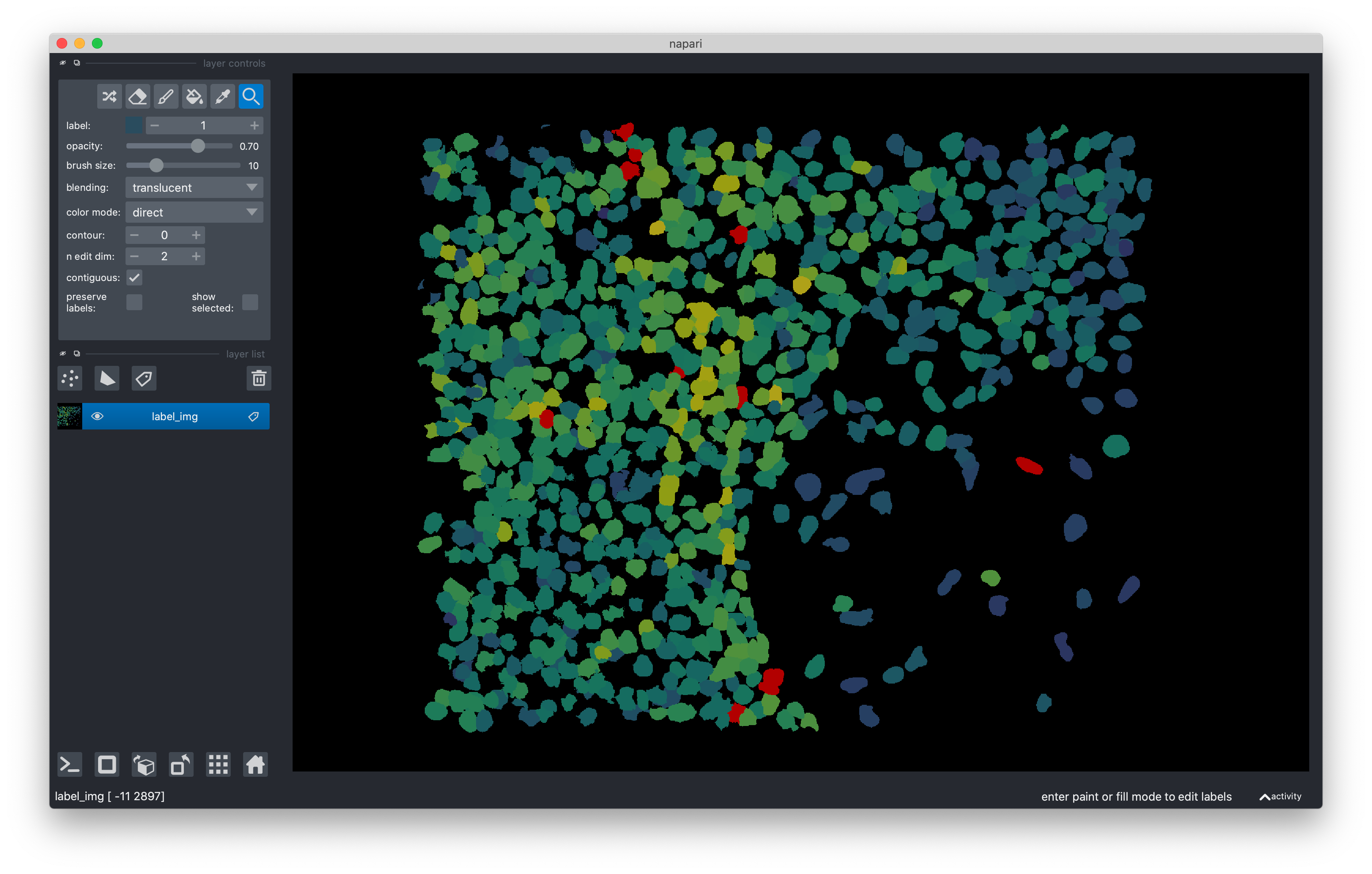

To reproduce step 5 above (hard-coding a single label to a very different color), do this:

Run with upper_contrast_limit = 40.0

Then set colormap[109] = [1.0, 0.0, 0.0, 1.0]

Then open it in the viewer again. We'd expect only that single object to turn red, but multiple objects turn red instead:

Weirdly, though, if the same thing is run with upper_contrast_limit = 50.0, only object 109 changes color.

=> Depending on the colormap that is passed to napari, different objects display the wrong color
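Checking this by eye is hard. One way to map the scope more systematically would be to compare each label's pixels against its dictionary color. The sketch below is a minimal version under a loud assumption: you already have a rendered RGBA array, with float values in [0, 1], that is pixel-aligned with the label image. It does not show how to get such an array out of napari. `mismatched_labels` is a hypothetical helper, not part of napari.

```python
import numpy as np


def mismatched_labels(label_img, rendered_rgba, color_dict, atol=1.0 / 255):
    """Return the sorted labels whose pixels are not all drawn in their dict color.

    Assumes rendered_rgba has shape label_img.shape + (4,), holds floats in
    [0, 1], and is pixel-aligned with label_img (a hypothetical input).
    """
    bad = []
    for label, color in color_dict.items():
        mask = label_img == label
        if not mask.any():
            continue  # label not present in the image
        drawn = rendered_rgba[mask]  # shape (n_pixels, 4)
        if not np.allclose(drawn, np.asarray(color, dtype=float), atol=atol):
            bad.append(int(label))
    return sorted(bad)
```

Combined with the trick of setting one label to red, this lists every object that picked up the wrong color instead of relying on visual inspection.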

Environment


napari: 0.4.14

Platform: macOS-10.15.7-x86_64-i386-64bit

System: MacOS 10.15.7

Python: 3.9.10 | packaged by conda-forge | (main, Feb 1 2022, 21:27:48) [Clang 11.1.0 ]

Qt: 5.15.2

PyQt5: 5.15.6

NumPy: 1.21.5

SciPy: 1.8.0

Dask: 2022.01.1

VisPy: 0.9.6

OpenGL:

- GL version: 2.1 INTEL-14.7.23

- MAX_TEXTURE_SIZE: 16384

Screens:

- screen 1: resolution 2560x1440, scale 2.0

- screen 2: resolution 1440x900, scale 2.0

Plugins:

- console: 0.0.4

- napari-feature-visualization: 0.1.dev58+gd167650

- scikit-image: 0.4.14

- svg: 0.1.6


Additional context

This issue is a big problem for me when using, e.g., this plugin I wrote to visualize feature measurements on label images: https://github.com/jluethi/napari-feature-visualization

Example data

Label image & feature data

Example_data.csv


About this issue


- State: closed

- Created 2 years ago

- Comments: 16 (11 by maintainers)

Commits related to this issue

- Fix for display of label colors (#4935) As described in https://github.com/napari/napari/issues/4384, using a label layer with a color dictionary behaves poorly when the number of colors exceeds ~400... — committed to napari/napari by perlman 2 years ago

- Colour 'Labels' layers by cell statistics (#53) Fixes #51 https://user-images.githubusercontent.com/29753790/230074652-1bf638b0-7e98-47fa-8288-bbf9f8479aef.mp4 - set minimum version of `... — committed to epitools/epitools by p-j-smith a year ago

- Use a shader for low discrepancy label conversion (#3308) The pull request will move the current label color calculation onto the GPU. This is the result of dozens of hours of hacking between @perlm... — committed to napari/napari by perlman a year ago

- Use a shader for low discrepancy label conversion (#3308) # Description The pull request will move the current label color calculation onto the GPU. This is the result of dozens of hours of ha... — committed to perlman/napari by perlman a year ago

I think I have it; I’ll draft a PR shortly. If you want to try the branch before then, please let me know if it works.

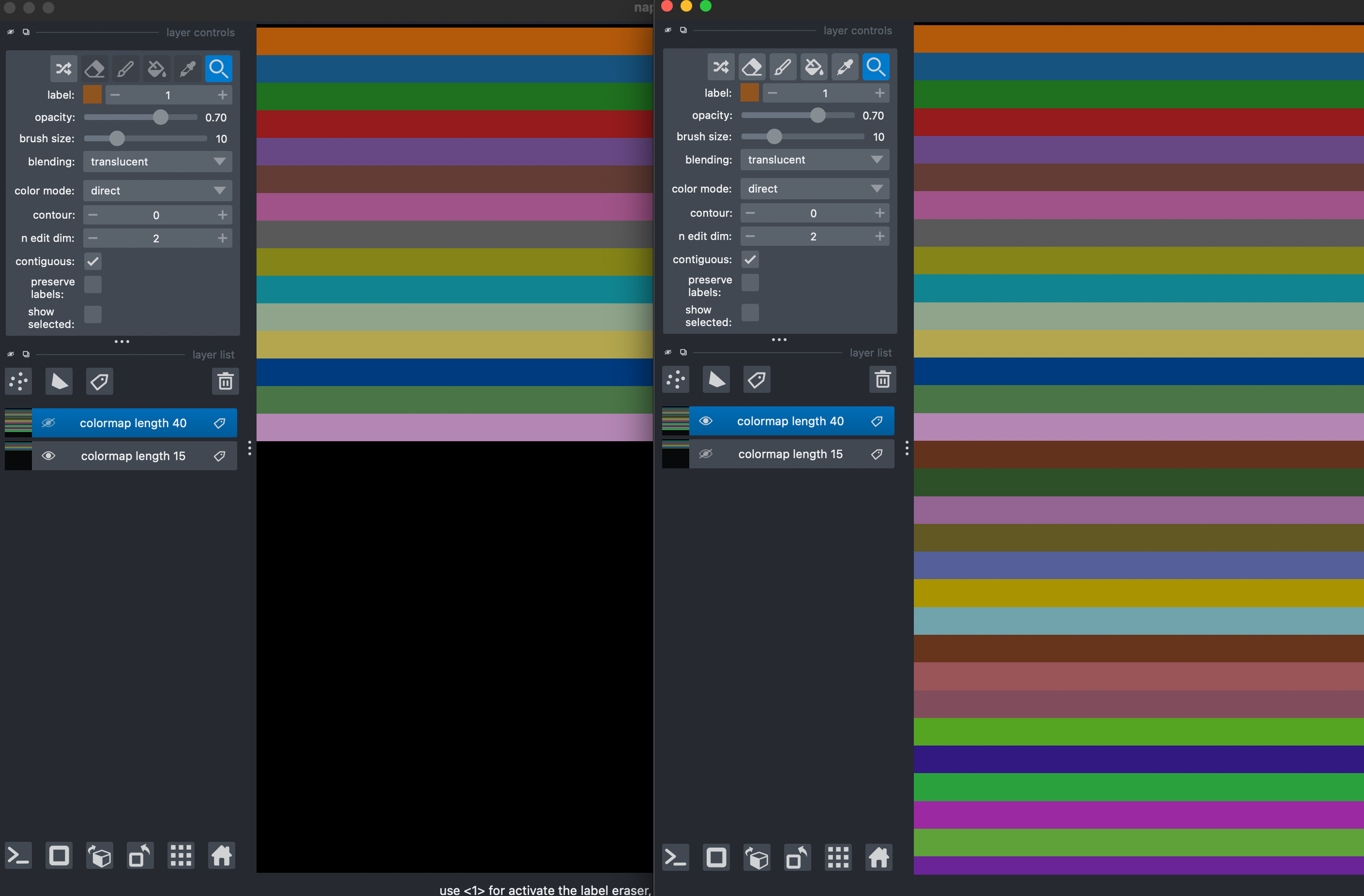

Jules Vanaret's example (from ImageSC) looks like this:

@Cryaaa’s example…

Unfortunately, @aeisenbarth’s example won’t be fixed. The underlying implementation uses a 1024-element 2D texture for storing colors. Your example creates 262144 unique colors… Not to say you’re forgotten – I believe this calls for some documentation and/or warning before we implement a proper fix.

I have a minor update. I was able to determine that `color_dict_to_colormap` is generating incorrect control points.

For example, with five colors, this function generates control points like [0, 0.25, 0.5, 0.75, 1.0], while `Colormap` generates [0, 0.2, 0.4, 0.6, 0.8, 1.0]. The extra control point (1.0) is needed with `ColormapInterpolationMode.ZERO`.

I have a branch with this fix, but it's insufficient. Something is still going wonky when this data is sent to the GPU.
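The control-point argument can be illustrated with a small piecewise-constant lookup. This is not napari's code. `controls_as_reported`, `controls_for_zero_mode` and `lookup_zero` are hypothetical helpers, and sampling each color at the centre of its bin is an assumption. With one point per color, the five colors get squeezed into four bins, so one color is shown twice and the last one is never reached. With n + 1 edges, every color gets its own bin.

```python
import numpy as np


def controls_as_reported(n_colors):
    """One control point per color, as color_dict_to_colormap was reported to do."""
    return np.linspace(0.0, 1.0, n_colors)


def controls_for_zero_mode(n_colors):
    """n + 1 bin edges, so that color i owns the interval [edge_i, edge_(i+1))."""
    return np.linspace(0.0, 1.0, n_colors + 1)


def lookup_zero(controls, colors, x):
    """Piecewise-constant ("zero") interpolation: pick the bin that contains x."""
    idx = np.searchsorted(controls, x, side="right") - 1
    return colors[np.clip(idx, 0, len(colors) - 1)]


colors = np.arange(5)          # stand-ins for five distinct colors
x = (np.arange(5) + 0.5) / 5   # assumed sample positions: bin centres
print(lookup_zero(controls_as_reported(5), colors, x))    # [0 1 2 2 3]
print(lookup_zero(controls_for_zero_mode(5), colors, x))  # [0 1 2 3 4]
```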

More soon (hopefully).

I recently ran into this problem and created a test case, not knowing that this issue already existed.

… viridis; not for `gray` and also not noticeable for `gist_earth`). My code may be useful since it has small, artificial data and assertions.

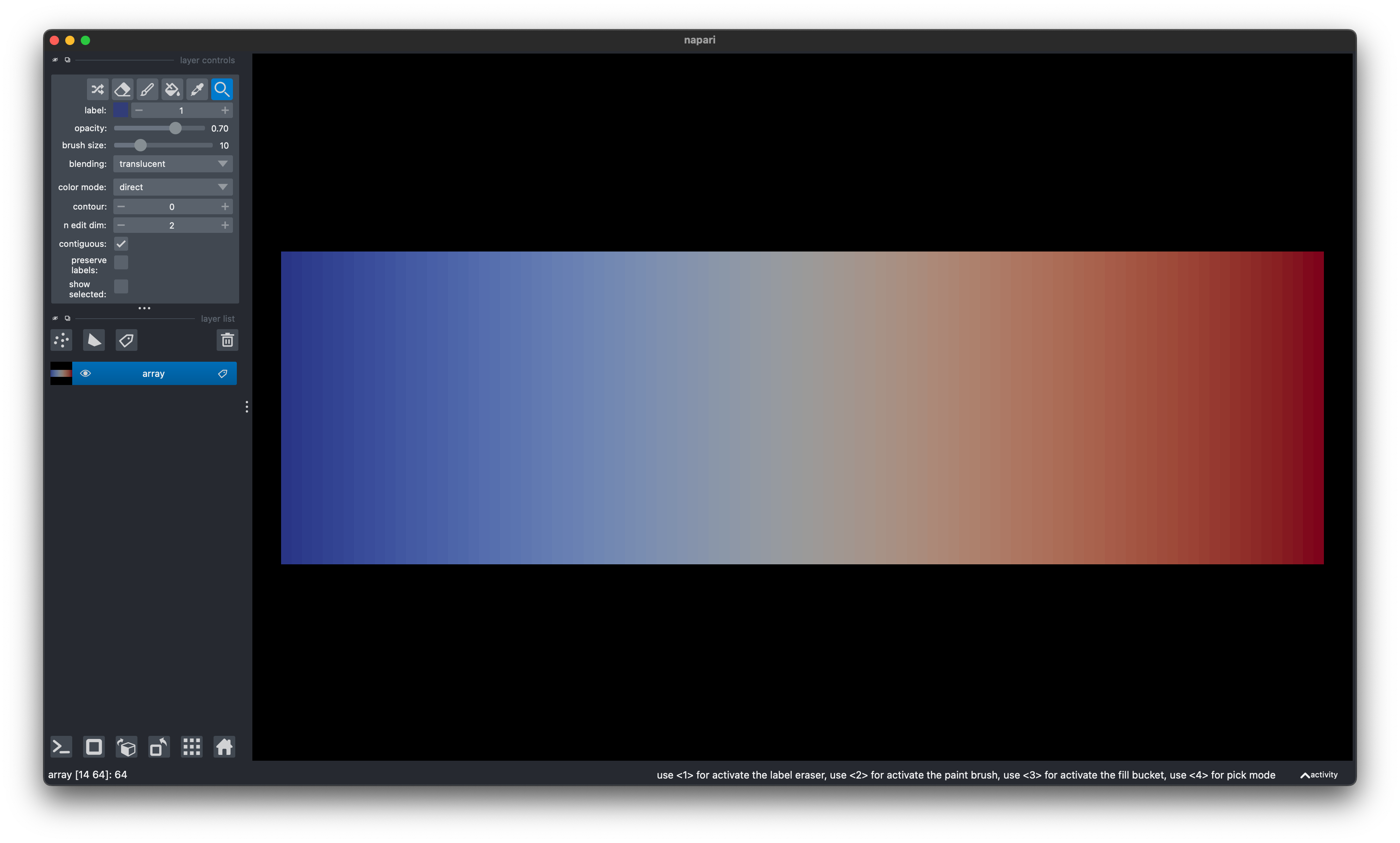

The promised example where it still fails at 1000 colors:

I made two test cases with 1000 colors (or an arbitrary number): one with all the colors in order, and a slightly more complex one where the label values are "shuffled", so that they aren't in order from left to right. That way, I thought, rounding errors should stand out more. The results surprised me.
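The linked code isn't reproduced here. As a rough sketch of the same idea, the snippet builds an ordered and a shuffled stripe image where each label is colored by its stripe position. A correct renderer should draw both as the same left-to-right ramp. The function names, the stripe layout and the blue-to-yellow ramp (a stand-in for viridis that avoids a matplotlib dependency) are all assumptions made for illustration.

```python
import numpy as np


def make_test_case(n_labels, stripe_width=2, height=10, shuffle=False, seed=0):
    """Vertical-stripe label image plus a color dict for it.

    Stripes carry label values 1..n_labels from left to right; with
    shuffle=True the values are permuted. Each label is colored by its stripe
    position, so both variants should render as the same left-to-right ramp.
    """
    values = np.arange(1, n_labels + 1)
    if shuffle:
        values = np.random.default_rng(seed).permutation(values)
    image = np.tile(np.repeat(values, stripe_width), (height, 1))
    t = np.linspace(0.0, 1.0, n_labels)
    # Blue-to-yellow RGBA ramp: a hypothetical stand-in for viridis.
    ramp = np.stack([t, t, 1.0 - t, np.ones_like(t)], axis=1)
    color_dict = {int(label): ramp[pos] for pos, label in enumerate(values)}
    return image, color_dict


def expected_rgba(image, color_dict):
    """What a correct renderer should show: look up every pixel's label color."""
    lut = np.zeros((max(color_dict) + 1, 4))
    for label, color in color_dict.items():
        lut[label] = color
    return lut[image]
```

Each `(image, color_dict)` pair can be loaded the same way as in the sample code from the report (`viewer.add_labels(image)`, then assign the dict to the layer's `color`). The displayed result can then be compared against `expected_rgba`.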

Code is here

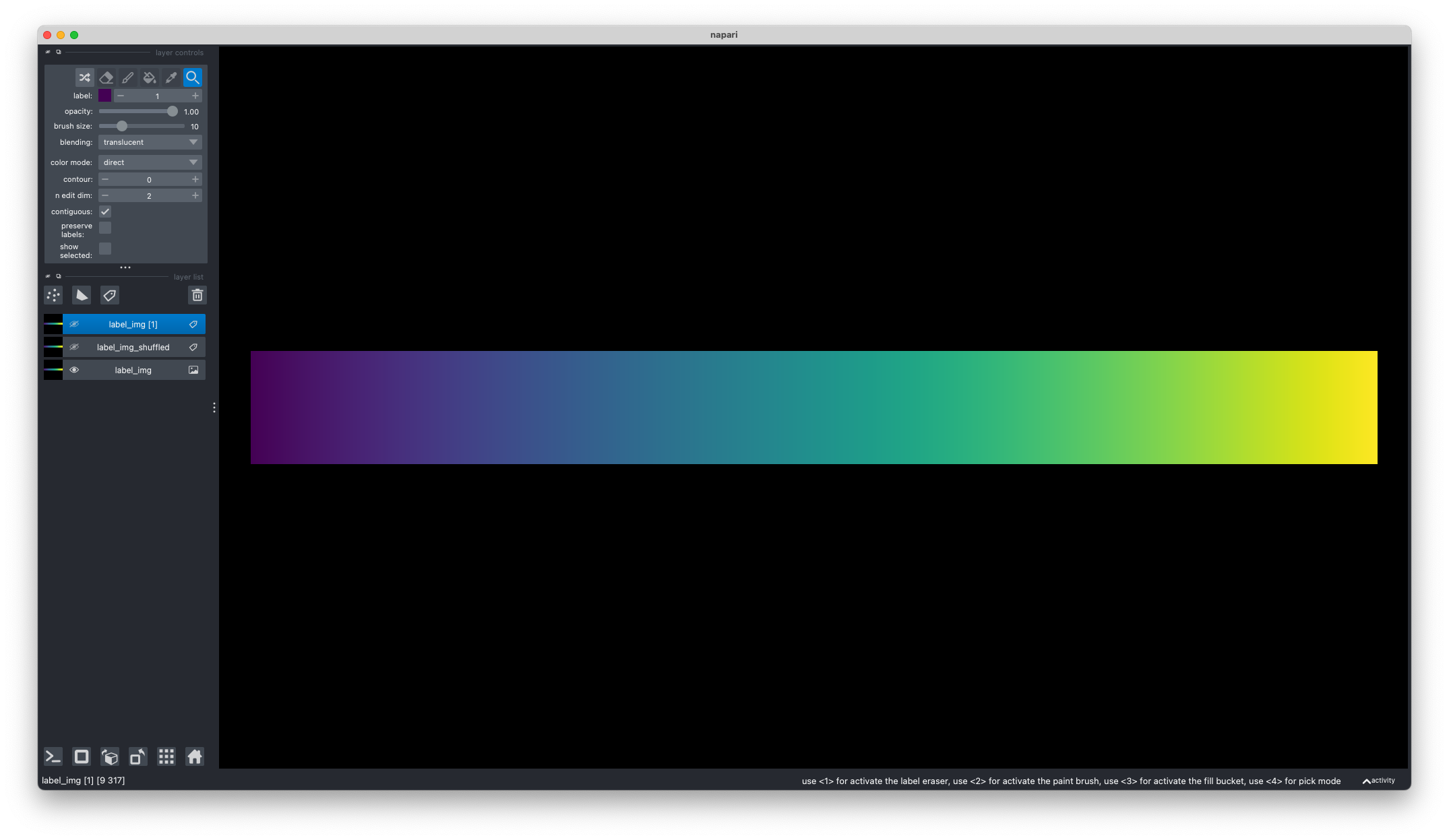

When just using the viridis colormap on the intensity image, we get this nice gradient (based on the non-shuffled image).

When using napari 0.4.16, the direct color mode on the label maps leads to this: whole blocks are assigned the wrong color. Interestingly, the ordered and the shuffled labels show exactly the same result. This again suggests that the problem lies in some rounding between the color we provide and what is displayed, not, e.g., in the matching of a color to its label value.

With the branch containing the colormap fix, it looks much better (thanks a lot, @perlman!). But it still makes a small mistake on the left side: 4 consecutive labels (4 colors that should be close to each other) get assigned the same, wrong value.
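One hypothetical way consecutive labels can end up sharing a color is coarse quantization of their colormap position. The toy model below assumes, purely for illustration, that each position in [0, 1] is rounded to one of 256 levels, as 8 bits per channel would do. Nothing in this thread confirms that napari quantizes this way. `labels_per_level` is a made-up helper.

```python
import numpy as np


def labels_per_level(n_labels, levels=256):
    """Count how many consecutive labels end up on each quantized level.

    Toy model (an assumption, not napari's confirmed behavior): label i gets
    position i / (n_labels - 1) in [0, 1], which is then rounded to one of
    `levels` evenly spaced values.
    """
    positions = np.arange(n_labels) / (n_labels - 1)
    quantized = np.round(positions * (levels - 1)).astype(int)
    return np.bincount(quantized, minlength=levels)


print(labels_per_level(100).max())   # 1: every label keeps its own level
print(labels_per_level(1000).max())  # 4: neighbouring labels share a level
```

In this toy model, 1000 labels fall into groups of 3 or 4 that share one level, which resembles the blocks of 4 described here. Whether the real cause is this or something else would need to be checked against the actual shader code.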

There are 2 interesting takeaways here:

1. With nb_steps = 100, I can't see any errors in the viridis colormap anymore, while multiple errors are visible with the old implementation. With nb_steps = 1000, there is an error again, though. So the limit where it starts to fail is much higher, but below 1000.
2. With nb_steps = 1000, the wrong stripe is actually 4 label values wide. While it's a bit hard to distinguish, zooming in on a good monitor shows that it's always blocks of 4 labels that get the exact same color assigned. So there appears to be some rounding going on, where very similar colors get matched to the same underlying value. Most of the time that is close to correct, but in rare cases it apparently is not (=> takeaway 1: the 4 colors get matched to something far away from what they should be).

EDIT: The binning of 4 colors together when there are 1000 different colors also already happened in 0.4.16; I just didn't really notice it there because there are many more problems there 😃

Thank you for the data & code.

As for the specific issue here, where the layer colormap differs from the picker, I think this is caused by some floating point precision oddities. (The relevant code is here, mostly as a placeholder for myself to step through later.)
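To show the kind of floating point oddity that can send a label to its neighbour's color, here is a generic example. It is not napari's code. Mapping an index to a position at the left edge of its bin and back can land just below the edge and pick the previous bin. Sampling the bin centre leaves a margin of half a bin. `edge_index` and `centre_index` are hypothetical helpers.

```python
import math


def edge_index(i, n):
    """Map bin i to its left-edge position, then back to a bin index."""
    return math.floor((i / n) * n)


def centre_index(i, n):
    """Same round trip, but sampling the centre of bin i."""
    return math.floor(((i + 0.5) / n) * n)


print((1 / 49) * 49)        # 0.9999999999999999
print(edge_index(1, 49))    # 0: label 1 would pick up label 0's color
print(centre_index(1, 49))  # 1
```

The centre-sampled round trip stays half a bin away from any boundary, so tiny rounding errors cannot change the result. The edge-sampled one fails for some combinations of `i` and `n`.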

@jni We do need to come up with a strategy for handling Labels-as-LUT in #3308, as this seems to be a popular use for the labels!

I’m hoping that we can fix this as part of #3308, though we haven’t yet looked at manual color settings.