milvus: [Bug]: After the node server is powered off and restarted, search fails on some collections

Is there an existing issue for this?

- I have searched the existing issues

Environment

- Milvus version: 2.2.2

- Deployment mode (standalone or cluster): cluster (Helm)

- MQ type (rocksmq, pulsar or kafka): pulsar

- SDK version (e.g. pymilvus v2.0.0rc2): 2.2.2

- OS(Ubuntu or CentOS): Ubuntu

- CPU/Memory: 256g

- GPU:

- Others:

Current Behavior

2/5000 Search fails on some collections.

Expected Behavior

Search succeeds on every collection.

Steps To Reproduce

1. The node server (k8s-node01) is powered off and restarted.

2. All collections are loaded.

3. Search fails on some collections (e.g. CollectionName: vehicle_search_20221228).

Milvus Log

milvus_log1.tar.gz milvus_log2.tar.gz milvus_log6.tar.gz my-release-milvus-indexcoord-78c4947846-n4n4j.zip

Anything else?

No response

About this issue

- Original URL

- State: closed

- Created a year ago

- Comments: 20 (9 by maintainers)

@hukang6677 Thanks for the logs. Looking into them, we found that the datanode did not successfully watch the expected channel, which caused the search requests to keep waiting for the timetick to sync up until they timed out.
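To see which channels are affected, the node names in the WARN line quoted in this comment can be reduced to their channel suffixes. The following is only a sketch: it assumes the channel name is whatever follows the `by-dev-rootcoord-` prefix in each node name (an assumption based on the names in this log, not on Milvus internals), and the helper name `stalled_channels` is made up for illustration. The sample line is an abbreviated copy of the log with two of the eight entries kept.

```python
import json
import re
from typing import List

# Abbreviated copy of the WARN line from this thread (two of eight entries kept).
LOG_LINE = (
    '{"level":"WARN","time":"2023/01/30 03:36:45.784 +00:00",'
    '"caller":"flowgraph/node.go:103",'
    '"message":"some node(s) haven\'t received input",'
    '"list":["nodeCtxTtChecker-fdNode-by-dev-rootcoord-delta_5_438321495194033264v1",'
    '"nodeCtxTtChecker-stNode-by-dev-rootcoord-delta_4_438321495194033264v0"],'
    '"duration ":"2m0s"}'
)

# Assumption: the channel name starts at "by-dev-rootcoord-" and runs to the end.
CHANNEL_RE = re.compile(r"by-dev-rootcoord-[\w-]+$")


def stalled_channels(log_line: str) -> List[str]:
    """Return the sorted, de-duplicated channel names found in the "list" field."""
    record = json.loads(log_line)
    channels = set()
    for node_name in record.get("list", []):
        match = CHANNEL_RE.search(node_name)
        if match:
            channels.add(match.group(0))
    return sorted(channels)


if __name__ == "__main__":
    for channel in stalled_channels(LOG_LINE):
        print(channel)
```

Run against the full log line, this should collapse the eight node names into the two delta channels they refer to.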

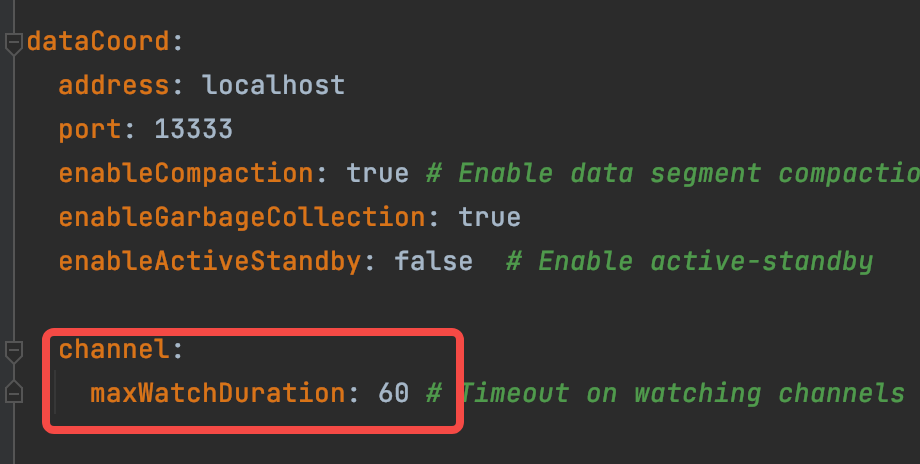

{"level":"WARN","time":"2023/01/30 03:36:45.784 +00:00","caller":"flowgraph/node.go:103","message":"some node(s) haven't received input","list":["nodeCtxTtChecker-dmInputNode-query-438321495194033264-by-dev-rootcoord-delta_5_438321495194033264v1","nodeCtxTtChecker-fdNode-by-dev-rootcoord-delta_5_438321495194033264v1","nodeCtxTtChecker-dmInputNode-query-438321495194033264-by-dev-rootcoord-delta_4_438321495194033264v0","nodeCtxTtChecker-fdNode-by-dev-rootcoord-delta_4_438321495194033264v0","nodeCtxTtChecker-stNode-by-dev-rootcoord-delta_4_438321495194033264v0","nodeCtxTtChecker-dNode-by-dev-rootcoord-delta_5_438321495194033264v1","nodeCtxTtChecker-stNode-by-dev-rootcoord-delta_5_438321495194033264v1","nodeCtxTtChecker-dNode-by-dev-rootcoord-delta_4_438321495194033264v0"],"duration ":"2m0s"}

Could you please try searching with consistency_level=eventual? See the Milvus documentation on consistency levels for more information. If that works for you, you can also raise maxWatchDuration to 900 so that the watch-channel operations do not fail on timeout.
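A search with eventual consistency might look roughly like the sketch below in pymilvus, where the keyword value is spelled `"Eventually"`. The vector field name, metric type, `nprobe` value, host and port are all assumptions, since the thread does not show the collection schema or index, and the helper names are made up for illustration.

```python
from typing import Any, Dict, List


def build_search_kwargs(
    query_vectors: List[List[float]], anns_field: str, limit: int = 10
) -> Dict[str, Any]:
    """Build keyword arguments for Collection.search with eventual consistency."""
    return {
        "data": query_vectors,
        "anns_field": anns_field,
        # Assumed metric and index parameters; adjust to the real index.
        "param": {"metric_type": "L2", "params": {"nprobe": 16}},
        "limit": limit,
        # Skip waiting for the timetick to catch up before searching.
        "consistency_level": "Eventually",
    }


def search_eventually(
    collection_name: str,
    query_vectors: List[List[float]],
    anns_field: str,
    host: str = "localhost",  # assumed address of the Milvus proxy
    port: str = "19530",
):
    """Connect to Milvus and run one search with eventual consistency."""
    # Imported here so build_search_kwargs can be used without pymilvus installed.
    from pymilvus import Collection, connections

    connections.connect(alias="default", host=host, port=port)
    collection = Collection(collection_name)
    return collection.search(**build_search_kwargs(query_vectors, anns_field))
```

For the collection named in this report, a call would be along the lines of `search_eventually("vehicle_search_20221228", [query_vector], "embedding")`, where `"embedding"` stands in for the real vector field name. If this search returns while the default consistency level still times out, that would be consistent with the timetick stall described in this comment.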