milvus: [Bug]: Size of `rdb_data` folder keeps increasing

Is there an existing issue for this?

- I have searched the existing issues

Environment

- Milvus version: 2.1.1

- Deployment mode(standalone or cluster): standalone

- SDK version(e.g. pymilvus v2.0.0rc2): pymilvus 2.1.1

- OS(Ubuntu or CentOS): Ubuntu

- CPU/Memory: PVC for standalone pod is 50GB

- GPU:

- Others:

Current Behavior

The size of the `rdb_data` folder keeps increasing, despite the following rocksmq configuration:

    rocksmq:
      retentionTimeInMinutes: 60 ## 1 hour
      retentionSizeInMB: 500
      rocksmqPageSize: "2147483648" ## 2 GB
      lrucacheratio: 0.06 ## rocksdb cache memory ratio

It eventually reaches 50 GB, the size of the standalone pod's PVC, and causes the standalone pod to go into CrashLoopBackOff.

Expected Behavior

Given the rocksmq configuration above, I would expect the `rdb_data` folder to be cleared every hour.
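As a rough illustration of why the folder might not shrink even with these settings, here is a simplified model of page-based retention. It is a hypothetical sketch, not Milvus's actual implementation: the `Page` class, the `pages_to_delete` function, and the rules that retention is applied per page and that only fully acknowledged pages are eligible are all assumptions made for illustration.

```python
from dataclasses import dataclass

MB = 1024 * 1024


@dataclass
class Page:
    """One message page in this hypothetical model (not a Milvus type)."""
    end_ts_minutes: float  # time the last message in the page was written
    size_bytes: int
    acked: bool  # True if every consumer has acknowledged the whole page


def pages_to_delete(pages, now_minutes, retention_minutes, retention_mb):
    """Return indices of pages eligible for deletion, oldest first.

    Assumed policy: only fully acknowledged pages are considered; a page is
    dropped if it is older than the retention time, or while the total
    acknowledged data still exceeds the retention size.
    """
    acked = [i for i, p in enumerate(pages) if p.acked]
    acked_total = sum(pages[i].size_bytes for i in acked)
    limit = retention_mb * MB
    doomed = []
    for i in acked:  # pages are assumed to be ordered oldest first
        p = pages[i]
        too_old = now_minutes - p.end_ts_minutes > retention_minutes
        too_big = acked_total > limit
        if not (too_old or too_big):
            break  # younger pages can only be newer, and the size is within limit
        doomed.append(i)
        acked_total -= p.size_bytes
    return doomed


# Three 2 GB pages (matching rocksmqPageSize); the newest is not yet acknowledged.
pages = [
    Page(0, 2048 * MB, True),
    Page(30, 2048 * MB, True),
    Page(50, 2048 * MB, False),
]
print(pages_to_delete(pages, now_minutes=70, retention_minutes=60, retention_mb=500))
```

In this model the unacknowledged page is never deleted, however old it is, and each deletion frees a whole 2 GB page at a time, so disk usage moves in large steps. Separately, RocksDB records deletes as tombstones, so disk space is only reclaimed once compaction runs.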

Steps To Reproduce

Deploy Milvus standalone to K8s via the Helm chart, with the following values.yaml:

https://gist.github.com/devennavani/3629603122333e8a245a1f564d691838

Milvus Log

No response

Anything else?

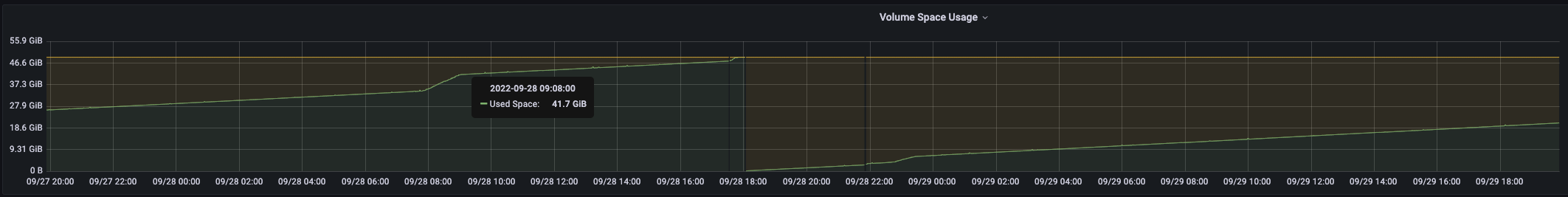

milvus PV usage:

About this issue

- State: closed

- Created 2 years ago

- Reactions: 1

- Comments: 34 (20 by maintainers)

This is only an issue with rocksmq (i.e., in Milvus standalone).

Sorry, we had a week-long holiday for the festival, which is now over. I will work on this immediately.

Actually, changing the default channel number to 16 in 2.2.8 may also help.
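One possible reading of why fewer channels could help is a worst-case bound on space that retention cannot reclaim yet. The sketch assumes, purely hypothetically, that each channel can hold one partially filled page that is not yet eligible for deletion; under that assumption the bound is simply channels × page size. The function name is illustrative, the 2 GB page size and 50 GB PVC come from the report, and 256 is only an assumed larger channel count for comparison. (The channel count is, to our understanding, set by `rootCoord.dmlChannelNum` in `milvus.yaml`.)

```python
GB = 1024 ** 3


def worst_case_unreclaimed_bytes(num_channels: int, page_size_bytes: int) -> int:
    """Upper bound under a hypothetical model: every channel holds one
    partially filled page that retention cannot delete yet."""
    return num_channels * page_size_bytes


pvc_bytes = 50 * GB  # PVC size from the report
page_size = 2 * GB   # rocksmqPageSize from the report

# 256 is an assumed larger channel count, used only for comparison with 16.
for channels in (256, 16):
    bound = worst_case_unreclaimed_bytes(channels, page_size)
    verdict = "exceeds PVC" if bound > pvc_bytes else "fits in PVC"
    print(channels, "channels:", bound // GB, "GB,", verdict)
```

Under this model, the bound scales linearly with both the channel count and the page size, so reducing either one shrinks the worst case.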