milvus: [Bug]: Milvus hangs occasionally when receiving search requests after running for several days.

Is there an existing issue for this?

- I have searched the existing issues

Environment

- Milvus version: 1.1

- Deployment mode(standalone or cluster):standalone

- SDK version(e.g. pymilvus v2.0.0rc2): milvus-sdk-java, matching the Milvus server version

- OS(Ubuntu or CentOS): CentOS

- CPU/Memory: 8cpu/34GB

- GPU: No

- Others: running in Docker

Current Behavior

I tried to analyze this bug with gdb and the logs, as follows:

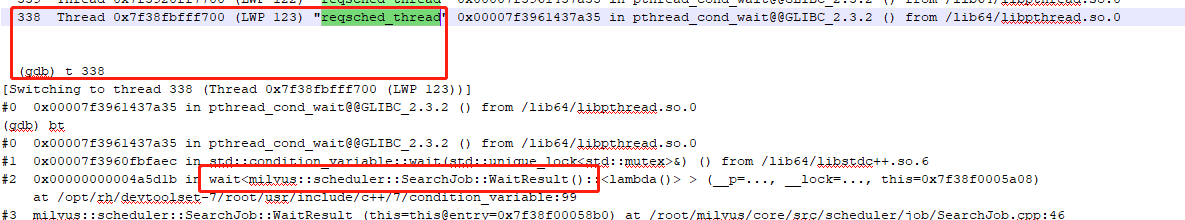

When certain search requests arrive, reqsched_thread hangs forever because it is waiting for the result of the current job. The thread's stack trace is as follows:

The blocking point in the code is:


src/scheduler/job/SearchJob.cpp

```cpp
void
SearchJob::WaitResult() {
    std::unique_lock<std::mutex> lock(mutex_);
    cv_.wait(lock, [this] { return index_files_.empty(); });  // <-- blocks here forever
    LOG_SERVER_DEBUG_ << LogOut("[%s][%ld] SearchJob %ld all done", "search", 0, id());
}
```
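To make the wait/notify contract behind WaitResult() concrete, here is a minimal sketch. MiniSearchJob, OnTaskDone, Pending and RunAllTasks are hypothetical names, not Milvus code; the sketch only assumes that each finished task removes its index file from index_files_ under the mutex and then notifies the condition variable. WaitResult() can only return once every file has been removed, so a single task that never runs keeps the waiter blocked forever.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <set>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical, stripped-down stand-in for SearchJob: it only models the
// "wait until every index file has been searched" contract.
class MiniSearchJob {
 public:
    explicit MiniSearchJob(std::set<std::string> files) : index_files_(std::move(files)) {}

    // Called by a worker when the task for `file` finishes.
    void OnTaskDone(const std::string& file) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            index_files_.erase(file);  // mutate the predicate state under the lock
        }
        cv_.notify_all();  // wake the waiter so it re-checks the predicate
    }

    // Same shape as SearchJob::WaitResult(): blocks until index_files_ is empty.
    void WaitResult() {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return index_files_.empty(); });
    }

    std::size_t Pending() {
        std::lock_guard<std::mutex> lock(mutex_);
        return index_files_.size();
    }

 private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::set<std::string> index_files_;
};

// Every file gets exactly one completed task, so WaitResult() returns.
std::size_t RunAllTasks() {
    std::set<std::string> files = {"seg_1", "seg_2", "seg_3"};
    MiniSearchJob job(files);
    std::vector<std::thread> workers;
    for (const auto& f : files) {
        workers.emplace_back([&job, f] { job.OnTaskDone(f); });  // f copied per thread
    }
    job.WaitResult();
    for (auto& w : workers) w.join();
    return job.Pending();
}
```

If any of the three OnTaskDone calls were skipped, the call to job.WaitResult() above would never return, which matches the symptom seen in reqsched_thread.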

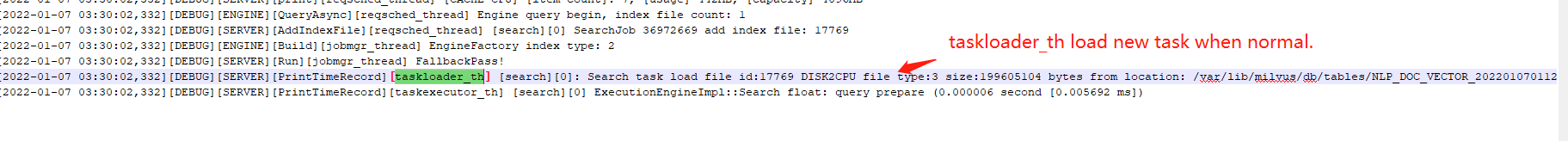

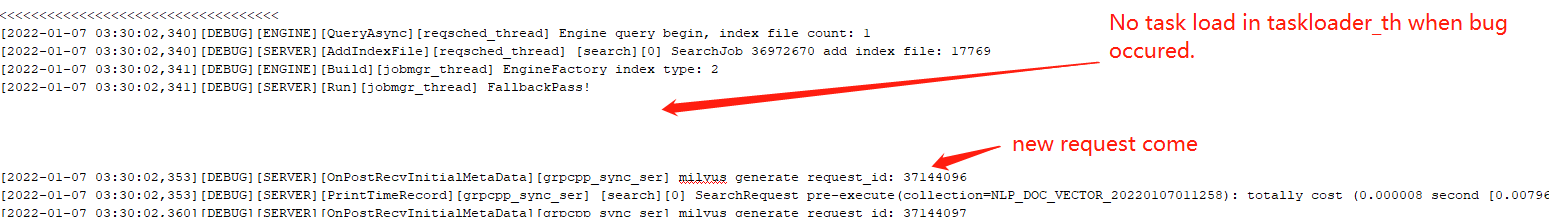

The task for this job was never reloaded by taskloader_th, as the following debug log shows:

normal:

Bug occurred:
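If a task is never reloaded, nothing ever erases its index file, and an unbounded wait gives no clue which file is stuck. The sketch below is a hypothetical diagnostic, not Milvus code: TimedJob, WaitResultFor and RunWithDroppedTask are invented names. It uses std::condition_variable::wait_for with a predicate so that, on timeout, the job reports which index files never completed, and it simulates a loader that silently skips one task.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <set>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical model of a job whose tasks are dispatched by a loader thread.
class TimedJob {
 public:
    explicit TimedJob(std::set<std::string> files) : index_files_(std::move(files)) {}

    void OnTaskDone(const std::string& file) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            index_files_.erase(file);
        }
        cv_.notify_all();
    }

    // Diagnostic variant: wait at most `timeout`; on timeout, report which
    // index files never finished instead of blocking forever.
    bool WaitResultFor(std::chrono::milliseconds timeout, std::vector<std::string>& missing) {
        std::unique_lock<std::mutex> lock(mutex_);
        bool done = cv_.wait_for(lock, timeout, [this] { return index_files_.empty(); });
        if (!done) missing.assign(index_files_.begin(), index_files_.end());
        return done;
    }

 private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::set<std::string> index_files_;
};

// Simulates a loader that silently skips the task for `drop`.
bool RunWithDroppedTask(const std::string& drop, std::vector<std::string>& missing) {
    std::set<std::string> files = {"seg_1", "seg_2", "seg_3"};
    TimedJob job(files);
    std::thread loader([&job, &files, &drop] {
        for (const auto& f : files) {
            if (f == drop) continue;  // this task is never loaded or executed
            job.OnTaskDone(f);
        }
    });
    bool done = job.WaitResultFor(std::chrono::milliseconds(200), missing);
    loader.join();
    return done;
}
```

Logging the contents of missing at the timeout point would show directly which index file's task was lost, which could help narrow down where taskloader_th drops it.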

Expected Behavior

No response

Steps To Reproduce

1. Start 10 or more standalone Milvus servers, each running in its own Docker container (the more servers, the sooner the bug shows up).

2. Every hour, some indexes are created and others deleted. Index sizes range from 100K to 1M, and the search QPS per server is about 100. All indexes are IVF_FLAT with nlist=1000.

3. After running for some time, usually about one day, 1 or 2 Milvus servers hit the bug.

4. The bug often appears not long after a new collection/index is created and an old one is deleted, though not always.

Anything else?

I found that every wait between threads is unbounded, like the cv_.wait call in SearchJob::WaitResult() quoted above: there is no timeout, so if the notification never arrives, the waiting thread blocks forever.

Is it acceptable to have no error-recovery code here, especially in an online service?
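One possible shape for such a recovery path is sketched below. It is an assumption, not how Milvus handles this: RecoverableJob, JobStatus and TimeoutThenLateTask are hypothetical. On timeout the job marks itself cancelled and returns an error status, so the request thread can fail that one request and move on; a task that finishes later sees the cancelled flag and discards its result. Holding the job in a std::shared_ptr keeps it alive for such stragglers after the caller has given up.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <memory>
#include <mutex>
#include <set>
#include <string>
#include <thread>
#include <utility>

enum class JobStatus { kOk, kTimeout };

// Hypothetical job with a recovery path: on timeout it is marked cancelled so
// that tasks finishing later do not touch an abandoned job's results.
class RecoverableJob {
 public:
    explicit RecoverableJob(std::set<std::string> files) : index_files_(std::move(files)) {}

    // Returns false if the job was already abandoned and the result is discarded.
    bool OnTaskDone(const std::string& file) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (cancelled_) return false;
            index_files_.erase(file);
        }
        cv_.notify_all();
        return true;
    }

    JobStatus WaitResult(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mutex_);
        if (cv_.wait_for(lock, timeout, [this] { return index_files_.empty(); })) {
            return JobStatus::kOk;
        }
        cancelled_ = true;  // give up: the caller reports an error instead of hanging
        return JobStatus::kTimeout;
    }

 private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::set<std::string> index_files_;
    bool cancelled_ = false;
};

// The request thread times out first, then a straggler task arrives late.
std::pair<JobStatus, bool> TimeoutThenLateTask() {
    auto job = std::make_shared<RecoverableJob>(std::set<std::string>{"seg_1"});
    JobStatus status = job->WaitResult(std::chrono::milliseconds(50));
    bool accepted = true;
    // The thread holds its own shared_ptr copy, so the job outlives the caller's use.
    std::thread late([job, &accepted] { accepted = job->OnTaskDone("seg_1"); });
    late.join();
    return {status, accepted};
}
```

The trade-off is that a timeout value has to be chosen per deployment; too short a value would fail legitimately slow searches, while too long a value still stalls the request thread for that long.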

About this issue


- State: closed

- Created 2 years ago

- Comments: 32 (14 by maintainers)

You can try my solution. https://github.com/milvus-io/milvus/issues/15038#issuecomment-1035801176

This bug is critical, and I do want to fix it. The problem is that I could not reproduce it to pin down the crash point, nor could I deduce the crash point by reading the source code (some suspicious places have already been ruled out).

Milvus 1.x is in maintenance mode and will not get new features, so the "on-disk index" is only available in 2.x.