milvus: [Bug]: [Memory]Release and load collection get exception no queryNode to allocate

Is there an existing issue for this?

- I have searched the existing issues

Environment

- Milvus version: master-20211123-73f18c5

- Deployment mode(standalone or cluster): cluster

- SDK version(e.g. pymilvus v2.0.0rc2): pymilvus 2.0.0rc9.dev11

- OS(Ubuntu or CentOS):

- CPU/Memory: limit querynode memory 2Gi

- GPU:

- Others:

Current Behavior

- deploy a Milvus cluster (master-20211123-73f18c5) with querynode memory limited to 2Gi

- load a collection (dim=512, num_entities=399360, about 780Mi)

- search: succeeded

- release the collection: no exception, but querynode memory usage did not drop

- re-load the collection: failed with `no queryNode to allocate` (log below)
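The steps above can be sketched with the pymilvus 2.0 ORM API. This is a minimal, hypothetical reproduction, not the original test (`test_chaos_memory_stress.py` is not shown here). The collection name, host/port, batch size and search parameters are assumptions. The 2Gi querynode memory limit has to be applied to the deployment itself, and depending on the Milvus version a flush may be needed before the first load.

```python
import random

DIM = 512               # from the schema printed in the log
NUM_ENTITIES = 399_360  # from the report
BATCH = 10_000          # hypothetical batch size to keep each insert RPC small


def make_batch(start, size, dim=DIM):
    """Build one column-based batch: [int64 ids, float scalars, float vectors]."""
    ids = list(range(start, start + size))
    floats = [float(i) for i in ids]
    vectors = [[random.random() for _ in range(dim)] for _ in range(size)]
    return [ids, floats, vectors]


def reproduce(host="127.0.0.1", port="19530", name="memory_stress_repro"):
    # Imported lazily so make_batch() can be used without pymilvus installed.
    from pymilvus import (Collection, CollectionSchema, DataType,
                          FieldSchema, connections)

    connections.connect(alias="default", host=host, port=port)
    schema = CollectionSchema(fields=[
        FieldSchema(name="int64", dtype=DataType.INT64, is_primary=True),
        FieldSchema(name="float", dtype=DataType.FLOAT),
        FieldSchema(name="float_vector", dtype=DataType.FLOAT_VECTOR, dim=DIM),
    ])
    collection = Collection(name=name, schema=schema)
    for start in range(0, NUM_ENTITIES, BATCH):
        collection.insert(make_batch(start, min(BATCH, NUM_ENTITIES - start)))

    collection.load()                 # step: load
    query_vectors = make_batch(0, 1)[2]
    collection.search(query_vectors, "float_vector",
                      {"metric_type": "L2", "params": {"nprobe": 10}},
                      limit=10)       # step: search
    collection.release()              # step: release (memory reportedly not freed)
    collection.load()                 # step: re-load (reportedly fails here)
```

Call `reproduce()` against a test cluster; the script itself makes no connection until then.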

```
[2021-11-23 04:13:43,651 - INFO - ci_test]: ################################################################################ (conftest.py:162)
[2021-11-23 04:13:43,651 - INFO - ci_test]: [initialize_milvus] Log cleaned up, start testing... (conftest.py:163)
[2021-11-23 04:13:44,673 - DEBUG - ci_test]: {
  auto_id: False
  description:
  fields: [{
    name: int64
    description:
    type: 5
    is_primary: True
    auto_id: False
  }, {
    name: float
    description:
    type: 10
  }, {
    name: float_vector
    description:
    type: 101
    params: {'dim': 512}
  }]
}
 (test_chaos_memory_stress.py:63)
[2021-11-23 04:13:44,673 - DEBUG - ci_test]: 2 (test_chaos_memory_stress.py:64)
[2021-11-23 04:13:48,047 - ERROR - ci_test]: Traceback (most recent call last):
  File "/root/milvus/tests/python_client/utils/api_request.py", line 18, in inner_wrapper
    res = func(*args, **kwargs)
  File "/root/milvus/tests/python_client/utils/api_request.py", line 45, in api_request
    return func(*arg, **kwargs)
  File "/usr/local/lib/python3.6/site-packages/pymilvus/orm/collection.py", line 449, in load
    conn.load_collection(self._name, timeout=timeout, **kwargs)
  File "/usr/local/lib/python3.6/site-packages/pymilvus/client/stub.py", line 58, in handler
    raise e
  File "/usr/local/lib/python3.6/site-packages/pymilvus/client/stub.py", line 42, in handler
    return func(self, *args, **kwargs)
  File "/usr/local/lib/python3.6/site-packages/pymilvus/client/stub.py", line 322, in load_collection
    return handler.load_collection("", collection_name=collection_name, timeout=timeout, **kwargs)
  File "/usr/local/lib/python3.6/site-packages/pymilvus/client/grpc_handler.py", line 75, in handler
    raise e
  File "/usr/local/lib/python3.6/site-packages/pymilvus/client/grpc_handler.py", line 67, in handler
    return func(self, *args, **kwargs)
  File "/usr/local/lib/python3.6/site-packages/pymilvus/client/grpc_handler.py", line 820, in load_collection
    raise BaseException(response.error_code, response.reason)
pymilvus.client.exceptions.BaseException: <BaseException: (code=1, message=call query coordinator LoadCollection: rpc error: code = Unknown desc = , no queryNode to allocate)>
 (api_request.py:26)
[2021-11-23 04:13:48,047 - ERROR - ci_test]: (api_response) : <BaseException: (code=1, message=call query coordinator LoadCollection: rpc error: code = Unknown desc = , no queryNode to allocate)> (api_request.py:27)
```
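The "about 780Mi" in the load step matches the raw float-vector payload implied by the logged schema. In pymilvus's `DataType`, type codes 5, 10 and 101 are `INT64`, `FLOAT` and `FLOAT_VECTOR`. The back-of-the-envelope check below is a sketch: it counts only the raw field data and ignores index structures, segment metadata and any copies made during loading, so actual querynode memory usage will be higher.

```python
# Type codes printed in the DEBUG log, mapped to pymilvus DataType names and
# the number of bytes per element of each type.
TYPE_CODES = {
    5: ("INT64", 8),
    10: ("FLOAT", 4),
    101: ("FLOAT_VECTOR", 4),  # bytes per float32 component
}

# (field name, type code, elements per row) taken from the logged schema
SCHEMA = [("int64", 5, 1), ("float", 10, 1), ("float_vector", 101, 512)]
ROWS = 399_360  # num_entities from the report


def raw_row_bytes(schema):
    """Sum the raw payload of one row, ignoring index and metadata overhead."""
    total = 0
    for _field, code, elems in schema:
        _type_name, elem_bytes = TYPE_CODES[code]
        total += elem_bytes * elems
    return total


def to_mib(n_bytes):
    return n_bytes / 2**20


vector_bytes = ROWS * 512 * 4
total_bytes = ROWS * raw_row_bytes(SCHEMA)
print(f"vectors: {to_mib(vector_bytes):.1f} MiB, all fields: {to_mib(total_bytes):.1f} MiB")
# prints "vectors: 780.0 MiB, all fields: 784.6 MiB"
```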

Server log: milvus_logs.tar.gz

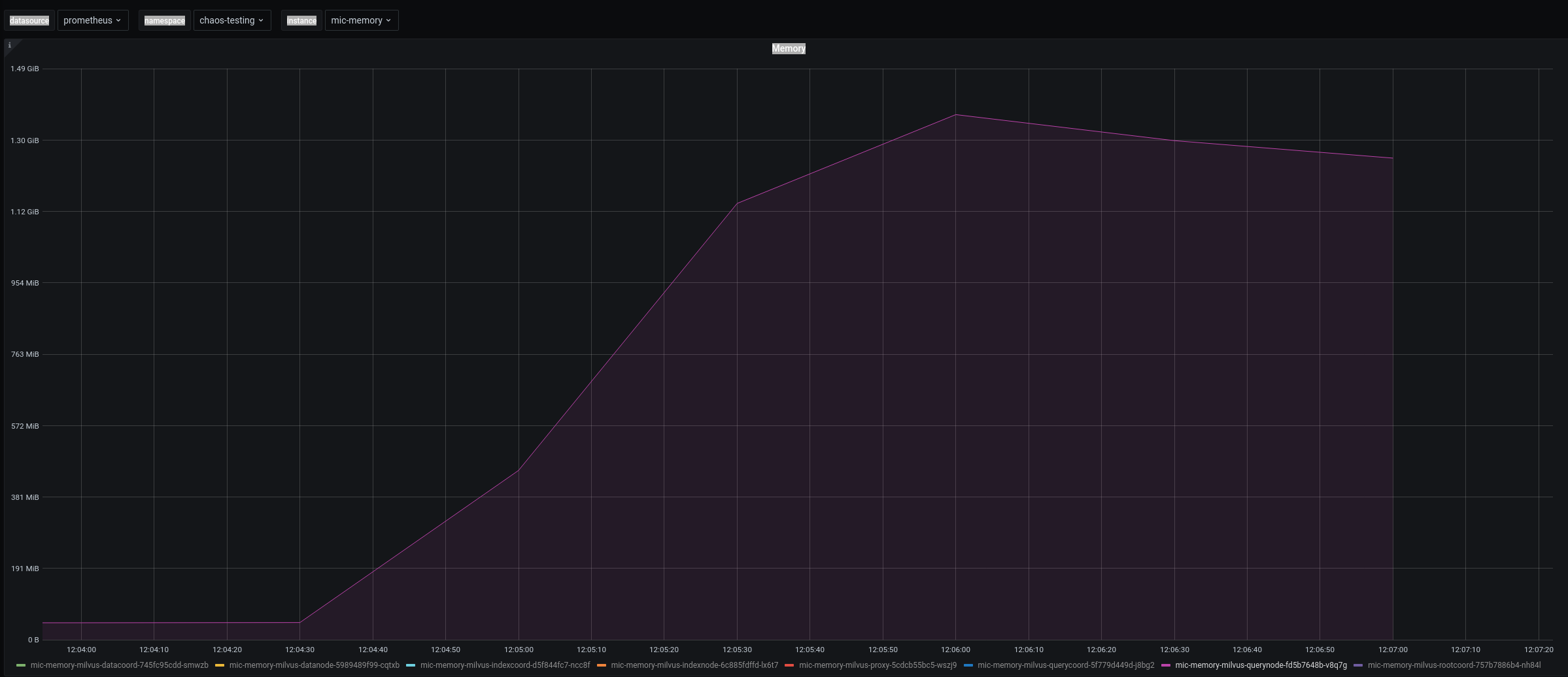

Memory usage:

Expected Behavior

No response

Steps To Reproduce

No response

Anything else?

No response

About this issue


- State: closed

- Created 3 years ago

- Comments: 18 (18 by maintainers)

This issue exposed many problems.

I will open two more issues to address problems 2 and 3. Problem 1, mentioned in this issue, can be closed now.

Experiment code: