longhorn: [BUG] RWX doesn't work with release 1.4.0 due to end grace update error from recovery backend

Describe the bug (🐛 if you encounter this issue)

I reinstall longhorn 1.4.0 with k3s 1.25.5, everything is fine but RWX volume mount is repeatedly failed

To Reproduce

Steps to reproduce the behavior:

- Make a volume with RWX

- Mount it with a pod

Expected behavior

RWX should be mounted, as was in 1.3.2

Log or Support bundle

Here is the log from share-manager-<volume-name>:

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_FILEHANDLE from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_DISPATCH from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_CACHE_INODE from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_CACHE_INODE_LRU from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_HASHTABLE from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_HASHTABLE_CACHE from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_DUPREQ from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_INIT from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_MAIN from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_IDMAPPER from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_NFS_READDIR from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_NFS_V4_LOCK from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_CONFIG from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_CLIENTID from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_SESSIONS from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_PNFS from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_RW_LOCK from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_NLM from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_RPC from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_TIRPC from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_NFS_CB from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_THREAD from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_NFS_V4_ACL from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_STATE from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_9P from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_9P_DISPATCH from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_FSAL_UP from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_DBUS from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] SetComponentLogLevel :LOG :NULL :LOG: Changing log level of COMPONENT_NFS_MSK from NIV_EVENT to NIV_INFO │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] nfs_set_param_from_conf :NFS STARTUP :EVENT :Configuration file successfully parsed │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] init_fds_limit :INODE LRU :EVENT :Setting the system-imposed limit on FDs to 1048576. │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] init_server_pkgs :NFS STARTUP :INFO :State lock layer successfully initialized │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] init_server_pkgs :NFS STARTUP :INFO :IP/name cache successfully initialized │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] init_server_pkgs :NFS STARTUP :EVENT :Initializing ID Mapper. │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] init_server_pkgs :NFS STARTUP :EVENT :ID Mapper successfully initialized. │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] nfs4_recovery_init :CLIENT ID :INFO :Recovery Backend Init for longhorn │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] longhorn_recov_init :CLIENT ID :EVENT :Initialize recovery backend 'share-manager-shared-volume' │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] nfs_start_grace :STATE :EVENT :NFS Server Now IN GRACE, duration 90 │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] longhorn_read_recov_clids :CLIENT ID :EVENT :Read clients from recovery backend share-manager-shared-volume │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] read_clids :CLIENT ID :EVENT :response={"actions":{},"clients":[],"hostname":"share-manager-shared-volume","id":"share-manager-shared-volume","links":{"self":"http://longhorn-re │

│ │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] nfs_start_grace :STATE :EVENT :grace reload client info completed from backend │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] nfs_try_lift_grace :STATE :EVENT :check grace:reclaim complete(0) clid count(0) │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] longhorn_recov_end_grace :CLIENT ID :EVENT :End grace for recovery backend 'share-manager-shared-volume' version LUUZWL8T │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] http_call :CLIENT ID :EVENT :HTTP error: 500 (url=http://longhorn-recovery-backend:9600/v1/recoverybackend/share-manager-shared-volume, payload={"version": "LUUZWL8T"}) │

│ 31/12/2022 22:40:52 : epoch 63b0ba74 : share-manager-shared-volume : nfs-ganesha-29[main] longhorn_recov_end_grace :CLIENT ID :FATAL :HTTP call error: res=-1 ((null)) │

│ time="2022-12-31T22:40:52Z" level=error msg="NFS server exited with error" encrypted=false error="ganesha.nfsd failed with error: exit status 2, output: " volume=shared-volume │

│ W1231 22:40:52.523325 1 mount_helper_common.go:133] Warning: "/export/shared-volume" is not a mountpoint, deleting │

│ time="2022-12-31T22:40:52Z" level=debug msg="Device /dev/mapper/shared-volume is not an active LUKS device" error="failed to run cryptsetup args: [status shared-volume] output: error: exit status 4"

Environment

- Longhorn version: 1.4.0

- Installation method (e.g. Rancher Catalog App/Helm/Kubectl): Helm

- Kubernetes distro (e.g. RKE/K3s/EKS/OpenShift) and version: k3s 1.25.5

- Number of management node in the cluster: 2

- Number of worker node in the cluster: 2

- Node config

- OS type and version: Ubuntu 20.04

- CPU per node: 64

- Memory per node: 384Gi

- Disk type(e.g. SSD/NVMe): SSD

- Network bandwidth between the nodes: 10G + 10G (link aggregated)

- Underlying Infrastructure (e.g. on AWS/GCE, EKS/GKE, VMWare/KVM, Baremetal): On-prem

- Number of Longhorn volumes in the cluster: 7

Additional context

Add any other context about the problem here.

About this issue

- Original URL

- State: closed

- Created 2 years ago

- Comments: 133 (73 by maintainers)

Thanks @jinserk ! You are heavily contributing to Longhorn 🙂 . Helpful feedback!

From the log and code flow, the full story is

To improve the resilience to the conflict error

Jinserk Baik @.***>於 2023年1月1日 週日,上午11:20寫道:

done 👍 @derekbit

Yes I used longhorn from v1.3.1, and experienced this very frequently, especially everytime I reinstall the k3s cluster.

I already applied the https://github.com/longhorn/longhorn/issues/3207 workaround, and found that it works in my case too. Every volumes in my use has 2 replicas on both nodes, which hit the case in https://github.com/longhorn/longhorn/issues/3207

@derekbit Wow it looks working! It is amazing! Did you resolve the 4-yr long issue? 👍

The new image is applied:

The rwx-volume test is applied:

I’ll do it with my Jupyterhub env and test it under the multiple pods mounting!

Works expectedly in my env, but I used the k3s embeded db and without HA in this test. I can try the HA + external db tomorrow.

Thank you for the update.

It is the workaround. Will fix the it as I mentioned in the next release.

Jinserk Baik @.***>於 2023年1月1日 週日,下午4:12寫道:

@derekbit It looks working!!

Thank you so much @derekbit! for the nice workaround. I guess this will resolve my problem, but if you need to fix the issue to the next release, I’ll help for the test. 😃

@tbertenshaw Because your issue is different than the issue @jinserk encountered, can you open a ticket for tracking what you encountered? Provide the env, steps, symptons and the latest support bundle. We will handle it in the new ticket. Thank you.

supportbundle_cf947ccc-9d7e-490f-8df5-f22db6f413f7_2023-01-06T14-28-35Z.zip @derekbit 😃

Thanks, @tbertenshaw to cooperate with us for testing to figure out this rare cause. 👍

@innobead From the patched image. @tbertenshaw You can fall back to

longhornio/longhorn-manager:v1.4.0. Looks the patched one has some issues.@derekbit longhorn-manager-x5p6j.log as well as the share manager restarting, i can see that one of the longhorn-manager pods has restarted 123 times longhorn-manager-x5p6j.log

@tbertenshaw Sorry, my bad. Checked the wrong node before.

Yes, as you mentioned. The share-manager pod is created and deleted repeatedly. The share-manager controller complains

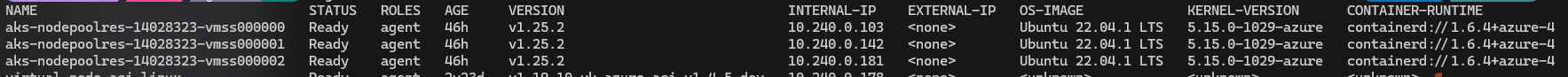

cannot check IsNodeDownOrDeleted() when syncShareManagerPodbecause of the errorno node name provided to check node down or deleted. I also checked the log, and the share-manager pod’sspec.nodeNameis actually set toaks-nodepoolres-14028323-vmss000001which is ready.I suspect this is probably caused by that immediately checking

pod.spec.nodeNameafter creating a share-manager pod.I’m going to work on a patch, and I would appreciate it if you help check it.

@innobead @derekbit scaled workload to 3 pods and this is the support bundle after an hour of this config.

supportbundle_cf947ccc-9d7e-490f-8df5-f22db6f413f7_2023-01-04T18-53-12Z.zip

share-manager-pvc-f5952e3a-d202-4e8f-9fcf-277d70bec191.log

Any more detail needed?

@jinserk Can you try the workaround mentioned in https://github.com/longhorn/longhorn/issues/3207? I will continue digging in the new issue. BTW, does the issue happen in v1.3.x as well?

@timbo

Sorry, I didn’t explain clearly. Should replace the longhorn-recovery-backend deployment’s image with

derekbit/longhorn-manager:v1.4.0-update, because the NFS recovery-backend logics are in this deployment.@derekbit The volume name is

jinserk-baik-volumeand the pvc name isclaim-jinserk-2ebaik. The timing is more than 10 min – as you can see the pod description above.I see, the functions in

datastore/kubernetesdo not containverifyCreateorverifyUpdateNo… I just knew the comments Sheng left in the ticket…Hello @jinserk I added the retry of confimap update on conflict in image

derekbit/longhorn-manager:v1.4.0-update. Could you please try again? Thank you.@longhorn/qa @chriscchien I cannot reproduce the issue in my env. Can you help check if you can reproduce it using v1.4.0 images?

No, my colleague added the verification for some resources creation operations for a workaound. I just thought of the verification and added it to the share-manager’s configmap creation flow. 😃 Look forward to your test results. Many thanks for your patience and help.

From the issue, I think we should verify all creations for all resource creation for avoiding any accident. cc @innobead

@innobead Do you mean the k3s database? no, I’m using a remote postgresql server for k3s instead of sqlite or etcd.

/var/lib/rancherand/var/lib/kubeletresides in a separated SSD, on both nodes.Also I’d like to state that the physical volume for the longhorn use on each nodes are software-raid1 (mirroring) MD volume with 2 10TB HDDs.

By the way, can you try the simple steps in #5183 (comment)? I’d check if the simple test still hits the error.

Sure, I’ll check it too!

One more thing is, I’m using two master nodes (and also work as worker nodes) with HA configuration – a remote postgresql k3s database. I’m not sure this could be affect to the Longhorn’s operation.

I just tried creating 10 pods with the same RWX volume, but they were created successfully.

My steps are changing the

ReadWriteOncetoReadWriteManyof pod_with_pvc.yaml and changing the pod name. Create them bykubectl -f <all pod_with_pvc.yaml manifests>.@innobead

After successfuly creating the share-manager’s configmap, recovery-backend somehow failed to read get the configmap with

not founderror. Then, a new pod was created and then operated the configmap. So, I guesses there is a concurrent issue and try to scale down replicas.But, I’m still not quite sure if it is a valid workaround. Additionally, the very beginning

not founderror is very weird, because the configmap was acutally created.Need more time to investigate the issue.

No, we didn’t encounter the error.

Still thinking how to reproduce it and check if there is a workaround.

David Ko @.***>於 2023年1月1日 週日,下午1:13寫道:

@derekbit Here are the logs from the pods: longhorn-recovery-backend-logs.zip

BTW, how to make the support bundle? Do you have any doc to howto?

@innobead It’s my pleasure! I appreciate for the great project!

Can you send us the support bundle? BTW, can you show the logs of

longhorn-recovery-backend-864d6fb7c-c8wlvandlonghorn-recovery-backend-864d6fb7c-z7ptn?I have two nodes in my cluster and the pods under

longhorn-systemnamespace are:I couldn’t find the matched logs from the backend pod logs, but can see repeated errors with different version numbers:

Please let me know what logs you need to figure it out. I’ll do my best to provide the info. Thank you so much and Happy New Year!