longhorn: [BUG] Restoring backup fails when validating volume spec

Describe the bug

I have periodic backups to S3. Today I tried restoring one, but it fails while validating the volume spec:

unable to create volume: unable to create volume foo: Volume.longhorn.io "foo" is invalid: [spec.snapshotDataIntegrity: Unsupported value: "": supported values: "ignored", "disabled", "enabled", "fast-check", spec.unmapMarkSnapChainRemoved: Unsupported value: "": supported values: "ignored", "disabled", "enabled", spec.dataLocality: Unsupported value: "": supported values: "disabled", "best-effort", "strict-local", spec.replicaAutoBalance: Unsupported value: "": supported values: "ignored", "disabled", "least-effort", "best-effort"]

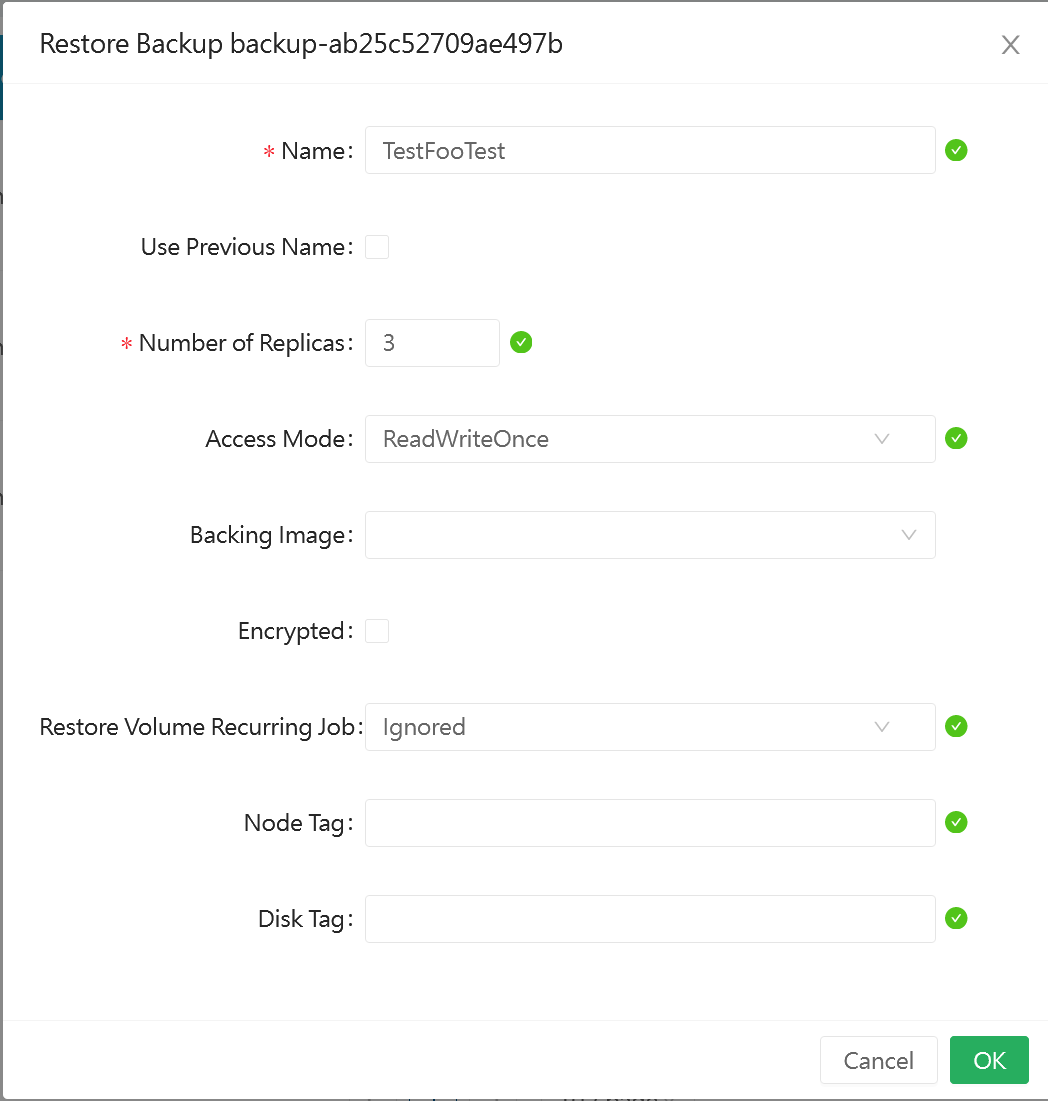

As far as I can tell, I cannot set these values when restoring from the UI, so I assume the UI passes an empty string to mean “inherit” and the validation rejects it.
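
The error reads like a per-field enum check: each listed field must hold one of a fixed set of strings, and an empty string matches none of them. The minimal Python sketch below reproduces that shape using the allowed values quoted in the error message above. The helper name `validate_enum_fields` and the plain-dict spec are hypothetical; this is not Longhorn's or Kubernetes' actual validation code.

```python
# Allowed values copied from the error message in this report.
ALLOWED_VALUES = {
    "snapshotDataIntegrity": ["ignored", "disabled", "enabled", "fast-check"],
    "unmapMarkSnapChainRemoved": ["ignored", "disabled", "enabled"],
    "dataLocality": ["disabled", "best-effort", "strict-local"],
    "replicaAutoBalance": ["ignored", "disabled", "least-effort", "best-effort"],
}


def validate_enum_fields(spec):
    """Return one error string per field whose value is not in its allowed list.

    Hypothetical helper: it only mimics the shape of the reported error.
    """
    errors = []
    for field, allowed in ALLOWED_VALUES.items():
        value = spec.get(field, "")  # a missing field is treated like ""
        if value not in allowed:
            quoted = ", ".join(f'"{v}"' for v in allowed)
            errors.append(
                f'spec.{field}: Unsupported value: "{value}": supported values: {quoted}'
            )
    return errors


# A restore request where the UI left every field empty ("inherit").
restore_spec = {field: "" for field in ALLOWED_VALUES}
for err in validate_enum_fields(restore_spec):
    print(err)
```

Every field fails because "" is not in any allowed list, so nothing downstream can treat the empty string as "inherit" unless something replaces it before this check runs.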

To Reproduce

Steps to reproduce the behavior:

-

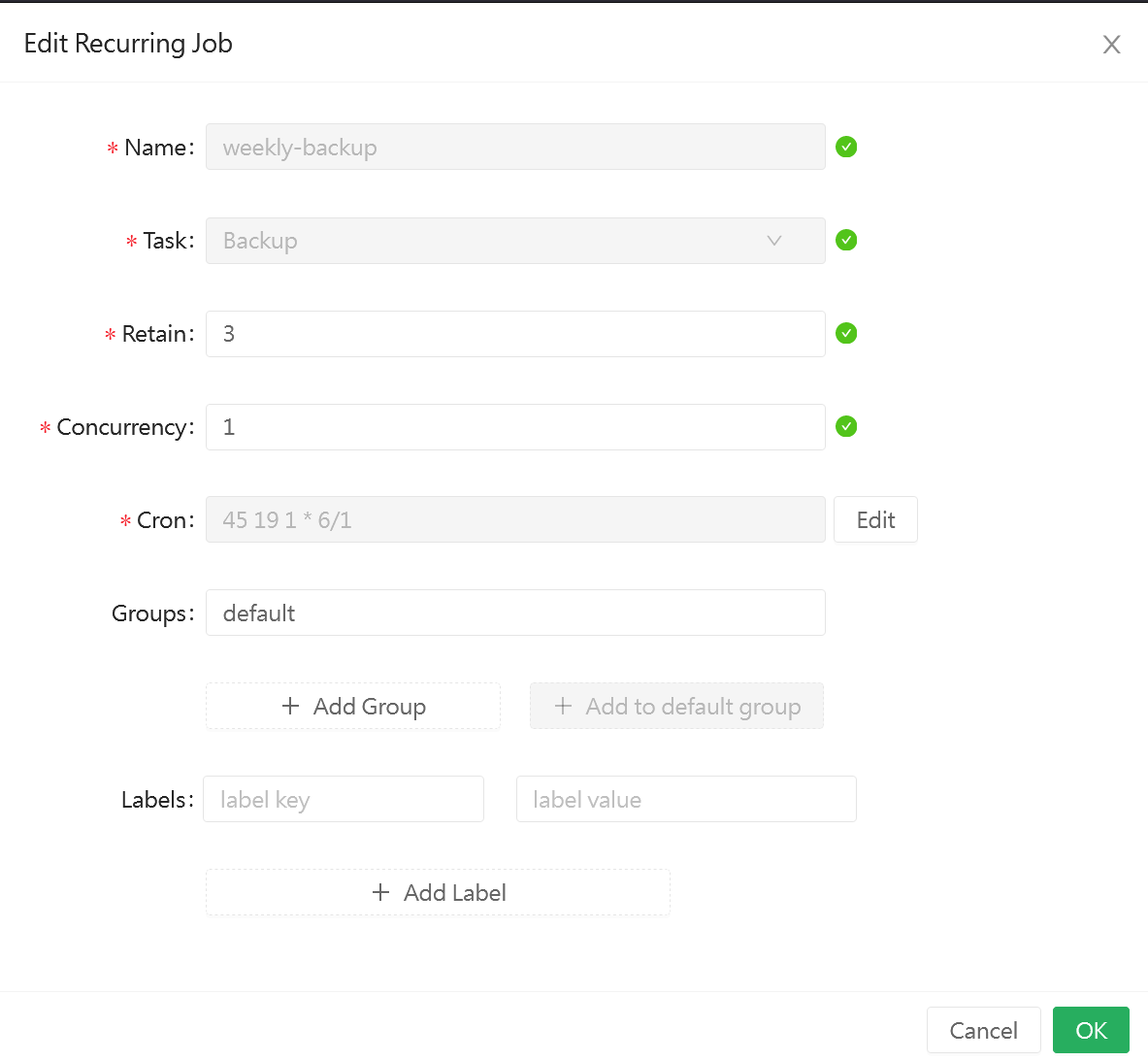

Create a period backup job

-

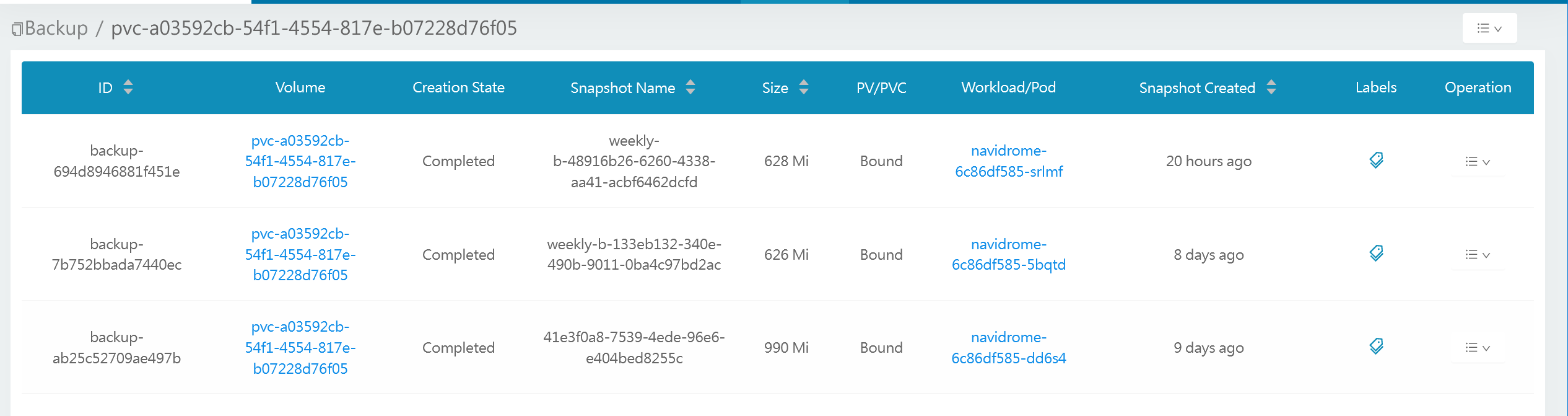

Go to the backups list for a volume and attempt to restore one

-

Restore config I used:

-

Observe backup restore failure

Expected behavior

The backup to be restored.

Log or Support bundle

If applicable, add the Longhorn managers’ log or support bundle when the issue happens. You can generate a Support Bundle using the link at the footer of the Longhorn UI.

Environment

- Longhorn version: 1.4.0
- Installation method (e.g. Rancher Catalog App/Helm/Kubectl): kubectl
- Kubernetes distro (e.g. RKE/K3s/EKS/OpenShift) and version: kubeadm cluster, self-hosted
- Number of management nodes in the cluster: 3
- Number of worker nodes in the cluster: 4
- Node config
  - OS type and version: Ubuntu 20.04.5 LTS
  - CPU per node: 10
  - Memory per node: 24 GB
  - Disk type (e.g. SSD/NVMe): NVMe
  - Network bandwidth between the nodes: 10 Gbit
- Underlying Infrastructure (e.g. on AWS/GCE, EKS/GKE, VMWare/KVM, Baremetal): KVM
- Number of Longhorn volumes in the cluster: 11

Additional context

Backups are stored in MinIO (S3-compatible).

About this issue

- State: closed

- Created a year ago

- Comments: 19 (12 by maintainers)

Got it. It looks like the default values of the spec fields newly added in LH v1.4.0 are not set correctly.

The message also complains about the wrong value of spec.replicaAutoBalance, which was added prior to v1.4.0. 😕

Yep, I deleted the admission pods, they were recreated, and now it works. Now I feel like an idiot 😄 Sorry for the bother, friends.

Yes, we mutate the values if they are empty strings. https://github.com/longhorn/longhorn-manager/blob/v1.4.0/webhook/resources/volume/mutator.go#L101
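
The linked mutator is Go code in longhorn-manager; the Python sketch below only illustrates why the order of admission steps matters here. Kubernetes runs mutating admission webhooks before schema and validating checks, so empty strings are normally defaulted before anything rejects them, unless the mutating webhook is never reached (for example, with stale admission pods or webhook configurations, as in this thread). The `DEFAULTS` values and the helper names `build_patch`, `apply_patch` and `admit` are assumptions for illustration, not Longhorn's code; in particular, the real mutator may derive dataLocality from a global setting rather than a constant.

```python
import copy
import json

# ASSUMPTION: illustrative defaults only. "ignored" is taken to mean
# "follow the global setting"; the real mutator may read some of these
# (e.g. dataLocality) from Longhorn settings instead of using constants.
DEFAULTS = {
    "snapshotDataIntegrity": "ignored",
    "unmapMarkSnapChainRemoved": "ignored",
    "dataLocality": "disabled",
    "replicaAutoBalance": "ignored",
}


def build_patch(spec):
    """Return RFC 6902 JSON Patch ops that fill in every empty defaulted field."""
    ops = []
    for field, default in DEFAULTS.items():
        if spec.get(field, "") == "":
            # "replace" requires an existing member; "add" creates a missing one.
            op = "replace" if field in spec else "add"
            ops.append({"op": op, "path": f"/spec/{field}", "value": default})
    return ops


def apply_patch(volume, ops):
    """Apply the simple /spec/<field> ops from build_patch to a copy of volume."""
    patched = copy.deepcopy(volume)
    for op in ops:
        field = op["path"].split("/")[-1]
        patched["spec"][field] = op["value"]
    return patched


def admit(volume, mutating_webhook_active=True):
    """Hypothetical admission flow: mutate first, then list fields still empty."""
    if mutating_webhook_active:
        volume = apply_patch(volume, build_patch(volume["spec"]))
    still_empty = [f for f in DEFAULTS if volume["spec"].get(f, "") == ""]
    return volume, still_empty


restore_request = {"metadata": {"name": "foo"}, "spec": {f: "" for f in DEFAULTS}}
print(json.dumps(build_patch(restore_request["spec"])[0]))
print(admit(restore_request)[1])
print(admit(restore_request, mutating_webhook_active=False)[1])
```

With the mutating step active, no field is left empty; with it skipped, all four fields stay empty, which is the same set of fields the error in this report lists.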

Update: I created a volume and backed it up in v1.3.2, then successfully restored it in v1.4.0.

It fails even with backups created by 1.4.0, since in this case they are backups of a volume originally created by 1.3.2 (or possibly older; hard to verify).

No, the last upgrade was only the kubectl apply script as above. Honestly, I'm not sure why the pods were not regenerated. Maybe it’s time to rebuild my control plane.

Just did a quick test. The validatingWebhookConfiguration and mutatingWebhookConfiguration are regenerated after the upgrade. Not sure why your environment didn’t regenerate them. 😕

I also tried to reproduce the issue, but I couldn’t reproduce it either.

The test steps