longhorn: [BUG] Instance Manager Memory Leak

Describe the bug

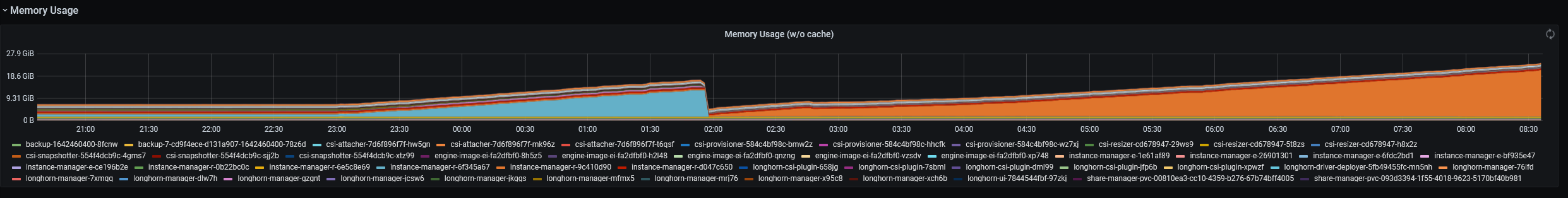

One of the instance managers is using 4 CPU cores and ballooning in memory. It is currently using 19GB of memory and rising fast.

We killed the instance-manager last night, but the behavior came right back.

Log or Support bundle

The affected process is in: instance-manager-r-9c410d90

root 763 18.2 44.4 26153392 18236828 ? DLl Jan11 1916:59 /host/var/lib/longhorn/engine-binaries/longhornio-longhorn-engine-v1.2.3/longhorn sync-agent --listen 0.0.0.0:10152 --replica 0.0.0.0:10150 --listen-port-range 10153-10162

Is this specific to a volume? If so, how can we determine which one? The node will soon run out of memory (OOM).

Thanks for support!

Support Bundle attached. longhorn-support-bundle_55903fa6-26ed-4355-a4bf-17d3650572cc_2022-01-18T08-39-31Z.zip

Environment

- Longhorn version: 1.2.3

- Installation method (e.g. Rancher Catalog App/Helm/Kubectl): Rancher Catalog App

- Kubernetes distro (e.g. RKE/K3s/EKS/OpenShift) and version:

- Number of management node in the cluster: 3

- Number of worker node in the cluster: 5

- Node config

- OS type and version: ubuntu 20.04

- CPU per node: 12

- Memory per node: 32GB

- Disk type(e.g. SSD/NVMe):

- Network bandwidth between the nodes:

- Underlying Infrastructure (e.g. on AWS/GCE, EKS/GKE, VMWare/KVM, Baremetal):

- Number of Longhorn volumes in the cluster: 30 active

About this issue

- State: closed

- Created 2 years ago

- Comments: 16 (8 by maintainers)

Reproduce steps:

Use quay.io/jenting/longhorn-engine:master-head-ten-mins-sleep as the default engine image.

Test steps:

Thanks @timmy59100 for reporting

We are looking into the support bundle. It seems that there is a problematic failed backup CR (backup-a2023cc97cd9492a) that triggers the volume to constantly initiate new backups. There are many logs like this every 2-3 minutes:

If you delete the backup CR by running kubectl delete backups.longhorn.io backup-a2023cc97cd9492a -n longhorn-system, the problem might go away.

This problem looks like a bug to me. cc @jenting We need to investigate the code path for this failure handling. Looking into the longhorn manager logs, I see there are 800 messages like this:

It seems that the backup init command failed because of a timeout, and the backup controller keeps retrying to create a new backup monitor:

It seems we need to re-consider the timeout here and/or add a backoff-limit in the backup controller
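To make the backoff-limit idea concrete, here is a minimal sketch of the policy in Python. It is not Longhorn code (the real controller is written in Go), and `BackoffTracker`, its fields, and the default values are all hypothetical names and numbers chosen for illustration. The idea is that each failed backup init doubles the wait before the next attempt, up to a cap, and that the controller stops retrying after a fixed number of failures instead of spawning a new monitor on every reconcile.

```python
from dataclasses import dataclass, field


@dataclass
class BackoffTracker:
    """Per-backup retry bookkeeping (hypothetical helper, not Longhorn code)."""

    base_delay: float = 10.0   # assumed: seconds to wait after the first failure
    max_delay: float = 300.0   # assumed: upper bound on the wait between retries
    max_retries: int = 5       # assumed: failures allowed before giving up
    _failures: dict = field(default_factory=dict)
    _next_allowed: dict = field(default_factory=dict)

    def should_retry(self, backup_name: str, now: float) -> bool:
        """Return True if a new attempt for this backup is allowed at time `now`."""
        if self._failures.get(backup_name, 0) >= self.max_retries:
            return False  # backoff limit reached: leave the backup in a failed state
        return now >= self._next_allowed.get(backup_name, 0.0)

    def record_failure(self, backup_name: str, now: float) -> float:
        """Record a failed attempt and return the delay before the next one."""
        failures = self._failures.get(backup_name, 0) + 1
        self._failures[backup_name] = failures
        # Exponential growth: base, 2*base, 4*base, ... capped at max_delay.
        delay = min(self.base_delay * 2 ** (failures - 1), self.max_delay)
        self._next_allowed[backup_name] = now + delay
        return delay

    def record_success(self, backup_name: str) -> None:
        """Clear the retry state once the backup attempt succeeds."""
        self._failures.pop(backup_name, None)
        self._next_allowed.pop(backup_name, None)


if __name__ == "__main__":
    tracker = BackoffTracker()
    delays = [tracker.record_failure("backup-example", now=0.0) for _ in range(5)]
    print(delays)                                           # [10.0, 20.0, 40.0, 80.0, 160.0]
    print(tracker.should_retry("backup-example", now=1e6))  # False
```

With a policy like this, a backup whose init command keeps timing out would be retried a handful of times with growing gaps rather than every 2-3 minutes indefinitely.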

Today we had the same issue again on a different cluster.

I prefer option 3, because I would like us to fix the real cause and make the operation nearly asynchronous, but the short-term workaround is option 2.